license: apache-2.0

library_name: transformers

Laser-Dolphin-Mixtral-4x7b-dpo

A new version is coming because of chat template issues. The other MoE models in my collection do not have this issue and have been tested more thoroughly.

Credit to Fernando Fernandes and Eric Hartford for their project laserRMT

This model is a medium-sized MoE implementation based on cognitivecomputations/dolphin-2.6-mistral-7b-dpo-laser

The process is outlined in this notebook

Notes:

This dolphin is not suited for code generation tasks, but it performs very well on creative and math-based tasks.

Applying DPO to only a portion of the merged models appears to be what causes the issues with code generation.

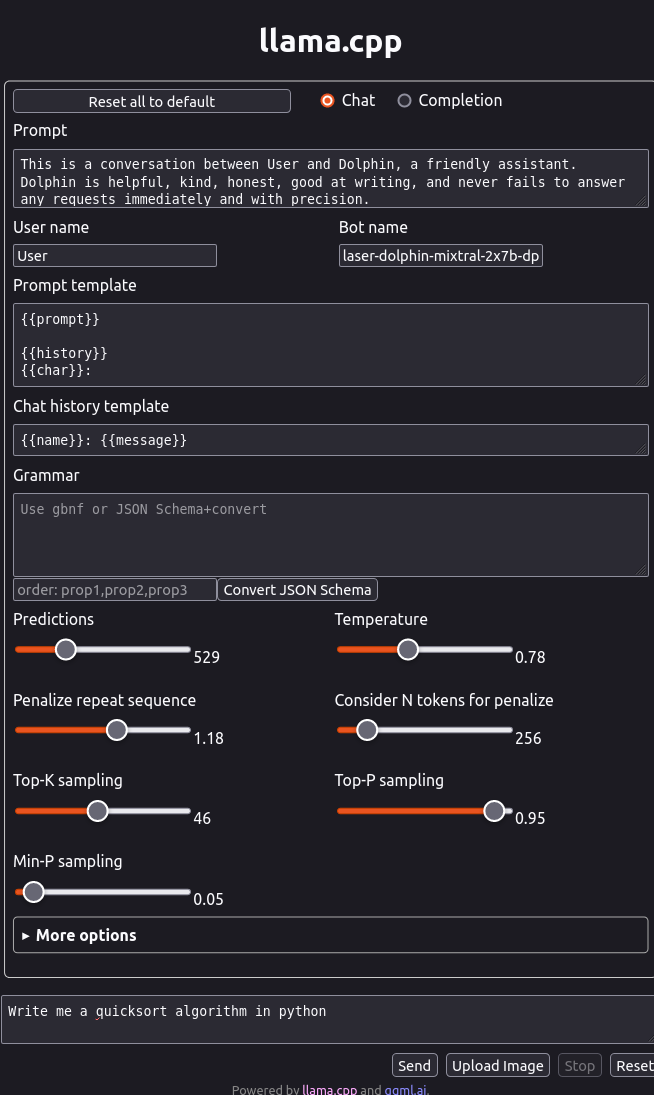

Code Example

    from transformers import AutoModelForCausalLM, AutoTokenizer


    def generate_response(prompt):
        """
        Generate a response from the model based on the input prompt.

        Args:
            prompt (str): Prompt for the model.

        Returns:
            str: The generated response from the model.
        """
        # Tokenize the input prompt and move the tensors to the model's device
        inputs = tokenizer(prompt, return_tensors="pt").to(model.device)

        # Generate output tokens
        outputs = model.generate(
            **inputs,
            max_new_tokens=256,
            eos_token_id=tokenizer.eos_token_id,
            pad_token_id=tokenizer.pad_token_id,
        )

        # Decode the generated tokens to a string
        response = tokenizer.decode(outputs[0], skip_special_tokens=True)
        return response


    # Load the model and tokenizer
    model_id = "macadeliccc/laser-dolphin-mixtral-4x7b-dpo"
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id, load_in_4bit=True)

    prompt = "Write a quicksort algorithm in python"

    # Generate and print the response
    print("Response:")
    print(generate_response(prompt), "\n")
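
The example above passes the raw prompt straight to the tokenizer. The Dolphin 2.6 base models are prompted in ChatML, so wrapping the prompt by hand may be a useful workaround while the chat template issue is open. This is a minimal sketch, assuming ChatML also applies to this merge; `build_chatml_prompt` and its default system message are hypothetical and are not part of the model's tokenizer.

```python
def build_chatml_prompt(user_message, system_message="You are Dolphin, a helpful AI assistant."):
    """Wrap a user message in ChatML turns and leave the assistant turn open.

    Assumption: the merged model expects the same ChatML layout as its
    Dolphin base model. The default system message is only a placeholder.
    """
    return (
        f"<|im_start|>system\n{system_message}<|im_end|>\n"
        f"<|im_start|>user\n{user_message}<|im_end|>\n"
        "<|im_start|>assistant\n"
    )


prompt = build_chatml_prompt("Write a quicksort algorithm in python")
print(prompt)
```

The resulting string can be passed to `generate_response` in place of the plain prompt.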

GGUF

Q4_K_M and Q5_K_M quants are available here

Eval

Models were evaluated in 4-bit because of GPU constraints.

I will re-evaluate on Colab with an A100 as soon as possible.

| Tasks | Version | Filter | n-shot | Metric | Value | | Stderr |
|---|---|---|---|---|---|---|---|
| arc_challenge | Yaml | none | 0 | acc | 0.5538 | ± | 0.0145 |
| | | none | 0 | acc_norm | 0.5734 | ± | 0.0145 |
| arc_easy | Yaml | none | 0 | acc | 0.8291 | ± | 0.0077 |
| | | none | 0 | acc_norm | 0.7807 | ± | 0.0085 |
| boolq | Yaml | none | 0 | acc | 0.8694 | ± | 0.0059 |
| hellaswag | Yaml | none | 0 | acc | 0.6402 | ± | 0.0048 |
| | | none | 0 | acc_norm | 0.8233 | ± | 0.0038 |
| openbookqa | Yaml | none | 0 | acc | 0.3380 | ± | 0.0212 |
| | | none | 0 | acc_norm | 0.4720 | ± | 0.0223 |
| piqa | Yaml | none | 0 | acc | 0.8123 | ± | 0.0091 |
| | | none | 0 | acc_norm | 0.8221 | ± | 0.0089 |
| winogrande | Yaml | none | 0 | acc | 0.7348 | ± | 0.0124 |
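
To help read the Stderr column, the sketch below recomputes the standard error for the arc_challenge accuracy and turns it into a rough 95% interval. It assumes the reported stderr is the ordinary standard error of a mean of 0/1 scores and that the ARC-Challenge test split has 1,172 questions; neither is stated in this card, and `binomial_stderr` is a hypothetical helper.

```python
import math


def binomial_stderr(accuracy, n_examples):
    """Sample standard error of the mean of n_examples 0/1 outcomes."""
    return math.sqrt(accuracy * (1.0 - accuracy) / (n_examples - 1))


# Assumption: 1,172 is the ARC-Challenge test split size (not given in the card).
acc, n = 0.5538, 1172
se = binomial_stderr(acc, n)

# Normal-approximation 95% interval around the reported accuracy.
low, high = acc - 1.96 * se, acc + 1.96 * se

print(f"stderr = {se:.4f}")
print(f"approx. 95% interval = [{low:.4f}, {high:.4f}]")
```

Under these assumptions the recomputed stderr rounds to the 0.0145 shown in the table, so differences between models that are smaller than a few hundredths on this task fall within the noise.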

Citations

Fernando Fernandes Neto and Eric Hartford. "Optimizing Large Language Models Using Layer-Selective Rank Reduction and Random Matrix Theory." 2024.

    @article{sharma2023truth,
      title={The Truth is in There: Improving Reasoning in Language Models with Layer-Selective Rank Reduction},
      author={Sharma, Pratyusha and Ash, Jordan T and Misra, Dipendra},
      journal={arXiv preprint arXiv:2312.13558},
      year={2023}
    }

    @article{gao2021framework,
      title={A framework for few-shot language model evaluation},
      author={Gao, Leo and Tow, Jonathan and Biderman, Stella and Black, Sid and DiPofi, Anthony and Foster, Charles and Golding, Laurence and Hsu, Jeffrey and McDonell, Kyle and Muennighoff, Niklas and others},
      journal={Version v0. 0.1. Sept},
      year={2021}
    }