user | created_at | body | issue_number | __index_level_0__ |

|---|---|---|---|---|

natolambert | 2023-03-07T16:49:52 | Yup, took me a while to find the comments and that's right. May get more complicated in the future, but my deep dive result is: this code looks right. | 191 | 51 |

PanchenkoYehor | 2023-03-03T01:27:47 | The relevant open issue is #183, which is currently resolved by updating the environment. | 190 | 52 |

HuggingFaceDocBuilderDev | 2023-03-03T08:23:48 | _The documentation is not available anymore as the PR was closed or merged._ | 190 | 53 |

lvwerra | 2023-03-06T08:23:33 | Looks great, thanks for fixing! | 190 | 54 |

lvwerra | 2023-03-06T14:02:02 | Not sure I fully understand the question :) Indeed, PEFT is being worked on in #145. In terms of RL algorithms only PPO is implemented at this point. | 189 | 55 |

ryan-caesar-ramos | 2023-03-06T14:40:54 | > In terms of RL algorithms only PPO is implemented at this point.

Thank you! This answers my question; I was wondering if `trl` had an implementation of ILQL. | 189 | 56 |

lvwerra | 2023-03-02T13:48:23 | This is the part where the model is optimized with PPO, so several forward/backward passes and optimization steps are run. | 188 | 57 |
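The "several forward/backward passes" come from reusing one batch of rollouts for several PPO epochs, each split into mini-batches. A minimal sketch of that schedule, assuming a reshuffle per epoch; the function name is hypothetical and the parameter names only echo the corresponding config options, so this is an illustration rather than TRL's implementation:

```python
import random

def ppo_minibatch_schedule(batch_size, mini_batch_size, ppo_epochs, seed=0):
    """Yield (epoch, indices): every PPO epoch reshuffles the same rollout
    batch and splits it into mini-batches, one optimizer step per mini-batch."""
    rng = random.Random(seed)
    for epoch in range(ppo_epochs):
        order = list(range(batch_size))
        rng.shuffle(order)
        for start in range(0, batch_size, mini_batch_size):
            yield epoch, order[start:start + mini_batch_size]

# Toy sizes: one batch of 8 rollouts, mini-batches of 2, 4 PPO epochs.
steps = list(ppo_minibatch_schedule(batch_size=8, mini_batch_size=2, ppo_epochs=4))
print(len(steps))  # 16 optimizer steps from a single batch of rollouts
```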

tuzeao | 2023-03-03T01:58:53 | Thanks for the reply. After reading the source code along with the general workflow in README.md, I have some clues now. | 188 | 58 |

younesbelkada | 2023-03-02T09:59:39 | Hello @gjmulder

Thanks for the issue!

I think there are a few things you need to take into account:

1- Convergence is usually faster if you pre-train the model on the target domain (here, `imdb` generations), because the KL penalty constrains the model not to diverge much from its original domain. If you want your `t5-small` model to converge, you might need to tweak [`init_kl_coef`](https://github.com/lvwerra/trl/blob/a05ddbdd836d3217c80a4b3e679ba984bfd4fa24/trl/trainer/ppo_config.py#L37) (make it lower). TL;DR: in our experiments we preferred to stick to models that had already been pre-trained on `imdb`, for faster convergence.

2- `google/flan-t5-small` is an instruction-based model, i.e. the model expects instructions as input (in the imdb example, something along the lines of `Write a movie review: xxx`), so these models need to be handled in a specific way. You could tweak the data processing script to add a variety of instructions; combined with tweaking `init_kl_coef`, this might help.

Let me know if this helps! | 187 | 59 |
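To see why a lower `init_kl_coef` lets the model drift further from its starting point, here is a minimal pure-Python sketch of a KL-penalized reward of the kind used in PPO-based RLHF: every generated token is penalized by the KL coefficient times the log-probability ratio between the trained model and the reference model, and the classifier score is added on the last token. The function name is hypothetical and plain lists stand in for per-token tensors:

```python
def kl_penalized_rewards(score, logprobs, ref_logprobs, kl_coef):
    """Per-token rewards: -kl_coef * (logprob - ref_logprob) for every token,
    plus the scalar sequence score added to the last generated token."""
    rewards = [-kl_coef * (lp - ref) for lp, ref in zip(logprobs, ref_logprobs)]
    rewards[-1] += score
    return rewards

# A response the trained model likes much more than the reference model does
# (log-ratio of about 1.0 per token), with a classifier score of 1.0.
logprobs = [-0.5, -0.4, -0.3]
ref_logprobs = [-1.5, -1.4, -1.3]
high = sum(kl_penalized_rewards(1.0, logprobs, ref_logprobs, kl_coef=0.2))
low = sum(kl_penalized_rewards(1.0, logprobs, ref_logprobs, kl_coef=0.05))
print(round(high, 3), round(low, 3))  # 0.4 0.85: the smaller coefficient penalizes drift less
```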

lvwerra | 2023-03-02T10:02:04 | Here's the colab notebook to fine-tune T5: https://colab.research.google.com/drive/17c9KHusZsKnzMVBWDEdQ5POsNLfR8ETR | 187 | 60 |

gjmulder | 2023-03-02T10:05:59 | Hi @younesbelkada @lvwerra fantastic advice, tyvm! This is going to help a lot when I start working with my own data.

I'm starting to appreciate that RL adds a new dimension of complexity to training because of all the other hyper-params one needs to gain an intuitive understanding of. | 187 | 61 |

HuggingFaceDocBuilderDev | 2023-03-01T19:56:04 | _The documentation is not available anymore as the PR was closed or merged._ | 186 | 62 |

HuggingFaceDocBuilderDev | 2023-03-01T19:54:36 | _The documentation is not available anymore as the PR was closed or merged._ | 185 | 63 |

HuggingFaceDocBuilderDev | 2023-03-01T11:27:16 | _The documentation is not available anymore as the PR was closed or merged._ | 184 | 64 |

younesbelkada | 2023-03-01T09:59:12 | Hello @Ytlskys

Can you share with us more details about your environment? What is the command you are using?

Thanks! | 183 | 65 |

NuvoleY | 2023-03-01T14:47:16 | Thank you for your attention!

datasets=2.0.0, torch=1.12.1+cu116, tqdm=4.64.1, transformers=4.26.1, accelerate=0.16.0

Traceback (most recent call last):

File "D:\Python\Test\test\T\trl-main\examples\sentiment\scripts\gpt2-sentiment.py", line 135, in <module>

for epoch, batch in tqdm(enumerate(ppo_trainer.dataloader)):

File "E:\Python\python3.10.5\lib\site-packages\tqdm\std.py", line 1195, in __iter__

for obj in iterable:

File "E:\Python\python3.10.5\lib\site-packages\accelerate\data_loader.py", line 378, in __iter__

current_batch = next(dataloader_iter)

File "E:\Python\python3.10.5\lib\site-packages\torch\utils\data\dataloader.py", line 681, in __next__

data = self._next_data()

File "E:\Python\python3.10.5\lib\site-packages\torch\utils\data\dataloader.py", line 721, in _next_data

data = self._dataset_fetcher.fetch(index) # may raise StopIteration

File "E:\Python\python3.10.5\lib\site-packages\torch\utils\data\_utils\fetch.py", line 49, in fetch

data = [self.dataset[idx] for idx in possibly_batched_index]

File "E:\Python\python3.10.5\lib\site-packages\torch\utils\data\_utils\fetch.py", line 49, in <listcomp>

data = [self.dataset[idx] for idx in possibly_batched_index]

File "E:\Python\python3.10.5\lib\site-packages\datasets\arrow_dataset.py", line 1765, in __getitem__

return self._getitem(

File "E:\Python\python3.10.5\lib\site-packages\datasets\arrow_dataset.py", line 1750, in _getitem

formatted_output = format_table(

File "E:\Python\python3.10.5\lib\site-packages\datasets\formatting\formatting.py", line 532, in format_table

return formatter(pa_table, query_type=query_type)

File "E:\Python\python3.10.5\lib\site-packages\datasets\formatting\formatting.py", line 281, in __call__

return self.format_row(pa_table)

File "E:\Python\python3.10.5\lib\site-packages\datasets\formatting\torch_formatter.py", line 58, in format_row

return self.recursive_tensorize(row)

File "E:\Python\python3.10.5\lib\site-packages\datasets\formatting\torch_formatter.py", line 54, in recursive_tensorize

return map_nested(self._recursive_tensorize, data_struct, map_list=False)

File "E:\Python\python3.10.5\lib\site-packages\datasets\utils\py_utils.py", line 314, in map_nested

mapped = [

File "E:\Python\python3.10.5\lib\site-packages\datasets\utils\py_utils.py", line 315, in <listcomp>

_single_map_nested((function, obj, types, None, True, None))

File "E:\Python\python3.10.5\lib\site-packages\datasets\utils\py_utils.py", line 251, in _single_map_nested

return function(data_struct)

File "E:\Python\python3.10.5\lib\site-packages\datasets\formatting\torch_formatter.py", line 51, in _recursive_tensorize

return self._tensorize(data_struct)

File "E:\Python\python3.10.5\lib\site-packages\datasets\formatting\torch_formatter.py", line 43, in _tensorize

return torch.tensor(value, **{**default_dtype, **self.torch_tensor_kwargs})

TypeError: new(): invalid data type 'numpy.str_'

I'm sorry, I don't know how to solve it.

| 183 | 66 |

Ren-Ma | 2023-03-02T03:39:28 | I got the same issue when the code `for epoch, batch in tqdm(enumerate(ppo_trainer.dataloader)):` was executed. According to what I found online, the cause might be that some columns in the dataset contain strings instead of numbers. So I commented out `sample["query"] = tokenizer.decode(sample["input_ids"])` in the `build_dataset()` function, then added the line `batch["query"] = [tokenizer.decode(q.squeeze()) for q in batch["input_ids"]]` after `batch["response"] = [tokenizer.decode(r.squeeze()) for r in response_tensors]`. This way, the error disappeared and we still get "query" without going through the dataset/dataloader.

Hope this helps! | 183 | 67 |
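Ren-Ma's workaround amounts to keeping only numeric columns in the dataset (so the torch formatter never has to tensorize a string) and rebuilding the text inside the training loop. A pure-Python sketch of that split, with a hypothetical toy vocabulary and `decode` function standing in for the real tokenizer and plain lists standing in for tensors:

```python
# Hypothetical toy vocabulary standing in for tokenizer.decode().
VOCAB = {0: "this", 1: "movie", 2: "was", 3: "great", 4: "dull"}

def decode(token_ids):
    return " ".join(VOCAB[t] for t in token_ids)

def build_dataset(tokenized_reviews):
    """Store only numeric columns: no decoded "query" string per sample."""
    return [{"input_ids": ids} for ids in tokenized_reviews]

def attach_queries(batch):
    """Inside the training loop: rebuild the text queries from the token ids."""
    batch["query"] = [decode(ids) for ids in batch["input_ids"]]
    return batch

dataset = build_dataset([[0, 1, 2, 3], [0, 1, 2, 4]])
batch = {"input_ids": [row["input_ids"] for row in dataset]}
batch = attach_queries(batch)
print(batch["query"])  # ['this movie was great', 'this movie was dull']
```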

dengyuning | 2023-03-02T03:50:33 | > I got the same issue when the code `for epoch, batch in tqdm(enumerate(ppo_trainer.dataloader)):` was executed. Google told me that some columns in the dataset are strings instead of numbers might be the reason. So I commented out `sample["query"] = tokenizer.decode(sample["input_ids"])` in the `build_dataset()` function, then added this line of code `batch["query"] = [tokenizer.decode(q.squeeze()) for q in batch["input_ids"]]` after `batch["response"] = [tokenizer.decode(r.squeeze()) for r in response_tensors]`. In this way, the error disappeared and we can still get "query" without using dataset/dataloader. Hope this helps!

What is your environment? I ran into another issue here:

| 183 | 68 |

Ren-Ma | 2023-03-02T07:28:09 | @dengyuning this does not look like an environment issue... One guess is that `advantages` and `mask` have inconsistent dimensions when `advantages = masked_whiten(advantages, mask)` is executed on line 767 of ppo_trainer.py. I would suggest running in debug mode, stepping into this file up to line 767, and checking the shapes of `advantages` and `mask`. | 183 | 69 |
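To make this debugging suggestion concrete, here is a pure-Python sketch of what a masked whitening step computes, with an explicit shape check that turns a silent broadcasting problem into a clear error. It works on flat lists instead of tensors, uses the plain (uncorrected) masked variance, and is an illustration rather than TRL's `masked_whiten` itself:

```python
import math

def masked_whiten(values, mask, shift_mean=True, eps=1e-8):
    """Normalize `values` to zero mean / unit variance, using statistics
    computed only over the positions where mask == 1."""
    if len(values) != len(mask):
        raise ValueError(
            f"shape mismatch: values has {len(values)} entries, mask has {len(mask)}"
        )
    n = sum(mask)
    if n == 0:
        raise ValueError("mask selects no entries")
    mean = sum(v * m for v, m in zip(values, mask)) / n
    var = sum(((v - mean) ** 2) * m for v, m in zip(values, mask)) / n
    whitened = [(v - mean) / math.sqrt(var + eps) for v in values]
    if not shift_mean:
        # Keep the original mean, only rescale the spread.
        whitened = [w + mean for w in whitened]
    return whitened

# The padded last position (mask 0) does not influence the statistics.
print(masked_whiten([1.0, 2.0, 3.0, 100.0], [1, 1, 1, 0])[:3])
```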

younesbelkada | 2023-03-02T09:47:34 | @Ytlskys it seems that the issue might be related to `numpy` , can you try to upgrade it? (`pip install --upgrade numpy`)

@dengyuning @Ren-Ma I ran the `gpt2-sentiment.py` script with the latest version of `trl` and things seem to work fine! What version of `trl` are you using?

<img width="690" alt="Screenshot 2023-03-02 at 11 28 05" src="https://user-images.githubusercontent.com/49240599/222402593-474b1c64-ce4b-4a05-bf8f-547c37f1575e.png">

| 183 | 70 |

Ren-Ma | 2023-03-02T10:48:14 | > @Ytlskys it seems that the issue might be related to `numpy` , can you try to upgrade it? (`pip install --upgrade numpy`) @dengyuning @Ren-Ma I ran the `gpt2-sentiment.py` script with the latest version of `trl` and things seems to work fine! What version of `trl` are you using? <img alt="Screenshot 2023-03-02 at 11 28 05" width="690" src="https://user-images.githubusercontent.com/49240599/222402593-474b1c64-ce4b-4a05-bf8f-547c37f1575e.png">

trl 0.2.2.dev0, transformers 4.22.1, datasets 2.4.0, torch 1.11.0, numpy 1.19.5, tensorflow 1.14.0 (downgraded from 2.xx, otherwise it would also throw errors :) ) | 183 | 71 |

younesbelkada | 2023-03-02T10:51:53 | Can you try `trl==0.3.1`? And latest version of `torch` as well? | 183 | 72 |

NuvoleY | 2023-03-02T13:45:29 | @younesbelkada @Ren-Ma Thank you very much. I successfully solved this issue using your method, @Ren-Ma, but later I ran into the same problem as @dengyuning; I upgraded the package and it ran successfully. Thanks again! | 183 | 73 |

dengyuning | 2023-03-03T07:01:14 | > @younesbelkada @Ren-Ma Thank you very much. I successfully solved this issue after using @Ren-Ma your method, but I got the same problem with @dengyuning later,I tried to upgrade the package and ran it successfully.Thanks again!

@Ytlskys Can you tell me your environment? I am using `trl 0.2.2.dev0, transformers 4.22.1, datasets 2.4.0, torch 1.10.0, numpy 1.21.2, tensorflow 2.9.1` and the job failed. | 183 | 74 |

NuvoleY | 2023-03-03T07:35:04 | trl 0.3.1, transformers 4.26.1, datasets 2.0.0, torch 1.12.1+cu116, tensorflow-gpu 2.10.0, numpy 1.23.4 | 183 | 75 |

younesbelkada | 2023-03-03T08:23:16 | @dengyuning can you try upgrading your `torch` package? | 183 | 76 |

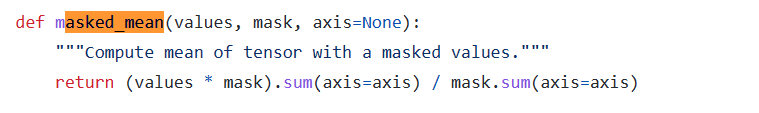

dengyuning | 2023-03-03T08:36:40 | > @dengyuning can you trying upgrading your `torch` package?

Thanks @younesbelkada

Yeah, I think it has something to do with `torch`. I can only use `torch 1.10`, which does not support `torch.sum(axis=None)`.

I changed the code in `core.py`:

to

and it works now.

| 183 | 77 |
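The exact change to `core.py` is not shown in the thread. A common way to avoid passing `axis=None` to a sum call is to branch: sum over everything when no axis is given, otherwise sum along the axis. A pure-Python sketch of that pattern for a masked mean, with nested lists standing in for 2-D tensors (the function is illustrative, not the upstreamed code):

```python
def masked_mean(values, mask, axis=None):
    """Mean of `values` (a list of rows) over the entries where mask == 1.

    Branching on `axis` mirrors calling `.sum()` with no argument instead of
    `.sum(axis=None)`, which older torch versions reject."""
    if axis is None:
        total = sum(v * m for row_v, row_m in zip(values, mask) for v, m in zip(row_v, row_m))
        count = sum(m for row_m in mask for m in row_m)
        return total / count
    if axis == 1:
        return [
            sum(v * m for v, m in zip(row_v, row_m)) / sum(row_m)
            for row_v, row_m in zip(values, mask)
        ]
    raise NotImplementedError("this sketch only handles axis=None and axis=1")

values = [[1, 2], [3, 4]]
mask = [[1, 0], [1, 1]]
print(masked_mean(values, mask))          # mean over all unmasked entries: 8/3
print(masked_mean(values, mask, axis=1))  # per-row means: [1.0, 3.5]
```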

younesbelkada | 2023-03-03T09:34:25 | Thanks so much @dengyuning for digging further!

I believe this can be upstreamed in https://github.com/lvwerra/trl/pull/190 ! Again thanks for this finding! | 183 | 78 |

lvwerra | 2023-03-06T13:58:16 | Closing this as #190 got merged. Feel free to reopen if the issue persists. | 183 | 79 |

HuggingFaceDocBuilderDev | 2023-02-28T16:46:34 | _The documentation is not available anymore as the PR was closed or merged._ | 182 | 80 |

HuggingFaceDocBuilderDev | 2023-02-28T14:51:32 | _The documentation is not available anymore as the PR was closed or merged._ | 181 | 81 |

lvwerra | 2023-02-28T09:49:45 | In PPO optimization a value estimation for each action (=generated token) is required. The value head (which is just an additional fc layer) makes that estimation. | 180 | 82 |
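Put differently, the value head maps each token's final hidden state to one scalar. A toy pure-Python sketch of such a single linear layer with output size 1 (plain lists instead of tensors, made-up weights; real value heads may add details such as dropout):

```python
def value_head(hidden_states, weight, bias):
    """One linear layer with a single output: each token's hidden state
    (a list of floats) becomes one scalar value estimate."""
    return [sum(h * w for h, w in zip(state, weight)) + bias for state in hidden_states]

# Three generated tokens, hidden size 4 (toy numbers).
hidden = [[0.1, 0.2, 0.3, 0.4], [0.0, 1.0, 0.0, 1.0], [1.0, 1.0, 1.0, 1.0]]
values = value_head(hidden, weight=[0.5, -0.5, 0.25, 0.0], bias=0.1)
print(len(values))  # one value per token: 3
```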

akk-123 | 2023-02-28T10:00:21 | ok, thanks | 180 | 83 |

xymtxwd | 2024-04-03T03:42:52 | Hi @lvwerra, thanks for the implementations! A n00b question: in my understanding, it seems the goal of the value head is to estimate the KL divergence between the policy and the reference model so that they do not differ too much. And in PPO training, the reference network is not updated at all. My question is:

I am wondering: when initializing the reference network with an additional value head, is this value head randomly initialized? Also, I think the value head is probably updated during training; otherwise its output wouldn't make sense due to the random initialization. If so, what label is used to train it? I'm very curious to learn the details behind it.

I think without a value head, we could use likelihoods to estimate pi_RL(y|x) and pi_SFT(y|x), but it seems that with it we can just use two scalars from the value head to represent the two probabilities. I wanted to check whether this understanding is correct. Thank you so much! | 180 | 84 |

younesbelkada | 2023-02-28T08:53:44 | Hello @Jiayi079 ,

Thanks for reporting! What is your Python version? Everything seems to be stable on our side since the CI runners manage to install everything correctly, but we are using python>=3.7, so that might be the issue. | 179 | 85 |

Jiayi079 | 2023-02-28T08:56:02 | Hello @younesbelkada ,

Thank you so much for your quick response! I found the reason: I'm using Python 3.11, which is too new to install torch. BTW, thanks! | 179 | 86 |

younesbelkada | 2023-02-28T08:59:56 | Thanks so much for the report, we'll look into that! So you're saying that torch cannot be installed under `python=3.11`? | 179 | 87 |

Jiayi079 | 2023-02-28T09:33:17 | Yes, I think so. I downloaded `python 3.8` and everything works. I searched online and it says that torch can only be installed under **python 3.7~3.9** | 179 | 88 |

younesbelkada | 2023-02-28T09:42:20 | Sounds good! Thanks a lot. So the solution for now is to use python < 3.11 until this is resolved on the `torch` side

Happy TRL-ing! | 179 | 89 |

lvwerra | 2023-02-28T09:39:53 | I think the main reason is that language models are pretrained and in the gpt2-sentiment example further fine-tuned on movie reviews. That means the model is already good at generating movie reviews before RL tuning and thus essentially short-cutting the exploration phase of RL. | 178 | 90 |

stwerner97 | 2023-02-28T09:59:25 | Thank you for your response!

Although I was thinking along similar lines, I am still surprised that the gradient steps start improving the results forthwith, since I believe that the agent's value estimates would need to match the pre-trained behaviour before gradient updates can start improving the generation of movie reviews. So I would have expected the results to deteriorate first. | 178 | 91 |

HuggingFaceDocBuilderDev | 2023-02-27T15:19:28 | _The documentation is not available anymore as the PR was closed or merged._ | 177 | 92 |

lvwerra | 2023-03-02T13:51:37 | When we do the forward pass, we actually predict one more token than we generated. E.g. when inputting 3 tokens, the model will also predict a 4th token, which is not needed since we just want to evaluate the 3 generated ones. Does that make sense? | 176 | 93 |
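The off-by-one can be sketched in pure Python: the logits at position t score the token at position t + 1, so for n input tokens only the first n - 1 rows of logits are paired with tokens, and the last row (the prediction for a token that was never generated) is dropped. Lists stand in for tensors and the function name is illustrative:

```python
import math

def token_logprobs(logits, input_ids):
    """Log-probability of each token after the first, given per-position logits.

    logits[t] is the model's prediction for position t + 1, so the rows
    logits[:-1] are paired with the tokens input_ids[1:]; the last row would
    score a token that was never generated and is dropped."""
    if len(logits) != len(input_ids):
        raise ValueError("expected one row of logits per input token")
    result = []
    for row, next_id in zip(logits[:-1], input_ids[1:]):
        log_norm = math.log(sum(math.exp(x) for x in row))  # log-softmax denominator
        result.append(row[next_id] - log_norm)
    return result

# 3 input tokens over a 3-word vocabulary: 3 rows of logits, 2 usable log-probs.
logits = [[0.0, 0.0, 0.0], [2.0, 0.0, 0.0], [1.0, 1.0, 1.0]]
print(token_logprobs(logits, [0, 2, 0]))
```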

review-notebook-app[bot] | 2023-02-23T17:49:19 | Check out this pull request on <a href="https://app.reviewnb.com/lvwerra/trl/pull/175"><img align="absmiddle" alt="ReviewNB" height="28" class="BotMessageButtonImage" src="https://raw.githubusercontent.com/ReviewNB/support/master/images/button_reviewnb.png"/></a>

See visual diffs & provide feedback on Jupyter Notebooks.

---

<i>Powered by <a href='https://www.reviewnb.com/?utm_source=gh'>ReviewNB</a></i> | 175 | 94 |

HuggingFaceDocBuilderDev | 2023-02-23T17:58:13 | _The documentation is not available anymore as the PR was closed or merged._ | 175 | 95 |

shizhediao | 2023-02-23T17:41:10 | I think it should be

`query_tensors = df_batch['input_ids'].tolist()` | 174 | 96 |

lvwerra | 2023-02-23T17:42:33 | I just checked and indeed it should be `input_ids`. Do you want to open a PR? Otherwise I can do it later. | 174 | 97 |

shizhediao | 2023-02-23T17:43:42 | I am going to open a PR right now. Thanks for your confirmation! | 174 | 98 |

shizhediao | 2023-02-23T17:50:44 | hi I have opened a PR #175. | 174 | 99 |

lvwerra | 2023-02-23T17:44:21 | Yes, we use the raw logits, we found that usually works better than e.g. softmax normalized outputs. And you get one reward per sequence from the classifier model so no need to aggregate them. In theory you could pass a reward per token but this is not implemented at the moment. | 173 | 100 |
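A pure-Python sketch of the two options, assuming a binary classifier that returns `[negative_logit, positive_logit]` per sequence (the function name and numbers are hypothetical). Either way there is one scalar reward per sequence. The softmax variant saturates near 1, so a strongly positive and a very strongly positive review end up with almost the same reward, while the raw logits keep them apart; this illustrates the difference and is not a stated reason from the thread:

```python
import math

def rewards_from_classifier(logit_pairs, use_softmax=False):
    """One scalar reward per sequence from [negative_logit, positive_logit]."""
    rewards = []
    for neg, pos in logit_pairs:
        if use_softmax:
            # Probability of the positive class.
            rewards.append(math.exp(pos) / (math.exp(neg) + math.exp(pos)))
        else:
            # Raw positive-class logit.
            rewards.append(pos)
    return rewards

outputs = [[-2.0, 3.0], [1.0, -1.0], [-6.0, 9.0]]
print(rewards_from_classifier(outputs))                    # [3.0, -1.0, 9.0]
print(rewards_from_classifier(outputs, use_softmax=True))  # approx. [0.9933, 0.1192, 1.0]
```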

Mryangkaitong | 2023-02-25T04:58:12 | Thank you very much. I have another question: the model I want to train with PPO is an AutoModelForSeq2SeqLM. Following this script (https://github.com/lvwerra/trl/blob/main/examples/sentiment/scripts/gpt2-sentiment.py#L104), I changed it to

`from transformers import AutoModelForSeq2SeqLM`

`model = AutoModelForSeq2SeqLM.from_pretrained(config.model_name, trust_remote_code=True)`

`ref_model = AutoModelForSeq2SeqLM.from_pretrained(config.model_name, trust_remote_code=True)`

The model can be loaded successfully

But when execution reaches line 113 (https://github.com/lvwerra/trl/blob/main/examples/sentiment/scripts/gpt2-sentiment.py#L113), the following error is reported:

`ValueError: model must be a PreTrainedModelWrapper, got <class 'transformers_modules.local.modeling_glm.GLMForConditionalGeneration'> - supported architectures are: (<class 'trl.models.modeling_value_head.AutoModelForCausalLMWithValueHead'>, <class 'trl.models.modeling_value_head.AutoModelForSeq2SeqLMWithValueHead'>)`

When I use

`from trl import AutoModelForSeq2SeqLMWithValueHead`

`model = AutoModelForSeq2SeqLMWithValueHead.from_pretrained(config.model_name, trust_remote_code=True)`

I get the following error instead:

`File "/root/anaconda3/envs/RLHF/lib/python3.8/site-packages/trl/models/modeling_base.py", line 81, in from_pretrained`

`pretrained_model = cls.transformers_parent_class.from_pretrained(`

`File "/root/anaconda3/envs/RLHF/lib/python3.8/site-packages/transformers/models/auto/auto_factory.py", line 434, in from_pretrained`

`config, kwargs = AutoConfig.from_pretrained(`

`File "/root/anaconda3/envs/RLHF/lib/python3.8/site-packages/transformers/models/auto/configuration_auto.py", line 855, in from_pretrained`

`raise ValueError(`

`ValueError: Loading /search/PPO/glm_0.3 requires you to execute the configuration file in that repo on your local machine. Make sure you have read the code there to avoid malicious use, then set the option trust_remote_code=True to remove this error.`

How can I adapt the current trl framework so that I can use PPO to train my AutoModelForSeq2SeqLM model?

Thank you very much; looking forward to your answer | 173 | 101 |

lvwerra | 2023-02-28T09:45:21 | @younesbelkada this might be an issue of a kwarg not being passed along to the original model class, wdyt? | 173 | 102 |

younesbelkada | 2023-02-28T09:52:00 | Hello @Mryangkaitong,

Thanks for the issue!

Yes, this is possible. The script below works on the `main` branch of `trl`:

```python

from trl import AutoModelForSeq2SeqLMWithValueHead

model = AutoModelForSeq2SeqLMWithValueHead.from_pretrained("t5-small", trust_remote_code=True)

```

I believe you need to use the latest changes of the library, by installing `trl` from source:

```

pip install git+https://github.com/lvwerra/trl.git

```

I believe this has been fixed on https://github.com/lvwerra/trl/pull/147

Let us know if the problem still persists! | 173 | 103 |

Mryangkaitong | 2023-03-04T04:57:46 | OK, I have solved it. | 173 | 104 |

shizhediao | 2023-02-23T06:39:09 | Seems that the shapes of `ppo/policy/advantages` are inconsistent

```

stats['ppo/policy/advantages'].shape torch.Size([832]) # process0

stats['ppo/policy/advantages'].shape torch.Size([896]) # process1

``` | 172 | 105 |

lvwerra | 2023-02-23T09:27:39 | Thanks for reporting, that indeed looks like the main issue! I'll look into a fix. | 172 | 106 |

shizhediao | 2023-02-23T09:29:26 | Thanks for your quick reply!

Good news: I fixed it by installing the latest version from GitHub instead of from pip, and magically, it is solved.

Although I do not know exactly what happened, I think it may be related to the recent bug fix (see the recent commits) | 172 | 107 |
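The mismatch above (832 vs 896 entries on two processes) presumably matters because per-process stats are combined across processes, and such collective operations expect identical shapes. A common remedy, sketched here in pure Python, is to pad every process's 1-D stat to the longest length before combining and strip the padding afterwards; in `accelerate` the corresponding utility is `Accelerator.pad_across_processes`. Whether the later TRL fix works this way is not stated in the thread, and the helper names below are hypothetical:

```python
def pad_to_common_length(per_process_stats, pad_value=0.0):
    """Pad each process's 1-D stat to the longest length so all pieces share
    one shape; also return the true lengths so padding can be removed."""
    max_len = max(len(s) for s in per_process_stats)
    padded = [s + [pad_value] * (max_len - len(s)) for s in per_process_stats]
    lengths = [len(s) for s in per_process_stats]
    return padded, lengths

def unpad(padded, lengths):
    """Drop the padding again after the stats have been combined."""
    return [row[:n] for row, n in zip(padded, lengths)]

# Shapes from the report: 832 entries on process 0, 896 on process 1.
padded, lengths = pad_to_common_length([[1.0] * 832, [2.0] * 896])
print([len(row) for row in padded], lengths)  # [896, 896] [832, 896]
```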

HuggingFaceDocBuilderDev | 2023-02-22T16:47:42 | _The documentation is not available anymore as the PR was closed or merged._ | 170 | 108 |

HuggingFaceDocBuilderDev | 2023-02-21T17:33:27 | _The documentation is not available anymore as the PR was closed or merged._ | 169 | 109 |

HuggingFaceDocBuilderDev | 2023-02-21T10:19:03 | _The documentation is not available anymore as the PR was closed or merged._ | 168 | 110 |

natolambert | 2023-02-21T21:01:11 | For things like this, I think it's best to run a couple of examples with and without the change. In theory, I think you could be right (@lvwerra probably knows).

I went back to the original repo and saw that TRL matches it.

https://github.com/openai/lm-human-preferences/blob/bd3775f200676e7c9ed438c50727e7452b1a52c1/lm_human_preferences/train_policy.py#L360 | 168 | 111 |

kashif | 2023-02-21T21:15:30 | Thanks @natolambert, yes: the `approxkl` is fine since it uses the square of the difference, so the order is not important. It's the `policykl` that is not correct, I believe. | 168 | 112 |
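The order argument can be checked with a small pure-Python sketch: a statistic built from the squared log-ratio (the form described for `approxkl`) gives the same value whichever policy comes first, while a plain mean log-ratio flips sign when the arguments are swapped, so its argument order matters. The function names echo the logged stats but this is not TRL's code:

```python
def approxkl(logprobs, old_logprobs):
    """0.5 * mean squared log-ratio: symmetric in its two arguments."""
    return 0.5 * sum((a - b) ** 2 for a, b in zip(logprobs, old_logprobs)) / len(logprobs)

def mean_log_ratio(logprobs, old_logprobs):
    """mean(logprobs - old_logprobs): changes sign when the arguments are swapped."""
    return sum(a - b for a, b in zip(logprobs, old_logprobs)) / len(logprobs)

new = [-1.0, -0.5, -2.0]
old = [-1.2, -0.4, -2.5]
print(approxkl(new, old), approxkl(old, new))              # identical values
print(mean_log_ratio(new, old), mean_log_ratio(old, new))  # same magnitude, opposite signs
```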

lvwerra | 2023-02-22T09:50:58 | I wonder where that actually came from since it was not part of the original implementation 😂. True, if `policykl` should measure the KL-div of the current to the old policy then it's the wrong way round. Since it's only used for monitoring I am up to changing it unless there is a strong incentive to keep it that way. | 168 | 113 |

kashif | 2023-02-22T09:52:36 | Yes, in any case it's just for logging; it's not used for backprop etc. I checked other implementations, e.g. Ray and Spinning Up, and they compute it as in this fix. | 168 | 114 |

HuggingFaceDocBuilderDev | 2023-02-20T21:27:37 | _The documentation is not available anymore as the PR was closed or merged._ | 167 | 115 |

younesbelkada | 2023-02-21T17:33:57 | Thanks for approving

Actually let's close this PR as this change has been already done on #163 (precisely the commit [6185db0](https://github.com/lvwerra/trl/pull/163/commits/6185db0c6d86cc147873be6f0ec135c367d4ef48))! | 167 | 116 |

lvwerra | 2023-02-23T18:06:41 | Indeed, `total_ppo_epochs` in the config is deprecated. If you want to train for multiple epochs, it's best to add an additional for-loop around the dataloader:

```python
for epoch in range(num_epochs):  # num_epochs: number of passes over the dataset
    for step, batch in tqdm(enumerate(ppo_trainer.dataloader)):
        query_tensors = batch["input_ids"]
        ...
```

Agreed that this is not very clear in the examples - will update them and remove the `total_ppo_epochs` from the config.

| 166 | 117 |

gjmulder | 2023-02-28T17:40:34 | Thanks for the reply. Should I leave this issue open to track your updates? | 166 | 118 |

github-actions[bot] | 2023-06-20T15:05:01 | This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

| 166 | 119 |

lvwerra | 2023-02-21T17:46:00 | Hi @ayulockin! That sounds great! I think a good first step would be to work on an example (e.g. in `examples`+`docs`) of a parameter sweep with W&B. Based on feedback and usage we can then see how/if we want to directly integrate it into the library. | 165 | 120 |

lvwerra | 2023-06-01T12:30:51 | Closing this for now - feel free to reopen if there's an update! | 165 | 121 |

lvwerra | 2023-02-21T17:44:00 | Yes, lists of tensors are usually converted to histograms automatically (see rewards or ratios). Not sure what happened there, but if you want to take a look, that would be much appreciated. | 164 | 122 |

gjmulder | 2023-02-21T17:59:22 | I'm not too familiar with Wandb, so I had wondered if it could handle a list of tensors. `env/reward_dist` is rendering correctly as a histogram which is what made me suspect that tensors weren't supported. | 164 | 123 |

github-actions[bot] | 2023-06-20T15:05:02 | This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

| 164 | 124 |

HuggingFaceDocBuilderDev | 2023-02-17T13:09:55 | _The documentation is not available anymore as the PR was closed or merged._ | 163 | 125 |

edbeeching | 2023-02-20T15:50:29 | I just pushed a commit that resolves most of these changes.

Remaining questions / todo:

- For the upstreaming of properties/methods missing from PretrainedModelBase, I added them as follows:

`model.config = model.pretrained_model.config`

`model.prepare_inputs_for_generation = model.pretrained_model.prepare_inputs_for_generation`

Alternatively, should I implement these as actual methods?

- I agree with making peft a soft-dependency, but I have not implemented this yet.

- I agree with tests, but I have not implemented these yet.

I will implement the rest of the changes later in the week. | 163 | 126 |
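For the option of implementing the forwarded members as actual methods, here is a minimal plain-Python sketch. Both class names are hypothetical; the point is that a property and a delegating method always read from the wrapped model, whereas assigning the attributes once copies references at that moment and goes stale if the wrapped model's attribute is later reassigned:

```python
class PretrainedModelStub:
    """Hypothetical stand-in for a transformers model."""

    def __init__(self):
        self.config = {"model_type": "gpt2"}

    def prepare_inputs_for_generation(self, input_ids, **kwargs):
        return {"input_ids": input_ids, **kwargs}


class ValueHeadWrapperSketch:
    """Exposes selected members of the wrapped model as a real property and
    method instead of copying them onto the wrapper at init time."""

    def __init__(self, pretrained_model):
        self.pretrained_model = pretrained_model

    @property
    def config(self):
        return self.pretrained_model.config

    def prepare_inputs_for_generation(self, *args, **kwargs):
        return self.pretrained_model.prepare_inputs_for_generation(*args, **kwargs)


base = PretrainedModelStub()
wrapper = ValueHeadWrapperSketch(base)
base.config = {"model_type": "opt"}  # reassigning on the wrapped model...
print(wrapper.config)                # ...is still visible through the wrapper
```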

younesbelkada | 2023-03-06T13:39:32 | Thanks @lvwerra for the review!

1- yes we can do it in a follow-up PR regarding docs

2- As the base model is kept untouched during training, you can just share the model ID of the base model and use it. That is also how models are pushed with `peft`: we never share the entire model, only the adapters, since you can retrieve the model ID of the base model using `PeftConfig`. Here is a nice example: https://huggingface.co/crumb/FLAN-OPT-6.7b-LoRA | 163 | 127 |

HuggingFaceDocBuilderDev | 2023-02-17T11:05:05 | _The documentation is not available anymore as the PR was closed or merged._ | 162 | 128 |

younesbelkada | 2023-02-22T11:00:36 | Thanks a mile for the extensive review @lvwerra! I should have addressed them now, and I left a few minor questions :) | 162 | 129 |

lvwerra | 2023-02-20T10:49:36 | We are doing several optimization steps on the model inside `step`, changing its weights, so the initial values the model predicts (`values`) might differ from the values it predicts in the following steps (`vpreds`). I agree the naming is not super intuitive :) | 161 | 130 |
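A minimal pure-Python sketch of how `values` and `vpreds` typically meet in a PPO value loss, following the clipping scheme common in PPO implementations: the current predictions are regressed onto `returns` (computed from the rewards and the old value estimates, e.g. via GAE), and a second term uses predictions clipped to stay within `cliprange_value` of the old `values`, keeping the larger of the two errors. The parameter name follows TRL's config, but treat the function as illustrative rather than TRL's exact code:

```python
def clipped_value_loss(vpreds, values, returns, cliprange_value):
    """PPO-style value loss over a list of token positions.

    vpreds: value predictions from the model as it is being updated
    values: value predictions made before the optimization steps
    returns: regression targets for the value head"""
    total = 0.0
    for vpred, old, ret in zip(vpreds, values, returns):
        # Keep the new prediction within cliprange_value of the old one.
        clipped = min(max(vpred, old - cliprange_value), old + cliprange_value)
        # Take the larger (more pessimistic) squared error.
        total += max((vpred - ret) ** 2, (clipped - ret) ** 2)
    return 0.5 * total / len(vpreds)

loss = clipped_value_loss(vpreds=[0.5, 1.1], values=[0.0, 1.0], returns=[1.0, 1.0], cliprange_value=0.2)
print(round(loss, 4))  # 0.1625
```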

lvwerra | 2023-03-06T14:00:19 | Closing this for now. Feel free to re-open if it is still unclear :) | 161 | 131 |

lvwerra | 2023-02-21T17:48:26 | Sounds really cool! Have you been able to test it already? If you have a working example then we can add it as an example! This might also be interesting to @lewtun. | 160 | 132 |

lvwerra | 2023-06-01T12:29:13 | Closing this for now - feel free to reopen if there's an update! | 160 | 133 |

review-notebook-app[bot] | 2023-02-16T17:53:40 | Check out this pull request on <a href="https://app.reviewnb.com/lvwerra/trl/pull/159"><img align="absmiddle" alt="ReviewNB" height="28" class="BotMessageButtonImage" src="https://raw.githubusercontent.com/ReviewNB/support/master/images/button_reviewnb.png"/></a>

See visual diffs & provide feedback on Jupyter Notebooks.

---

<i>Powered by <a href='https://www.reviewnb.com/?utm_source=gh'>ReviewNB</a></i> | 159 | 134 |

HuggingFaceDocBuilderDev | 2023-02-16T17:58:21 | _The documentation is not available anymore as the PR was closed or merged._ | 159 | 135 |

review-notebook-app[bot] | 2023-02-16T16:22:42 | Check out this pull request on <a href="https://app.reviewnb.com/lvwerra/trl/pull/158"><img align="absmiddle" alt="ReviewNB" height="28" class="BotMessageButtonImage" src="https://raw.githubusercontent.com/ReviewNB/support/master/images/button_reviewnb.png"/></a>

See visual diffs & provide feedback on Jupyter Notebooks.

---

<i>Powered by <a href='https://www.reviewnb.com/?utm_source=gh'>ReviewNB</a></i> | 158 | 136 |

HuggingFaceDocBuilderDev | 2023-02-16T16:31:46 | The docs for this PR live [here](/static-proxy?url=https%3A%2F%2Fmoon-ci-docs.huggingface.co%2Fdocs%2Ftrl%2Fpr_158). All of your documentation changes will be reflected on that endpoint. | 158 | 137 |

younesbelkada | 2023-02-16T16:38:05 | Argh, I think one of the commits removed the stdout of the cells below, which is needed. Could you revert that? 🙏

| 158 | 138 |

BirgerMoell | 2023-02-16T16:38:20 | I used the `nbstripout` package to remove the output logs. However, this removed all the output logs on commit.

Here is how to install it; after that, it just works on every commit:

`nbstripout --install`

I hope this is better. Otherwise, we could just commit the previous version that kept your logs intact. | 158 | 139 |

younesbelkada | 2023-02-16T16:39:39 | Yeah I think keeping the previous commit is better here! i.e. revert to the commit [4c46574](https://github.com/lvwerra/trl/pull/158/commits/4c46574fab95af8066bab00adb3953d8e1f8becc) | 158 | 140 |

BirgerMoell | 2023-02-16T17:54:59 | I made a new commit instead that seems clean. It was a bit messy to clean this up.

That one should contain just the changes, without any added output logs.

https://github.com/lvwerra/trl/pull/159 | 158 | 141 |

HuggingFaceDocBuilderDev | 2023-02-16T15:13:18 | _The documentation is not available anymore as the PR was closed or merged._ | 157 | 142 |

HuggingFaceDocBuilderDev | 2023-02-16T15:11:26 | _The documentation is not available anymore as the PR was closed or merged._ | 156 | 143 |

lvwerra | 2023-02-16T15:29:26 | What's the issue with `lm_logits` being in bf16? | 156 | 144 |

younesbelkada | 2023-02-16T15:30:52 | So that we can compute the loss in fp32, which I found to be more stable. Also, we sometimes log a list of tensors, and casting the loss to fp32 directly avoids the issue with `numpy` and `bf16`. | 156 | 145 |

RylanSchaeffer | 2024-08-27T13:20:00 | @younesbelkada @lvwerra I'm running into a problem where this forced upcast causes a massive spike in memory for models with large vocabularies (e.g., Gemma 2 by Google). This then either throws an OOM error or forces me to cut the minibatch size in half, which doubles the PPO runtime

Issue: https://github.com/huggingface/trl/issues/1980

Could you please provide more information or evidence about the stability argument? | 156 | 146 |
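The memory concern can be estimated with simple arithmetic: a logits tensor has batch x sequence x vocabulary entries, so its size grows linearly with the vocabulary, and an fp32 copy is twice the size of the bf16 one. The sizes below are hypothetical, using a 256k-entry vocabulary as an assumed stand-in for large-vocabulary models such as Gemma 2:

```python
def logits_bytes(batch_size, seq_len, vocab_size, bytes_per_elem):
    """Memory for one [batch, seq, vocab] logits tensor, in bytes."""
    return batch_size * seq_len * vocab_size * bytes_per_elem

GIB = 1024 ** 3
# Hypothetical mini-batch: 8 sequences of 512 tokens, 256_000-entry vocabulary.
bf16 = logits_bytes(8, 512, 256_000, 2)
fp32 = logits_bytes(8, 512, 256_000, 4)
print(f"bf16 logits: {bf16 / GIB:.2f} GiB, fp32 copy: {fp32 / GIB:.2f} GiB")
# prints "bf16 logits: 1.95 GiB, fp32 copy: 3.91 GiB"; both exist while upcasting
```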

younesbelkada | 2023-02-16T15:10:43 | Agreed, the fix seems to be to properly call `eval()` before `generate` and call `train()` afterwards | 155 | 147 |
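The `eval()` / `train()` pattern can be wrapped in a small context manager (a hypothetical helper, not part of TRL) that also restores the previous mode if generation raises. In torch, `eval()` turns off train-only behaviour such as dropout. The sketch relies only on torch's `training` attribute and `train(mode)`/`eval()` methods; a toy class stands in for `torch.nn.Module` so the example runs without torch:

```python
from contextlib import contextmanager

@contextmanager
def eval_mode(model):
    """Switch to eval() for generation, then restore the previous mode."""
    was_training = model.training
    model.eval()
    try:
        yield model
    finally:
        model.train(was_training)

class ToyModule:
    """Hypothetical stand-in mimicking torch.nn.Module's train()/eval() API."""

    def __init__(self):
        self.training = True

    def train(self, mode=True):
        self.training = mode
        return self

    def eval(self):
        return self.train(False)

model = ToyModule()
with eval_mode(model):
    print("generating, training =", model.training)  # generating, training = False
print("after, training =", model.training)           # after, training = True
```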

HuggingFaceDocBuilderDev | 2023-02-16T14:33:34 | _The documentation is not available anymore as the PR was closed or merged._ | 154 | 148 |

HuggingFaceDocBuilderDev | 2023-02-21T17:07:09 | _The documentation is not available anymore as the PR was closed or merged._ | 153 | 149 |

lvwerra | 2023-02-22T10:59:03 | The breaking change also affects users who currently use the library with `forward_batch_size`. What do you think about setting its default to `None` and, if it is set to another value, overwriting `mini_batch_size` with it, together with a warning that it now also affects `mini_batch_size`? | 153 | 150 |
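A minimal sketch of the proposed deprecation path using a dataclass. The class name is hypothetical and the real config has many more fields; only the `None` default, the override, and the warning follow the proposal above:

```python
import warnings
from dataclasses import dataclass
from typing import Optional

@dataclass
class SketchPPOConfig:
    """Hypothetical config illustrating the proposed deprecation path."""
    mini_batch_size: int = 1
    forward_batch_size: Optional[int] = None  # deprecated; None means "not set"

    def __post_init__(self):
        if self.forward_batch_size is not None:
            warnings.warn(
                "`forward_batch_size` is deprecated; setting it now also overrides "
                "`mini_batch_size`, which controls both forward passes and PPO mini-batches.",
                FutureWarning,
            )
            self.mini_batch_size = self.forward_batch_size

print(SketchPPOConfig(mini_batch_size=4).mini_batch_size)  # 4: deprecated field unset
```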