---
license: mit
language:
- en
tags:
- comfyui
- gguf-comfy
- gguf-node
widget:
- text: >-
    anime style anime girl with massive fennec ears and one big fluffy tail, she
    has blonde long hair blue eyes wearing a maid outfit with a long black gold
    leaf pattern dress, walking slowly to the front with sweetie smile, holding
    a fancy black forest cake with candles on top in the kitchen of an old dark
    Victorian mansion lit by candlelight with a bright window to the foggy
    forest
  output:
    url: samples\ComfyUI_00001_.webp
- text: >-
    a fox moving quickly in a beautiful winter scenery nature trees sunset
    tracking camera
  output:
    url: samples\ComfyUI_00002_.webp
- text: drag it <metadata inside>
  output:
    url: samples\ComfyUI_00003_.png
pipeline_tag: image-to-video
---

# **gguf-node test pack**

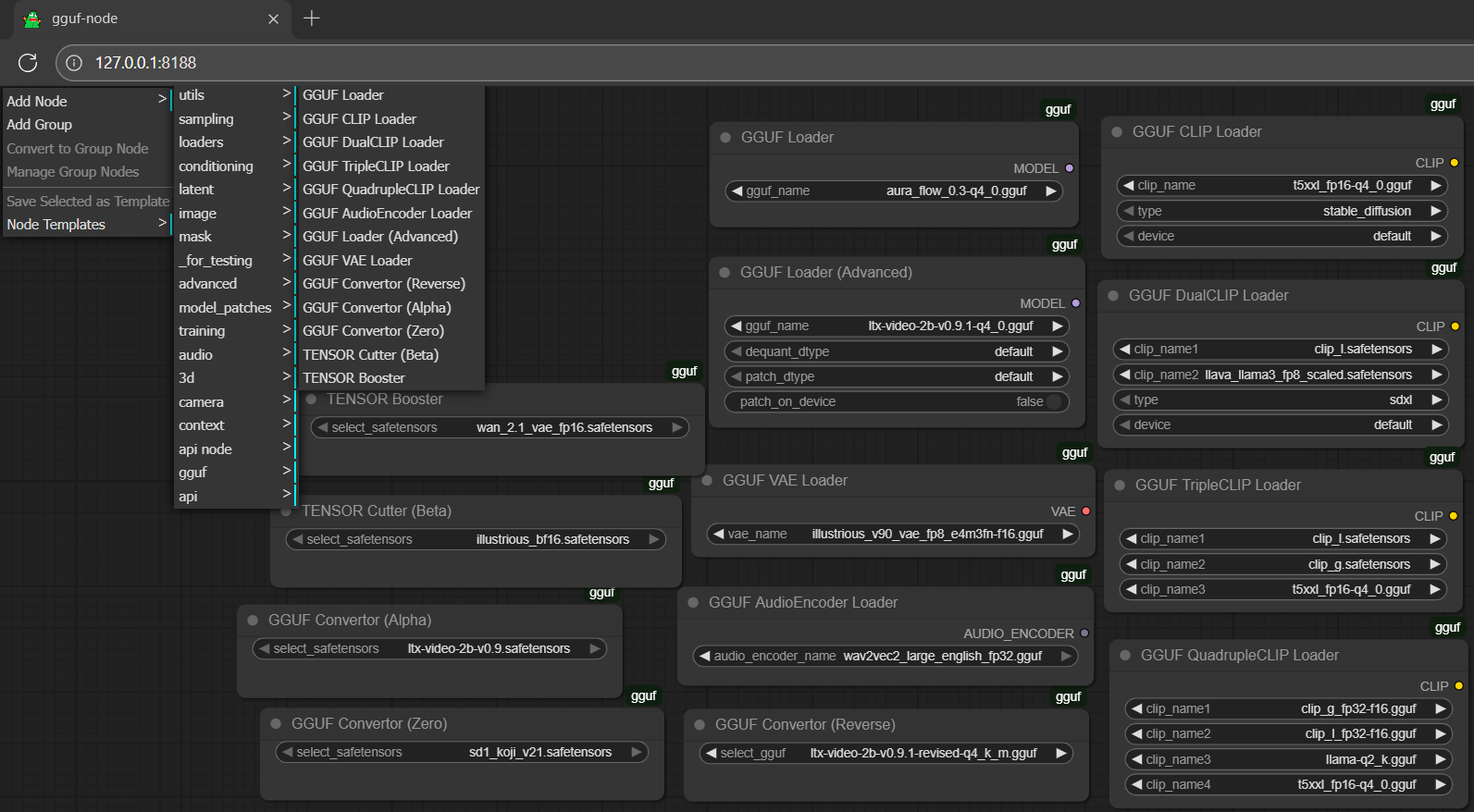

locate **gguf** in the Add Node > extension dropdown menu (between 3d and api; second-to-last option)

[<img src="https://raw.githubusercontent.com/calcuis/comfy/master/gguf.gif" width="128" height="128">](https://github.com/calcuis/gguf)

### **setup (in general)**
- drag gguf file(s) to diffusion_models folder (`./ComfyUI/models/diffusion_models`)
- drag clip or encoder(s) to text_encoders folder (`./ComfyUI/models/text_encoders`)
- drag controlnet adapter(s), if any, to controlnet folder (`./ComfyUI/models/controlnet`)
- drag lora adapter(s), if any, to loras folder (`./ComfyUI/models/loras`)
- drag vae decoder(s) to vae folder (`./ComfyUI/models/vae`)
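
For anyone who prefers a script over drag and drop, the folder layout in the setup list can be sketched as a small helper. This is only an illustration and not part of gguf-node: the `MODEL_FOLDERS` keys, the `place_model` name and the `comfy_root` parameter are hypothetical; only the folder names come from the setup list.

```python
import shutil
from pathlib import Path

# Folder names come from the setup list; the dictionary keys are
# hypothetical labels chosen for this sketch.
MODEL_FOLDERS = {
    "gguf": "diffusion_models",
    "text_encoder": "text_encoders",
    "controlnet": "controlnet",
    "lora": "loras",
    "vae": "vae",
}


def place_model(src, kind, comfy_root="./ComfyUI"):
    """Move a model file into the ComfyUI models subfolder for its kind."""
    if kind not in MODEL_FOLDERS:
        raise ValueError(f"unknown kind: {kind!r}")
    target_dir = Path(comfy_root) / "models" / MODEL_FOLDERS[kind]
    target_dir.mkdir(parents=True, exist_ok=True)  # create the folder if missing
    destination = target_dir / Path(src).name
    shutil.move(str(src), str(destination))
    return destination
```

For example, `place_model("my-model.gguf", "gguf")` would move a (hypothetical) `my-model.gguf` into `./ComfyUI/models/diffusion_models`.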

## **run it straight (no installation needed; recommended)**
- get the comfy pack with the new gguf-node ([pack](https://github.com/calcuis/gguf/releases))
- run the .bat file in the main directory

## **or, for existing users (alternative method)**
- you could git clone the node to your `./ComfyUI/custom_nodes` (more details [here](https://github.com/calcuis/gguf/))
- either navigate to `./ComfyUI/custom_nodes` before cloning, or clone elsewhere and drag and drop the cloned node (gguf repo) there
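
The clone step can be sketched in Python as follows. This is a minimal sketch that assumes `git` is on the PATH; the function names and the `gguf` target folder name (git's default for this repo URL) are illustrative choices, not something the repo prescribes.

```python
import subprocess
from pathlib import Path

REPO_URL = "https://github.com/calcuis/gguf"  # the repo linked in this section


def clone_command(comfy_root="./ComfyUI", folder_name="gguf"):
    """Build the git command that clones the node into custom_nodes."""
    target = Path(comfy_root) / "custom_nodes" / folder_name
    return ["git", "clone", REPO_URL, str(target)]


def clone_node(comfy_root="./ComfyUI"):
    """Run the clone; needs git installed and network access."""
    subprocess.run(clone_command(comfy_root), check=True)
```

Passing the target path to `git clone` has the same effect as navigating to `./ComfyUI/custom_nodes` first and cloning there.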

### **workflow**
- drag any workflow json file into the active ComfyUI browser window; or
- drag any generated output file (e.g., picture, video, etc.; which contains the workflow metadata) into the active ComfyUI browser window
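
To see what "workflow metadata" means for a picture, here is a minimal stdlib-only sketch that reads it back out of a PNG. It assumes ComfyUI's default PNG output stores the workflow JSON in a `tEXt` chunk keyed `workflow`; other formats (webp, video) store it differently and are not covered. The function names are hypothetical.

```python
import json
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_png_text_chunks(data):
    """Return the tEXt chunks of a PNG (given as bytes) as a keyword -> text dict."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    chunks = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        if ctype == b"tEXt":
            # A tEXt chunk is "keyword NUL text", both Latin-1 encoded.
            keyword, _, text = body.partition(b"\x00")
            chunks[keyword.decode("latin-1")] = text.decode("latin-1")
        if ctype == b"IEND":
            break
        pos += 12 + length  # 4 length + 4 type + data + 4 CRC
    return chunks


def read_workflow(path):
    """Load the embedded workflow JSON, or None if the PNG has no such chunk."""
    with open(path, "rb") as fh:
        text = read_png_text_chunks(fh.read()).get("workflow")
    return json.loads(text) if text is not None else None
```

Calling `read_workflow` on a generated PNG such as `samples/ComfyUI_00003_.png` should return the workflow as a dict if the assumption above holds, and `None` otherwise.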

### **simulator**
- design your own prompt; or
- generate random prompt/descriptor(s) with the [simulator](https://prompt.calcuis.us) (might not be applicable to all models)

<Gallery />

#### **cutter (beta)**
- drag safetensors file(s) to diffusion_models folder (`./ComfyUI/models/diffusion_models`)
- select the safetensors model; click `Queue` (run); simply track the progress from the console
- when it is done, the half-cut safetensors (fp8_e4m3fn) will be saved to the output folder (`./ComfyUI/output`)
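
One way to check the cutter's output is to look at the dtypes recorded in the file header. The sketch below reads only the safetensors JSON header (an 8-byte little-endian length followed by JSON), so no tensor data is loaded. It assumes the cutter writes a standard safetensors file, in which fp8_e4m3fn tensors would carry the `F8_E4M3` label; the function name is hypothetical.

```python
import json
import struct
from collections import Counter


def safetensors_dtypes(path):
    """Count tensors per dtype by reading only the safetensors JSON header."""
    with open(path, "rb") as fh:
        (header_len,) = struct.unpack("<Q", fh.read(8))  # little-endian u64
        header = json.loads(fh.read(header_len))
    return Counter(
        entry["dtype"]
        for name, entry in header.items()
        if name != "__metadata__"  # optional free-form metadata, not a tensor
    )
```

Running it on the original file and on the file in `./ComfyUI/output` shows how the dtype counts changed.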

### **convertor (alpha)**
- drag safetensors file(s) to diffusion_models folder (`./ComfyUI/models/diffusion_models`)
- select the safetensors model; click `Queue` (run); track the progress from the console
- the converted gguf file will be saved to the output folder (`./ComfyUI/output`)
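
A quick sanity check on a converted file is to read the fixed-size GGUF header. This is a minimal sketch, assuming a little-endian GGUF file of version 2 or later (magic `GGUF`, then a uint32 version, a uint64 tensor count and a uint64 metadata key/value count); the function name is hypothetical.

```python
import struct


def read_gguf_header(path):
    """Return (version, tensor_count, metadata_kv_count) from a GGUF file."""
    with open(path, "rb") as fh:
        raw = fh.read(24)  # 4 magic + 4 version + 8 + 8
    if len(raw) < 24 or raw[:4] != b"GGUF":
        raise ValueError("not a GGUF file")
    version, tensor_count, kv_count = struct.unpack("<IQQ", raw[4:24])
    return version, tensor_count, kv_count
```

A tensor count of zero, or a `ValueError`, would suggest the conversion did not finish cleanly.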

### **disclaimer**
- some models (original files), as well as parts of the code, were obtained from elsewhere or provided by others, and we might not easily identify the creator/contributor(s) behind them unless it was specified in the source; we would rather leave the credit blank than label it anonymous/unnamed/unknown
- we hope to make more effort to trace the sources; if it is your work, do let us know; we will credit it properly and promptly; thanks for everything

### **reference**
- sd3.5, sdxl from [stabilityai](https://huggingface.co/stabilityai)
- flux from [black-forest-labs](https://huggingface.co/black-forest-labs)
- aura from [fal](https://huggingface.co/fal)
- mochi from [genmo](https://huggingface.co/genmo)
- hyvid from [tencent](https://huggingface.co/tencent)
- ltxv from [lightricks](https://huggingface.co/Lightricks)
- comfyui from [comfyanonymous](https://github.com/comfyanonymous/ComfyUI)
- comfyui-gguf from [city96](https://github.com/city96/ComfyUI-GGUF)
- llama.cpp from [ggerganov](https://github.com/ggerganov/llama.cpp)
- llama-cpp-python from [abetlen](https://github.com/abetlen/llama-cpp-python)
- gguf-connector [ggc](https://pypi.org/project/gguf-connector)
- gguf-node ([pypi](https://pypi.org/project/gguf-node)|[repo](https://github.com/calcuis/gguf)|[pack](https://github.com/calcuis/gguf/releases))