gguf-node (beta) test pack

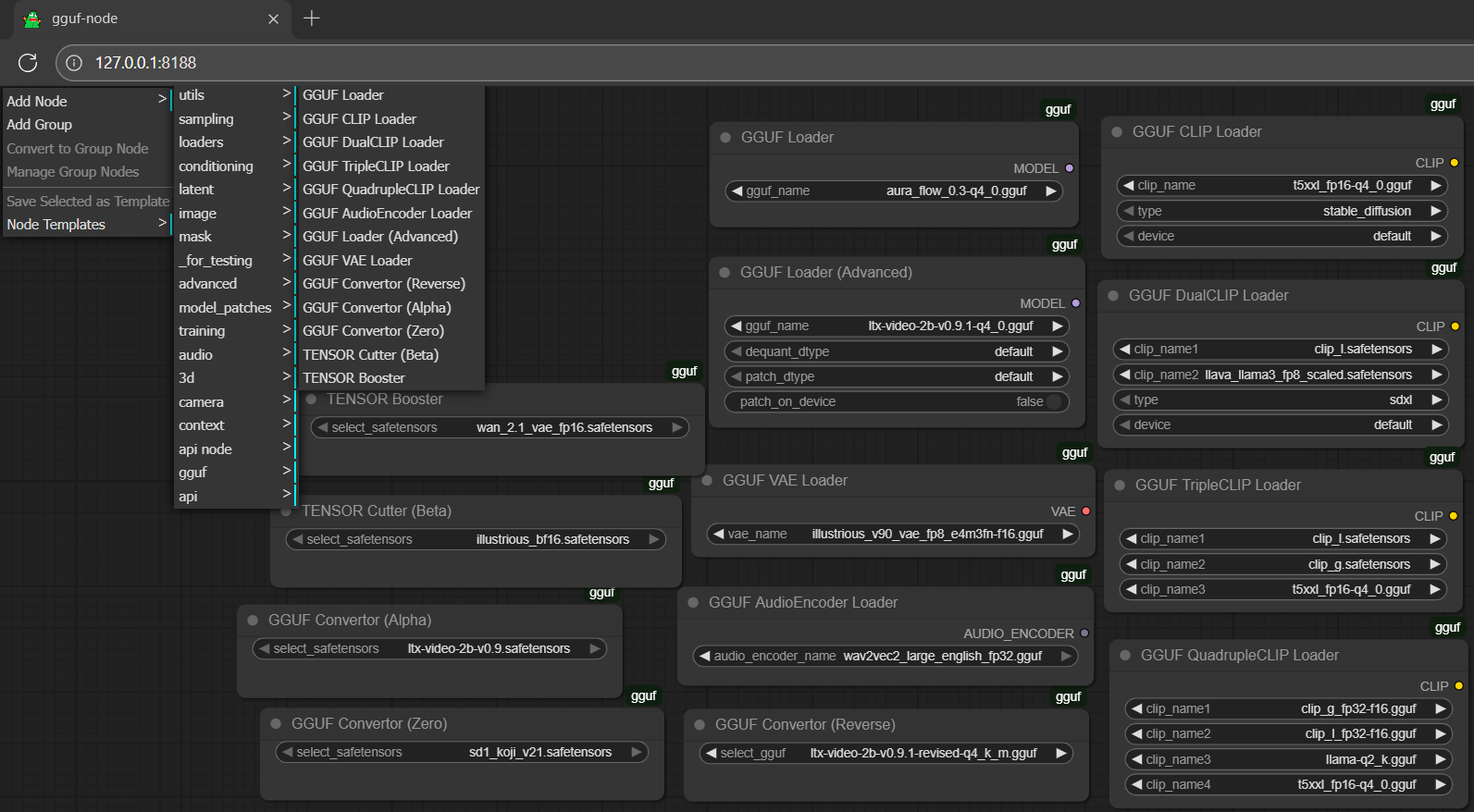

locate gguf from Add Node > extension dropdown menu (between 3d and api; second last option)


setup (in general)

- drag gguf file(s) to diffusion_models folder (./ComfyUI/models/diffusion_models)

- drag clip or encoder(s) to text_encoders folder (./ComfyUI/models/text_encoders)

- drag controlnet adapter(s), if any, to controlnet folder (./ComfyUI/models/controlnet)

- drag lora adapter(s), if any, to loras folder (./ComfyUI/models/loras)

- drag vae decoder(s) to vae folder (./ComfyUI/models/vae)
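The drag-and-drop steps above can also be scripted. The following is a minimal sketch, not part of the pack itself: the helper name `place_model`, the `kind` labels, and the default `comfy_root` are hypothetical, and only the folder names come from the list above.

```python
from pathlib import Path
import shutil

# Folder names mirror the setup list above; the keys are hypothetical labels.
MODEL_FOLDERS = {
    "gguf": "diffusion_models",
    "text_encoder": "text_encoders",
    "controlnet": "controlnet",
    "lora": "loras",
    "vae": "vae",
}


def place_model(src, kind, comfy_root="./ComfyUI"):
    """Move `src` into the models subfolder for `kind` and return the new path."""
    if kind not in MODEL_FOLDERS:
        raise ValueError(f"unknown kind: {kind!r}")
    dest_dir = Path(comfy_root) / "models" / MODEL_FOLDERS[kind]
    # Create the folder if it is missing (assumes a standard ComfyUI layout).
    dest_dir.mkdir(parents=True, exist_ok=True)
    dest = dest_dir / Path(src).name
    shutil.move(str(src), str(dest))
    return dest
```

For example, `place_model("my-model.gguf", "gguf")` would move the file to `./ComfyUI/models/diffusion_models/`, assuming the script runs from the directory that contains `ComfyUI`.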

run it straight (no installation needed)

- get the comfy pack with the new gguf-node (beta)

- run the .bat file in the main directory

workflow

- drag any workflow json file to the activated browser; or

- drag any generated output file (e.g., a picture or video that contains the workflow metadata) to the activated browser
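To check whether an output picture still carries its workflow before dragging it in, the metadata can be read directly. This is a rough sketch under stated assumptions: it assumes the picture is a PNG and that the workflow JSON sits in a `tEXt` chunk under the keyword `workflow`. It does not handle video files or `iTXt` chunks, and the function names are hypothetical.

```python
import json
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_png_text_chunks(path):
    """Return a dict of keyword -> text for the tEXt chunks of a PNG file."""
    texts = {}
    with open(path, "rb") as f:
        if f.read(8) != PNG_SIGNATURE:
            raise ValueError("not a PNG file")
        while True:
            header = f.read(8)  # 4-byte length + 4-byte chunk type
            if len(header) < 8:
                break
            length, chunk_type = struct.unpack(">I4s", header)
            data = f.read(length)
            f.read(4)  # skip the CRC (not verified in this sketch)
            if chunk_type == b"tEXt":
                # tEXt layout: keyword, a null byte, then the Latin-1 text.
                key, _, value = data.partition(b"\x00")
                texts[key.decode("latin-1")] = value.decode("latin-1")
            elif chunk_type == b"IEND":
                break
    return texts


def extract_workflow(path):
    """Return the embedded workflow as a dict, or None if none is found."""
    raw = read_png_text_chunks(path).get("workflow")  # keyword is an assumption
    return json.loads(raw) if raw is not None else None
```

If `extract_workflow("output.png")` returns `None`, the metadata was probably stripped (for instance by an image host or editor), and the file will not restore a workflow when dropped into the browser.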

simulator

- design your own prompt; or

- generate random prompt/descriptor(s) with the simulator (might not be applicable to all models)

- Prompt

- anime style anime girl with massive fennec ears and one big fluffy tail, she has blonde long hair blue eyes wearing a maid outfit with a long black gold leaf pattern dress, walking slowly to the front with sweetie smile, holding a fancy black forest cake with candles on top in the kitchen of an old dark Victorian mansion lit by candlelight with a bright window to the foggy forest

- Prompt

- a fox moving quickly in a beautiful winter scenery nature trees sunset tracking camera

- Prompt

- drag it <metadata inside>

reference

- flux from black-forest-labs

- sd3.5, sdxl from stabilityai

- aura from fal

- mochi from genmo

- hyvid from tencent

- ltxv from lightricks

- comfyui from comfyanonymous

- comfyui-gguf from city96

- llama.cpp from ggerganov

- llama-cpp-python from abetlen

- gguf-connector ggc

- gguf-node beta
