---
license: llama3.1
datasets:
- nvidia/OpenMathInstruct-2
language:
- en
base_model:
- meta-llama/Llama-3.1-8B-Instruct
model-index:
- name: Control-LLM-Llama3.1-8B-Math16
  results:
  - task:
      type: code-evaluation
    dataset:
      type: mixed
      name: Code Evaluation Dataset
    metrics:
    - name: pass_at_1,n=1 (code_instruct)
      type: pass_at_1
      value: 0.7840083073727934
      stderr: 0.013257237506304915
      verified: false
    - name: pass_at_1,n=1 (humaneval_greedy_instruct)
      type: pass_at_1
      value: 0.8170731707317073
      stderr: 0.03028135999593353
      verified: false
    - name: pass_at_1,n=1 (humaneval_plus_greedy_instruct)
      type: pass_at_1
      value: 0.7439024390243902
      stderr: 0.03418746588364997
      verified: false
    - name: pass_at_1,n=1 (mbpp_plus_0shot_instruct)
      type: pass_at_1
      value: 0.8042328042328042
      stderr: 0.0204357309715418
      verified: false
    - name: pass_at_1,n=1 (mbpp_sanitized_0shot_instruct)
      type: pass_at_1
      value: 0.7587548638132295
      stderr: 0.02673991635681605
      verified: false
  - task:
      type: original-capability
    dataset:
      type: meta/Llama-3.1-8B-Instruct-evals
      name: Llama-3.1-8B-Instruct-evals Dataset
      dataset_path: meta-llama/llama-3.1-8_b-instruct-evals
      dataset_name: Llama-3.1-8B-Instruct-evals__arc_challenge__details
    metrics:
    - name: exact_match,strict-match (original_capability_instruct)
      type: exact_match
      value: 0.5630801459168563
      stderr: 0.0028483348465514185
      verified: false
    - name: exact_match,strict-match (meta_arc_0shot_instruct)
      type: exact_match
      value: 0.8248927038626609
      stderr: 0.01113972223585952
      verified: false
    - name: exact_match,strict-match (meta_gpqa_0shot_cot_instruct)
      type: exact_match
      value: 0.296875
      stderr: 0.021609729061250887
      verified: false
    - name: exact_match,strict-match (meta_mmlu_0shot_instruct)
      type: exact_match
      value: 0.6815980629539952
      stderr: 0.003931452244804845
      verified: false
    - name: exact_match,strict-match (meta_mmlu_pro_5shot_instruct)
      type: exact_match
      value: 0.4093251329787234
      stderr: 0.004482884901882547
      verified: false
library_name: transformers
pipeline_tag: text-generation
---

Control-LLM-Llama3.1-8B-Math16

This model is Llama-3.1-8B-Instruct fine-tuned for mathematical tasks on the OpenCoder SFT dataset.
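Since the metadata lists `transformers` as the library and `text-generation` as the pipeline tag, loading the model could look roughly like the sketch below. This is a minimal illustration, not an official usage recipe: `MODEL_ID` is a hypothetical placeholder (the card does not state the full repository id), and `build_messages` and `generate` are helper names introduced here for the example.

```python
# Hypothetical placeholder: replace with the actual repository id or a local path.
MODEL_ID = "your-namespace/Control-LLM-Llama3.1-8B-Math16"


def build_messages(question: str) -> list:
    """Wrap a question as a single-turn chat, the input style used by Llama 3.1 Instruct models."""
    return [{"role": "user", "content": question}]


def generate(question: str, max_new_tokens: int = 256) -> str:
    """Run greedy text generation for one question and return the assistant reply."""
    # Imported lazily so build_messages can be used without transformers installed.
    from transformers import pipeline

    generator = pipeline("text-generation", model=MODEL_ID)
    outputs = generator(
        build_messages(question),
        max_new_tokens=max_new_tokens,
        do_sample=False,  # greedy decoding
    )
    # For chat-style input, generated_text is the whole conversation;
    # the last message is the newly generated assistant turn.
    return outputs[0]["generated_text"][-1]["content"]
```

Calling `generate("Write a function that reverses a string.")` downloads the weights on first use, so it needs network access and enough memory for an 8B-parameter model.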

Linked Paper

This model is associated with the paper: Control-LLM.

Evaluation Results

Here is an overview of the evaluation results and findings:

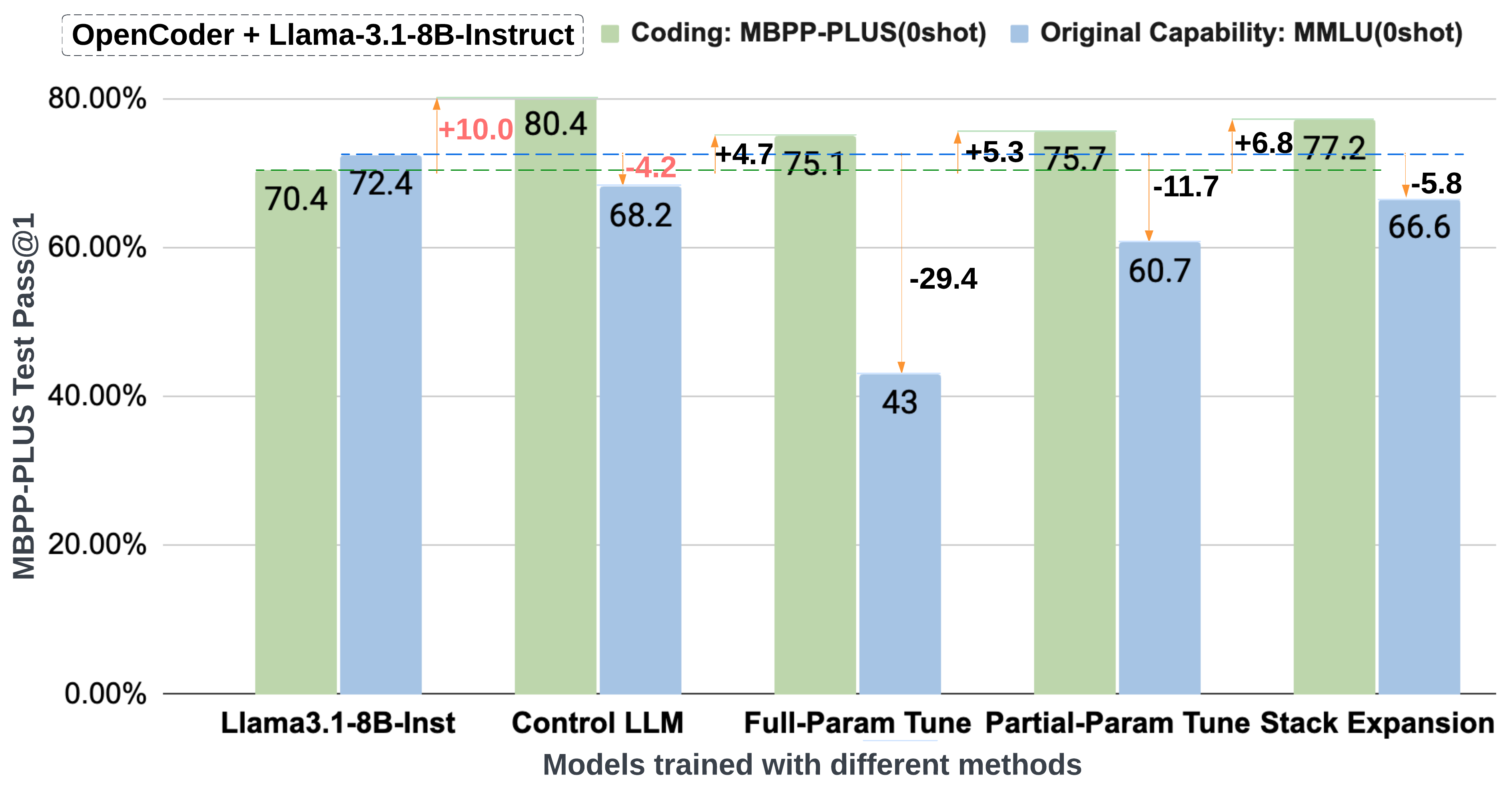

Benchmark Result and Catastrophic Forgetting on OpenCoder

The following plot illustrates benchmark result and catastrophic forgetting mitigation on the OpenCoder SFT dataset.

Benchmark Results Table

The table below summarizes evaluation results across coding tasks and original capabilities.

| Model | MB+ | MS | HE+ | HE | C-Avg | ARC | GP | MLU | MLUP | O-Avg | Overall |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Llama3.1-8B-Ins | 70.4 | 67.7 | 66.5 | 70.7 | 69.1 | 83.4 | 29.9 | 72.4 | 46.7 | 60.5 | 64.8 |
| OpenCoder-8B-Ins | 81.2 | 76.3 | 78.0 | 82.3 | 79.5 | 8.2 | 25.4 | 37.4 | 11.3 | 24.6 | 52.1 |
| Full Param Tune | 75.1 | 69.6 | 71.3 | 76.8 | 73.3 | 24.4 | 21.9 | 43.0 | 19.2 | 31.5 | 52.4 |
| Partial Param Tune | 75.7 | 71.6 | 74.4 | 79.3 | 75.0 | 70.2 | 28.1 | 60.7 | 32.4 | 48.3 | 61.7 |
| Stack Expansion | 77.2 | 72.8 | 73.2 | 78.7 | 75.6 | 80.0 | 26.3 | 66.6 | 38.2 | 54.2 | 64.9 |
| Hybrid Expansion* | 77.5 | 73.5 | 76.2 | 82.3 | 77.1 | 80.9 | 32.6 | 68.1 | 40.3 | 56.0 | 66.6 |
| Control LLM* | 80.4 | 75.9 | 74.4 | 81.1 | 78.3 | 82.5 | 29.7 | 68.2 | 40.9 | 56.3 | 67.3 |

Explanation:

- MB+: MBPP Plus

- MS: MBPP Sanitized

- HE+: HumanEval Plus

- HE: HumanEval

- C-Avg: Coding score, the size-weighted average across MB+, MS, HE+, and HE

- ARC: ARC benchmark

- GP: GPQA benchmark

- MLU: MMLU (Massive Multitask Language Understanding)

- MLUP: MMLU Pro

- O-Avg: Original capability score, the size-weighted average across ARC, GPQA, MMLU, and MMLU Pro

- Overall: Combined average across all tasks (in the table, each value equals the mean of C-Avg and O-Avg)
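The size-weighted averaging described above can be sketched as follows. The example recomputes the coding average from the four pass@1 scores listed in this card's metadata. The benchmark sizes in `ASSUMED_SIZES` are an assumption (commonly cited test-set sizes for these benchmarks); the card itself does not state them. The helper name `size_weighted_average` is introduced here for illustration.

```python
# Assumed number of problems per benchmark (not stated in this card).
ASSUMED_SIZES = {"MB+": 378, "MS": 257, "HE+": 164, "HE": 164}

# pass@1 scores taken from this card's metadata.
SCORES = {
    "MB+": 0.8042328042328042,  # mbpp_plus_0shot_instruct
    "MS": 0.7587548638132295,   # mbpp_sanitized_0shot_instruct
    "HE+": 0.7439024390243902,  # humaneval_plus_greedy_instruct
    "HE": 0.8170731707317073,   # humaneval_greedy_instruct
}


def size_weighted_average(scores: dict, sizes: dict) -> float:
    """Average the scores, weighting each benchmark by its number of problems."""
    total = sum(sizes[name] for name in scores)
    return sum(scores[name] * sizes[name] for name in scores) / total


c_avg = size_weighted_average(SCORES, ASSUMED_SIZES)
print(f"C-Avg: {c_avg:.4f}")  # C-Avg: 0.7840

# The Overall column is consistent with the plain mean of C-Avg and O-Avg,
# shown here with the Control LLM row of the table.
overall = (78.3 + 56.3) / 2
print(f"Overall: {overall:.1f}")  # Overall: 67.3
```

Under these assumed sizes the result matches the aggregate `code_instruct` score in the metadata (0.7840) and the 78.3 reported as C-Avg for Control LLM, which suggests the weights are the per-benchmark problem counts.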