
Stable Video Diffusion Image-to-Video Model Card

Stable Video Diffusion (SVD) Image-to-Video is a diffusion model that takes in a still image as a conditioning frame, and generates a video from it.

Model Details

Model Description

SVD Image-to-Video is a latent diffusion model trained to generate short video clips from a conditioning image. This model was trained to generate 14 frames at a resolution of 576x1024 given a context frame of the same size. We also finetune the widely used f8-decoder for temporal consistency. For convenience, we additionally provide the model with the standard frame-wise decoder.
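To give a rough sense of what "latent" means at this resolution, the sketch below computes the shape of the tensor the diffusion model works on for one clip. It assumes that "f8" denotes a spatial downsampling factor of 8 and that the autoencoder has 4 latent channels, as in Stable Diffusion's autoencoder; both are assumptions, and `latent_shape` is a hypothetical helper, not part of the generative-models codebase. The (frames, channels, height, width) ordering is also just an illustrative choice.

```python
def latent_shape(num_frames: int, height: int, width: int,
                 downsample: int = 8, latent_channels: int = 4) -> tuple[int, int, int, int]:
    """Return (frames, channels, latent_height, latent_width) for a video.

    Assumption: each frame is encoded by an autoencoder that shrinks both
    spatial dimensions by `downsample` (f8 -> 8) and outputs
    `latent_channels` channels (4 here, as in Stable Diffusion).
    """
    if height % downsample or width % downsample:
        raise ValueError("height and width must be divisible by the downsampling factor")
    return (num_frames, latent_channels, height // downsample, width // downsample)


# 14 frames at 576x1024 (height x width), as stated in the model description.
shape = latent_shape(14, 576, 1024)
print(shape)  # (14, 4, 72, 128)

# How many times fewer values the model denoises compared with RGB pixels.
pixels = 14 * 3 * 576 * 1024
latents = shape[0] * shape[1] * shape[2] * shape[3]
print(pixels / latents)  # 48.0
```

Under these assumptions, the model denoises 48 times fewer values than the RGB video contains, which is the main practical reason for operating in latent space; the (finetuned or frame-wise) decoder then maps the latents back to pixels.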

- Developed by: Stability AI

- Funded by: Stability AI

- Model type: Generative image-to-video model

Model Sources

For research purposes, we recommend our generative-models GitHub repository (https://github.com/Stability-AI/generative-models), which implements the most popular diffusion frameworks (both training and inference).

- Repository: https://github.com/Stability-AI/generative-models

- Paper: https://stability.ai/research/stable-video-diffusion-scaling-latent-video-diffusion-models-to-large-datasets

Evaluation

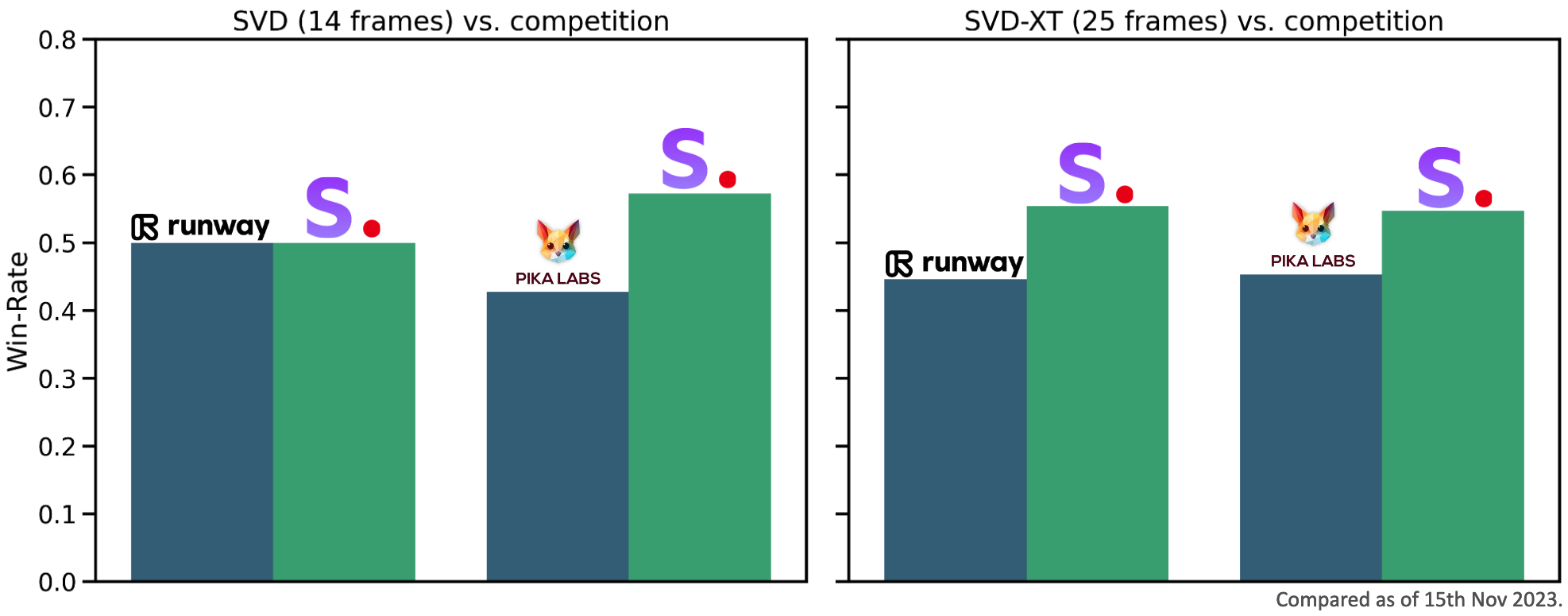

The chart above evaluates user preference for SVD-Image-to-Video over GEN-2 and PikaLabs.

SVD-Image-to-Video is preferred by human voters in terms of video quality. For details on the user study, we refer to the research paper.

Uses

Inference

git clone git@github.com:Stability-AI/generative-models.git

cd generative-models

pip install -r ./requirements/pt2.txt

Download the model weights from this model repository (requires Git LFS):

git lfs install

git clone https://huggingface.co/stabilityai/stable-video-diffusion-img2vid

PYTHONPATH=. streamlit run scripts/demo/video_sampling.py --server.port <port-id>
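The model expects a conditioning frame of 576x1024 (height x width), i.e. a 16:9 landscape image. If an input image has a different aspect ratio, one common approach (an assumption here, not necessarily what the demo script does internally) is to center-crop it to 16:9 before resizing. The hypothetical helper `center_crop_box` below computes such a crop box with integer arithmetic only.

```python
def center_crop_box(width: int, height: int,
                    target_w: int = 1024, target_h: int = 576) -> tuple[int, int, int, int]:
    """Return a (left, upper, right, lower) box with the target aspect ratio,
    centered inside a width x height image."""
    # Compare aspect ratios by cross-multiplication to avoid floating point.
    if width * target_h > height * target_w:
        # Image is wider than 16:9: keep the full height, trim left and right.
        crop_w = height * target_w // target_h
        crop_h = height
    else:
        # Image is taller than (or exactly) 16:9: keep the full width, trim top and bottom.
        crop_w = width
        crop_h = width * target_h // target_w
    left = (width - crop_w) // 2
    upper = (height - crop_h) // 2
    return (left, upper, left + crop_w, upper + crop_h)


print(center_crop_box(1024, 1024))  # (0, 224, 1024, 800)
print(center_crop_box(1920, 1080))  # (0, 0, 1920, 1080)
```

The box follows Pillow's (left, upper, right, lower) convention, so with Pillow one could write `img.crop(center_crop_box(*img.size)).resize((1024, 576))`; note that `resize` takes (width, height).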

Direct Use

The model is intended for research purposes only. Possible research areas and tasks include:

- Research on generative models.

- Safe deployment of models which have the potential to generate harmful content.

- Probing and understanding the limitations and biases of generative models.

- Generation of artworks and use in design and other artistic processes.

- Applications in educational or creative tools.

Excluded uses are described below.

Out-of-Scope Use

The model was not trained to produce factual or true representations of people or events; therefore, using the model to generate such content is out of scope for its abilities. The model should not be used in any way that violates Stability AI's Acceptable Use Policy.

Limitations and Bias

Limitations

- The generated videos are rather short (4 seconds or less), and the model does not achieve perfect photorealism.

- The model may generate videos with no motion or with only very slow camera pans.

- The model cannot be controlled through text.

- The model cannot render legible text.

- Faces and people in general may not be generated properly.

- The autoencoding part of the model is lossy.

Recommendations

The model is intended for research purposes only.