metadata

base_model: stabilityai/stable-diffusion-3-medium-diffusers

library_name: diffusers

license: other

instance_prompt: photo of a A@K man

widget:

- text: photo of a A@K man in a restaurant

output:

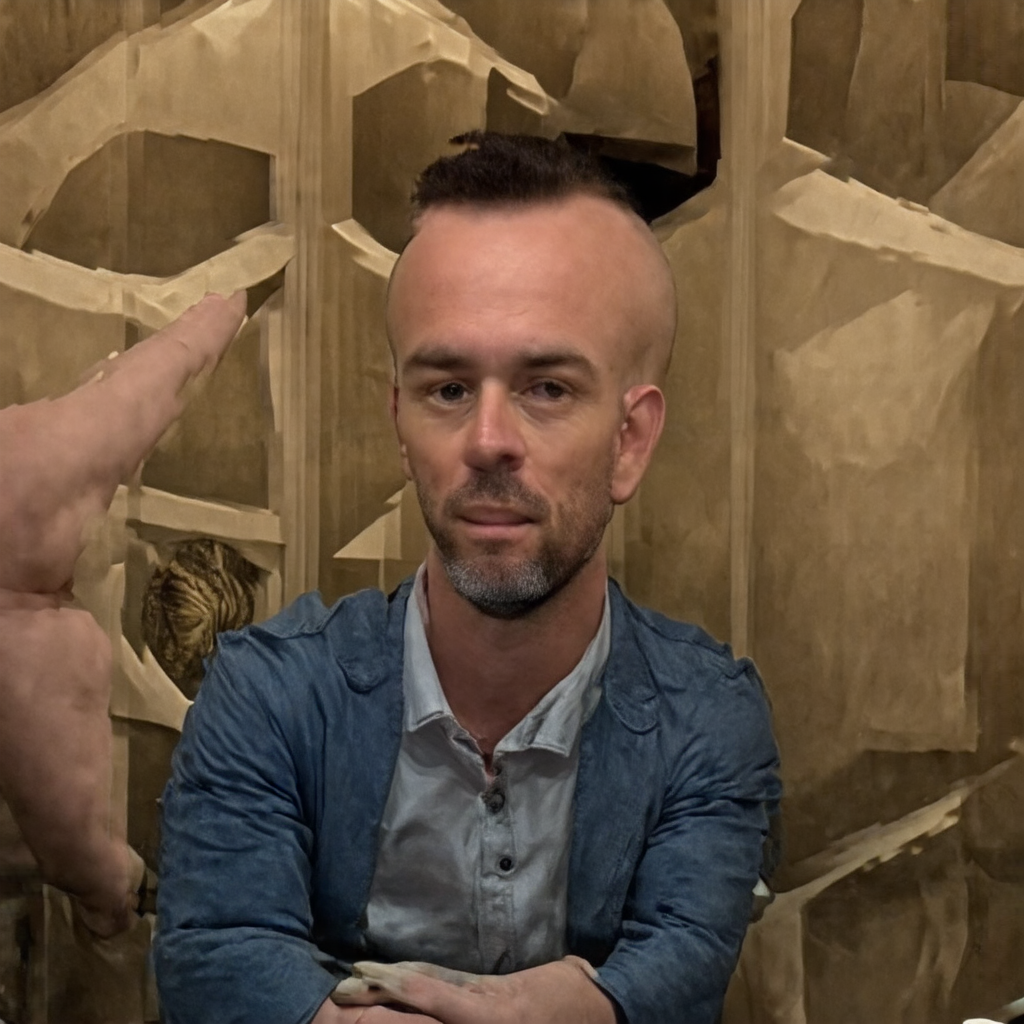

url: image_0.png

- text: photo of a A@K man in a restaurant

output:

url: image_1.png

- text: photo of a A@K man in a restaurant

output:

url: image_2.png

- text: photo of a A@K man in a restaurant

output:

url: image_3.png

tags:

- text-to-image

- diffusers-training

- diffusers

- template:sd-lora

- sd3

- sd3-diffusers

SD3 DreamBooth - mtreinik/output-20241203_235620

- Prompt

- photo of a A@K man in a restaurant

Model description

These are mtreinik/output-20241203_235620 DreamBooth weights for stabilityai/stable-diffusion-3-medium-diffusers.

The weights were trained using DreamBooth with the SD3 diffusers trainer.

The text encoder was not fine-tuned.

Trigger words

Include the phrase "photo of a A@K man" in your prompt to trigger image generation.
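
Since every prompt needs to carry the trigger phrase, a small helper can prepend it automatically. The sketch below is illustrative only: the names `TRIGGER` and `with_trigger` are hypothetical and are not part of diffusers or of this repository.

```python
# Trigger phrase taken from this model card.
TRIGGER = "photo of a A@K man"


def with_trigger(scene: str = "") -> str:
    """Return a prompt that starts with the trigger phrase exactly once.

    `scene` is free text such as "in a restaurant". If it already starts
    with the trigger phrase, it is returned unchanged (after stripping).
    """
    scene = scene.strip()
    if scene.startswith(TRIGGER):
        return scene
    # strip() drops the trailing space when no scene is given.
    return f"{TRIGGER} {scene}".strip()
```

For example, `with_trigger("in a restaurant")` yields the same prompt used in the samples on this card.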

Use it with the 🧨 diffusers library

from diffusers import AutoPipelineForText2Image

import torch

pipeline = AutoPipelineForText2Image.from_pretrained('mtreinik/output-20241203_235620', torch_dtype=torch.float16).to('cuda')

image = pipeline('photo of a A@K man in a restaurant').images[0]

License

Please adhere to the licensing terms as described [here](https://huggingface.co/stabilityai/stable-diffusion-3-medium/blob/main/LICENSE.md).

Intended uses & limitations

How to use

# TODO: add an example code snippet for running this diffusion pipeline
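
As a minimal sketch of one way to run the pipeline end to end, the function below loads the weights, fixes a seed so a run can be repeated, and writes the images to disk. The names `generate` and `output_paths`, the `outputs` directory, the file naming, and the step and guidance values are assumptions chosen for illustration, not settings validated for this checkpoint. A CUDA GPU is assumed.

```python
from pathlib import Path

REPO_ID = "mtreinik/output-20241203_235620"


def output_paths(out_dir, count, stem="sample"):
    """Return the paths the images will be saved to (hypothetical naming scheme)."""
    return [Path(out_dir) / f"{stem}_{i}.png" for i in range(count)]


def generate(
    prompt="photo of a A@K man in a restaurant",
    count=1,
    seed=0,
    out_dir="outputs",
    device="cuda",
):
    """Generate `count` images for `prompt` and save them as PNG files."""
    # Heavy imports are deferred so output_paths() works without torch installed.
    import torch
    from diffusers import AutoPipelineForText2Image

    pipeline = AutoPipelineForText2Image.from_pretrained(
        REPO_ID, torch_dtype=torch.float16
    ).to(device)

    # A seeded generator makes repeated runs start from the same noise.
    generator = torch.Generator(device=device).manual_seed(seed)

    images = pipeline(
        prompt,
        num_inference_steps=28,  # assumed value, not tuned for this model
        guidance_scale=7.0,      # assumed value, not tuned for this model
        num_images_per_prompt=count,
        generator=generator,
    ).images

    paths = output_paths(out_dir, count)
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    for image, path in zip(images, paths):
        image.save(path)
    return paths
```

Calling `generate(count=2)` would write `outputs/sample_0.png` and `outputs/sample_1.png`, provided the weights download successfully and the GPU has enough memory for half-precision inference.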

Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

Training details

[TODO: describe the data used to train the model]