metadata

license: mit

language:

- en

pipeline_tag: text-to-speech

tags:

- audiocraft

- audiogen

- styletts2

- audio

- synthesis

- shift

- audeering

- dkounadis

- sound

- scene

- acoustic-scene

- audio-generation

Affective TTS / SoundScapes

- SHIFT TTS tool

- Analysis of emotionality #1

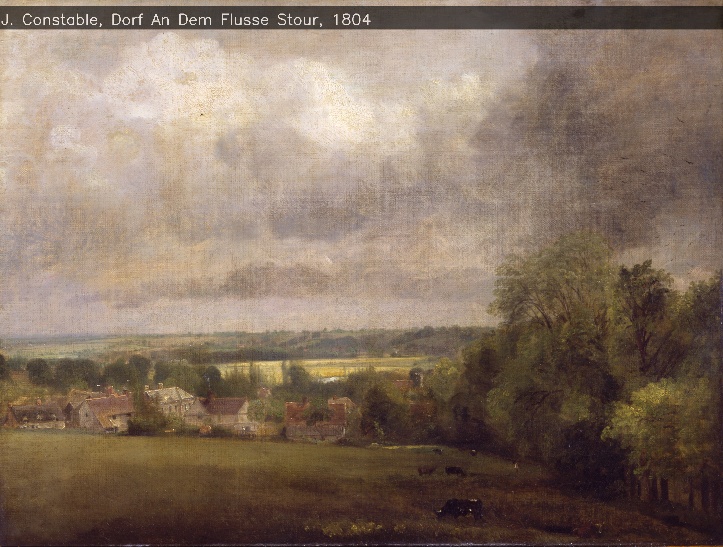

- Soundscape

e.g. trees, water, via AudioGen. landscape2soundscape.py shows how to overlay TTS and sound onto an image and create a video.

Available Voices

Flask API

Clone this repo

git clone https://huggingface.co/dkounadis/artificial-styletts2

Build env

virtualenv --python=python3 ~/.envs/.my_env

source ~/.envs/.my_env/bin/activate

cd shift/

pip install -r requirements.txt

Start API

CUDA_DEVICE_ORDER=PCI_BUS_ID HF_HOME=./hf_home CUDA_VISIBLE_DEVICES=2 python api.py
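The same launch can be scripted from Python. The following is a minimal sketch, not part of the repo: `api_env` and `start_api` are hypothetical helper names. It only reproduces the three environment variables from the command above: `CUDA_DEVICE_ORDER=PCI_BUS_ID` numbers GPUs by PCI bus ID (matching `nvidia-smi`), `CUDA_VISIBLE_DEVICES` exposes a single GPU to the process, and `HF_HOME` sets the Hugging Face cache directory.

```python
import os
import subprocess
import sys


def api_env(gpu_index: int, hf_home: str) -> dict:
    """Return a copy of the current environment with GPU and cache settings applied."""
    env = dict(os.environ)
    env["CUDA_DEVICE_ORDER"] = "PCI_BUS_ID"       # number GPUs as nvidia-smi does
    env["CUDA_VISIBLE_DEVICES"] = str(gpu_index)  # expose only this GPU to the process
    env["HF_HOME"] = hf_home                      # Hugging Face cache directory
    return env


def start_api(gpu_index: int = 2, hf_home: str = "./hf_home") -> subprocess.Popen:
    """Launch api.py in the background (hypothetical helper).

    Assumes the current working directory contains api.py.
    The caller is responsible for terminating the returned process.
    """
    return subprocess.Popen([sys.executable, "api.py"],
                            env=api_env(gpu_index, hf_home))
```

Change `gpu_index` to whichever GPU is free on your machine; the value 2 is simply the one used in the command above.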

The following examples require api.py to be already running, e.g. in a tmux session.
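Before running the scripts below, it can help to check that the API is actually listening. This is a minimal sketch under one loud assumption: the port. Flask's default is 5000, but this README does not state which port api.py binds to, so adjust `port` to match your setup. `api_is_up` is a hypothetical helper name.

```python
import socket


def api_is_up(host: str = "127.0.0.1", port: int = 5000, timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port.

    port=5000 is an ASSUMPTION (Flask's default); api.py may use another port.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False
```

A TCP check only confirms that a process is listening; it does not confirm that the models have finished loading.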

Landscape 2 Soundscape

# TTS & soundscape - overlay to .mp4

python landscape2soundscape.py

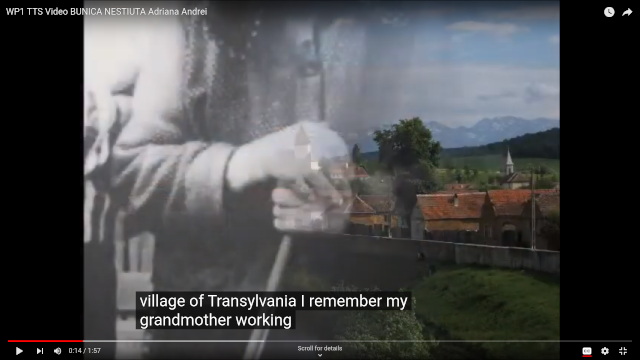

YouTube Videos / Examples

Substitute Native voice via TTS

The same video, with the native voice replaced by an English TTS voice of similar emotion

Video dubbing from subtitles .srt

.srt Video Dubbing

Generate dubbed video:

python tts.py --text assets/head_of_fortuna_en.srt --video assets/head_of_fortuna.mp4
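To illustrate what subtitle-driven dubbing has to work with, here is a minimal sketch that reads an .srt file into (start, end, text) segments, so that each synthesized utterance can be placed at its subtitle's time span. This is not taken from tts.py, whose internals are not described here; `parse_srt` is a hypothetical helper.

```python
import re
from typing import List, Tuple

_TIME = re.compile(r"(\d+):(\d{2}):(\d{2})[,.](\d{3})")


def _seconds(stamp: str) -> float:
    """Convert an 'HH:MM:SS,mmm' timestamp to seconds."""
    h, m, s, ms = map(int, _TIME.fullmatch(stamp.strip()).groups())
    return h * 3600 + m * 60 + s + ms / 1000.0


def parse_srt(text: str) -> List[Tuple[float, float, str]]:
    """Return a list of (start_sec, end_sec, subtitle_text) tuples."""
    segments = []
    # Subtitle blocks are separated by blank lines.
    for block in re.split(r"\n\s*\n", text.strip()):
        lines = [ln.strip() for ln in block.splitlines() if ln.strip()]
        for i, ln in enumerate(lines):
            if "-->" in ln:
                start, end = ln.split("-->")
                # Keep only the first token after the arrow (drops position cues).
                end = end.split()[0]
                # Join multi-line subtitle text into one sentence for TTS.
                segments.append((_seconds(start), _seconds(end), " ".join(lines[i + 1:])))
                break
    return segments
```

For example, `parse_srt(open("assets/head_of_fortuna_en.srt", encoding="utf-8").read())` would yield one tuple per subtitle.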

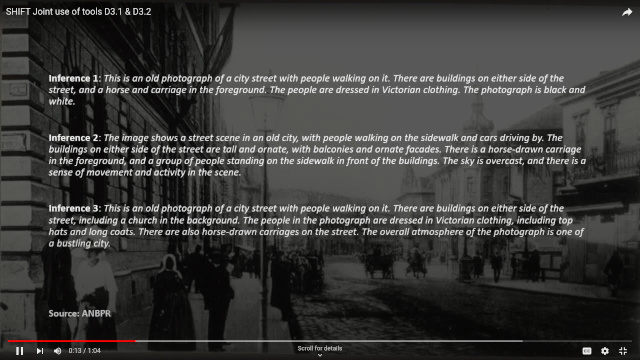

Joint Application of D3.1 & D3.2

Create a video from an image and text:

python tts.py --text sample.txt --image assets/image_from_T31.jpg
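Turning a still image plus a speech track into a video is commonly done with ffmpeg. The sketch below only builds such a command line; it assumes ffmpeg is installed and is not necessarily how tts.py produces its output. `still_video_cmd` and the file names in the usage note are hypothetical.

```python
from typing import List


def still_video_cmd(image: str, audio: str, out: str) -> List[str]:
    """Build an ffmpeg command that shows one image for the duration of the audio."""
    return [
        "ffmpeg", "-y",
        "-loop", "1", "-i", image,   # repeat the still image as the video stream
        "-i", audio,                 # synthesized speech (or any audio track)
        "-c:v", "libx264", "-tune", "stillimage",
        "-pix_fmt", "yuv420p",       # widely compatible pixel format
        "-c:a", "aac",
        "-shortest",                 # stop when the audio ends
        out,
    ]
```

The returned list can be passed to `subprocess.run(cmd, check=True)`, e.g. `still_video_cmd("assets/image_from_T31.jpg", "speech.wav", "out.mp4")`, where `speech.wav` and `out.mp4` are placeholder names.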

Landscape 2 Soundscape

# Loads image & text & sound-scene text and creates .mp4

python landscape2soundscape.py
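Overlaying speech on a soundscape amounts to summing the two signals with the background attenuated. The following is a minimal pure-Python sketch of that idea, assuming both signals are mono float samples in [-1, 1] at the same sample rate. It is an illustration only, not the mixing code used by landscape2soundscape.py; `overlay` and `bg_gain` are hypothetical names.

```python
from typing import List


def overlay(speech: List[float], background: List[float], bg_gain: float = 0.3) -> List[float]:
    """Mix speech with a looped, attenuated background; output has the speech's length."""
    if not background:
        return list(speech)
    mixed = []
    for i, s in enumerate(speech):
        b = background[i % len(background)]   # loop the background if it is shorter
        v = s + bg_gain * b                   # attenuate the background under the voice
        mixed.append(max(-1.0, min(1.0, v)))  # hard-clip to the valid sample range
    return mixed
```

A lower `bg_gain` keeps the voice intelligible; the default of 0.3 is an arbitrary starting point.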

For SHIFT demo / Collaboration with SMB

- YouTube Videos

Live Demo - Paplay

Flask

CUDA_DEVICE_ORDER=PCI_BUS_ID HF_HOME=/data/dkounadis/.hf7/ CUDA_VISIBLE_DEVICES=4 python live_api.py

Client (Ubuntu)

python live_demo.py # prompts for text input and plays the soundscape