| id | status | inserted_at | updated_at | _server_id | title | authors | filename | content | content_class.responses | content_class.responses.users | content_class.responses.status | content_class.suggestion | content_class.suggestion.agent | content_class.suggestion.score |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

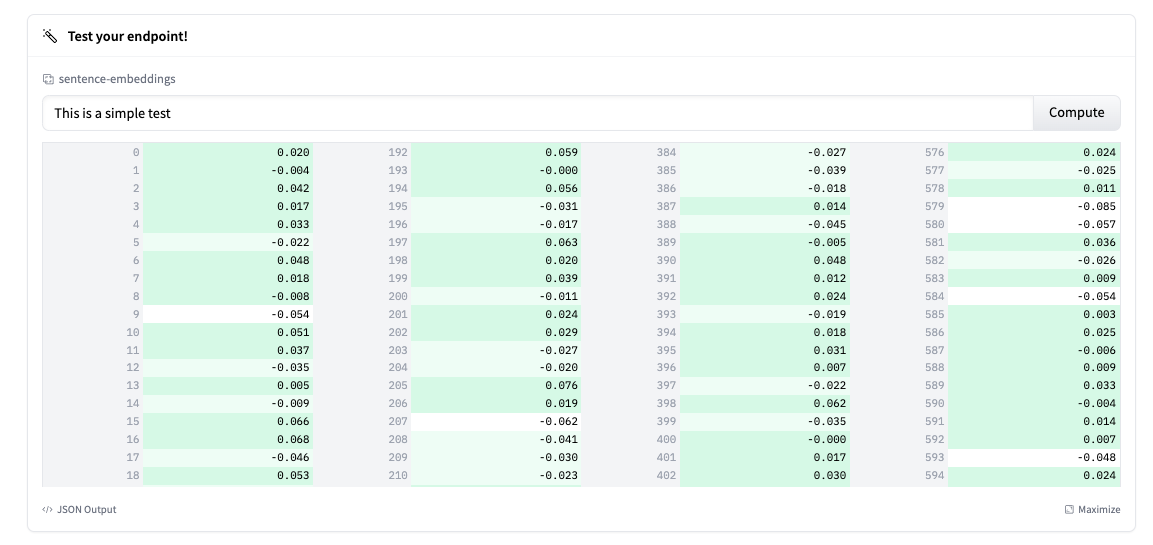

219aa29e-0f07-4209-9c1e-c425748fb4dd | completed | 2025-01-16T03:09:27.174882 | 2025-01-16T03:22:02.340539 | 98bd0dbe-d713-4aed-90c0-5275c461db75 | Results of the Open Source AI Game Jam | ThomasSimonini, dylanebert, osanseviero | game-jam-first-edition-results.md | From July 7th to July 11th, **we hosted our [first Open Source AI Game Jam](https://itch.io/jam/open-source-ai-game-jam)**, an exciting event that challenged game developers to create innovative games within a tight 48-hour window using AI.

The primary objective was **to create games that incorporate at least one Open Source AI Tool**. Although proprietary AI tools were allowed, we encouraged participants to integrate open-source tools into their game or workflow.

The response to our initiative was beyond our expectations, with over 1300 signups and **the submission of 88 amazing games**.

**You can try them here** 👉 https://itch.io/jam/open-source-ai-game-jam/entries

<iframe width="560" height="315" src="https://www.youtube.com/embed/UG9-gOAs2-4" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture; web-share" allowfullscreen></iframe>

## The Theme: Expanding

To inspire creativity, **we decided on the theme of "EXPANDING."** We left it open to interpretation, allowing developers to explore and experiment with their ideas, leading to a diverse range of games.

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/game-jam-first-edition-results/theme.jpeg" alt="Game Jam Theme"/>

The games were evaluated by their peers and contributors based on three key criteria: **fun, creativity, and adherence to the theme**.

The top 10 games were then presented to three judges ([Dylan Ebert](https://twitter.com/dylan_ebert_), [Thomas Simonini](https://twitter.com/ThomasSimonini) and [Omar Sanseviero](https://twitter.com/osanseviero)), **who selected the best game**.

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/game-jam-first-edition-results/jury.jpg" alt="Game Jam Judges"/>

## The Winner 🏆🥇

After careful deliberation, the judges **crowned one outstanding game as the Winner of the Open Source AI Game Jam**.

It's [Snip It](https://ohmlet.itch.io/snip-it) by [ohmlet](https://itch.io/profile/ohmlet) 👏👏👏.

Code: Ruben Gres

AI assets: Philippe Saade

Music / SFX: Matthieu Deloffre

In this AI-generated game, you visit a museum where the paintings come to life. **Snip the objects in the paintings to uncover their hidden secrets**.

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/game-jam-first-edition-results/snipit.jpg" alt="Snip it"/>

You can play it here 👉 https://ohmlet.itch.io/snip-it

## Participants Selection: Top 10 🥈🥉🏅

Out of the 88 fantastic submissions, these impressive games emerged as the Top 11 finalists.

### #1: Snip It

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/game-jam-first-edition-results/snipit2.jpg" alt="Snip it"/>

In addition to being the winner of the Game Jam, Snip It was also the participants' top pick.

🤖 Open Source Model Used: Stable Diffusion to generate the assets.

🎮👉 https://ohmlet.itch.io/snip-it

### #2: Yabbit Attack

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/game-jam-first-edition-results/yabbit.jpg" alt="Yabbit Attack"/>

In Yabbit Attack, your goal is to **beat the constantly adapting neural network behind the Yabbits**.

🤖 Used genetic algorithms in the context of natural selection and evolution.

🤖 Background visuals were generated using Stable Diffusion.

🎮👉 https://visionistx.itch.io/yabbit-attack

### #3: Fish Dang Bot Rolling Land

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/game-jam-first-edition-results/fish.jpg" alt="Fish Dang Bot Rolling Land"/>

In this game, you take control of a fish-shaped robot named Fein, who is abandoned in a garbage dump with mechanical legs. Unexpectedly, it develops self-awareness, and upon awakening, it sees a dung beetle pushing a dung ball. Naturally, Fein assumes himself to be a dung beetle and harbours a dream of pushing the largest dung ball. With this dream in mind, it decides to embark on its own adventure.

🤖 Used a text-to-speech model to generate the voices.

🎮👉 https://zeenaz.itch.io/fish-dang-rolling-laud

### #4: Everchanging Quest

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/game-jam-first-edition-results/everchanging.jpg" alt="Everchanging Quest"/>

In this game, you are the village's last hope. Arm yourself before embarking on your adventure, and don't hesitate to ask the locals for guidance. The world beyond the portal will never be the same, so be prepared. Defeat your enemies to collect points and find your way to the end.

🤖 Used GPT-4 to place the tiles and objects (proprietary) but also Starcoder to code (open source).

🎮👉 https://jofthomas.itch.io/everchanging-quest

### #5: Word Conquest

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/game-jam-first-edition-results/word.gif" alt="Word"/>

In this game, you need to write as many unrelated words as you can to conquer the map. The more unrelated the words, the farther you expand and the higher your score.

🤖 Used embeddings from all-MiniLM-L6-v2 model and GloVe to generate the map.

🎮👉 https://danielquelali.itch.io/wordconquest

### #6: Expanding Universe

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/game-jam-first-edition-results/universe.jpg" alt="Universe"/>

In this sandbox gravity game, you create an expanding universe and try to complete the challenges.

🤖 Used the Dream Textures Blender add-on (Stable Diffusion) to create textures for all of the planets and stars, and an LLM to generate descriptions of the stars and planets.

🎮👉 https://carsonkatri.itch.io/expanding-universe

### #7: Hexagon Tactics: The Expanding Arena

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/game-jam-first-edition-results/hexagon.gif" alt="Hexagon"/>

In this game, you are dropped into an arena battle. Defeat your opponents, then upgrade your deck and the arena expands.

🤖 Stable Diffusion 1.5 to generate your own character (executable version of the game).

🎮👉 https://dgeisert.itch.io/hextactics

### #8: Galactic Domination

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/game-jam-first-edition-results/galactic.gif" alt="Galactic"/>

In this game, you embark on an interstellar journey as a spaceship captain, pitted against formidable spaceships in a battle for dominance. Your goal is to be the first to construct a powerful space station that will expand your influence and secure your supremacy in the vast expanse of the cosmos. As you navigate the treacherous battlefield, you must gather essential resources to fuel the growth of your space station. It's a construction race!

🤖 Unity ML-Agents (bot-AI works with reinforcement learning)

🤖 Charmed - Texture Generator

🤖 Soundful - Music generator

🤖 Elevenlabs - Voice generator

🤖 Scenario - Image generator

🎮👉 https://blastergames.itch.io/galactic-domination

### #9: Apocalypse Expansion

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/game-jam-first-edition-results/appocalypse.jpg" alt="Apocalypse"/>

In this game, you'll step into the decaying shoes of a zombie, driven by an insatiable hunger for human flesh. Your objective? To build the largest horde of zombies ever seen, while evading the relentless pursuit of the determined police force.

🤖 Used Stable Diffusion to generate the images

🤖 Used MusicGen (melody 1.5B) for the music

🎮👉 https://mad25.itch.io/apocalypse-expansion

### #10: Galactic Bride: Bullet Ballet

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/game-jam-first-edition-results/bride.jpg" alt="Bride"/>

In this game, you dive into an exhilarating bullet-hell journey to become the Star Prince's bride and fulfill your wishes.

🎮👉 https://n30hrtgdv.itch.io/galactic-bride-bullet-ballet

### #10: Singularity

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/game-jam-first-edition-results/singularity.gif" alt="Singularity"/>

This demo is a conceptual demonstration of how experiences and games could be generated in the near future.

🤖 Used Stable Diffusion

🎮👉 https://ilumine-ai.itch.io/dreamlike-hugging-face-open-source-ai-game-jam

In addition to this top 10, don't hesitate to check the other amazing games (Ghost In Smoke, Outopolis, Dungeons and Decoders...). You **can find the whole list here** 👉 https://itch.io/jam/open-source-ai-game-jam/entries | [

[

"community",

"multi_modal"

]

] | [

"2629e041-8c70-4026-8651-8bb91fd9749a"

] | [

"submitted"

] | [

"community",

"tools",

"implementation",

"multi_modal"

] | null | null |

39e3b685-4211-44bf-a8d5-b58f7b776f69 | completed | 2025-01-16T03:09:27.174893 | 2025-01-16T03:14:54.397264 | f78b43d8-74a5-4503-8bf3-a9dddf2caab0 | Hugging Face and AWS partner to make AI more accessible | jeffboudier, philschmid, juliensimon | aws-partnership.md | It’s time to make AI open and accessible to all. That’s the goal of this expanded long-term strategic partnership between Hugging Face and Amazon Web Services (AWS). Together, the two leaders aim to accelerate the availability of next-generation machine learning models by making them more accessible to the machine learning community and helping developers achieve the highest performance at the lowest cost.

## A new generation of open, accessible AI

Machine learning is quickly becoming embedded in all applications. As its impact on every sector of the economy comes into focus, it’s more important than ever to ensure every developer can access and assess the latest models. The partnership with AWS paves the way toward this future by making it faster and easier to build, train, and deploy the latest machine learning models in the cloud using purpose-built tools.

There have been significant advances in new Transformer and Diffuser machine learning models that process and generate text, audio, and images. However, most of these popular generative AI models are not publicly available, widening the gap of machine learning capabilities between the largest tech companies and everyone else. To counter this trend, AWS and Hugging Face are partnering to contribute next-generation models to the global AI community and democratize machine learning. Through the strategic partnership, Hugging Face will leverage AWS as a preferred cloud provider so developers in Hugging Face’s community can access AWS’s state-of-the-art tools (e.g., [Amazon SageMaker](https://aws.amazon.com/sagemaker), [AWS Trainium](https://aws.amazon.com/machine-learning/trainium/), [AWS Inferentia](https://aws.amazon.com/machine-learning/inferentia/)) to train, fine-tune, and deploy models on AWS. This will allow developers to further optimize the performance of their models for their specific use cases while lowering costs. Hugging Face will apply the latest in innovative research findings using Amazon SageMaker to build next-generation AI models. Together, Hugging Face and AWS are bridging the gap so the global AI community can benefit from the latest advancements in machine learning to accelerate the creation of generative AI applications.

“The future of AI is here, but it’s not evenly distributed,” said Clement Delangue, CEO of Hugging Face. “Accessibility and transparency are the keys to sharing progress and creating tools to use these new capabilities wisely and responsibly. Amazon SageMaker and AWS-designed chips will enable our team and the larger machine learning community to convert the latest research into openly reproducible models that anyone can build on.”

## Collaborating to scale AI in the cloud

This expanded strategic partnership enables Hugging Face and AWS to accelerate machine learning adoption using the latest models hosted on Hugging Face with the industry-leading capabilities of Amazon SageMaker. Customers can now easily fine-tune and deploy state-of-the-art Hugging Face models in just a few clicks on Amazon SageMaker and Amazon Elastic Compute Cloud (EC2), taking advantage of purpose-built machine learning accelerators including AWS Trainium and AWS Inferentia.

“Generative AI has the potential to transform entire industries, but its cost and the required expertise puts the technology out of reach for all but a select few companies,” said Adam Selipsky, CEO of AWS. “Hugging Face and AWS are making it easier for customers to access popular machine learning models to create their own generative AI applications with the highest performance and lowest costs. This partnership demonstrates how generative AI companies and AWS can work together to put this innovative technology into the hands of more customers.”

Hugging Face has become the central hub for machine learning, with more than [100,000 free and accessible machine learning models](https://huggingface.co/models) downloaded more than 1 million times daily by researchers, data scientists, and machine learning engineers. AWS is by far the most popular place to run models from the Hugging Face Hub. Since the [start of our collaboration](https://huggingface.co/blog/the-partnership-amazon-sagemaker-and-hugging-face), [Hugging Face on Amazon SageMaker](https://aws.amazon.com/machine-learning/hugging-face/) has grown exponentially. We are experiencing an exciting renaissance with generative AI, and we're just getting started. We look forward to what the future holds for Hugging Face, AWS, and the AI community. | [

[

"llm",

"transformers",

"mlops",

"deployment",

"tools"

]

] | [

"2629e041-8c70-4026-8651-8bb91fd9749a"

] | [

"submitted"

] | [

"llm",

"transformers",

"mlops",

"tools"

] | null | null |

511d7550-00a4-49ce-8c00-f842bb2453ae | completed | 2025-01-16T03:09:27.174899 | 2025-01-19T19:15:19.464361 | 55e7b2a5-9bce-412f-aebf-52bd3490b0e8 | Introducing the Open Arabic LLM Leaderboard | alielfilali01, Hamza-Alobeidli, rcojocaru, Basma-b, clefourrier | leaderboard-arabic.md | The Open Arabic LLM Leaderboard (OALL) is designed to address the growing need for specialized benchmarks in the Arabic language processing domain. As the field of Natural Language Processing (NLP) progresses, the focus often remains heavily skewed towards English, leaving a significant gap in resources for other languages. The OALL aims to balance this by providing a platform specifically for evaluating and comparing the performance of Arabic Large Language Models (LLMs), thus promoting research and development in Arabic NLP.

<script type="module" src="https://gradio.s3-us-west-2.amazonaws.com/4.4.0/gradio.js"> </script>

<gradio-app theme_mode="light" space="OALL/Open-Arabic-LLM-Leaderboard"></gradio-app>

This initiative is particularly significant given that it directly serves over 380 million Arabic speakers worldwide. By enhancing the ability to accurately evaluate and improve Arabic LLMs, we hope the OALL will play a crucial role in developing models and applications that are finely tuned to the nuances of the Arabic language, culture and heritage.

## Benchmarks, Metrics & Technical setup

### Benchmark Datasets

The Open Arabic LLM Leaderboard (OALL) utilizes an extensive and diverse collection of robust datasets to ensure comprehensive model evaluation.

- [AlGhafa benchmark](https://aclanthology.org/2023.arabicnlp-1.21): created by the TII LLM team with the goal of evaluating models on a range of abilities including reading comprehension, sentiment analysis, and question answering. It was initially introduced with 11 native Arabic datasets and was later extended to include an additional 11 datasets that are translations of other widely adopted benchmarks within the English NLP community.

- ACVA and AceGPT benchmarks: feature 58 datasets from the paper ["AceGPT, Localizing Large Language Models in Arabic"](https://arxiv.org/abs/2309.12053), and translated versions of the MMLU and EXAMS benchmarks to broaden the evaluation spectrum and cover a comprehensive range of linguistic tasks. These benchmarks are meticulously curated and feature various subsets that precisely capture the complexities and subtleties of the Arabic language.

### Evaluation Metrics

Given the nature of the tasks, which include multiple-choice and yes/no questions, the leaderboard primarily uses normalized log likelihood accuracy for all tasks. This metric was chosen for its ability to provide a clear and fair measurement of model performance across different types of questions.
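As a rough illustration of this metric, the sketch below scores each answer choice by its summed log-likelihood divided by its character length, picks the highest-scoring choice, and reports the fraction of questions answered correctly. The function names, the choice of character-length normalization, and the toy log-probabilities are assumptions made for illustration; this is not the leaderboard's exact implementation in `lighteval`.

```python
from typing import Sequence


def pick_choice(logprobs: Sequence[float], choices: Sequence[str]) -> int:
    """Return the index of the choice with the highest length-normalized log-likelihood."""
    # Assumption: normalize by character length so longer answers are not penalized
    normalized = [lp / max(len(c), 1) for lp, c in zip(logprobs, choices)]
    return max(range(len(choices)), key=lambda i: normalized[i])


def normalized_accuracy(samples: Sequence[dict]) -> float:
    """Fraction of samples whose predicted choice matches the gold index."""
    correct = sum(
        pick_choice(s["logprobs"], s["choices"]) == s["gold"] for s in samples
    )
    return correct / len(samples)


# Toy data: summed log-probabilities a model might assign to each choice (made up)
samples = [
    {"choices": ["نعم", "لا"], "logprobs": [-2.1, -2.0], "gold": 0},
    {"choices": ["Paris", "The city of Cairo"], "logprobs": [-4.0, -9.0], "gold": 1},
]
print(normalized_accuracy(samples))
```

In both toy questions the raw log-likelihood favors the shorter choice, while the normalized score favors the gold answer, which is the effect the normalization is meant to have.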

### Technical setup

The technical setup for the Open Arabic LLM Leaderboard (OALL) uses:

- front- and back-ends inspired by the [`demo-leaderboard`](https://huggingface.co/demo-leaderboard-backend), with the back-end running locally on the TII cluster

- the `lighteval` library to run the evaluations. Significant contributions have been made to integrate the Arabic benchmarks discussed above into `lighteval`, to support out-of-the-box evaluations of Arabic models for the community (see [PR #44](https://github.com/huggingface/lighteval/pull/44) and [PR #95](https://github.com/huggingface/lighteval/pull/95) on GitHub for more details).

## Future Directions

We have many ideas about expanding the scope of the Open Arabic LLM Leaderboard. Plans are in place to introduce additional leaderboards under various categories, such as one for evaluating Arabic LLMs in Retrieval Augmented Generation (RAG) scenarios and another as a chatbot arena that calculates the Elo scores of different Arabic chatbots based on user preferences.
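For readers unfamiliar with Elo ratings, here is a minimal sketch of the standard Elo update applied to one pairwise user preference. The K-factor of 32, the starting rating of 1000, and the model names are assumptions for illustration; the planned arena may use a different rating scheme.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A is preferred over B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_elo(rating_a: float, rating_b: float, score_a: float, k: float = 32.0):
    """Return updated (rating_a, rating_b) after one comparison.

    score_a is 1.0 if users preferred A, 0.0 if they preferred B, 0.5 for a tie.
    """
    exp_a = expected_score(rating_a, rating_b)
    delta = k * (score_a - exp_a)
    # Elo is zero-sum: whatever A gains, B loses
    return rating_a + delta, rating_b - delta


# Two hypothetical chatbots start at the same rating; a user prefers model_a
ratings = {"model_a": 1000.0, "model_b": 1000.0}
ratings["model_a"], ratings["model_b"] = update_elo(
    ratings["model_a"], ratings["model_b"], score_a=1.0
)
print(ratings)
```

Because the update is zero-sum, the sum of the two ratings stays constant, and an upset win against a higher-rated model moves the ratings more than an expected win does.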

Furthermore, we aim to extend our benchmarks to cover more comprehensive tasks by developing the OpenDolphin benchmark, which will include about 50 datasets and will be an open replication of the work done by Nagoudi et al. in the paper titled [“Dolphin: A Challenging and Diverse Benchmark for Arabic NLG”](https://arxiv.org/abs/2305.14989). For those interested in adding their benchmarks or collaborating on the OpenDolphin project, please contact us through the discussion tab or at this [email address](mailto:[email protected]).

We’d love to welcome your contributions on these points! We encourage the community to contribute by submitting models, suggesting new benchmarks, or participating in discussions. We also encourage you to build on the top models of the current leaderboard, through fine-tuning or any other technique, to create new models that climb the ranks to first place. You can be the next Arabic Open Models Hero!

We hope the OALL will encourage technological advancements and highlight the unique linguistic and cultural characteristics inherent to the Arabic language, and that our technical setup and learnings from deploying a large-scale, language-specific leaderboard can be helpful for similar initiatives in other underrepresented languages. This focus will help bridge the gap in resources and research, traditionally dominated by English-centric models, enriching the global NLP landscape with more diverse and inclusive tools, which is crucial as AI technologies become increasingly integrated into everyday life around the world.

## Submit Your Model!

### Model Submission Process

To ensure a smooth evaluation process, participants must adhere to specific guidelines when submitting models to the Open Arabic LLM Leaderboard:

1. **Ensure Model Precision Alignment:** It is critical that the precision of the submitted models aligns with that of the original models. Discrepancies in precision may result in the model being evaluated but not properly displayed on the leaderboard.

2. **Pre-Submission Checks:**

- **Load Model and Tokenizer:** Confirm that your model and tokenizer can be successfully loaded using AutoClasses. Use the following commands:

```python

from transformers import AutoConfig, AutoModel, AutoTokenizer

# Branch, tag, or commit hash of the model repository to check
revision = "main"

config = AutoConfig.from_pretrained("your model name", revision=revision)
model = AutoModel.from_pretrained("your model name", revision=revision)
tokenizer = AutoTokenizer.from_pretrained("your model name", revision=revision)

```

If you encounter errors, address them by following the error messages to ensure your model has been correctly uploaded.

- **Model Visibility:** Ensure that your model is set to public visibility. Note that models requiring `trust_remote_code=True` are not currently supported; support is under development.

3. **Convert Model Weights to Safetensors:**

- Convert your model weights to safetensors, a safer and faster format for loading and using weights. This conversion also enables the inclusion of the model's parameter count in the `Extended Viewer`.

4. **License and Model Card:**

- **Open License:** Verify that your model is openly licensed. This leaderboard promotes the accessibility of open LLMs to ensure widespread usability.

- **Complete Model Card:** Populate your model card with detailed information. This data will be automatically extracted and displayed alongside your model on the leaderboard.
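The safetensors conversion in step 3 can be sketched as follows. This is one possible approach, assuming the model loads with `transformers`: the `save_pretrained` method accepts `safe_serialization=True` to write weights in safetensors format. The helper name `save_as_safetensors` and the output directory are hypothetical.

```python
from pathlib import Path


def save_as_safetensors(model, output_dir: str) -> Path:
    """Save a loaded transformers model with its weights in safetensors format."""
    out = Path(output_dir)
    # `safe_serialization=True` asks `save_pretrained` to write *.safetensors files
    model.save_pretrained(out, safe_serialization=True)
    return out


# Usage (requires `transformers` and network access; the model name is a placeholder):
# from transformers import AutoModel
# model = AutoModel.from_pretrained("your model name", revision="main")
# save_as_safetensors(model, "converted-model")
```

The converted files would then need to be uploaded to the model repository before submitting.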

### In Case of Model Failure

If your model appears in the 'FAILED' category, this indicates that execution was halted. Review the steps outlined above to troubleshoot and resolve any issues. Additionally, test the following [script](https://gist.github.com/alielfilali01/d486cfc962dca3ed4091b7c562a4377f) on your model locally to confirm its functionality before resubmitting.

## Acknowledgements

We extend our gratitude to all contributors, partners, and sponsors, particularly the Technology Innovation Institute and Hugging Face for their substantial support in this project. TII has generously provided the essential computational resources, in line with their commitment to supporting community-driven projects and advancing open science within the Arabic NLP field, whereas Hugging Face has assisted with the integration and customization of their new evaluation framework and leaderboard template.

We would also like to express our thanks to Upstage for their work on the Open Ko-LLM Leaderboard, which served as a valuable reference and source of inspiration for our own efforts. Their pioneering contributions have been instrumental in guiding our approach to developing a comprehensive and inclusive Arabic LLM leaderboard.

## Citations and References

```

@misc{OALL,

author = {Elfilali, Ali and Alobeidli, Hamza and Fourrier, Clémentine and Boussaha, Basma El Amel and Cojocaru, Ruxandra and Habib, Nathan and Hacid, Hakim},

title = {Open Arabic LLM Leaderboard},

year = {2024},

publisher = {OALL},

howpublished = "\url{https://huggingface.co/spaces/OALL/Open-Arabic-LLM-Leaderboard}"

}

@inproceedings{almazrouei-etal-2023-alghafa,

title = "{A}l{G}hafa Evaluation Benchmark for {A}rabic Language Models",

author = "Almazrouei, Ebtesam and

Cojocaru, Ruxandra and

Baldo, Michele and

Malartic, Quentin and

Alobeidli, Hamza and

Mazzotta, Daniele and

Penedo, Guilherme and

Campesan, Giulia and

Farooq, Mugariya and

Alhammadi, Maitha and

Launay, Julien and

Noune, Badreddine",

editor = "Sawaf, Hassan and

El-Beltagy, Samhaa and

Zaghouani, Wajdi and

Magdy, Walid and

Abdelali, Ahmed and

Tomeh, Nadi and

Abu Farha, Ibrahim and

Habash, Nizar and

Khalifa, Salam and

Keleg, Amr and

Haddad, Hatem and

Zitouni, Imed and

Mrini, Khalil and

Almatham, Rawan",

booktitle = "Proceedings of ArabicNLP 2023",

month = dec,

year = "2023",

address = "Singapore (Hybrid)",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2023.arabicnlp-1.21",

doi = "10.18653/v1/2023.arabicnlp-1.21",

pages = "244--275",

abstract = "Recent advances in the space of Arabic large language models have opened up a wealth of potential practical applications. From optimal training strategies, large scale data acquisition and continuously increasing NLP resources, the Arabic LLM landscape has improved in a very short span of time, despite being plagued by training data scarcity and limited evaluation resources compared to English. In line with contributing towards this ever-growing field, we introduce AlGhafa, a new multiple-choice evaluation benchmark for Arabic LLMs. For showcasing purposes, we train a new suite of models, including a 14 billion parameter model, the largest monolingual Arabic decoder-only model to date. We use a collection of publicly available datasets, as well as a newly introduced HandMade dataset consisting of 8 billion tokens. Finally, we explore the quantitative and qualitative toxicity of several Arabic models, comparing our models to existing public Arabic LLMs.",

}

@misc{huang2023acegpt,

title={AceGPT, Localizing Large Language Models in Arabic},

author={Huang Huang and Fei Yu and Jianqing Zhu and Xuening Sun and Hao Cheng and Dingjie Song and Zhihong Chen and Abdulmohsen Alharthi and Bang An and Ziche Liu and Zhiyi Zhang and Junying Chen and Jianquan Li and Benyou Wang and Lian Zhang and Ruoyu Sun and Xiang Wan and Haizhou Li and Jinchao Xu},

year={2023},

eprint={2309.12053},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

@misc{lighteval,

author = {Fourrier, Clémentine and Habib, Nathan and Wolf, Thomas and Tunstall, Lewis},

title = {LightEval: A lightweight framework for LLM evaluation},

year = {2023},

version = {0.3.0},

url = {https://github.com/huggingface/lighteval}

}

``` | [

[

"llm",

"research",

"benchmarks",

"community"

]

] | [

"2629e041-8c70-4026-8651-8bb91fd9749a"

] | [

"submitted"

] | [

"llm",

"benchmarks",

"research",

"community"

] | null | null |

c109fc69-710a-411d-b8f1-1012538a77e0 | completed | 2025-01-16T03:09:27.174904 | 2025-01-16T03:20:16.163861 | 63d0803a-fac2-43fd-961b-1ff3ad120d78 | Happy 1st anniversary 🤗 Diffusers! | stevhliu, sayakpaul, pcuenq | diffusers-turns-1.md | 🤗 Diffusers is happy to celebrate its first anniversary! It has been an exciting year, and we're proud and grateful for how far we've come thanks to our community and open-source contributors. Last year, text-to-image models like DALL-E 2, Imagen, and Stable Diffusion captured the world's attention with their ability to generate stunningly photorealistic images from text, sparking a massive surge of interest and development in generative AI. But access to these powerful models was limited.

At Hugging Face, our mission is to democratize good machine learning by collaborating and helping each other build an open and ethical AI future together. Our mission motivated us to create the 🤗 Diffusers library so *everyone* can experiment, research, or simply play with text-to-image models. That’s why we designed the library as a modular toolbox, so you can customize a diffusion model’s components or just start using it out-of-the-box.

As 🤗 Diffusers turns 1, here’s an overview of some of the most notable features we’ve added to the library with the help of our community. We are proud and immensely grateful for being part of an engaged community that promotes accessible usage, pushes diffusion models beyond just text-to-image generation, and is an all-around inspiration.

**Table of Contents**

* [Striving for photorealism](#striving-for-photorealism)

* [Video pipelines](#video-pipelines)

* [Text-to-3D models](#text-to-3d-models)

* [Image editing pipelines](#image-editing-pipelines)

* [Faster diffusion models](#faster-diffusion-models)

* [Ethics and safety](#ethics-and-safety)

* [Support for LoRA](#support-for-lora)

* [Torch 2.0 optimizations](#torch-20-optimizations)

* [Community highlights](#community-highlights)

* [Building products with 🤗 Diffusers](#building-products-with-🤗-diffusers)

* [Looking forward](#looking-forward)

## Striving for photorealism

Generative AI models are known for creating photorealistic images, but if you look closely, you may notice details that don't look right, like extra fingers on a hand. This year, the DeepFloyd IF and Stability AI SDXL models made a splash by improving the quality of generated images to be even more photorealistic.

[DeepFloyd IF](https://stability.ai/blog/deepfloyd-if-text-to-image-model) - A modular diffusion model that includes different processes for generating an image (for example, an image is upscaled 3x to produce a higher resolution image). Unlike Stable Diffusion, the IF model works directly on the pixel level, and it uses a large language model to encode text.

[Stable Diffusion XL (SDXL)](https://stability.ai/blog/sdxl-09-stable-diffusion) - The latest Stable Diffusion model from Stability AI, with significantly more parameters than its predecessor Stable Diffusion 2. It generates hyper-realistic images, leveraging a base model for close adherence to the prompt, and a refiner model specialized in the fine details and high-frequency content.

Head over to the DeepFloyd IF [docs](https://huggingface.co/docs/diffusers/v0.18.2/en/api/pipelines/if#texttoimage-generation) and the SDXL [docs](https://huggingface.co/docs/diffusers/v0.18.2/en/api/pipelines/stable_diffusion/stable_diffusion_xl) today to learn how to start generating your own images!

## Video pipelines

Text-to-image pipelines are cool, but text-to-video is even cooler! We currently support two text-to-video pipelines, [VideoFusion](https://huggingface.co/docs/diffusers/main/en/api/pipelines/text_to_video) and [Text2Video-Zero](https://huggingface.co/docs/diffusers/main/en/api/pipelines/text_to_video_zero).

If you’re already familiar with text-to-image pipelines, using a text-to-video pipeline is very similar:

```py

import torch

from diffusers import DiffusionPipeline

from diffusers.utils import export_to_video

pipe = DiffusionPipeline.from_pretrained("cerspense/zeroscope_v2_576w", torch_dtype=torch.float16)

pipe.enable_model_cpu_offload()

prompt = "Darth Vader surfing a wave"

video_frames = pipe(prompt, num_frames=24).frames

video_path = export_to_video(video_frames)

```

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/darthvader_cerpense.gif" alt="Generated video of Darth Vader surfing."/>

</div>

We expect text-to-video to go through a revolution during 🤗 Diffusers' second year, and we are excited to see what the community builds on top of these pipelines to push the boundaries of video generation from language!

## Text-to-3D models

In addition to text-to-video, we also have text-to-3D generation now thanks to OpenAI’s [Shap-E](https://hf.co/papers/2305.02463) model. Shap-E is trained by encoding a large dataset of 3D-text pairs, and a diffusion model is conditioned on the encoder’s outputs. You can design 3D assets for video games, interior design, and architecture.

Try it out today with the [`ShapEPipeline`](https://huggingface.co/docs/diffusers/main/en/api/pipelines/shap_e#diffusers.ShapEPipeline) and [`ShapEImg2ImgPipeline`](https://huggingface.co/docs/diffusers/main/en/api/pipelines/shap_e#diffusers.ShapEImg2ImgPipeline).

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/hf-internal-testing/diffusers-images/resolve/main/shap_e/cake_out.gif" alt="3D render of a birthday cupcake generated using SHAP-E."/>

</div>

## Image editing pipelines

Image editing is one of the most practical use cases in fashion, material design, and photography. With diffusion models, the possibilities of image editing continue to expand.

We have many [pipelines](https://huggingface.co/docs/diffusers/main/en/using-diffusers/controlling_generation) in 🤗 Diffusers to support image editing. There are pipelines that let you describe your desired edit as a prompt, pipelines that remove concepts from an image, and even a pipeline that unifies multiple generation methods to create high-quality images like panoramas. With 🤗 Diffusers, you can experiment with the future of photo editing now!

## Faster diffusion models

Diffusion models are known to be time-intensive because of their iterative steps. With OpenAI’s [Consistency Models](https://huggingface.co/papers/2303.01469), the image generation process is significantly faster. Generating a single 256x256 resolution image only takes 3/4 of a second on a modern CPU! You can try this out in 🤗 Diffusers with the [`ConsistencyModelPipeline`](https://huggingface.co/docs/diffusers/main/en/api/pipelines/consistency_models).

On top of speedier diffusion models, we also offer many optimization techniques for faster inference like [PyTorch 2.0’s `scaled_dot_product_attention()` (SDPA) and `torch.compile()`](https://pytorch.org/blog/accelerated-diffusers-pt-20), sliced attention, feed-forward chunking, VAE tiling, CPU and model offloading, and more. These optimizations save memory, which translates to faster generation, and allow you to run inference on consumer GPUs. When you distribute a model with 🤗 Diffusers, all of these optimizations are immediately supported!

In addition to that, we also support specific hardware and formats like ONNX, the `mps` PyTorch device for Apple Silicon computers, Core ML, and others.

To learn more about how we optimize inference with 🤗 Diffusers, check out the [docs](https://huggingface.co/docs/diffusers/optimization/opt_overview)!
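To make one of the optimizations listed above more concrete, here is a minimal, library-free sketch of the idea behind sliced attention. It is an illustration under stated assumptions, not the 🤗 Diffusers implementation: the helper names (`attention`, `sliced_attention`) are hypothetical, and the sketch slices along query rows for simplicity, whereas the library may slice along a different dimension. The point it shows is that computing attention in chunks gives the same result while keeping only a small part of the score matrix in memory at a time.

```python
import math


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Plain scaled dot-product attention; builds the full score matrix at once."""
    scale = 1.0 / math.sqrt(len(keys[0]))
    scores = [[scale * sum(q_i * k_i for q_i, k_i in zip(q, k)) for k in keys] for q in queries]
    weights = [softmax(row) for row in scores]
    width = len(values[0])
    return [[sum(w * v[j] for w, v in zip(row, values)) for j in range(width)] for row in weights]


def sliced_attention(queries, keys, values, slice_size):
    """Same result, but only `slice_size` rows of scores exist at any time."""
    output = []
    for start in range(0, len(queries), slice_size):
        output.extend(attention(queries[start:start + slice_size], keys, values))
    return output


# Toy inputs (hypothetical): 4 tokens with 2-dimensional queries, keys and values.
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
K = [[1.0, 0.5], [0.0, 1.0], [-1.0, 0.0], [0.5, 0.5]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]

full = attention(Q, K, V)
sliced = sliced_attention(Q, K, V, slice_size=2)
# Compare the two results element by element.
print(all(abs(a - b) < 1e-12 for r1, r2 in zip(full, sliced) for a, b in zip(r1, r2)))
```

The trade-off is the usual one: smaller slices lower peak memory but add more sequential steps, so the best slice size depends on the hardware.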

## Ethics and safety

Generative models are cool, but they also have the ability to produce harmful and NSFW content. To help users interact with these models responsibly and ethically, we’ve added a [`safety_checker`](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/stable_diffusion/safety_checker.py) component that flags inappropriate content generated during inference. Model creators can choose to incorporate this component into their models if they want.

In addition, generative models can also be used to produce disinformation. Earlier this year, the [Balenciaga Pope](https://www.theverge.com/2023/3/27/23657927/ai-pope-image-fake-midjourney-computer-generated-aesthetic) went viral for how realistic the image was despite it being fake. This underscores the importance and need for a mechanism to distinguish between generated and human content. That’s why we’ve added an invisible watermark for images generated by the SDXL model, which helps users be better informed.

The development of these features is guided by our [ethical charter](https://huggingface.co/docs/diffusers/main/en/conceptual/ethical_guidelines), which you can find in our documentation.

## Support for LoRA

Fine-tuning diffusion models is expensive and out of reach for most consumer GPUs. We added the Low-Rank Adaptation ([LoRA](https://huggingface.co/papers/2106.09685)) technique to close this gap. With LoRA, which is a method for parameter-efficient fine-tuning, you can fine-tune large diffusion models faster and consume less memory. The resulting model weights are also very lightweight compared to the original model, so you can easily share your custom models. If you want to learn more, [our documentation](https://huggingface.co/docs/diffusers/main/en/training/lora) shows how to perform fine-tuning and inference on Stable Diffusion with LoRA.

In addition to LoRA, we support other [training techniques](https://huggingface.co/docs/diffusers/main/en/training/overview) for personalized generation, including DreamBooth, textual inversion, custom diffusion, and more!
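To see why LoRA weights are so lightweight, the sketch below counts trainable parameters and applies a low-rank update to a tiny layer. It is a toy illustration in plain Python, not the 🤗 Diffusers training code: the function names, the layer size of 768, and the rank of 4 are all assumptions chosen for the example. The frozen weight `W` has shape `d_out x d_in`, while the trainable factors `A` (`rank x d_in`) and `B` (`d_out x rank`) are much smaller.

```python
def lora_param_counts(d_out, d_in, rank):
    """Return (full fine-tuning params, LoRA params) for one linear layer."""
    full = d_out * d_in
    lora = rank * (d_out + d_in)
    return full, lora


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, scale=1.0):
    """Compute W x + scale * B (A x); only A and B would be trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + scale * u for b, u in zip(base, update)]


# Hypothetical layer size and rank, chosen only for illustration.
full, lora = lora_param_counts(d_out=768, d_in=768, rank=4)
print(f"full: {full}, LoRA: {lora}, reduction: {full / lora:.0f}x")

# Tiny forward pass: with B set to zero the layer behaves like the frozen base layer.
W = [[1.0, 2.0], [3.0, 4.0]]
A = [[1.0, 1.0]]
x = [1.0, 1.0]
print(lora_forward(W, A, [[0.0], [0.0]], x))
print(lora_forward(W, A, [[1.0], [0.0]], x))
```

Because only `A` and `B` need to be stored and shared, a LoRA checkpoint stays small even when the base model is large.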

## Torch 2.0 optimizations

PyTorch 2.0 [introduced support](https://pytorch.org/get-started/pytorch-2.0/#pytorch-2x-faster-more-pythonic-and-as-dynamic-as-ever) for `torch.compile()` and `scaled_dot_product_attention()`, a more efficient implementation of the attention mechanism. 🤗 Diffusers [provides first-class support](https://huggingface.co/docs/diffusers/optimization/torch2.0) for these features, resulting in large reductions in inference latency: inference can sometimes be more than twice as fast!

In addition to visual content (images, videos, 3D assets, etc.), we also added support for audio! Check out [the documentation](https://huggingface.co/docs/diffusers/using-diffusers/audio) to learn more.

## Community highlights

One of the most gratifying experiences of the past year has been seeing how the community is incorporating 🤗 Diffusers into their projects. From adapting Low-rank adaptation (LoRA) for faster training of text-to-image models to building a state-of-the-art inpainting tool, here are a few of our favorite projects:

<div class="mx-auto max-w-screen-xl py-8">

<div class="mb-8 sm:break-inside-avoid">

<blockquote class="rounded-xl !mb-0 bg-gray-50 p-6 shadow dark:bg-gray-800">

<p class="leading-relaxed text-gray-700">We built Core ML Stable Diffusion to make it easier for developers to add state-of-the-art generative AI capabilities in their iOS, iPadOS and macOS apps with the highest efficiency on Apple Silicon. We built on top of 🤗 Diffusers instead of from scratch as 🤗 Diffusers consistently stays on top of a rapidly evolving field and promotes much needed interoperability of new and old ideas.</p>

</blockquote>

<div class="flex items-center gap-4">

<img src="https://avatars.githubusercontent.com/u/10639145?s=200&v=4" class="h-12 w-12 rounded-full object-cover" />

<div class="text-sm">

<p class="font-medium">Atila Orhon</p>

</div>

</div>

</div>

<div class="mb-8 sm:break-inside-avoid">

<blockquote class="rounded-xl !mb-0 bg-gray-50 p-6 shadow dark:bg-gray-800">

<p class="leading-relaxed text-gray-700">🤗 Diffusers has been absolutely developer-friendly for me to dive right into stable diffusion models. Main differentiating factor clearly being that 🤗 Diffusers implementation is often not some code from research lab, that are mostly focused on high velocity driven. While research codes are often poorly written and difficult to understand (lack of typing, assertions, inconsistent design patterns and conventions), 🤗 Diffusers was a breeze to use for me to hack my ideas within couple of hours. Without it, I would have needed to invest significantly more amount of time to start hacking. Well-written documentations and examples are extremely helpful as well.</p>

</blockquote>

<div class="flex items-center gap-4">

<img src="https://avatars.githubusercontent.com/u/35953539?s=48&v=4" class="h-12 w-12 rounded-full object-cover" />

<div class="text-sm">

<p class="font-medium">Simo</p>

</div>

</div>

</div>

<div class="mb-8 sm:break-inside-avoid">

<blockquote class="rounded-xl !mb-0 bg-gray-50 p-6 shadow dark:bg-gray-800">

<p class="leading-relaxed text-gray-700">BentoML is the unified framework for building, shipping, and scaling production-ready AI applications incorporating traditional ML, pre-trained AI models, Generative and Large Language Models. All Hugging Face Diffuser models and pipelines can be seamlessly integrated into BentoML applications, enabling the running of models on the most suitable hardware and independent scaling based on usage.</p>

</blockquote>

<div class="flex items-center gap-4">

<img src="https://avatars.githubusercontent.com/u/49176046?s=48&v=4" class="h-12 w-12 rounded-full object-cover" />

<div class="text-sm">

<p class="font-medium">BentoML</p>

</div>

</div>

</div>

<div class="mb-8 sm:break-inside-avoid">

<blockquote class="rounded-xl !mb-0 bg-gray-50 p-6 shadow dark:bg-gray-800">

<p class="leading-relaxed text-gray-700">Invoke AI is an open-source Generative AI tool built to empower professional creatives, from game designers and photographers to architects and product designers. Invoke recently launched their hosted offering at invoke.ai, allowing users to generate assets from any computer, powered by the latest research in open-source.</p>

</blockquote>

<div class="flex items-center gap-4">

<img src="https://avatars.githubusercontent.com/u/113954515?s=48&v=4" class="h-12 w-12 rounded-full object-cover" />

<div class="text-sm">

<p class="font-medium">InvokeAI</p>

</div>

</div>

</div>

<div class="mb-8 sm:break-inside-avoid">

<blockquote class="rounded-xl !mb-0 bg-gray-50 p-6 shadow dark:bg-gray-800">

<p class="leading-relaxed text-gray-700">TaskMatrix connects Large Language Model and a series of Visual Models to enable sending and receiving images during chatting.</p>

</blockquote>

<div class="flex items-center gap-4">

<img src="https://avatars.githubusercontent.com/u/6154722?s=48&v=4" class="h-12 w-12 rounded-full object-cover" />

<div class="text-sm">

<p class="font-medium">Chenfei Wu</p>

</div>

</div>

</div>

<div class="mb-8 sm:break-inside-avoid">

<blockquote class="rounded-xl !mb-0 bg-gray-50 p-6 shadow dark:bg-gray-800">

<p class="leading-relaxed text-gray-700">Lama Cleaner is a powerful image inpainting tool that uses Stable Diffusion technology to remove unwanted objects, defects, or people from your pictures. It can also erase and replace anything in your images with ease.</p>

</blockquote>

<div class="flex items-center gap-4">

<img src="https://github.com/Sanster/lama-cleaner/raw/main/assets/logo.png" class="h-12 w-12 rounded-full object-cover" />

<div class="text-sm">

<p class="font-medium">Qing</p>

</div>

</div>

</div>

<div class="mb-8 sm:break-inside-avoid">

<blockquote class="rounded-xl !mb-0 bg-gray-50 p-6 shadow dark:bg-gray-800">

<p class="leading-relaxed text-gray-700">Grounded-SAM combines a powerful Zero-Shot detector Grounding-DINO and Segment-Anything-Model (SAM) to build a strong pipeline to detect and segment everything with text inputs. When combined with 🤗 Diffusers inpainting models, Grounded-SAM can do highly controllable image editing tasks, including replacing specific objects, inpainting the background, etc.</p>

</blockquote>

<div class="flex items-center gap-4">

<img src="https://avatars.githubusercontent.com/u/113572103?s=48&v=4" class="h-12 w-12 rounded-full object-cover" />

<div class="text-sm">

<p class="font-medium">Tianhe Ren</p>

</div>

</div>

</div>

<div class="mb-8 sm:break-inside-avoid">

<blockquote class="rounded-xl !mb-0 bg-gray-50 p-6 shadow dark:bg-gray-800">

<p class="leading-relaxed text-gray-700">Stable-Dreamfusion leverages the convenient implementations of 2D diffusion models in 🤗 Diffusers to replicate recent text-to-3D and image-to-3D methods.</p>

</blockquote>

<div class="flex items-center gap-4">

<img src="https://avatars.githubusercontent.com/u/25863658?s=48&v=4" class="h-12 w-12 rounded-full object-cover" />

<div class="text-sm">

<p class="font-medium">kiui</p>

</div>

</div>

</div>

<div class="mb-8 sm:break-inside-avoid">

<blockquote class="rounded-xl !mb-0 bg-gray-50 p-6 shadow dark:bg-gray-800">

<p class="leading-relaxed text-gray-700">MMagic (Multimodal Advanced, Generative, and Intelligent Creation) is an advanced and comprehensive Generative AI toolbox that provides state-of-the-art AI models (e.g., diffusion models powered by 🤗 Diffusers and GAN) to synthesize, edit and enhance images and videos. In MMagic, users can use rich components to customize their own models like playing with Legos and manage the training loop easily.</p>

</blockquote>

<div class="flex items-center gap-4">

<img src="https://avatars.githubusercontent.com/u/10245193?s=48&v=4" class="h-12 w-12 rounded-full object-cover" />

<div class="text-sm">

<p class="font-medium">mmagic</p>

</div>

</div>

</div>

<div class="mb-8 sm:break-inside-avoid">

<blockquote class="rounded-xl !mb-0 bg-gray-50 p-6 shadow dark:bg-gray-800">

<p class="leading-relaxed text-gray-700">Tune-A-Video, developed by Jay Zhangjie Wu and his team at Show Lab, is the first to fine-tune a pre-trained text-to-image diffusion model using a single text-video pair and enables changing video content while preserving motion.</p>

</blockquote>

<div class="flex items-center gap-4">

<img src="https://avatars.githubusercontent.com/u/101181824?s=48&v=4" class="h-12 w-12 rounded-full object-cover" />

<div class="text-sm">

<p class="font-medium">Jay Zhangjie Wu</p>

</div>

</div>

</div>

</div>

We also collaborated with Google Cloud (who generously provided the compute) to provide technical guidance and mentorship to help the community train diffusion models with TPUs (check out a summary of the event [here](https://opensource.googleblog.com/2023/06/controlling-stable-diffusion-with-jax-diffusers-and-cloud-tpus.html)). There were many cool models such as this [demo](https://huggingface.co/spaces/mfidabel/controlnet-segment-anything) that combines ControlNet with Segment Anything.

<div class="flex justify-center">

<img src="https://github.com/mfidabel/JAX_SPRINT_2023/blob/8632f0fde7388d7a4fc57225c96ef3b8411b3648/EX_1.gif?raw=true" alt="ControlNet and SegmentAnything demo of a hot air balloon in various styles">

</div>

Finally, we were delighted to receive contributions to our codebase from over 300 contributors, which allowed us to collaborate together in the most open way possible. Here are just a few of the contributions from our community:

- [Model editing](https://github.com/huggingface/diffusers/pull/2721) by [@bahjat-kawar](https://github.com/bahjat-kawar), a pipeline for editing a model’s implicit assumptions

- [LDM3D](https://github.com/huggingface/diffusers/pull/3668) by [@estelleafl](https://github.com/estelleafl), a diffusion model for 3D images

- [DPMSolver](https://github.com/huggingface/diffusers/pull/3314) by [@LuChengTHU](https://github.com/LuChengTHU), improvements that significantly speed up inference

- [Custom Diffusion](https://github.com/huggingface/diffusers/pull/3031) by [@nupurkmr9](https://github.com/nupurkmr9), a technique for generating personalized images with only a few images of a subject

Besides these, a heartfelt shoutout to the following contributors who helped us ship some of the most powerful features of Diffusers (in no particular order):

* [@takuma104](https://github.com/huggingface/diffusers/commits?author=takuma104)

* [@nipunjindal](https://github.com/huggingface/diffusers/commits?author=nipunjindal)

* [@isamu-isozaki](https://github.com/huggingface/diffusers/commits?author=isamu-isozaki)

* [@piEsposito](https://github.com/huggingface/diffusers/commits?author=piEsposito)

* [@Birch-san](https://github.com/huggingface/diffusers/commits?author=Birch-san)

* [@LuChengTHU](https://github.com/huggingface/diffusers/commits?author=LuChengTHU)

* [@duongna21](https://github.com/huggingface/diffusers/commits?author=duongna21)

* [@clarencechen](https://github.com/huggingface/diffusers/commits?author=clarencechen)

* [@dg845](https://github.com/huggingface/diffusers/commits?author=dg845)

* [@Abhinay1997](https://github.com/huggingface/diffusers/commits?author=Abhinay1997)

* [@camenduru](https://github.com/huggingface/diffusers/commits?author=camenduru)

* [@ayushtues](https://github.com/huggingface/diffusers/commits?author=ayushtues)

## Building products with 🤗 Diffusers

Over the last year, we also saw many companies choosing to build their products on top of 🤗 Diffusers. Here are a couple of products that have caught our attention:

- [PlaiDay](http://plailabs.com/): “PlaiDay is a Generative AI experience where people collaborate, create, and connect. Our platform unlocks the limitless creativity of the human mind, and provides a safe, fun social canvas for expression.”

- [Previs One](https://previs.framer.wiki/): “Previs One is a diffuser pipeline for cinematic storyboarding and previsualization — it understands film and television compositional rules just as a director would speak them.”

- [Zust.AI](https://zust.ai/): “We leverage Generative AI to create studio-quality product photos for brands and marketing agencies.”

- [Dashtoon](https://dashtoon.com/): “Dashtoon is building a platform to create and consume visual content. We have multiple pipelines that load multiple LORAs, multiple control-nets and even multiple models powered by diffusers. Diffusers has made the gap between a product engineer and a ML engineer super low allowing dashtoon to ship user value faster and better.”

- [Virtual Staging AI](https://www.virtualstagingai.app/): “Filling empty rooms with beautiful furniture using generative models.”

- [Hexo.AI](https://www.hexo.ai/): “Hexo AI helps brands get higher ROI on marketing spends through Personalized Marketing at Scale. Hexo is building a proprietary campaign generation engine which ingests customer data and generates brand compliant personalized creatives.”

If you’re building products on top of 🤗 Diffusers, we’d love to chat to understand how we can make the library better together! Feel free to reach out to [email protected] or [email protected].

## Looking forward

As we celebrate our first anniversary, we're grateful to our community and open-source contributors who have helped us come so far in such a short time. We're happy to share that we'll be presenting a 🤗 Diffusers demo at ICCV 2023 this fall – if you're attending, do come and see us! We'll continue to develop and improve our library, making it easier for everyone to use. We're also excited to see what the community will create next with our tools and resources. Thank you for being a part of our journey so far, and we look forward to continuing to democratize good machine learning together! 🥳

❤️ Diffusers team | [

[

"implementation",

"community",

"tools",

"image_generation"

]

] | [

"2629e041-8c70-4026-8651-8bb91fd9749a"

] | [

"submitted"

] | [

"image_generation",

"tools",

"community",

"implementation"

] | null | null |

54a339f7-213f-4f7e-b4c3-5c074f5a7b43 | completed | 2025-01-16T03:09:27.174908 | 2025-01-16T03:12:34.511246 | 64427aa3-fca2-485c-bc74-665c57b67b80 | Gradio 3.0 is Out! | abidlabs | gradio-blocks.md | ### Machine Learning Demos

Machine learning demos are an increasingly vital part of releasing a model. Demos allow anyone — not just ML engineers — to try out a model in the browser, give feedback on predictions, and build trust in the model if it performs well.

More than 600,000 ML demos have been built with the Gradio library since its first version in 2019, and today, we are thrilled to announce **Gradio 3.0**: a ground-up redesign of the Gradio library 🥳

### What's New in Gradio 3.0?

🔥 A complete redesign of the frontend, based on the feedback we're hearing from Gradio users:

* We've switched to modern technologies (like <a href="https://svelte.dev/" target="_blank">Svelte</a>) to build the Gradio frontend. We're seeing much smaller payloads and much faster page loads as a result!

* We've also embraced a much cleaner design that will allow Gradio demos to fit in visually in more settings (such as being <a href="/static-proxy?url=https%3A%2F%2Fdiscuss.huggingface.co%2Ft%2Fgradio-iframe-embedding%2F13021%2F9%3Fu%3Dabidlabs">embedded</a> in blog posts).

<img class="max-w-full mx-auto my-6" style="width: 54rem" src="/blog/assets/68_gradio_blocks/lion.jpg">

* We've revamped our existing components, like `Dataframe`, to be more user-friendly (try dragging-and-dropping a CSV file into a Dataframe) and added new components, such as the `Gallery`, to allow you to build the right UI for your model.

<img class="max-w-full mx-auto my-6" style="width: 54rem" src="/blog/assets/68_gradio_blocks/dalle.jpg">

* We've added a `TabbedInterface` class which allows you to group together related demos as multiple tabs in one web app

<img class="max-w-full mx-auto my-6" style="width: 54rem" src="/blog/assets/68_gradio_blocks/tts.png">

Check out all the components you can use [on our (redesigned) docs](http://www.gradio.app/docs) 🤗!

🔥 We've created a new low-level language called **Gradio Blocks** that lets you build complex custom web apps, right in Python:

<img class="max-w-full mx-auto my-6" style="width: 54rem" src="/blog/assets/68_gradio_blocks/mindseye-lite.jpg">

Why did we create Blocks? Gradio demos are very easy to build, but what if you want more control over the layout of your demo, or more flexibility on how the data flows? For example, you might want to:

* Change the layout of your demo instead of just having all of the inputs on the left and outputs on the right

* Have multi-step interfaces, in which the output of one model becomes the input to the next model, or have more flexible data flows in general

* Change a component's properties (for example, the choices in a Dropdown) or its visibility based on user input

The low-level Blocks API allows you to do all of this, right in Python.

Here's an example of a Blocks demo that creates two simple demos and uses tabs to group them together:

```python
import numpy as np
import gradio as gr

def flip_text(x):
    return x[::-1]

def flip_image(x):
    return np.fliplr(x)

with gr.Blocks() as demo:
    gr.Markdown("Flip text or image files using this demo.")
    with gr.Tabs():
        with gr.TabItem("Flip Text"):
            text_input = gr.Textbox()
            text_output = gr.Textbox()
            # this demo runs whenever the input textbox changes
            text_input.change(flip_text, inputs=text_input, outputs=text_output)

        with gr.TabItem("Flip Image"):
            with gr.Row():
                image_input = gr.Image()
                image_output = gr.Image()
            button = gr.Button("Flip")
            # this demo runs whenever the button is clicked
            button.click(flip_image, inputs=image_input, outputs=image_output)

demo.launch()
```

Once you run `launch()`, the following demo will appear:

<img class="max-w-full mx-auto my-6" style="width: 54rem" src="/blog/assets/68_gradio_blocks/flipper.png">

For a step-by-step introduction to Blocks, check out [the dedicated Blocks Guide](https://www.gradio.app/introduction_to_blocks/)
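The multi-step data flow mentioned earlier, where the output of one step becomes the input to the next, could look roughly like the sketch below. It is a minimal illustration rather than an official recipe: the two helper functions (`reverse_text`, `shout`) and the `build_demo` wrapper are hypothetical stand-ins for real models, and Gradio is imported inside `build_demo` only so that the helpers can be exercised without it.

```python
def reverse_text(text):
    """Step 1 (stand-in for a first model): reverse the input string."""
    return text[::-1]


def shout(text):
    """Step 2 (stand-in for a second model): upper-case the previous output."""
    return text.upper()


def build_demo():
    """Wire the two steps together so step 2 reads the output box of step 1."""
    import gradio as gr  # imported lazily; assumes Gradio 3.x is installed

    with gr.Blocks() as demo:
        gr.Markdown("Two-step demo: reverse the text, then upper-case the result.")
        source = gr.Textbox(label="Input")
        reversed_box = gr.Textbox(label="Step 1: reversed")
        final_box = gr.Textbox(label="Step 2: upper-cased")
        step1 = gr.Button("Run step 1")
        step2 = gr.Button("Run step 2")
        # The output component of step 1 is reused as the input component of step 2.
        step1.click(reverse_text, inputs=source, outputs=reversed_box)
        step2.click(shout, inputs=reversed_box, outputs=final_box)
    return demo


# To try it locally: build_demo().launch()
```

Keeping each step behind its own button makes the intermediate result visible and editable before it is passed on, which is one reasonable design for chained models.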

### The Gradio Blocks Party

We're very excited about Gradio Blocks -- and we'd love for you to try it out -- so we are organizing a competition, **the Gradio Blocks Party** (😉), to see who can build the best demos with Blocks. By building these demos, we can make state-of-the-art machine learning accessible, not just to engineers, but anyone who can use an Internet browser!

Even if you've never used Gradio before, this is the perfect time to start, because the Blocks Party is running until the end of May. We'll be giving out 🤗 merch and other prizes at the end of the Party for demos built using Blocks.

Learn more about Blocks Party here: https://huggingface.co/spaces/Gradio-Blocks/README | [

[

"mlops",

"implementation",

"deployment",

"tools"

]

] | [

"2629e041-8c70-4026-8651-8bb91fd9749a"

] | [

"submitted"

] | [

"mlops",

"tools",

"implementation",

"deployment"

] | null | null |

f65a829d-7385-46e1-b892-befb2b035d58 | completed | 2025-01-16T03:09:27.174913 | 2025-01-18T14:45:39.294236 | a4ba3514-5392-41d1-80a2-ce10dc7191a7 | Hugging Face and AMD partner on accelerating state-of-the-art models for CPU and GPU platforms | juliensimon | huggingface-and-amd.md | <kbd>

<img src="assets/148_huggingface_amd/01.png">

</kbd>

Whether language models, large language models, or foundation models, transformers require significant computation for pre-training, fine-tuning, and inference. To help developers and organizations get the most performance bang for their infrastructure bucks, Hugging Face has long been working with hardware companies to leverage acceleration features present on their respective chips.

Today, we're happy to announce that AMD has officially joined our [Hardware Partner Program](https://huggingface.co/hardware). Our CEO Clement Delangue gave a keynote at AMD's [Data Center and AI Technology Premiere](https://www.amd.com/en/solutions/data-center/data-center-ai-premiere.html) in San Francisco to launch this exciting new collaboration.

AMD and Hugging Face work together to deliver state-of-the-art transformer performance on AMD CPUs and GPUs. This partnership is excellent news for the Hugging Face community at large, which will soon benefit from the latest AMD platforms for training and inference.

The selection of deep learning hardware has been limited for years, and prices and supply are growing concerns. This new partnership will do more than match the competition and help alleviate market dynamics: it should also set new cost-performance standards.

## Supported hardware platforms

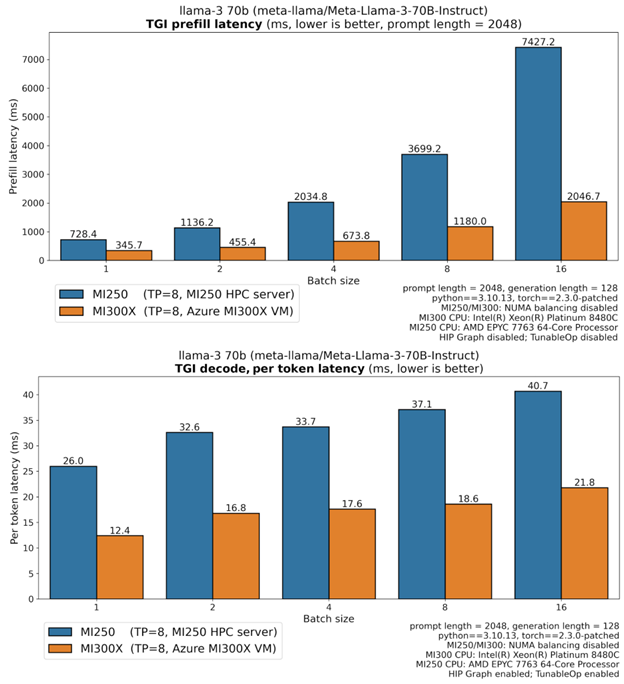

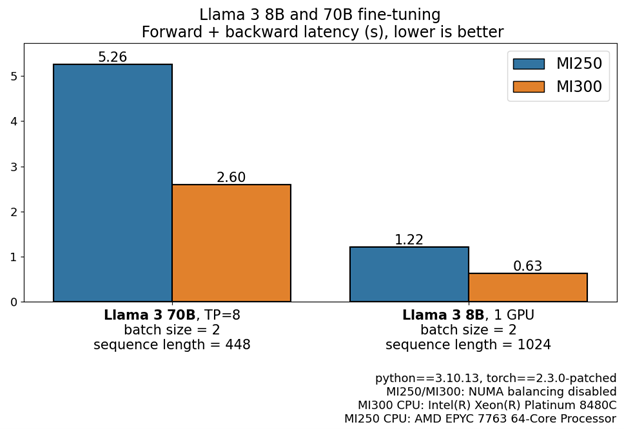

On the GPU side, AMD and Hugging Face will first collaborate on the enterprise-grade Instinct MI2xx and MI3xx families, then on the consumer-grade Radeon Navi3x family. In initial testing, AMD [recently reported](https://youtu.be/mPrfh7MNV_0?t=462) that the MI250 trains BERT-Large 1.2x faster and GPT2-Large 1.4x faster than its direct competitor.

On the CPU side, the two companies will work on optimizing inference for both the client Ryzen and server EPYC CPUs. As discussed in several previous posts, CPUs can be an excellent option for transformer inference, especially with model compression techniques like quantization.
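As a rough illustration of what quantization means here, the sketch below maps floating-point weights to 8-bit integers with a single scale factor and then reconstructs them. This is a simplified symmetric, per-tensor scheme written in plain Python; the function names and sample weights are hypothetical, and it is not meant to describe the specific methods used in this collaboration.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integer representation."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only for illustration.
weights = [0.5, -1.27, 0.0, 1.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
print(quantized)
print(max(abs(w - r) for w, r in zip(weights, restored)))
```

Each weight now needs one byte instead of four (for 32-bit floats), at the cost of a rounding error of at most half the scale factor per weight.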

Lastly, the collaboration will include the [Alveo V70](https://www.xilinx.com/applications/data-center/v70.html) AI accelerator, which can deliver incredible performance with lower power requirements.

## Supported model architectures and frameworks

We intend to support state-of-the-art transformer architectures for natural language processing, computer vision, and speech, such as BERT, DistilBERT, RoBERTa, Vision Transformer, CLIP, and Wav2Vec2. Of course, generative AI models will be available too (e.g., GPT2, GPT-NeoX, T5, OPT, LLaMA), including our own BLOOM and StarCoder models. Lastly, we will also support more traditional computer vision models, like ResNet and ResNeXt, and deep learning recommendation models, a first for us.

We'll do our best to test and validate these models for PyTorch, TensorFlow, and ONNX Runtime for the above platforms. Please remember that not all models may be available for training and inference for all frameworks or all hardware platforms.

## The road ahead

Our initial focus will be ensuring the models most important to our community work great out of the box on AMD platforms. We will work closely with the AMD engineering team to optimize key models to deliver optimal performance thanks to the latest AMD hardware and software features. We will integrate the [AMD ROCm SDK](https://www.amd.com/graphics/servers-solutions-rocm) seamlessly in our open-source libraries, starting with the transformers library.

Along the way, we'll undoubtedly identify opportunities to optimize training and inference further, and we'll work closely with AMD to figure out where to best invest moving forward through this partnership. We expect this work to lead to a new [Optimum](https://huggingface.co/docs/optimum/index) library dedicated to AMD platforms to help Hugging Face users leverage them with minimal code changes, if any.

## Conclusion

We're excited to work with a world-class hardware company like AMD. Open-source means the freedom to build from a wide range of software and hardware solutions. Thanks to this partnership, Hugging Face users will soon have new hardware platforms for training and inference with excellent cost-performance benefits. In the meantime, feel free to visit the [AMD page](https://huggingface.co/amd) on the Hugging Face hub. Stay tuned!

*This post is 100% ChatGPT-free.* | [

[

"llm",

"transformers",

"optimization",

"efficient_computing"

]

] | [

"2629e041-8c70-4026-8651-8bb91fd9749a"

] | [

"submitted"

] | [

"llm",

"transformers",

"optimization",

"efficient_computing"

] | null | null |

79442a9a-6413-4fad-b5c2-5f8d1673dd5e | completed | 2025-01-16T03:09:27.174918 | 2025-01-16T03:12:47.039305 | c6d0fb69-a1eb-4b21-95a9-714757826685 | Open-Source Text Generation & LLM Ecosystem at Hugging Face | merve | os-llms.md | [Updated on July 24, 2023: Added Llama 2.]

Text generation and conversational technologies have been around for ages. An early challenge in working with these technologies was controlling both the coherence and diversity of the text through inference parameters and discriminative biases. More coherent outputs were less creative, closer to the original training data, and sounded less human. Recent developments overcame these challenges, and user-friendly UIs enabled everyone to try these models out. Services like ChatGPT have recently put the spotlight on powerful models like GPT-4 and pushed a wave of open-source alternatives like Llama into the mainstream. We think these technologies will be around for a long time and become more and more integrated into everyday products.

This post is divided into the following sections:

1. [Brief background on text generation](#brief-background-on-text-generation)

2. [Licensing](#licensing)

3. [Tools in the Hugging Face Ecosystem for LLM Serving](#tools-in-the-hugging-face-ecosystem-for-llm-serving)

4. [Parameter Efficient Fine Tuning (PEFT)](#parameter-efficient-fine-tuning-peft)

## Brief Background on Text Generation

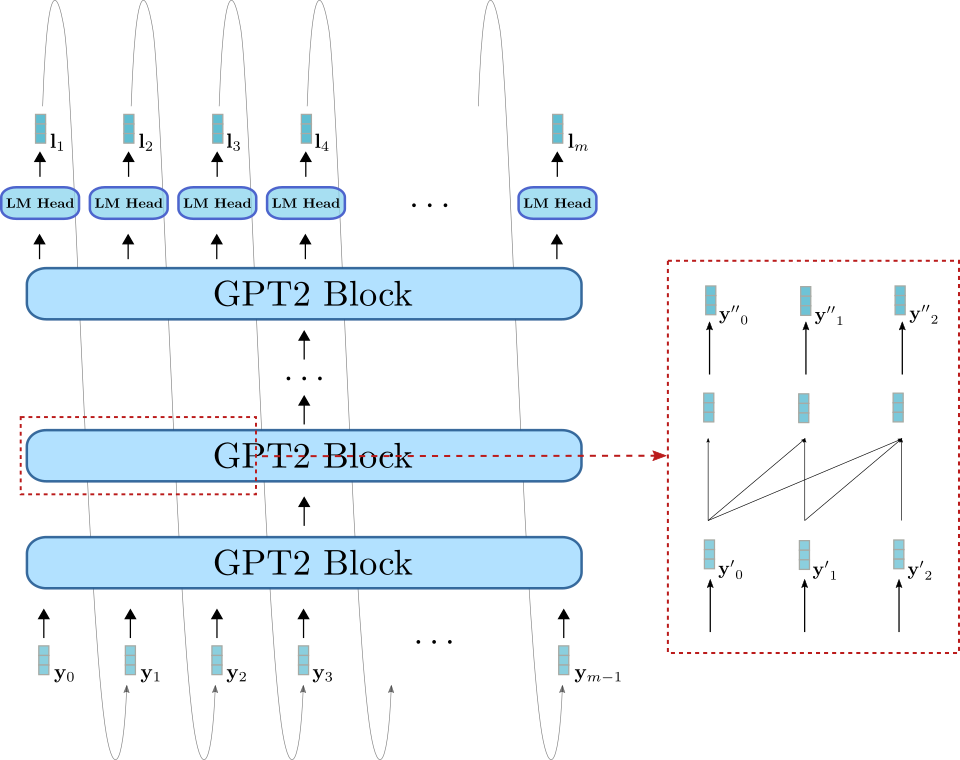

Text generation models are essentially trained with the objective of completing an incomplete text or generating text from scratch as a response to a given instruction or question. Models that complete incomplete text are called Causal Language Models, and famous examples are GPT-3 by OpenAI and [Llama](https://ai.meta.com/blog/large-language-model-Llama-meta-ai/) by Meta AI.
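The idea of completing text one token at a time can be shown with a deliberately tiny stand-in. The sketch below is not how GPT-3 or Llama work internally (they are neural networks over subword tokens); it is a hypothetical bigram counter that only illustrates the causal loop: look at the text so far, pick the most likely next token, append it, and repeat. The corpus and function names are made up for the example, and the prompt is assumed to be non-empty.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count which token follows which in the training text."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def complete(counts, prompt, max_new_tokens=5):
    """Greedily extend a non-empty prompt with the most frequent next token."""
    output = list(prompt)
    for _ in range(max_new_tokens):
        followers = counts.get(output[-1])
        if not followers:
            break  # nothing ever followed this token in the training text
        output.append(followers.most_common(1)[0][0])
    return output


# Hypothetical toy corpus, split on whitespace instead of a real tokenizer.
corpus = "the cat sat on the mat and the cat sat down".split()
model = train_bigram(corpus)
print(" ".join(complete(model, ["on"], max_new_tokens=3)))
```

Real causal language models replace the counting table with a learned network and usually sample from the predicted distribution instead of always taking the top token, but the generation loop has the same shape.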

One concept you need to know before we move on is fine-tuning. This is the process of taking a very large model and transferring the knowledge contained in this base model to another use case, which we call _a downstream task_. These tasks can come in the form of instructions. As the model size grows, it can generalize better to instructions that do not exist in the pre-training data, but were learned during fine-tuning.

Causal language models are adapted using a process called reinforcement learning from human feedback (RLHF). This optimization is mainly made over how natural and coherent the text sounds rather than the validity of the answer. Explaining how RLHF works is outside the scope of this blog post, but you can find more information about this process [here](https://huggingface.co/blog/rlhf).

For example, GPT-3 is a causal language _base_ model, while the models in the backend of ChatGPT (which is the UI for GPT-series models) are fine-tuned through RLHF on prompts that can consist of conversations or instructions. It’s an important distinction to make between these models.

On the Hugging Face Hub, you can find both causal language models and causal language models fine-tuned on instructions (which we’ll give links to later in this blog post). Llama is one of the first open-source LLMs to have matched or outperformed closed-source ones. A research group led by Together has created a reproduction of Llama's dataset, called Red Pajama, and trained LLMs and instruction fine-tuned models on it. You can read more about it [here](https://www.together.xyz/blog/redpajama) and find [the model checkpoints on Hugging Face Hub](https://huggingface.co/models?sort=trending&search=togethercomputer%2Fredpajama). At the time of writing, three of the largest causal language models with open-source licenses are [MPT-30B by MosaicML](https://huggingface.co/mosaicml/mpt-30b), [XGen by Salesforce](https://huggingface.co/Salesforce/xgen-7b-8k-base) and [Falcon by TII UAE](https://huggingface.co/tiiuae/falcon-40b), available completely open on Hugging Face Hub.

Recently, Meta released [Llama 2](https://ai.meta.com/Llama/), an open-access model with a license that allows commercial use. As of now, Llama 2 outperforms all of the other open-source large language models on various benchmarks. [Llama 2 checkpoints on Hugging Face Hub](https://huggingface.co/meta-Llama) are compatible with transformers, and the largest checkpoint is available for everyone to try at [HuggingChat](https://huggingface.co/chat/). You can read more about how to fine-tune, deploy and prompt with Llama 2 in [this blog post](https://huggingface.co/blog/llama2).

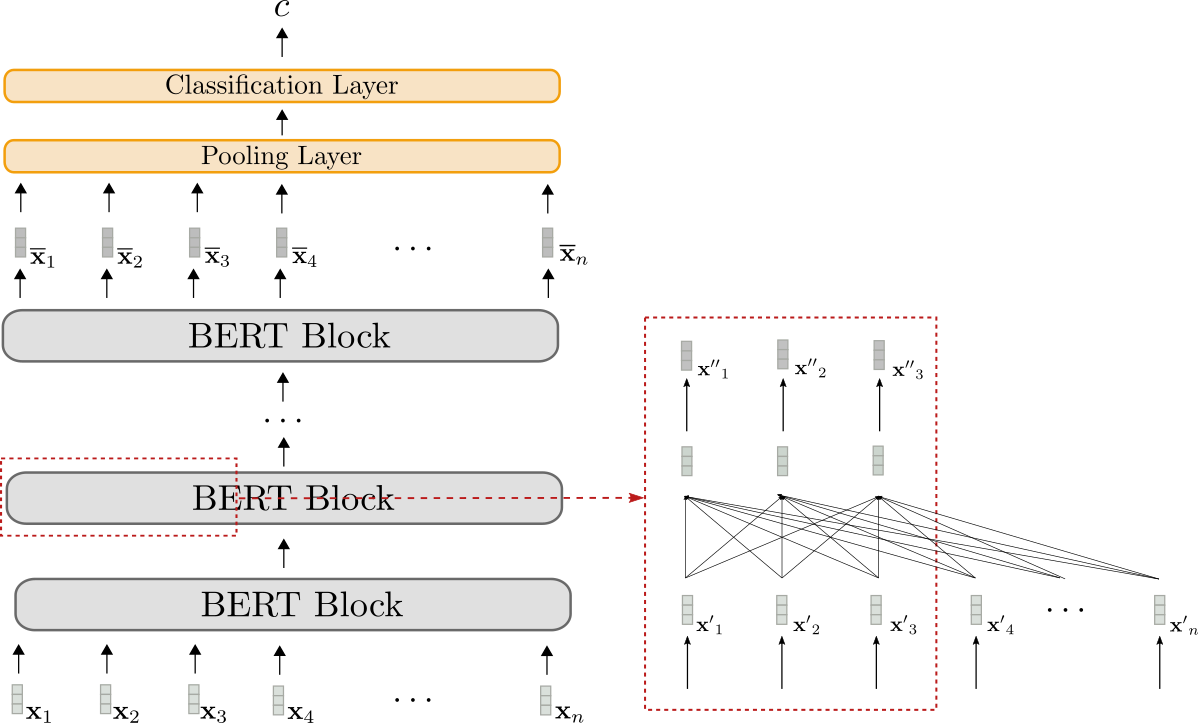

The second type of text generation model is commonly referred to as the text-to-text generation model. These models are trained on text pairs, which can be questions and answers or instructions and responses. The most popular ones are T5 and BART (which, as of now, aren’t state-of-the-art). Google has recently released the FLAN-T5 series of models. FLAN is a recent technique developed for instruction fine-tuning, and FLAN-T5 is essentially T5 fine-tuned using FLAN. As of now, the FLAN-T5 series of models are state-of-the-art and open-source, available on the [Hugging Face Hub](https://huggingface.co/models?search=google/flan). Note that these are different from instruction-tuned causal language models, although the input-output format might seem similar. Below you can see an illustration of how these models work.
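
As a rough sketch of how the training data differs between the two model types, the snippet below lays out the same instruction/response pair both ways. The names `TextPair`, `as_text_to_text`, and `as_causal`, as well as the newline separator, are assumptions made for illustration and do not come from any library.

```python
from typing import NamedTuple


class TextPair(NamedTuple):
    """One text-to-text example: the model reads `source` and writes `target`."""
    source: str
    target: str


def as_text_to_text(instruction: str, response: str) -> TextPair:
    # Text-to-text models keep input and output as two separate sequences.
    return TextPair(source=instruction, target=response)


def as_causal(instruction: str, response: str, separator: str = "\n") -> str:
    # Causal models see one continuous sequence and learn to predict the
    # next token; the separator used here is an illustrative assumption.
    return f"{instruction}{separator}{response}"


pair = as_text_to_text("Translate to German: Good morning", "Guten Morgen")
print(pair.source, "->", pair.target)
print(as_causal("Translate to German: Good morning", "Guten Morgen"))
```

Even though both layouts hold the same text, the text-to-text example keeps the input and the expected output apart, which is why the two model families are not interchangeable.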

Having a wider variety of open-source text generation models enables companies to keep their data private, to adapt models to their domains faster, and to cut inference costs instead of relying on closed paid APIs. All open-source causal language models on Hugging Face Hub can be found [here](https://huggingface.co/models?pipeline_tag=text-generation), and text-to-text generation models can be found [here](https://huggingface.co/models?pipeline_tag=text2text-generation&sort=trending).

### Models created with love by Hugging Face with BigScience and BigCode 💗

Hugging Face has co-led two science initiatives, BigScience and BigCode. As a result of them, two large language models were created, [BLOOM](https://huggingface.co/bigscience/bloom) 🌸 and [StarCoder](https://huggingface.co/bigcode/starcoder) 🌟.

BLOOM is a causal language model trained on 46 languages and 13 programming languages. It is the first open-source model to have more parameters than GPT-3. You can find all the available checkpoints in the [BLOOM documentation](https://huggingface.co/docs/transformers/model_doc/bloom).

StarCoder is a language model trained on permissively licensed code from GitHub (covering 80+ programming languages 🤯) with a Fill-in-the-Middle objective. It’s not fine-tuned on instructions, so it serves more as a coding assistant that completes given code, e.g., translating Python to C++, explaining concepts (what’s recursion), or acting as a terminal. You can try all of the StarCoder checkpoints [in this application](https://huggingface.co/spaces/bigcode/bigcode-playground). It also comes with a [VSCode extension](https://marketplace.visualstudio.com/items?itemName=HuggingFace.huggingface-vscode).
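
To give an idea of what a Fill-in-the-Middle prompt looks like, here is a minimal sketch that arranges the code before and after a gap so the model can generate the missing middle. The sentinel strings below are assumed to match the special tokens of the StarCoder tokenizer; verify them against the model card before relying on them. `build_fim_prompt` is a hypothetical helper name.

```python
# Sentinel tokens assumed to match the StarCoder tokenizer (verify on the model card).
FIM_PREFIX = "<fim_prefix>"
FIM_SUFFIX = "<fim_suffix>"
FIM_MIDDLE = "<fim_middle>"


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Arrange code before and after the gap so the model generates the middle."""
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


# The gap sits between the assignment and the return statement.
prefix = "def add(a, b):\n    result = "
suffix = "\n    return result\n"
print(build_fim_prompt(prefix, suffix))
```

The model is expected to continue after the final sentinel with the code that belongs in the gap, which is what makes editor-style completion in the middle of a file possible.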

Snippets for using all the models mentioned in this blog post are provided in either the model repository or the documentation page of that model type on Hugging Face.

## Licensing

Many text generation models are either closed-source or released under licenses that limit commercial use. Fortunately, open-source alternatives are starting to appear and are being embraced by the community as building blocks for further development, fine-tuning, or integration with other projects. Below you can find a list of some of the large causal language models with fully open-source licenses:

- [Falcon 40B](https://huggingface.co/tiiuae/falcon-40b)

- [XGen](https://huggingface.co/Salesforce/xgen-7b-8k-base)

- [MPT-30B](https://huggingface.co/mosaicml/mpt-30b)

- [Pythia-12B](https://huggingface.co/EleutherAI/pythia-12b)

- [RedPajama-INCITE-7B](https://huggingface.co/togethercomputer/RedPajama-INCITE-7B-Base)

- [OpenAssistant (Falcon variant)](https://huggingface.co/OpenAssistant/falcon-40b-sft-mix-1226)

There are two code generation model families, [StarCoder by BigCode](https://huggingface.co/models?sort=trending&search=bigcode%2Fstarcoder) and [CodeGen by Salesforce](https://huggingface.co/models?sort=trending&search=salesforce%2Fcodegen). Both offer model checkpoints in different sizes under open-source or [open RAIL](https://huggingface.co/blog/open_rail) licenses, except for [CodeGen fine-tuned on instructions](https://huggingface.co/Salesforce/codegen25-7b-instruct).

The Hugging Face Hub also hosts various models fine-tuned for instruction or chat use. They come in various styles and sizes depending on your needs.

- [MPT-30B-Chat](https://huggingface.co/mosaicml/mpt-30b-chat), by MosaicML, uses the CC-BY-NC-SA license, which does not allow commercial use. However, [MPT-30B-Instruct](https://huggingface.co/mosaicml/mpt-30b-instruct) uses CC-BY-SA 3.0, which can be used commercially.

- [Falcon-40B-Instruct](https://huggingface.co/tiiuae/falcon-40b-instruct) and [Falcon-7B-Instruct](https://huggingface.co/tiiuae/falcon-7b-instruct) both use the Apache 2.0 license, so commercial use is also permitted.

- Another popular family of models is OpenAssistant, some of which are built on Meta's Llama model using a custom instruction-tuning dataset. Since the original Llama model can only be used for research, the OpenAssistant checkpoints built on Llama don’t have full open-source licenses. However, there are OpenAssistant models built on open-source models like [Falcon](https://huggingface.co/models?search=openassistant/falcon) or [Pythia](https://huggingface.co/models?search=openassistant/pythia) that use permissive licenses.

- [StarChat Beta](https://huggingface.co/HuggingFaceH4/starchat-beta) is the instruction fine-tuned version of StarCoder and is released under the BigCode Open RAIL-M v1 license, which allows commercial use. The instruction-tuned coding model from Salesforce, [XGen](https://huggingface.co/Salesforce/xgen-7b-8k-inst), only allows research use.
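
When comparing several candidates, it can help to record the license terms in a small lookup and filter on them. The sketch below encodes only the license information stated in the list above; the `ModelLicense` class and `commercially_usable` helper are hypothetical names, and you should always confirm the current license on the model card before shipping anything.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelLicense:
    model: str
    license: str
    commercial_use: bool


# Values transcribed from the list above; licenses can change, so re-check the model cards.
LICENSES = [
    ModelLicense("MPT-30B-Chat", "CC-BY-NC-SA", False),
    ModelLicense("MPT-30B-Instruct", "CC-BY-SA 3.0", True),
    ModelLicense("Falcon-40B-Instruct", "Apache 2.0", True),
    ModelLicense("Falcon-7B-Instruct", "Apache 2.0", True),
    ModelLicense("StarChat Beta", "BigCode Open RAIL-M v1", True),
    ModelLicense("XGen (instruction-tuned)", "research only", False),
]


def commercially_usable(entries: List[ModelLicense]) -> List[str]:
    """Return the names of the models whose license permits commercial use."""
    return [entry.model for entry in entries if entry.commercial_use]


print(commercially_usable(LICENSES))
```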

If you're looking to fine-tune a model on an existing instruction dataset, you need to know how the dataset was compiled. Some of the existing instruction datasets are either crowd-sourced or use outputs of existing models (e.g., the models behind ChatGPT). The [Alpaca](https://crfm.stanford.edu/2023/03/13/alpaca.html) dataset from Stanford was created from the outputs of the models behind ChatGPT. Moreover, there are various crowd-sourced instruction datasets with open-source licenses, like [oasst1](https://huggingface.co/datasets/OpenAssistant/oasst1) (created by thousands of people voluntarily!) or [databricks/databricks-dolly-15k](https://huggingface.co/datasets/databricks/databricks-dolly-15k). If you'd like to create a dataset yourself, you can check out [the dataset card of Dolly](https://huggingface.co/datasets/databricks/databricks-dolly-15k#sources) on how to create an instruction dataset. Models fine-tuned on these datasets can be distributed.

You can find a table of some open-source/open-access models below.

| Model | Dataset | License | Use |

| | [

[

"llm",

"transformers",

"tools",

"text_generation"

]

] | [

"2629e041-8c70-4026-8651-8bb91fd9749a"

] | [

"submitted"

] | [

"llm",

"text_generation",

"tools",

"transformers"

] | null | null |

4df721c0-0038-476b-9547-0bd49d6d8d47 | completed | 2025-01-16T03:09:27.174923 | 2025-01-19T19:05:32.491986 | 4cf05f8a-50f4-489e-88ba-e55cf91fb2d4 | Generating Stories: AI for Game Development #5 | dylanebert | ml-for-games-5.md | **Welcome to AI for Game Development!** In this series, we'll be using AI tools to create a fully functional farming game in just 5 days. By the end of this series, you will have learned how you can incorporate a variety of AI tools into your game development workflow. I will show you how you can use AI tools for:

1. Art Style

2. Game Design

3. 3D Assets

4. 2D Assets

5. Story

Want the quick video version? You can watch it [here](https://www.tiktok.com/@individualkex/video/7197505390353960235). Otherwise, if you want the technical details, keep reading!

**Note:** This post makes several references to [Part 2](https://huggingface.co/blog/ml-for-games-2), where we used ChatGPT for Game Design. Read Part 2 for additional context on how ChatGPT works, including a brief overview of language models and their limitations.

## Day 5: Story

In [Part 4](https://huggingface.co/blog/ml-for-games-4) of this tutorial series, we talked about how you can use Stable Diffusion and Image2Image as a tool in your 2D Asset workflow.

In this final part, we'll be using AI for Story. First, I'll walk through my [process](#process) for the farming game, calling attention to ⚠️ **Limitations** to watch out for. Then, I'll talk about relevant technologies and [where we're headed](#where-were-headed) in the context of game development. Finally, I'll [conclude](#conclusion) with the final game.

### Process

**Requirements:** I'm using [ChatGPT](https://openai.com/blog/chatgpt/) throughout this process. For more information on ChatGPT and language modeling in general, I recommend reading [Part 2](https://huggingface.co/blog/ml-for-games-2) of the series. ChatGPT isn't the only viable solution, with many emerging competitors, including open-source dialog agents. Read ahead to learn more about [the emerging landscape](#the-emerging-landscape) of dialog agents.

1. **Ask ChatGPT to write a story.** I provide plenty of context about my game, then ask ChatGPT to write a story summary.

<div align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/chatgpt1.png" alt="ChatGPT for Story #1">

</div>

ChatGPT then responds with a story summary that is extremely similar to the story of the game [Stardew Valley](https://www.stardewvalley.net/).

> ⚠️ **Limitation:** Language models are susceptible to reproducing existing stories.

This highlights the importance of using language models as a tool, rather than as a replacement for human creativity. In this case, relying solely on ChatGPT would result in a very unoriginal story.

2. **Refine the results.** As with Image2Image in [Part 4](https://huggingface.co/blog/ml-for-games-4), the real power of these tools comes from back-and-forth collaboration. So, I ask ChatGPT directly to be more original.

<div align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/chatgpt2.png" alt="ChatGPT for Story #2">

</div>

This is already much better. I continue to refine the result, such as asking to remove elements of magic since the game doesn't contain magic. After a few rounds of back-and-forth, I reach a description I'm happy with. Then, it's a matter of generating the actual content that tells this story.

3. **Write the content.** Once I'm happy with the story summary, I ask ChatGPT to write the in-game story content. In the case of this farming game, the only written content is the description of the game, and the description of the items in the shop.

<div align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/chatgpt3.png" alt="ChatGPT for Story #3">

</div>

Not bad. However, there is definitely no help from experienced farmers in the game, nor challenges or adventures to discover.

4. **Refine the content.** I continue to refine the generated content to better fit the game.

<div align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/chatgpt4.png" alt="ChatGPT for Story #4">

</div>

I'm happy with this result. So, should I use it directly? Maybe. Since this is a free game being developed for an AI tutorial, probably. However, the answer may not be as straightforward for commercial products, where using generated text directly could have unintended legal, ethical, and commercial ramifications.

> ⚠️ **Limitation:** Using outputs from language models directly may have unintended legal, ethical, and commercial ramifications.
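
The back-and-forth in the steps above was done by hand in the ChatGPT interface, but the same pattern can be sketched in code: provide the game context once, then send each refinement request while keeping the full conversation history. This is only an illustration; `refine`, `fake_model`, and the role/content message layout are assumptions, and `fake_model` stands in for whichever dialog agent you actually call.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def refine(
    ask_model: Callable[[List[Message]], str],
    context: str,
    requests: List[str],
) -> List[Message]:
    """Send each request in turn, keeping the whole conversation as history."""
    history: List[Message] = []
    for i, request in enumerate(requests):
        # The game context is only prepended to the very first request.
        content = f"{context}\n\n{request}" if i == 0 else request
        history.append({"role": "user", "content": content})
        history.append({"role": "assistant", "content": ask_model(history)})
    return history


def fake_model(history: List[Message]) -> str:
    """Stand-in for a real dialog agent: reports how many user turns it has seen."""
    turns = sum(1 for message in history if message["role"] == "user")
    return f"(draft {turns})"


conversation = refine(
    fake_model,
    context="I'm making a simple farming game.",
    requests=[
        "Write a story summary.",
        "Be more original.",
        "Remove elements of magic.",
    ],
)
for message in conversation:
    print(f"{message['role']}: {message['content']}")
```

Keeping the history is what lets each follow-up request ("be more original", "remove elements of magic") build on the previous draft instead of starting over.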