
pretty_name: Lucie Training Dataset

license: cc-by-nc-sa-4.0

language:

- en

- fr

- de

- es

- it

- code

multilinguality:

- multilingual

task_categories:

- text-generation

- text2text-generation

task_ids:

- language-modeling

tags:

- text-generation

- conditional-text-generation

size_categories:

- n>1T

viewer: true

configs:

- config_name: default

data_files:

- path: data/*/*/*/*parquet

split: train

- config_name: en

data_files:

- path: data/natural/en/*/*parquet

split: train

- config_name: fr

data_files:

- path: data/natural/fr/*/*parquet

split: train

- config_name: de

data_files:

- path: data/natural/de/*/*parquet

split: train

- config_name: es

data_files:

- path: data/natural/es/*/*parquet

split: train

- config_name: it

data_files:

- path: data/natural/it/*/*parquet

split: train

- config_name: de,fr

data_files:

- path: data/natural/de-fr/*/*.parquet

split: train

- config_name: es,en

data_files:

- path: data/natural/es-en/*/*.parquet

split: train

- config_name: fr,en

data_files:

- path: data/natural/fr-en/*/*.parquet

split: train

- config_name: it,en

data_files:

- path: data/natural/it-en/*/*.parquet

split: train

- config_name: natural

data_files:

- path: data/natural/*/*/*.parquet

split: train

- config_name: code

data_files:

- path: data/code/*/*/*parquet

split: train

- config_name: code-assembly

data_files:

- path: data/code/assembly/*/*.parquet

split: train

- config_name: code-c

data_files:

- path: data/code/c/*/*.parquet

split: train

- config_name: code-c#

data_files:

- path: data/code/c#/*/*.parquet

split: train

- config_name: code-c++

data_files:

- path: data/code/c++/*/*.parquet

split: train

- config_name: code-clojure

data_files:

- path: data/code/clojure/*/*.parquet

split: train

- config_name: code-dart

data_files:

- path: data/code/dart/*/*.parquet

split: train

- config_name: code-elixir

data_files:

- path: data/code/elixir/*/*.parquet

split: train

- config_name: code-erlang

data_files:

- path: data/code/erlang/*/*.parquet

split: train

- config_name: code-fortran

data_files:

- path: data/code/fortran/*/*.parquet

split: train

- config_name: code-go

data_files:

- path: data/code/go/*/*.parquet

split: train

- config_name: code-haskell

data_files:

- path: data/code/haskell/*/*.parquet

split: train

- config_name: code-java

data_files:

- path: data/code/java/*/*.parquet

split: train

- config_name: code-javascript

data_files:

- path: data/code/javascript/*/*.parquet

split: train

- config_name: code-julia

data_files:

- path: data/code/julia/*/*.parquet

split: train

- config_name: code-kotlin

data_files:

- path: data/code/kotlin/*/*.parquet

split: train

- config_name: code-lua

data_files:

- path: data/code/lua/*/*.parquet

split: train

- config_name: code-mathematica

data_files:

- path: data/code/mathematica/*/*.parquet

split: train

- config_name: code-matlab

data_files:

- path: data/code/matlab/*/*.parquet

split: train

- config_name: code-ocaml

data_files:

- path: data/code/ocaml/*/*.parquet

split: train

- config_name: code-perl

data_files:

- path: data/code/perl/*/*.parquet

split: train

- config_name: code-php

data_files:

- path: data/code/php/*/*.parquet

split: train

- config_name: code-python

data_files:

- path: data/code/python/*/*.parquet

split: train

- config_name: code-r

data_files:

- path: data/code/r/*/*.parquet

split: train

- config_name: code-racket

data_files:

- path: data/code/racket/*/*.parquet

split: train

- config_name: code-ruby

data_files:

- path: data/code/ruby/*/*.parquet

split: train

- config_name: code-rust

data_files:

- path: data/code/rust/*/*.parquet

split: train

- config_name: code-scala

data_files:

- path: data/code/scala/*/*.parquet

split: train

- config_name: code-swift

data_files:

- path: data/code/swift/*/*.parquet

split: train

- config_name: code-tex

data_files:

- path: data/code/tex/*/*.parquet

split: train

- config_name: code-typescript

data_files:

- path: data/code/typescript/*/*.parquet

split: train

- config_name: AmendementsParlement

data_files:

- path: data/natural/*/AmendementsParlement/*.parquet

split: train

- config_name: AmericanStories

data_files:

- path: data/natural/*/AmericanStories/*.parquet

split: train

- config_name: Claire

data_files:

- path: data/natural/*/Claire/*.parquet

split: train

- config_name: Claire-en

data_files:

- path: data/natural/en/Claire/*.parquet

split: train

- config_name: Claire-fr

data_files:

- path: data/natural/fr/Claire/*.parquet

split: train

- config_name: CroissantAligned

data_files:

- path: data/natural/*/CroissantAligned/*.parquet

split: train

- config_name: DiscoursPublics

data_files:

- path: data/natural/*/DiscoursPublics/*.parquet

split: train

- config_name: Europarl

data_files:

- path: data/natural/*/Europarl/*.parquet

split: train

- config_name: Europarl-de

data_files:

- path: data/natural/de/Europarl/*.parquet

split: train

- config_name: Europarl-en

data_files:

- path: data/natural/en/Europarl/*.parquet

split: train

- config_name: Europarl-es

data_files:

- path: data/natural/es/Europarl/*.parquet

split: train

- config_name: Europarl-fr

data_files:

- path: data/natural/fr/Europarl/*.parquet

split: train

- config_name: EuroparlAligned

data_files:

- path: data/natural/*/EuroparlAligned/*.parquet

split: train

- config_name: EuroparlAligned-de,fr

data_files:

- path: data/natural/de-fr/EuroparlAligned/*.parquet

split: train

- config_name: EuroparlAligned-es,en

data_files:

- path: data/natural/es-en/EuroparlAligned/*.parquet

split: train

- config_name: EuroparlAligned-fr,en

data_files:

- path: data/natural/fr-en/EuroparlAligned/*.parquet

split: train

- config_name: EuroparlAligned-it,en

data_files:

- path: data/natural/it-en/EuroparlAligned/*.parquet

split: train

- config_name: Eurovoc

data_files:

- path: data/natural/*/Eurovoc/*.parquet

split: train

- config_name: Eurovoc-de

data_files:

- path: data/natural/de/Eurovoc/*.parquet

split: train

- config_name: Eurovoc-en

data_files:

- path: data/natural/en/Eurovoc/*.parquet

split: train

- config_name: Eurovoc-es

data_files:

- path: data/natural/es/Eurovoc/*.parquet

split: train

- config_name: Eurovoc-it

data_files:

- path: data/natural/it/Eurovoc/*.parquet

split: train

- config_name: FineWebEdu

data_files:

- path: data/natural/*/FineWebEdu/*.parquet

split: train

- config_name: GallicaMonographies

data_files:

- path: data/natural/*/GallicaMonographies/*.parquet

split: train

- config_name: GallicaPress

data_files:

- path: data/natural/*/GallicaPress/*.parquet

split: train

- config_name: Gutenberg

data_files:

- path: data/natural/*/Gutenberg/*.parquet

split: train

- config_name: Gutenberg-de

data_files:

- path: data/natural/de/Gutenberg/*.parquet

split: train

- config_name: Gutenberg-en

data_files:

- path: data/natural/en/Gutenberg/*.parquet

split: train

- config_name: Gutenberg-es

data_files:

- path: data/natural/es/Gutenberg/*.parquet

split: train

- config_name: Gutenberg-fr

data_files:

- path: data/natural/fr/Gutenberg/*.parquet

split: train

- config_name: Gutenberg-it

data_files:

- path: data/natural/it/Gutenberg/*.parquet

split: train

- config_name: HAL

data_files:

- path: data/natural/*/HAL/*.parquet

split: train

- config_name: InterventionsParlement

data_files:

- path: data/natural/*/InterventionsParlement/*.parquet

split: train

- config_name: LEGI

data_files:

- path: data/natural/*/LEGI/*.parquet

split: train

- config_name: MathPile

data_files:

- path: data/natural/*/MathPile/*.parquet

split: train

- config_name: OpenData

data_files:

- path: data/natural/*/OpenData/*.parquet

split: train

- config_name: OpenEdition

data_files:

- path: data/natural/*/OpenEdition/*.parquet

split: train

- config_name: PeS2o

data_files:

- path: data/natural/*/PeS2o/*.parquet

split: train

- config_name: PeS2o-s2ag

data_files:

- path: data/natural/*/PeS2o/*s2ag.parquet

split: train

- config_name: PeS2o-s2orc

data_files:

- path: data/natural/*/PeS2o/*s2orc.parquet

split: train

- config_name: Pile

data_files:

- path: data/natural/*/Pile/*.parquet

split: train

- config_name: Pile-DM_Mathematics

data_files:

- path: data/natural/*/Pile/*DM_Mathematics.parquet

split: train

- config_name: Pile-FreeLaw

data_files:

- path: data/natural/*/Pile/*FreeLaw.parquet

split: train

- config_name: Pile-NIH_ExPorter

data_files:

- path: data/natural/*/Pile/*NIH_ExPorter.parquet

split: train

- config_name: Pile-PhilPapers

data_files:

- path: data/natural/*/Pile/*PhilPapers.parquet

split: train

- config_name: Pile-StackExchange

data_files:

- path: data/natural/*/Pile/*StackExchange.parquet

split: train

- config_name: Pile-USPTO_Backgrounds

data_files:

- path: data/natural/*/Pile/*USPTO_Backgrounds.parquet

split: train

- config_name: Pile-Ubuntu_IRC

data_files:

- path: data/natural/*/Pile/*Ubuntu_IRC.parquet

split: train

- config_name: QuestionsEcritesParlement

data_files:

- path: data/natural/*/QuestionsEcritesParlement/*.parquet

split: train

- config_name: RedPajama

data_files:

- path: data/natural/*/RedPajama/*.parquet

split: train

- config_name: RedPajama-de

data_files:

- path: data/natural/de/RedPajama/*.parquet

split: train

- config_name: RedPajama-es

data_files:

- path: data/natural/es/RedPajama/*.parquet

split: train

- config_name: RedPajama-fr

data_files:

- path: data/natural/fr/RedPajama/*.parquet

split: train

- config_name: RedPajama-it

data_files:

- path: data/natural/it/RedPajama/*.parquet

split: train

- config_name: Stac

data_files:

- path: data/natural/*/Stac/*.parquet

split: train

- config_name: TheStack

data_files:

- path: data/code/*/TheStack/*.parquet

split: train

- config_name: Theses

data_files:

- path: data/natural/*/Theses/*.parquet

split: train

- config_name: Wikipedia

data_files:

- path: data/natural/*/Wikipedia/*.parquet

split: train

- config_name: Wikipedia-de

data_files:

- path: data/natural/de/Wikipedia/*.parquet

split: train

- config_name: Wikipedia-en

data_files:

- path: data/natural/en/Wikipedia/*.parquet

split: train

- config_name: Wikipedia-es

data_files:

- path: data/natural/es/Wikipedia/*.parquet

split: train

- config_name: Wikipedia-fr

data_files:

- path: data/natural/fr/Wikipedia/*.parquet

split: train

- config_name: Wikipedia-it

data_files:

- path: data/natural/it/Wikipedia/*.parquet

split: train

- config_name: Wikisource

data_files:

- path: data/natural/*/Wikisource/*.parquet

split: train

- config_name: Wiktionary

data_files:

- path: data/natural/*/Wiktionary/*.parquet

split: train

- config_name: YouTube

data_files:

- path: data/natural/*/YouTube/*.parquet

split: train

Dataset Card

The Lucie Training Dataset is a curated collection of text data in English, French, German, Spanish and Italian culled from a variety of sources including: web data, video subtitles, academic papers, digital books, newspapers, and magazines, some of which were processed by Optical Character Recognition (OCR). It also contains samples of diverse programming languages.

The Lucie Training Dataset was used to pretrain Lucie-7B, a foundation LLM with strong capabilities in French and English.

Table of Contents:

- Dataset Description

- Example use in Python

- License

- Citation

- Contact

Dataset Description

This dataset was made to provide an extensive and diverse dataset for training Large Language Models (LLMs). Here are some of the principal features of the corpus:

- Data mix:

- The dataset contains equal amounts of French and English data -- it is in fact one of the biggest collections of French text data that has been preprocessed for LLM training -- with the aim of minimizing anglo-centric cultural biases.

- German, Spanish and Italian are also represented in small amounts.

- Code is also included to boost the reasoning capabilities of LLMs.

- Data filtering and deduplication:

- The dataset has been cleaned in an effort to remove very low-quality data.

- Duplicate data samples have been removed to some extent, following best practices.

- Ethics:

- Special care has been taken to respect copyright laws and individual privacy. All books, newspapers, monographs, and magazines are in the public domain (which depends on the author's date of death and the country of publication).

- All web data in the dataset came from sites with robots.txt files that do not forbid crawling.
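The data mix described above is exposed through the named configurations declared in this card's metadata: per language (`fr`, `en`, ...), per source (`Wikipedia`, `RedPajama`, ...), and for some source-language combinations (`Wikipedia-fr`). The sketch below copies a few of those path patterns and checks which configurations would pick up a given file. The file name `part-0.parquet` is hypothetical. The `load_subset` helper assumes the Hugging Face `datasets` library is installed, and it takes the repository id as an argument because this section does not state it.

```python
from pathlib import PurePosixPath

# A few config name -> path pattern pairs copied from the card metadata.
CONFIG_PATTERNS = {
    "default": "data/*/*/*/*parquet",
    "natural": "data/natural/*/*/*.parquet",
    "fr": "data/natural/fr/*/*parquet",
    "code": "data/code/*/*/*parquet",
    "code-python": "data/code/python/*/*.parquet",
    "Wikipedia": "data/natural/*/Wikipedia/*.parquet",
    "Wikipedia-fr": "data/natural/fr/Wikipedia/*.parquet",
}


def configs_matching(path: str) -> list[str]:
    """Return the sorted names of the configs whose pattern matches `path`."""
    p = PurePosixPath(path)
    # PurePosixPath.match compares component by component, so "*" never
    # crosses a "/" separator.
    return sorted(name for name, pattern in CONFIG_PATTERNS.items() if p.match(pattern))


def load_subset(repo_id: str, config: str):
    """Stream one config. Needs the `datasets` package and network access."""
    from datasets import load_dataset  # imported lazily so the rest runs offline

    return load_dataset(repo_id, config, split="train", streaming=True)


# Hypothetical file name, used for illustration only.
example = "data/natural/fr/Wikipedia/part-0.parquet"
matches = configs_matching(example)
print(matches)
```

Streaming is used in `load_subset` because the full corpus is far too large to download casually. Choosing the narrowest config that covers the data of interest keeps the number of files touched small.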

Dataset Structure

The corpus contains the following information for each text sample:

- `text`: the text sample itself.

- `source`: an identifier for the source(s) of the text sample (`Wikipedia`, `RedPajama`, `Gutenberg`, …). All sources are described in detail in this document.

- `id`: an identifier that is unique within the source.

- `language`: the language of the text sample (this relies on the source, so the information can be wrong). Possible values:

  - an ISO 639-1 code of a natural language: `en`, `fr`, `de`, `es`, or `it`;

  - the common name of a programming language prefixed by "`code:`": `code:python`, `code:c++`, …; or

  - a list of ISO 639-1 codes separated by commas, if the text sample is multilingual: `fr,en`, `de,fr`, `es,en`, `it,en`, or one of those pairs in the opposite order if the languages appear in the opposite order in the text.

- `url` (optional): the URL of the original text sample on the web, if available.

- `title` (optional): the title of the original text sample, if available.

- `author` (optional): the author of the original text sample, if available. Note: usually the author name in plain text, except for `Gutenberg`, where it is the JSON serialized object of the author metadata.

- `date` (optional): the publication date of the original text sample, if available. Note: the text format of the date depends on the source.

- `quality_signals` (optional): a list of quality signals about the text sample, in JSON format (that could be used for further filtering or sample weighting). Note: it can include indicators computed by `fasttext` and `CCNet`, statistics about occurrences of characters, words, special characters, etc. This field is always a JSON serialized object.

- `extra` (optional): extra information about the text sample, in JSON format. This can include metadata about the source subset, the rights, etc.

Examples of metadata (everything except `text`) are shown for each source in metadata_examples.json.
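As a minimal sketch of how these fields might be consumed, the snippet below classifies a `language` value into the three forms listed above and decodes the JSON-serialized optional fields. The sample record is made up for illustration: its values and the keys inside `quality_signals` are hypothetical, not taken from the dataset.

```python
import json


def parse_language(value: str) -> dict:
    """Classify a `language` value as natural, code, or multilingual."""
    if value.startswith("code:"):
        return {"kind": "code", "languages": [value[len("code:"):]]}
    codes = value.split(",")
    kind = "multilingual" if len(codes) > 1 else "natural"
    return {"kind": kind, "languages": codes}


def decode_json_fields(sample: dict, fields=("quality_signals", "extra")) -> dict:
    """Return a copy of `sample` with JSON-serialized optional fields decoded."""
    decoded = dict(sample)
    for name in fields:
        raw = decoded.get(name)
        # Optional fields may be missing or empty, so only decode real strings.
        if isinstance(raw, str) and raw:
            decoded[name] = json.loads(raw)
    return decoded


# Made-up record: values and quality-signal keys are hypothetical.
sample = {
    "text": "Bonjour le monde. Hello world.",
    "source": "EuroparlAligned",
    "id": "example-0",
    "language": "fr,en",
    "quality_signals": '{"word_count": 5}',
    "extra": None,
}

record = decode_json_fields(sample)
info = parse_language(record["language"])
print(info["kind"], info["languages"])
print(record["quality_signals"]["word_count"])
```

Keeping the order of the codes in a multilingual value matters, since the card states that the order reflects the order in which the languages appear in the text.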

Dataset Composition

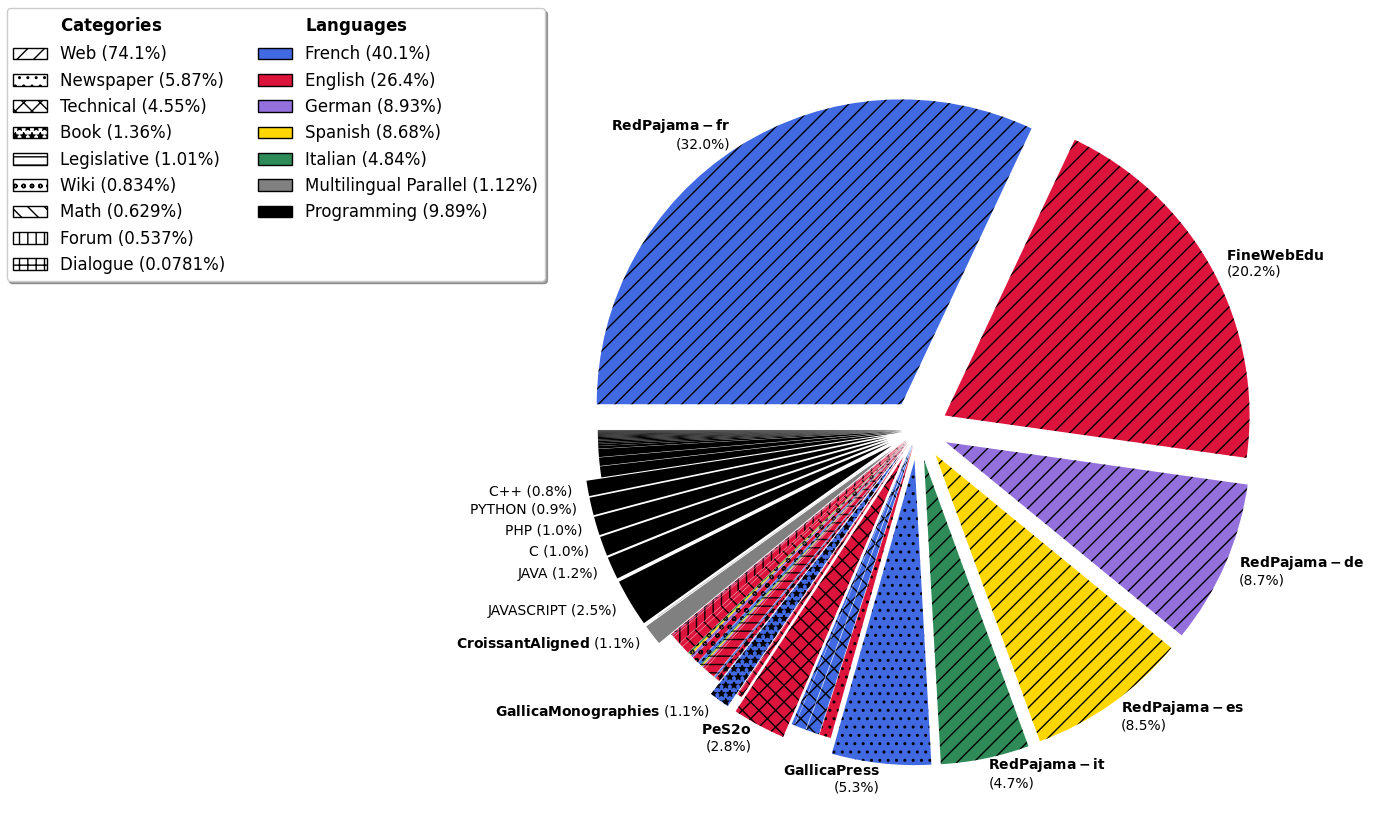

The following figure shows the distribution of the dataset by language (colors) and category (hatch patterns).

The following table provides an overview of the dataset composition, broken down by source and language. Sources are grouped by category. For each subset, the table gives the number of documents (in millions) and the numbers of words, tokens, and characters (in billions). All numbers in this table are available in the CSV file dataset_composition.csv. The number of tokens was computed using the tokenizer of the Lucie-7B LLM.

| subset | language | M docs | B words | B tokens | B chars |
|---|---|---|---|---|---|
| TOTAL | | 2186.562 | 1356.021 | 2314.862 | 8842.200 |
| | French (fr) | 653.812 | 583.687 | 928.618 | 3619.672 |
| | English (en) | 554.289 | 412.202 | 611.894 | 2553.541 |
| | code | 125.769 | 51.306 | 228.954 | 630.749 |
| | German (de) | 165.915 | 105.609 | 206.610 | 764.779 |
| | Spanish (es) | 171.651 | 123.857 | 200.825 | 759.457 |
| | Italian (it) | 99.440 | 62.051 | 112.031 | 404.454 |
| | fr-en | 410.032 | 17.016 | 25.494 | 107.658 |
| | it-en | 1.901 | 0.100 | 0.151 | 0.638 |
| | es-en | 1.961 | 0.103 | 0.143 | 0.631 |
| | de-fr | 1.792 | 0.0908 | 0.141 | 0.621 |
| Category: Web | | | | | |
| RedPajama | French (fr) | 640.770 | 477.758 | 741.023 | 2974.596 |
| | German (de) | 162.779 | 103.078 | 201.371 | 747.631 |
| | Spanish (es) | 169.447 | 121.751 | 197.125 | 746.984 |
| | Italian (it) | 97.324 | 60.194 | 108.416 | 393.012 |
| FineWebEdu | English (en) | 421.209 | 327.453 | 467.837 | 2018.215 |
| Category: Newspaper | | | | | |
| GallicaPress | French (fr) | 3.205 | 67.496 | 121.606 | 408.882 |
| AmericanStories | English (en) | 59.420 | 8.902 | 14.313 | 50.844 |
| Category: Technical | | | | | |
| PeS2o | English (en) | 38.972 | 42.296 | 65.365 | 268.963 |
| HAL | French (fr) | 0.349 | 9.356 | 16.224 | 58.308 |
| Theses | French (fr) | 0.102 | 7.547 | 14.060 | 47.758 |
| Pile (USPTO_Backgrounds) | English (en) | 5.139 | 3.492 | 5.105 | 22.309 |
| OpenEdition | French (fr) | 0.939 | 2.225 | 3.604 | 14.459 |
| Pile (PhilPapers) | English (en) | 0.0308 | 0.363 | 0.618 | 2.304 |
| Pile (NIH_ExPorter) | English (en) | 0.914 | 0.288 | 0.431 | 1.979 |
| Category: Book | | | | | |
| GallicaMonographies | French (fr) | 0.278 | 15.106 | 25.169 | 90.456 |
| Gutenberg | English (en) | 0.0563 | 3.544 | 5.516 | 20.579 |
| | French (fr) | 0.00345 | 0.227 | 0.383 | 1.392 |
| | German (de) | 0.00188 | 0.0987 | 0.193 | 0.654 |
| | Italian (it) | 0.000958 | 0.0657 | 0.129 | 0.414 |
| | Spanish (es) | 0.000735 | 0.0512 | 0.0920 | 0.303 |
| Category: Legislative Texts | | | | | |
| Pile (FreeLaw) | English (en) | 3.415 | 8.204 | 14.011 | 52.580 |
| Eurovoc | English (en) | 0.272 | 1.523 | 2.571 | 9.468 |
| | Italian (it) | 0.245 | 0.731 | 1.527 | 4.867 |
| | German (de) | 0.247 | 0.678 | 1.497 | 4.915 |
| | Spanish (es) | 0.246 | 0.757 | 1.411 | 4.684 |
| OpenData | French (fr) | 1.169 | 0.755 | 1.209 | 4.638 |
| QuestionsEcritesParlement | French (fr) | 0.189 | 0.108 | 0.156 | 0.705 |
| LEGI | French (fr) | 0.621 | 0.0878 | 0.145 | 0.563 |
| AmendementsParlement | French (fr) | 0.673 | 0.0452 | 0.0738 | 0.274 |
| Category: Legislative Transcripts | | | | | |
| Europarl | German (de) | 0.0102 | 0.0451 | 0.0734 | 0.327 |
| | Spanish (es) | 0.0103 | 0.0524 | 0.0733 | 0.325 |
| | French (fr) | 0.0103 | 0.0528 | 0.0717 | 0.339 |
| | English (en) | 0.0111 | 0.0563 | 0.0690 | 0.339 |
| DiscoursPublics | French (fr) | 0.110 | 0.163 | 0.238 | 1.025 |
| InterventionsParlement | French (fr) | 1.832 | 0.104 | 0.157 | 0.654 |
| Category: Wiki | | | | | |
| Wikipedia | English (en) | 6.893 | 4.708 | 7.898 | 26.616 |
| | German (de) | 2.877 | 1.709 | 3.476 | 11.252 |
| | French (fr) | 2.648 | 1.726 | 2.940 | 9.879 |
| | Spanish (es) | 1.947 | 1.245 | 2.124 | 7.161 |
| | Italian (it) | 1.870 | 1.060 | 1.959 | 6.161 |
| Wikisource | French (fr) | 0.186 | 0.523 | 0.795 | 3.080 |
| Wiktionary | French (fr) | 0.650 | 0.0531 | 0.117 | 0.347 |
| Category: Math | | | | | |
| MathPile | English (en) | 0.737 | 3.408 | 9.637 | 27.290 |
| Pile (DM_Mathematics) | English (en) | 0.992 | 1.746 | 4.928 | 8.127 |
| Category: Forum | | | | | |
| Pile (StackExchange) | English (en) | 15.269 | 4.534 | 10.275 | 33.609 |
| Pile (Ubuntu_IRC) | English (en) | 0.0104 | 0.867 | 2.159 | 5.610 |
| Category: Dialogue | | | | | |
| Claire | English (en) | 0.949 | 0.818 | 1.161 | 4.709 |
| | French (fr) | 0.0393 | 0.210 | 0.311 | 1.314 |
| YouTube | French (fr) | 0.0375 | 0.145 | 0.336 | 1.003 |
| Stac | English (en) | 0.0000450 | 0.0000529 | 0.000121 | 0.000327 |
| Category: Multilingual Parallel Corpora | | | | | |
| CroissantAligned | fr-en | 408.029 | 16.911 | 25.351 | 107.003 |
| EuroparlAligned | it-en | 1.901 | 0.100 | 0.151 | 0.638 |
| | fr-en | 2.003 | 0.105 | 0.143 | 0.655 |
| | es-en | 1.961 | 0.103 | 0.143 | 0.631 |
| | de-fr | 1.792 | 0.0908 | 0.141 | 0.621 |
| Category: Programming | | | | | |
| TheStack | JAVASCRIPT | 21.109 | 8.526 | 58.609 | 141.647 |
| | JAVA | 20.152 | 7.421 | 27.680 | 89.297 |
| | C | 8.626 | 5.916 | 24.092 | 57.428 |
| | PHP | 15.905 | 4.865 | 22.883 | 66.844 |
| | PYTHON | 12.962 | 5.434 | 21.683 | 64.304 |
| | C++ | 6.378 | 4.584 | 18.835 | 50.892 |
| | C# | 10.839 | 3.574 | 13.381 | 46.286 |
| | GO | 4.730 | 2.735 | 10.262 | 25.738 |
| | TYPESCRIPT | 10.637 | 2.617 | 9.836 | 28.815 |
| | RUST | 1.387 | 0.872 | 3.241 | 9.529 |
| | RUBY | 3.405 | 0.646 | 2.392 | 7.139 |
| | SWIFT | 1.756 | 0.553 | 1.876 | 6.134 |
| | KOTLIN | 2.243 | 0.454 | 1.758 | 5.769 |
| | SCALA | 1.362 | 0.457 | 1.587 | 4.862 |
| | TEX | 0.398 | 0.394 | 1.507 | 3.805 |
| | LUA | 0.559 | 0.318 | 1.367 | 3.279 |
| | DART | 0.933 | 0.308 | 1.242 | 3.864 |
| | PERL | 0.392 | 0.297 | 1.149 | 2.634 |
| | MATHEMATICA | 0.0269 | 0.120 | 1.117 | 1.720 |
| | ASSEMBLY | 0.248 | 0.209 | 0.867 | 1.575 |
| | HASKELL | 0.545 | 0.307 | 0.807 | 2.364 |
| | FORTRAN | 0.165 | 0.192 | 0.780 | 1.843 |
| | JULIA | 0.299 | 0.152 | 0.660 | 1.539 |
| | OCAML | 0.160 | 0.130 | 0.430 | 1.107 |
| | ERLANG | 0.0994 | 0.0657 | 0.260 | 0.726 |
| | ELIXIR | 0.282 | 0.0731 | 0.258 | 0.737 |
| | CLOJURE | 0.126 | 0.0448 | 0.179 | 0.492 |
| | R | 0.0392 | 0.0278 | 0.158 | 0.305 |
| | MATLAB | 0.000967 | 0.00865 | 0.0427 | 0.0372 |
| | RACKET | 0.00420 | 0.00479 | 0.0153 | 0.0378 |
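To make the per-language totals easier to compare, the short sketch below derives two quantities from the TOTAL rows of the table: each language's share of all tokens, and the average number of tokens per word. The figures are copied from the table; the variable names are illustrative.

```python
# Billions of tokens per language, copied from the TOTAL rows of the table.
TOKENS_B = {
    "fr": 928.618, "en": 611.894, "code": 228.954, "de": 206.610,
    "es": 200.825, "it": 112.031, "fr-en": 25.494, "it-en": 0.151,
    "es-en": 0.143, "de-fr": 0.141,
}
# Billions of words for the same rows.
WORDS_B = {
    "fr": 583.687, "en": 412.202, "code": 51.306, "de": 105.609,
    "es": 123.857, "it": 62.051, "fr-en": 17.016, "it-en": 0.100,
    "es-en": 0.103, "de-fr": 0.0908,
}
TOTAL_TOKENS_B = 2314.862  # overall TOTAL row

# Fraction of all tokens contributed by each language.
shares = {lang: n / TOTAL_TOKENS_B for lang, n in TOKENS_B.items()}
# Average tokens per word, using the token and word counts from the table.
tokens_per_word = {lang: TOKENS_B[lang] / WORDS_B[lang] for lang in TOKENS_B}

for lang in sorted(shares, key=shares.get, reverse=True):
    print(f"{lang:6s} {100 * shares[lang]:6.2f}% of tokens, "
          f"{tokens_per_word[lang]:.2f} tokens/word")
```

With these figures, French accounts for roughly 40% of all tokens and English for about 26%. Code needs noticeably more tokens per word than any natural language in the table.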

Details on Data Sources

AmendementsParlement

- Source: Corpus contributed by OpenLLM partners.

- Extracted from: Regards citoyens (nosdeputes.fr, nossenateurs.fr). API. License: CC BY-SA.

- Description: A collection of amendments proposed in the French parliament, each comprising the legal text and a description of the requested modification.

- Citation: No paper found.

AmericanStories

- Source: dell-research-harvard/AmericanStories. License: CC BY 4.0.

- Extracted from: Chronicling America. License: Open.

- Description: "The American Stories dataset is a collection of full article texts extracted from historical U.S. newspaper images. It includes nearly 20 million scans from the public domain Chronicling America collection maintained by the Library of Congress. The dataset is designed to address the challenges posed by complex layouts and low OCR quality in existing newspaper datasets" (from the dataset card). Dataset containing text retrieved through OCR.

- Citation: Melissa Dell, Jacob Carlson, Tom Bryan, Emily Silcock, Abhishek Arora, Zejiang Shen, Luca D'Amico-Wong, Quan Le, Pablo Querubin and Leander Heldring (2023). "American Stories: A Large-Scale Structured Text Dataset of Historical U.S. Newspapers," arXiv:2308.12477.

Claire (French and English)

- Sources:

- French dataset: OpenLLM-France/Claire-Dialogue-French-0.1. License: CC BY-NC-SA 4.0.

- English dataset: OpenLLM-France/Claire-Dialogue-English-0.1. License: CC BY-NC-SA 4.0.

- Extracted from: see the datacards for the French and English datasets.

- Description: The Claire datasets are composed of transcripts of spoken conversations -- including parliamentary proceedings, interviews, debates, meetings, and free conversations -- as well as some written conversations from theater plays and written chats. The dataset is designed to help downstream performance of models fine-tuned for tasks requiring the comprehension of spontaneous spoken conversation, such as meeting summarization. Each dialogue is split into speech turns, and each speech turn is labeled with the name of the speaker or a unique identifier.

- Citation: Julie Hunter, Jérôme Louradour, Virgile Rennard, Ismaïl Harrando, Guokan Shang, Jean-Pierre Lorré (2023). The Claire French Dialogue Dataset. arXiv:2311.16840.

CroissantAligned

- Source: croissantllm/croissant_dataset_no_web_data (subset: `aligned_36b`). License: not specified.

- Extracted from:

- Translation pairs: OPUS (99.6% of the data in CroissantAligned). Pairs extracted from OPUS are labeled as "UnbabelFrEn". License: .

- Thesis abstracts: French thesis abstract pairs. License: ETALAB-Licence-Ouverte-v2.0.

- Song lyrics: lacoccinelle. License: .

- Description: Data extracted from OPUS takes the form of sentence pairs, where one sentence is in French and the other is in English. OPUS pairs were passed through a custom pipeline designed to select the highest-quality sentence pairs. Selected pairs are labeled "UnbabelFrEn" in the CroissantAligned dataset. The thesis abstract subset contains French or English thesis abstracts paired with translations written by the thesis author. The song lyrics are translated by contributors to www.lacoccinelle.net. Parallel data are used to boost the multilingual capabilities of models trained on them (Faysse et al., 2024).

- Citation: Manuel Faysse, Patrick Fernandes, Nuno M. Guerreiro, António Loison, Duarte M. Alves, Caio Corro, Nicolas Boizard, João Alves, Ricardo Rei, Pedro H. Martins, Antoni Bigata Casademunt, François Yvon, André F.T. Martins, Gautier Viaud, Céline Hudelot, Pierre Colombo (2024). "CroissantLLM: A Truly Bilingual French-English Language Model," arXiv:2402.00786.

DiscoursPublics

- Source: Corpus contributed by OpenLLM partners.

- Extracted from: Vie Publique.

- Description: A collection of public speeches from the principal public actors in France including speeches from the French President starting from 1974 and from the Prime Minister and members of the government starting from 1980.

- Citation: No paper found.

Europarl (monolingual and parallel)

- Sources:

- `fr-en`, `es-en`, `it-en` parallel data: Europarl v7. License: Open.

- `fr`, `en`, `de`, `es` monolingual data and `de-fr` parallel data: Europarl v10. License: Open.

- Description: "The Europarl parallel corpus is extracted from the proceedings of the European Parliament. It includes versions in 21 European languages: Romanic (French, Italian, Spanish, Portuguese, Romanian), Germanic (English, Dutch, German, Danish, Swedish), Slavik (Bulgarian, Czech, Polish, Slovak, Slovene), Finni-Ugric (Finnish, Hungarian, Estonian), Baltic (Latvian, Lithuanian), and Greek. The goal of the extraction and processing was to generate sentence aligned text for statistical machine translation systems" (www.statmt.org).

- Citation: Philipp Koehn (2005). "Europarl: A Parallel Corpus for Statistical Machine Translation," MT Summit.

Eurovoc

- Source: EuropeanParliament/Eurovoc. License: EUPL 1.1.

- Extracted from: Cellar. License: Open.

- Description: A collection of multilingual documents from the data repository of the Publications Office of the European Union annotated with Eurovoc labels. Dataset containing text retrieved through OCR.

- Citations:

- Ilias Chalkidis, Emmanouil Fergadiotis, Prodromos Malakasiotis, Nikolaos Aletras, and Ion Androutsopoulos (2019). "Extreme Multi-Label Legal Text Classification: A Case Study in EU Legislation," Proceedings of the Natural Legal Language Processing Workshop 2019, pages 78–87, Minneapolis, Minnesota. Association for Computational Linguistics.

- Ilias Chalkidis, Manos Fergadiotis, Prodromos Malakasiotis and Ion Androutsopoulos (2019). "Large-Scale Multi-Label Text Classification on EU Legislation," Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics (ACL 2019), Florence, Italy, (short papers).

- Andrei-Marius Avram, Vasile Pais, and Dan Ioan Tufis (2021). "PyEuroVoc: A Tool for Multilingual Legal Document Classification with EuroVoc Descriptors," Proceedings of the International Conference on Recent Advances in Natural Language Processing (RANLP 2021), pages 92–101, Held Online. INCOMA Ltd.

- Zein Shaheen, Gerhard Wohlgenannt and Erwin Filtz (2020). "Large scale legal text classification using transformer models," arXiv:2010.12871.

FineWebEdu

- Source: HuggingFaceFW/fineweb-edu. License: ODC-BY.

- Extracted from: FineWeb. License: ODC-BY.

- Description: A 1.3 trillion token selection from FineWeb, which contains 15 trillion tokens of curated data from 96 Common Crawl dumps. Content in FineWebEdu has been selected by a custom designed classifier for its high-quality, educational content. Knowledge cutoff: 2019-2024.

- Citation: Guilherme Penedo, Hynek Kydlíček, Loubna Ben allal, Anton Lozhkov, Margaret Mitchell, Colin Raffel, Leandro Von Werra, Thomas Wolf (2024). "The FineWeb Datasets: Decanting the Web for the Finest Text Data at Scale," arXiv:2406.17557.

GallicaMonographies

- Source: Corpus contributed by OpenLLM partners. A version is also published here: PleIAs/French-PD-Books. License: None (public domain).

- Extracted from: Gallicagram.

- Description: A large collection of French monographs in the public domain made available through the French National Library (Gallica). Dataset containing text retrieved through OCR.

- Citation: No paper found.

GallicaPress

- Source: Corpus contributed by OpenLLM partners. A version is also published here: PleIAs/French-PD-Newspapers. License: None (public domain).

- Extracted from: Gallicagram.

- Description: A large collection of French newspapers and periodicals in the public domain made available through the French National Library (Gallica). Dataset containing text retrieved through OCR.

- Citation: No paper found.

Gutenberg

- Source: Corpus compiled by OpenLLM partners.

- Extracted from:

- aleph.gutenberg.org via Project Gutenberg. License: Open.

- pgcorpus. License: CC BY 4.0.

- Description: A collection of free eBooks, manually prepared by human annotators.

- Citation: No paper found.

HAL

- Source: The ROOTS corpus by BigScience (unpublished). License: CC BY 4.0.

- Extracted from: HAL.

- Description: A collection of scientific papers and manuscripts distributed through an open science platform. Dataset containing text retrieved through OCR.

- Citation: Hugo Laurençon, Lucile Saulnier, Thomas Wang, Christopher Akiki, Albert Villanova del Moral, Teven Le Scao, Leandro Von Werra, Chenghao Mou, Eduardo González Ponferrada, Huu Nguyen, Jörg Frohberg, Mario Šaško, Quentin Lhoest, Angelina McMillan-Major, Gerard Dupont, Stella Biderman, Anna Rogers, Loubna Ben allal, Francesco De Toni, Giada Pistilli, Olivier Nguyen, Somaieh Nikpoor, Maraim Masoud, Pierre Colombo, Javier de la Rosa, Paulo Villegas, Tristan Thrush, Shayne Longpre, Sebastian Nagel, Leon Weber, Manuel Muñoz, Jian Zhu, Daniel Van Strien, Zaid Alyafeai, Khalid Almubarak, Minh Chien Vu, Itziar Gonzalez-Dios, Aitor Soroa, Kyle Lo, Manan Dey, Pedro Ortiz Suarez, Aaron Gokaslan, Shamik Bose, David Adelani, Long Phan, Hieu Tran, Ian Yu, Suhas Pai, Jenny Chim, Violette Lepercq, Suzana Ilic, Margaret Mitchell, Sasha Alexandra Luccioni, Yacine Jernite (2022). The BigScience ROOTS Corpus: A 1.6TB Composite Multilingual Dataset. Advances in Neural Information Processing Systems (NeurIPS), 35, 31809-31826.

InterventionsParlement

- Source: Corpus contributed by OpenLLM partners.

- Extracted from: Regards citoyens (nosdeputes.fr, nossenateurs.fr). API. License: CC BY-SA.

- Description: Transcripts of speeches made during French parliamentary debates.

- Citation: No paper found.

MathPile

- Source: GAIR/MathPile_Commercial. License: CC BY-SA 4.0

- Extracted from: MathPile. License: CC BY-NC-SA 4.0.

- Description: A preprocessed collection of documents focused on math, including Textbooks, arXiv, Wikipedia, ProofWiki, StackExchange, and web pages from Common Crawl. The content targets a range of levels, from kindergarten through postgraduate level. MathPile_Commercial was obtained by removing documents from MathPile that do not allow commercial use.

- Citation: Zengzhi Wang, Rui Xia and Pengfei Liu (2023). "Generative AI for Math: Part I -- MathPile: A Billion-Token-Scale Pretraining Corpus for Math," arXiv:2312.17120.

OpenData

- Source: Nicolas-BZRD/DILA_OPENDATA_FR_2023 (balo, dole, inca, kali, legi and sarde subsets). License: ODC-BY.

- Extracted from: OpenData (Data collection date: October, 2023).

- Description: "The French Government Open Data (DILA) Dataset is a collection of text data extracted from various sources provided by the French government, specifically the Direction de l'information légale et administrative (DILA). This dataset contains a wide range of legal, administrative, and legislative documents. The data has been organized into several categories for easy access and analysis" (from the dataset card).

- Citation: No paper found.

OpenEdition

- Source: Corpus contributed by OpenLLM partners.

- Extracted from: Open Edition.

- Description:

- Citation: No paper found.

PeS2o

- Source: allenai/peS2o. License: ODC-BY v1.0.

- Extracted from: S2ORC (see aclanthology). Knowledge cutoff: 2023-01-03.

- Description: A preprocessed collection of academic papers designed for pre-training of language models. PeS2o is composed of two subsets: one containing full papers and one containing only paper titles and abstracts. Some of the text was retrieved through OCR.

- Citation: Luca Soldaini and Kyle Lo (2023). "peS2o (Pretraining Efficiently on S2ORC) Dataset," Allen Institute for AI. GitHub.

Pile (Uncopyrighted)

- Source: monology/pile-uncopyrighted. License: Other.

- Extracted from: FreeLaw, StackExchange, USPTO Backgrounds, DM Mathematics, Ubuntu IRC, PhilPapers, NIH ExPORTER from The Pile. License: MIT.

- Description (from the Datasheet):

- FreeLaw: "The Free Law Project is US registered non-profit that provide access to millions of legal opinions and analytical tools for academic studies in the legal realm."

- StackExchange: "The StackExchange dataset is a dump of anonymized user-contributed content on the Stack Exchange network, a popular collection of websites centered around user-contributed questions and answers."

- USPTO Backgrounds: "The USPTO Backgrounds dataset is a set of background sections from patents granted by the United States Patent and Trademark Office, derived from its published bulk archives."

- DM Mathematics: "The DeepMind Mathematics dataset consists of a collection of mathematical problems such as algebra, arithmetic, calculus, number theory, and probability, formatted as natural language prompts (Saxton et al., 2019)."

- Ubuntu IRC: "The Ubuntu IRC dataset is derived from the publicly available chatlogs of all Ubuntu-related channels on the Freenode IRC chat server."

- PhilPapers: a dataset of open access philosophy publications from an international database maintained by the Center for Digital Philosophy at the University of Western Ontario.

- NIH ExPORTER: "The NIH Grant abstracts provides a bulk-data repository for awarded applications through the ExPORTER service covering the fiscal years 1985-present."

- Citation:

- Leo Gao, Stella Biderman, Sid Black, Laurence Golding, Travis Hoppe, Charles Foster, Jason Phang, Horace He, Anish Thite, Noa Nabeshima, Shawn Presser, Connor Leahy (2020). "The Pile: An 800GB Dataset of Diverse Text for Language Modeling," arXiv:2101.00027.

- Stella Biderman, Kieran Bicheno, Leo Gao (2022). "Datasheet for the Pile," arXiv:2201.07311.

QuestionsEcritesParlement

- Source: Corpus contributed by OpenLLM partners.

- Extracted from: Regards citoyens (text). License: CC BY-NC-SA.

- Description: Collection of long written questions, read during a session at the French National Assembly. Questions are asked by a member of the French parliament and addressed to a minister (who is given two months to respond).

- Citation: No paper found.

RedPajama (v2)

- Source: togethercomputer/RedPajama-Data-V2. License: Apache 2.0 (data preparation code), Not specified (data) but see Common Crawl terms of use.

- Extracted from: Common Crawl.

- Description: "RedPajama-V2 is an open dataset for training large language models. The dataset includes over 100B text documents coming from 84 CommonCrawl snapshots and processed using the CCNet pipeline. Out of these, there are 30B documents in the corpus that additionally come with quality signals, and 20B documents that are deduplicated" (from GitHub). Knowledge cutoff: 2014-2023.

- Citation: Together Computer (2023). "RedPajama-Data-v2: an Open Dataset with 30 Trillion Tokens for Training Large Language Models," GitHub.

STAC

- Source: STAC. License: CC BY-NC-SA 4.0.

- Extracted from: STAC. The full STAC corpus contains annotations for discourse structure. We use only the text of the chats.

- Description: A collection of chats from an online version of the game Settlers of Catan.

- Citation: Nicholas Asher, Julie Hunter, Mathieu Morey, Farah Benamara and Stergos Afantenos (2016). "Discourse structure and dialogue acts in multiparty dialogue: the STAC corpus," The Tenth International Conference on Language Resources and Evaluation (LREC 2016). European Language Resources Association, pp. 2721-2727.

TheStack

- Source: bigcode/the-stack-dedup. License: Other (mixture of copyleft licenses).

- Extracted from: GHarchive

- Description: "The Stack contains over 6TB of permissively-licensed source code files covering 358 programming languages. The dataset was created as part of the BigCode Project, an open scientific collaboration working on the responsible development of Large Language Models for Code (Code LLMs). The Stack serves as a pre-training dataset for Code LLMs, i.e., code-generating AI systems which enable the synthesis of programs from natural language descriptions as well as other from code snippets. This is the near-deduplicated version with 3TB data" (from the dataset card).

- Citation: Denis Kocetkov, Raymond Li, Loubna Ben Allal, Jia Li, Chenghao Mou, Carlos Muñoz Ferrandis, Yacine Jernite, Margaret Mitchell, Sean Hughes, Thomas Wolf, Dzmitry Bahdanau, Leandro von Werra and Harm de Vries (2022). "The Stack: 3 TB of permissively licensed source code," arXiv:2211.15533.

Theses

- Source: Corpus contributed by OpenLLM partners.

- Extracted from: theses.fr and HAL.

- Description: A collection of doctoral theses published in France. Dataset containing text retrieved through OCR.

- Citation: No paper found.

Wikipedia, Wikisource, Wiktionary

- Source: Corpus contributed by LINAGORA Labs (OpenLLM-France). Also published here:

- Extracted from: Wikimedia dumps. License: GFDL/CC BY-SA.

- Description:

- Citation: No paper found.

YouTube

- Source: Corpus contributed by LINAGORA Labs (OpenLLM-France).

- Extracted from: YouTube. License: .

- Description: French subtitles from videos published with permissive licenses on YouTube.

- Citation: No paper found.

Example use in Python

Load the dataset using the datasets library:

from datasets import load_dataset

kwargs = {"split": "train", "streaming": True}

dataset = load_dataset("OpenLLM-France/Lucie-Training-Dataset", **kwargs)

for sample in dataset:

    text = sample["text"]

    # ... do something with the text
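Since a streamed dataset is iterated lazily, it can be handy to look at only the first few samples instead of looping over everything. The following is a minimal sketch, assuming each sample is a dict with a "text" field as in the loop above. The helper name `preview` is hypothetical (it is not part of the datasets library), and the in-memory list only stands in for the streamed dataset so that the snippet runs without network access.

```python
from itertools import islice
from typing import Dict, Iterable, List


def preview(samples: Iterable[Dict[str, str]], n: int = 3, width: int = 80) -> List[str]:
    """Return the first `width` characters of the text of the first `n` samples."""
    # islice stops after n items, so a lazily streamed dataset is not consumed further.
    return [sample["text"][:width] for sample in islice(samples, n)]


# Stand-in for a streamed dataset (hypothetical data, for illustration only).
fake_dataset = [{"text": f"document number {i}"} for i in range(10)]

snippets = preview(fake_dataset, n=2, width=10)
print(snippets)
```

With the real data, the same helper should work on the object returned by load_dataset with streaming enabled, for example preview(dataset, n=5).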

Several configurations are available to select a language, a source, or both, illustrated in the following examples.

Load data in French:

dataset = load_dataset("OpenLLM-France/Lucie-Training-Dataset", "fr", **kwargs)

Load data where French and English are aligned:

dataset = load_dataset("OpenLLM-France/Lucie-Training-Dataset", "fr,en", **kwargs)

Load source code data (all programming languages):

dataset = load_dataset("OpenLLM-France/Lucie-Training-Dataset", "code", **kwargs)

Load Python source code:

dataset = load_dataset("OpenLLM-France/Lucie-Training-Dataset", "code-python", **kwargs)

Load data from Wikipedia (in available languages):

dataset = load_dataset("OpenLLM-France/Lucie-Training-Dataset", "Wikipedia", **kwargs)

Load data from French pages of Wikipedia (wikipedia.fr):

dataset = load_dataset("OpenLLM-France/Lucie-Training-Dataset", "Wikipedia-fr", **kwargs)
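The configuration names used in the examples above appear to follow a simple pattern: a language alone ("fr"), a source alone ("Wikipedia"), or a source and a language joined by a hyphen ("Wikipedia-fr", "code-python"). The sketch below composes such names. The function `config_name` is a hypothetical helper, and the pattern is an assumption inferred from these examples: not every combination necessarily exists, so the list of configurations on the dataset card remains the reference.

```python
from typing import Optional


def config_name(source: Optional[str] = None, language: Optional[str] = None) -> str:
    """Compose a configuration name following the pattern seen in the examples.

    Assumption: names look like "<source>-<language>", "<source>", "<language>",
    or "default" when nothing is selected. The result is not checked against
    the configurations that actually exist.
    """
    if source and language:
        return f"{source}-{language}"
    return source or language or "default"


print(config_name(language="fr"))                      # fr
print(config_name(language="fr,en"))                   # fr,en
print(config_name(source="Wikipedia"))                 # Wikipedia
print(config_name(source="Wikipedia", language="fr"))  # Wikipedia-fr
print(config_name(source="code", language="python"))   # code-python
```

The returned string can then be passed as the second argument of load_dataset, in place of the literal names used above.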

License

TODO

Citation

TODO

Contact

[email protected]