Crowd-sourced Open Preference Dataset for Text-to-Image Generation

In the rapidly evolving field of text-to-image generation, human preference datasets have been shown to have a great impact on model quality and alignment. The outputs of these models are hard to measure in a meaningful way, and it is harder still to obtain a quantifiable metric of subjective quality to evaluate or train against. Human preferences, collected in sufficient quantity and quality, can provide this metric.

Collecting such a dataset has one key challenge, though: it requires a large base of human annotators to gather enough preferences in a workable timespan, which traditionally comes at a huge cost.

Due to these limitations, publicly available human preference datasets are scarce, limiting the community's ability to develop cutting-edge models.

The Hugging Face community “Data is better together” set out to change this, putting out an open call to collect preferences for 17,000 image pairs in their Open Preferences Dataset V1.

TL;DR: We wanted to contribute and have collected over 170,000 human preferences from 49,241 individual annotators from across the globe in less than 2 days.

The Input Dataset

The input dataset is described in detail in the blog post that the “Data is better together” community published alongside the dataset. Below is a short summary:

Overview

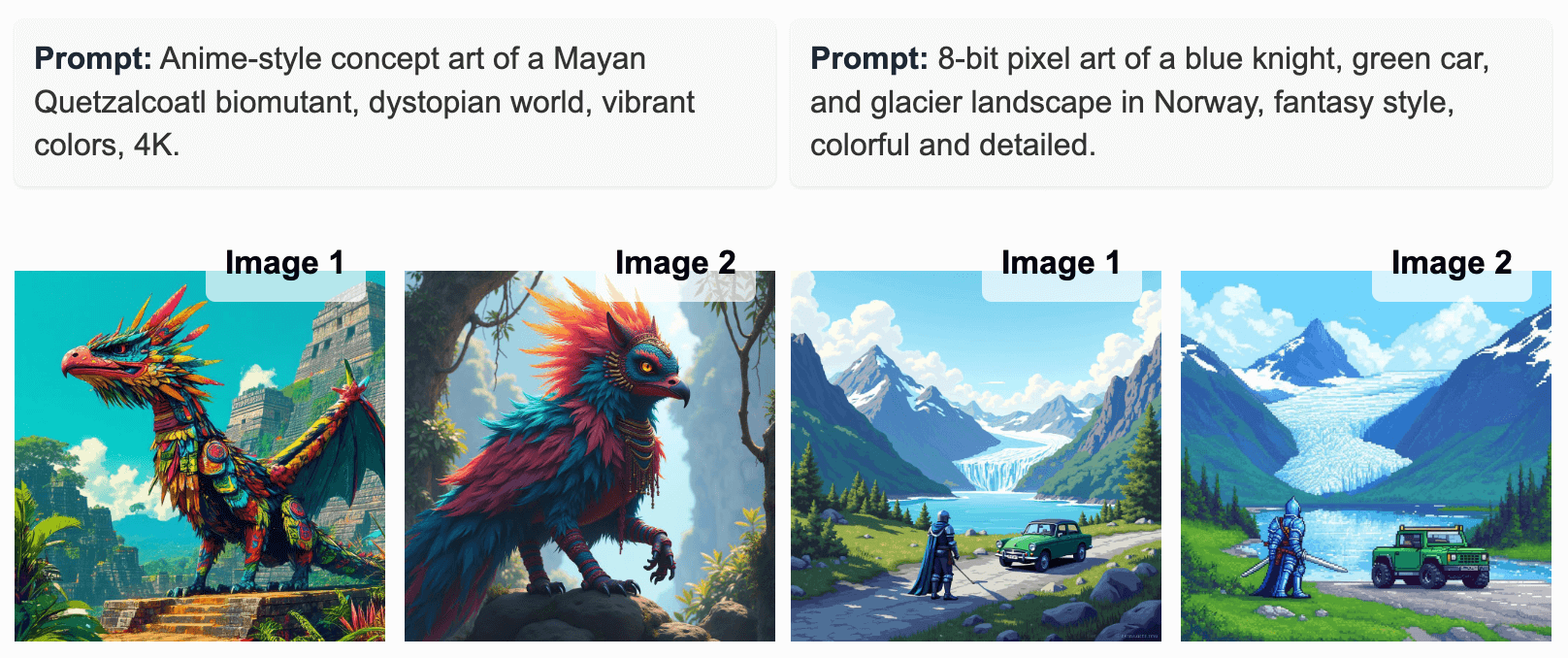

The open-image-preferences-v1 dataset was created using cleaned and filtered prompts, enhanced with synthetic data generation via Distilabel. Images were generated using Flux and Stable Diffusion models.

Input Prompts and Filtering

Prompts from Imgsys were filtered for duplicates and toxicity using automated classifiers (text/image) and manual reviews. NSFW content was removed, ensuring safe and high-quality data.

Synthetic Prompt Enhancement

Prompts were rewritten into diverse categories (e.g., Cinematic, Anime, Neonpunk) and varying complexities (simplified vs. detailed). For example:

- Default: "A harp without strings."

- Stylized: "A harp without strings, in an anime style, set against a pastel background."

- Quality: "A harp without strings, in anime style, with rich textures, golden-hour light."

Image Generation

Images were created using:

- stabilityai/stable-diffusion-3.5-large

- black-forest-labs/FLUX.1-dev

Both models generated images for simplified and complex prompts within the same categories.

The Results

The “Data is better together” community supplied 34k images, organized in 17k pairs, which were generated from two versions of 8.5k base prompts (one simple and one stylized/quality prompt with the same core semantics). The images are available from the open-image-preferences-v1 dataset. In their associated results dataset, they provide human preferences for 10k of the 17k pairs. For each pair, ~3 preferences were collected. To expand on the dataset, we have collected 10 preferences for each of the 17k pairs, resulting in a total of 170k preferences, using the API of our Rapidata annotation platform.
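As a quick sanity check, the arithmetic behind these figures can be written out as follows. This is a back-of-the-envelope sketch that uses the rounded numbers quoted in this post (8.5k, 10k, ~3, 49,241), not exact dataset counts, and the variable names are our own.

```python
# Rounded figures quoted in this post (not exact dataset counts).
base_prompts = 8_500      # base prompts
prompt_versions = 2       # one simple and one stylized/quality version
images_per_pair = 2       # every pair compares two images

pairs = base_prompts * prompt_versions    # one image pair per prompt version
images = pairs * images_per_pair

original_preferences = 10_000 * 3         # ~3 preferences for 10k of the pairs
our_preferences = pairs * 10              # 10 preferences for every pair

annotators = 49_241                       # annotator count reported in this post
per_annotator = our_preferences / annotators

print(pairs, images, original_preferences, our_preferences)
print(round(per_annotator, 2))
```

With these inputs the totals come out at 17k pairs, 34k images, and 170k preferences, or a little under 3.5 preferences per annotator.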

Main Differences

Our approach to preference collection means that the new dataset differs from the original in the following ways:

- Our annotators select between just the two image options, whereas the original dataset additionally includes the options “both good” and "both bad". However, due to the higher volume of preferences, equally good images can be inferred from an even preference distribution (see section: Annotator Alignment).

- Our preferences are collected from a much larger and more diverse set of annotators. Whereas each annotator in the original dataset provided 120 preferences on average, our annotators provided 3.5 preferences on average. Moreover, roughly half of the annotations in the original set were provided by five annotators. This means that our dataset should be much less prone to individual annotator bias.

- The higher number of preferences per image pair allows a more nuanced analysis: by looking at the preference distribution, you can quantify the extent to which one image is preferred over the other.

- Our dataset provides additional metadata for each preference, such as the country and language of the annotator. This data is available in the `detailed_results` column of the dataset.
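To make the last two points concrete, here is a minimal sketch of how a preference distribution and a per-country breakdown could be computed for a single image pair. The record layout and the field names `choice`, `country`, and `language` are illustrative assumptions, not the actual schema of the dataset's metadata column, and the votes are made-up toy data.

```python
from collections import Counter

# Toy per-preference records for ONE image pair. The field names are
# hypothetical and do not reflect the real schema of the metadata column.
votes = [
    {"choice": "image_1", "country": "IN", "language": "en"},
    {"choice": "image_1", "country": "IN", "language": "en"},
    {"choice": "image_1", "country": "IN", "language": "hi"},
    {"choice": "image_2", "country": "IN", "language": "en"},
    {"choice": "image_1", "country": "BR", "language": "pt"},
    {"choice": "image_1", "country": "BR", "language": "pt"},
    {"choice": "image_1", "country": "BR", "language": "pt"},
    {"choice": "image_1", "country": "DE", "language": "de"},
    {"choice": "image_2", "country": "DE", "language": "de"},
    {"choice": "image_2", "country": "DE", "language": "de"},
]


def preference_share(votes, option="image_1"):
    """Fraction of votes that went to `option` (0.5 means an even split)."""
    if not votes:
        raise ValueError("no votes for this pair")
    return sum(v["choice"] == option for v in votes) / len(votes)


def share_by_country(votes, option="image_1"):
    """Per-country fraction of votes that went to `option`."""
    totals, wins = Counter(), Counter()
    for v in votes:
        totals[v["country"]] += 1
        wins[v["country"]] += v["choice"] == option  # True counts as 1
    return {country: wins[country] / totals[country] for country in totals}


print(preference_share(votes))
print(share_by_country(votes))
```

A share close to 0.5 suggests the two images are roughly equally good, which is how ties can be inferred without explicit “both good” or “both bad” options.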

Annotator Alignment

For the original dataset, the authors analyzed the alignment between annotators by looking at the ratio of unique responses for each image pair. Given that we can have a maximum of two unique responses per image pair, this analysis is not directly applicable to our dataset. Instead, we have analyzed the alignment as the ratio between the number of votes for the most selected image and the total number of preferences for each image pair. The results are shown in the histogram below. Low alignment indicates that both models performed equally well, whereas high alignment means that one model was clearly preferred over the other. We have also created a binarized version of the dataset, where a single preferred model is inferred from the results and ambiguous or tied results are discarded.

What general preference data often does not reveal is why one image is preferred over the other. For example, is it because the image is more visually pleasing, or because it aligns better with the prompt? In previous preference datasets collected by us, such as our benchmark data, we specify three different criteria for the preference: style, coherence, and prompt-image alignment.
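The alignment ratio and the binarization step described in this section can be sketched as follows. This is a minimal illustration under stated assumptions: the function names, the toy vote counts, and the `min_alignment` threshold are our own choices for the example and are not the values used to build the published binarized dataset.

```python
def alignment(votes_a, votes_b):
    """Share of votes won by the most selected image (between 0.5 and 1.0)."""
    total = votes_a + votes_b
    if total == 0:
        raise ValueError("pair has no votes")
    return max(votes_a, votes_b) / total


def binarize(votes_a, votes_b, min_alignment=0.7):
    """Return "a" or "b" for a clear winner, or None for ambiguous pairs.

    min_alignment is an illustrative threshold chosen for this sketch.
    """
    if votes_a == votes_b or alignment(votes_a, votes_b) < min_alignment:
        return None
    return "a" if votes_a > votes_b else "b"


# Toy vote counts per pair: (votes for image a, votes for image b).
pairs = {"pair_1": (9, 1), "pair_2": (5, 5), "pair_3": (2, 8)}

# Keep only pairs with a clear winner, dropping ambiguous or tied ones.
binarized = {}
for name, (votes_a, votes_b) in pairs.items():
    winner = binarize(votes_a, votes_b)
    if winner is not None:
        binarized[name] = winner

print(binarized)
```

With only two options per pair, the alignment can never drop below 0.5, so the threshold effectively decides how lopsided a vote must be before a winner is declared.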

Model Performance

Similar to what was done for the original dataset, we also analyzed the performance of the two models across different categories. Although ties were not the most common outcome in any of the categories, our results otherwise mostly align with those of the original dataset. The FLUX model was preferred for Anime and Manga, whereas the Stable Diffusion model was preferred otherwise, i.e.:

- FLUX-dev better: Anime, Manga

- SD3.5-XL better: Cinematic, Digital art, Fantasy art, Illustration, Neonpunk, Painting, Pixel art, Photographic, Animation, 3D Model

Interestingly, based on our data, SD3.5-XL was significantly preferred for 3D Model, whereas the original dataset showed a preference for FLUX-dev. Further analysis could be carried out to understand the reasons behind this, for example whether the 3D Model category is highly represented in the additional 7k pairs considered, or whether the difference is due to the annotator bias mentioned earlier.
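A per-category comparison of this kind can be sketched by pooling votes within each category. The rows below are made-up toy numbers with placeholder category names, not results from either dataset, and "model X" and "model Y" stand in for the two models.

```python
from collections import defaultdict

# Toy rows: (category, votes for model X, votes for model Y).
# Placeholder names and made-up numbers, for illustration only.
rows = [
    ("category_a", 7, 3),
    ("category_a", 6, 4),
    ("category_b", 2, 8),
    ("category_b", 5, 5),
]


def vote_share_by_category(rows):
    """Pool votes per category and return model X's share of them."""
    pooled = defaultdict(lambda: [0, 0])
    for category, x_votes, y_votes in rows:
        pooled[category][0] += x_votes
        pooled[category][1] += y_votes
    return {c: x / (x + y) for c, (x, y) in pooled.items()}


shares = vote_share_by_category(rows)
print(shares)
```

Running the same function once on the 10k pairs covered by the original results and once on all 17k pairs would be one way to check whether the additional pairs explain a difference in a given category.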

Annotator Diversity

As mentioned, our data comes from a large and diverse set of annotators. Whereas the original dataset represented just 250 annotators, presumably demographically very similar, our dataset is culturally diverse, representing 49,241 annotators from across the globe. The distribution of responses by country of origin can be seen in the histogram below.

What's next?

We hope that this dataset can be leveraged to train and fine-tune new models, in particular by making use of the extra information that comes from being able to quantify how much an image is preferred, or even by making use of the annotator metadata. Simple preferences like these are a great start; however, they lack deeper insights. For example, was the image preferred because of a more pleasant art style, because it was generally more coherent, or because it was better aligned with the prompt? Additionally, which parts of the image or prompt were misaligned? This sort of rich feedback is explored in our rich human feedback dataset, and would be interesting to apply to the open image preference dataset.
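As one possible illustration of using the strength of a preference during training, the vote share can serve as a soft label in a Bradley-Terry style loss over two reward scores. This is a hedged sketch of a generic technique, not a method used by us or by the original authors; the function names and the example scores are hypothetical.

```python
import math


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def soft_preference_loss(score_a, score_b, share_a):
    """Cross-entropy between the observed vote share for image a and the
    predicted probability sigmoid(score_a - score_b)."""
    if not 0.0 <= share_a <= 1.0:
        raise ValueError("share_a must lie in [0, 1]")
    diff = score_a - score_b
    log_p_a = -softplus(-diff)   # log sigmoid(diff)
    log_p_b = -softplus(diff)    # log (1 - sigmoid(diff))
    return -(share_a * log_p_a + (1.0 - share_a) * log_p_b)


# A pair where 9 of 10 annotators preferred image a.
agrees = soft_preference_loss(score_a=2.0, score_b=0.0, share_a=0.9)
disagrees = soft_preference_loss(score_a=-2.0, score_b=0.0, share_a=0.9)
print(agrees < disagrees)
```

Compared with a hard 0/1 label, a soft label penalizes a model less for being unsure about pairs that annotators themselves were split on.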