---
license: llama3.1
datasets:
- nvidia/OpenMathInstruct-2
language:
- en
metrics:
- accuracy
base_model:
- meta-llama/Llama-3.1-8B-Instruct
model-index:
- name: Control-LLM-Llama3.1-8B-Math16
  results:
  - task:
      type: math-evaluation
    dataset:
      type: parquet
      name: Math, Math Hard, GSM8K
      dataset_kwargs:
        data_files: >-
          https://github.com/linkedin/ControlLLM/blob/main/src/controlllm/inference/llm_eval_harness/additional_tasks/math/joined_math.parquet
    metrics:
    - name: exact_match,none
      type: exact_match
      value: 0.6327358367133324
      stderr: 0.0052245703347459605
      verified: false
    - name: exact_match,none (gsm8k_0shot_instruct)
      type: exact_match
      value: 0.9052312357846853
      stderr: 0.008067791560015407
      verified: false
    - name: exact_match,none (meta_math_0shot_instruct)
      type: exact_match
      value: 0.6276
      stderr: 0.006837616441401548
      verified: false
    - name: exact_match,none (meta_math_hard_0shot_instruct)
      type: exact_match
      value: 0.3806646525679758
      stderr: 0.013349170720370741
      verified: false
  - task:
      type: original-capability
    dataset:
      type: meta/Llama-3.1-8B-Instruct-evals
      name: Llama-3.1-8B-Instruct-evals Dataset
      dataset_path: meta-llama/llama-3.1-8_b-instruct-evals
      dataset_name: Llama-3.1-8B-Instruct-evals__arc_challenge__details
    metrics:
    - name: exact_match,strict-match
      type: exact_match
      value: 0.5723263625528227
      stderr: 0.002858377993520894
      verified: false
    - name: exact_match,strict-match (meta_arc_0shot_instruct)
      type: exact_match
      value: 0.7974248927038626
      stderr: 0.01178043813618557
      verified: false
    - name: exact_match,strict-match (meta_gpqa_0shot_cot_instruct)
      type: exact_match
      value: 0.25223214285714285
      stderr: 0.02054139101648797
      verified: false
    - name: exact_match,strict-match (meta_mmlu_0shot_instruct)
      type: exact_match
      value: 0.6837345107534539
      stderr: 0.0039243761987253515
      verified: false
    - name: exact_match,strict-match (meta_mmlu_pro_5shot_instruct)
      type: exact_match
      value: 0.4324301861702128
      stderr: 0.004516653585262379
      verified: false
pipeline_tag: text-generation
library_name: transformers
---

Control-LLM-Llama3.1-8B-Math16

This model is Llama-3.1-8B-Instruct fine-tuned for mathematical tasks on the OpenMath2 dataset, as described in the paper Control LLM: Controlled Evolution for Intelligence Retention in LLM.

Linked Paper

This model is associated with the paper: Control-LLM.

Linked Open Source Code - Training, Eval and Benchmark

The code for this model is available in the GitHub repository: Control-LLM.

Evaluation Results

Here is an overview of the evaluation results and findings:

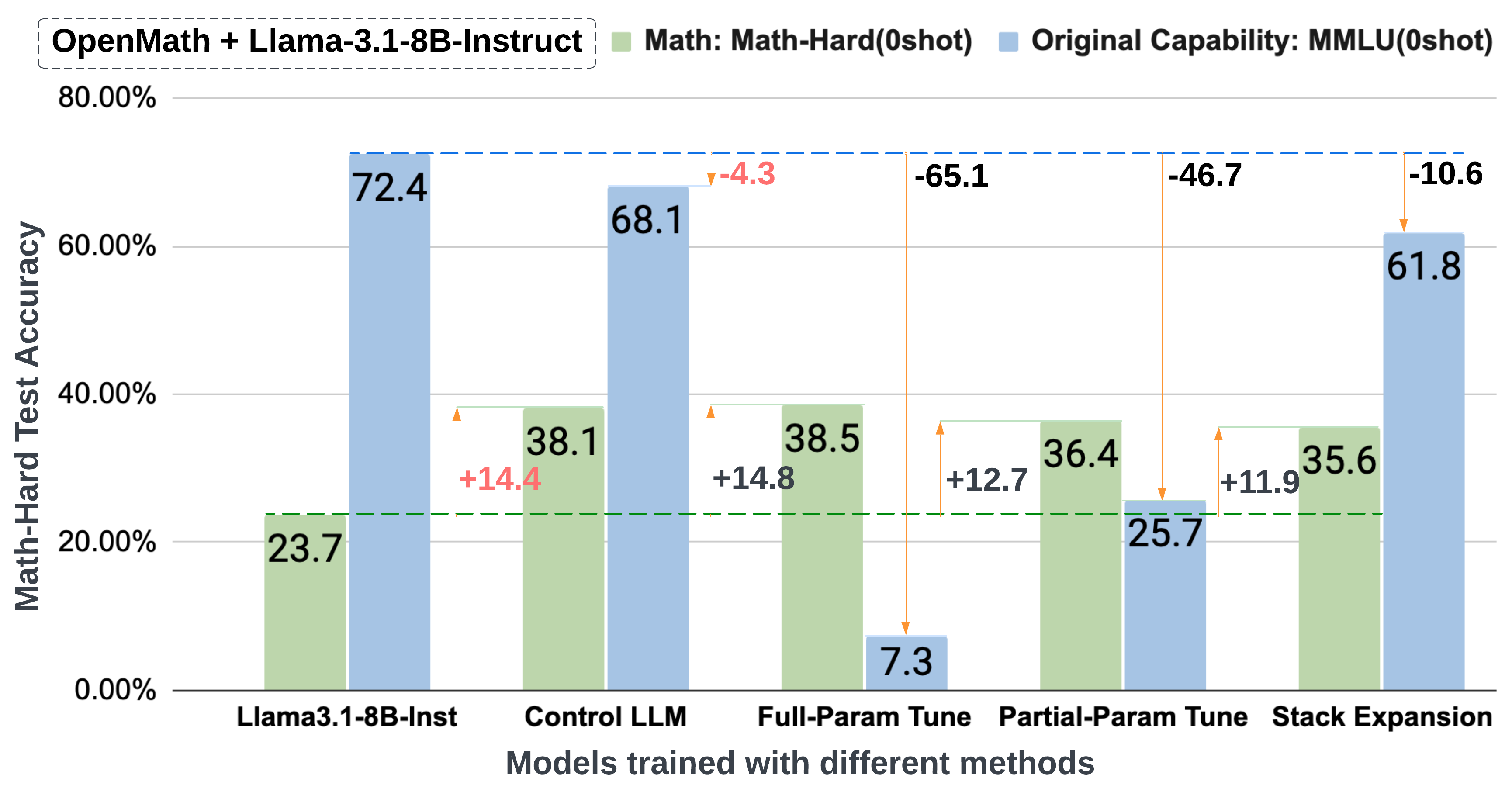

Benchmark Results and Catastrophic Forgetting on OpenMath

The following plot illustrates the benchmark results and the mitigation of catastrophic forgetting on the OpenMath2 dataset.

Alignment Comparison

The plot below compares the alignment of models trained with Control LLM and with full-parameter tuning.

Benchmark Results Table

The table below summarizes evaluation results across mathematical tasks and original capabilities.

| Model | MH | M | G8K | M-Avg | ARC | GPQA | MLU | MLUP | O-Avg | Overall |
|---|---|---|---|---|---|---|---|---|---|---|
| Llama3.1-8B-Inst | 23.7 | 50.9 | 85.6 | 52.1 | 83.4 | 29.9 | 72.4 | 46.7 | 60.5 | 56.3 |
| OpenMath2-Llama3 | 38.4 | 64.1 | 90.3 | 64.3 | 45.8 | 1.3 | 4.5 | 19.5 | 12.9 | 38.6 |
| Full Tune | 38.5 | 63.7 | 90.2 | 63.9 | 58.2 | 1.1 | 7.3 | 23.5 | 16.5 | 40.1 |
| Partial Tune | 36.4 | 61.4 | 89.0 | 61.8 | 66.2 | 6.0 | 25.7 | 30.9 | 29.3 | 45.6 |
| Stack Exp. | 35.6 | 61.0 | 90.8 | 61.8 | 69.3 | 18.8 | 61.8 | 43.1 | 53.3 | 57.6 |
| Hybrid Exp. | 34.4 | 61.1 | 90.1 | 61.5 | 81.8 | 25.9 | 67.2 | 43.9 | 57.1 | 59.3 |
| Control LLM* | 38.1 | 62.7 | 90.4 | 63.2 | 79.7 | 25.2 | 68.1 | 43.6 | 57.2 | 60.2 |
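As a quick consistency check on the table above, the sketch below compares each reported Overall score with the plain mean of the M-Avg and O-Avg columns (the abbreviations are spelled out in the legend that follows). The rule "Overall is the mean of M-Avg and O-Avg" is an inference from the reported numbers, not something this card states, and `overall_gaps` is a hypothetical helper written for this illustration.

```python
# Rows copied from the benchmark table: (model, M-Avg, O-Avg, Overall), in percent.
ROWS = [
    ("Llama3.1-8B-Inst", 52.1, 60.5, 56.3),
    ("OpenMath2-Llama3", 64.3, 12.9, 38.6),
    ("Full Tune", 63.9, 16.5, 40.1),
    ("Partial Tune", 61.8, 29.3, 45.6),
    ("Stack Exp.", 61.8, 53.3, 57.6),
    ("Hybrid Exp.", 61.5, 57.1, 59.3),
    ("Control LLM*", 63.2, 57.2, 60.2),
]


def overall_gaps(rows):
    """Return {model: |reported Overall - mean(M-Avg, O-Avg)|} in percentage points."""
    return {
        name: abs(overall - (m_avg + o_avg) / 2)
        for name, m_avg, o_avg, overall in rows
    }


gaps = overall_gaps(ROWS)
for name, gap in gaps.items():
    # A gap of about 0.1 or less is what rounding to one decimal would produce.
    print(f"{name:18s} gap = {gap:.2f}")
```

For the rows as printed, every gap stays within roughly 0.1 percentage points, which is consistent with the assumed rule up to one-decimal rounding.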

Explanation:

- MH: MathHard

- M: Math

- G8K: GSM8K

- M-Avg: Math - Average across MathHard, Math, and GSM8K

- ARC: AI2 Reasoning Challenge (ARC) benchmark

- GPQA: Graduate-Level Google-Proof Q&A benchmark

- MLU: MMLU (Massive Multitask Language Understanding)

- MLUP: MMLU Pro

- O-Avg: Original Capability - Average across ARC, GPQA, MMLU, and MMLUP

- Overall: Combined average across all tasks
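The legend describes M-Avg and O-Avg only as averages. The per-task scores in this card's metadata suggest that the group-level `exact_match` values are pooled over samples rather than simple means of the task scores. The sketch below illustrates this under two labeled assumptions: that each reported `stderr` is the sample standard error of a 0/1 score, `sqrt(p * (1 - p) / (n - 1))`, and that the group score is the sample-weighted average of its tasks. The sample counts are recovered from the metadata under the first assumption and are not stated in the card. `sample_count` and `pooled_accuracy` are hypothetical helpers.

```python
# (accuracy, stderr) pairs copied from this card's metadata.
MATH = {
    "gsm8k_0shot_instruct": (0.9052312357846853, 0.008067791560015407),
    "meta_math_0shot_instruct": (0.6276, 0.006837616441401548),
    "meta_math_hard_0shot_instruct": (0.3806646525679758, 0.013349170720370741),
}
ORIGINAL = {
    "meta_arc_0shot_instruct": (0.7974248927038626, 0.01178043813618557),
    "meta_gpqa_0shot_cot_instruct": (0.25223214285714285, 0.02054139101648797),
    "meta_mmlu_0shot_instruct": (0.6837345107534539, 0.0039243761987253515),
    "meta_mmlu_pro_5shot_instruct": (0.4324301861702128, 0.004516653585262379),
}


def sample_count(p, stderr):
    """Infer n, assuming stderr = sqrt(p * (1 - p) / (n - 1))."""
    return round(p * (1 - p) / stderr**2) + 1


def pooled_accuracy(tasks):
    """Sample-weighted accuracy over tasks, plus the inferred per-task counts."""
    counts = {name: sample_count(p, se) for name, (p, se) in tasks.items()}
    correct = sum(tasks[name][0] * n for name, n in counts.items())
    return correct / sum(counts.values()), counts


math_avg, math_counts = pooled_accuracy(MATH)
orig_avg, orig_counts = pooled_accuracy(ORIGINAL)
print(math_counts, round(math_avg, 4))
print(orig_counts, round(orig_avg, 4))
```

Under these assumptions the pooled values agree with the group-level scores listed in the metadata (0.6327 for the math tasks and 0.5723 for the original-capability tasks), whereas a simple mean of the per-task scores does not.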