Upload 6 files

- .streamlit/config.toml +3 -0

- Demo.py +254 -0

- Dockerfile +72 -0

- images/DependencyParserVisualizer.png +0 -0

- pages/Workflow & Model Overview.py +250 -0

- requirements.txt +8 -0

.streamlit/config.toml

ADDED


@@ -0,0 +1,3 @@


[theme]
base="light"
primaryColor="#29B4E8"

Demo.py

ADDED


@@ -0,0 +1,254 @@


import streamlit as st
import sparknlp
from johnsnowlabs import nlp
from sparknlp.base import *
from sparknlp.annotator import *
from pyspark.ml import Pipeline
import pyspark.sql.functions as F
import pandas as pd

# Page Configuration
st.set_page_config(
    layout="wide",
    initial_sidebar_state="expanded"
)

# CSS Styling
st.markdown("""
<style>
    .main-title {
        font-size: 36px;
        color: #4A90E2;
        font-weight: bold;
        text-align: center;
    }
    .section {
        background-color: #f9f9f9;
        padding: 10px;
        border-radius: 10px;
        margin-top: 10px;
    }
    .section p, .section ul {
        color: #666666;
    }
    .table {
        width: 100%;
        border-collapse: collapse;
        margin-top: 20px;
    }
    .table th, .table td {
        border: 1px solid #ddd;
        padding: 8px;
        text-align: left;
    }
    .table th {
        background-color: #4A90E2;
        color: white;
    }
    .table td {
        background-color: #f2f2f2;
    }
</style>
""", unsafe_allow_html=True)

# Initialize Spark
@st.cache_resource
def init_spark():
    return sparknlp.start()

# Create NLP Pipeline
@st.cache_resource
def create_pipeline():
    document_assembler = DocumentAssembler() \
        .setInputCol("text") \
        .setOutputCol("document")

    tokenizer = Tokenizer() \
        .setInputCols(["document"]) \
        .setOutputCol("token")

    pos_tagger = PerceptronModel.pretrained("pos_anc", 'en') \
        .setInputCols(["document", "token"]) \
        .setOutputCol("pos")

    dep_parser = DependencyParserModel.pretrained('dependency_conllu') \
        .setInputCols(["document", "pos", "token"]) \
        .setOutputCol("dependency")

    typed_dep_parser = TypedDependencyParserModel.pretrained('dependency_typed_conllu') \
        .setInputCols(["token", "pos", "dependency"]) \
        .setOutputCol("dependency_type")

    pipeline = Pipeline(stages=[
        document_assembler,
        tokenizer,
        pos_tagger,
        dep_parser,
        typed_dep_parser
    ])
    return pipeline

# Fit Data to Pipeline
def fit_data(pipeline, text):
    df = spark.createDataFrame([[text]]).toDF("text")
    result = pipeline.fit(df).transform(df)
    return result

# Render DataFrame as HTML Table
def render_table(df, sidebar=False):
    html = df.to_html(classes="table", index=False, escape=False)
    if sidebar:
        st.sidebar.markdown(html, unsafe_allow_html=True)
    else:
        st.markdown(html, unsafe_allow_html=True)

def explain_tags(tag_type, tags, tag_dict):
    explanations = [(tag, tag_dict[tag]) for tag in tags if tag in tag_dict]
    if explanations:
        df = pd.DataFrame(explanations, columns=[f"{tag_type} Tag", f"{tag_type} Meaning"])
        df.index = [''] * len(df)  # Hide the index
        render_table(df, sidebar=True)

# Page Title and Subtitle
title = "Grammar Analysis & Dependency Parsing"
sub_title = "Visualize the syntactic structure of a sentence as a directed labeled graph."

st.markdown(f'<div class="main-title">{title}</div>', unsafe_allow_html=True)
st.markdown(f'<div style="text-align: center; color: #666666;">{sub_title}</div>', unsafe_allow_html=True)

# Example Sentences
examples = [
    "John Snow is a good man. He knows a lot about science.",
    "In what country is the WTO headquartered?",
    "I was wearing my dark blue shirt and tie.",
    "The Geneva Motor Show is the most popular car show of the year.",
    "Bill Gates and Steve Jobs had periods of civility."
]

# Text Selection
selected_text = st.selectbox("Select an example", examples)
custom_input = st.text_input("Try it with your own sentence!")

text_to_analyze = custom_input if custom_input else selected_text

st.write('Text to analyze:')
HTML_WRAPPER = """<div class="scroll entities" style="overflow-x: auto;
border: 1px solid #e6e9ef; border-radius: 0.25rem;
padding: 1rem; margin-bottom: 2.5rem; white-space:pre-wrap">{}</div>"""
st.markdown(HTML_WRAPPER.format(text_to_analyze), unsafe_allow_html=True)

# Initialize Spark and Pipeline
spark = init_spark()
pipeline = create_pipeline()
output = fit_data(pipeline, text_to_analyze)

# Display Dependency Tree
st.write("Dependency Tree:")
nlp.load('dep.typed').viz_streamlit_dep_tree(
    text=text_to_analyze,
    title='',
    sub_title='',
    set_wide_layout_CSS=False,
    generate_code_sample=False,
    key="NLU_streamlit",
    show_infos=False,
    show_logo=False,
    show_text_input=False,
)

# Display Raw Result
st.write("Raw Result:")
df = output.select(F.explode(F.arrays_zip(
    output.token.result,
    output.token.begin,
    output.token.end,
    output.pos.result,
    output.dependency.result,
    output.dependency_type.result
)).alias("cols")) \
    .select(F.expr("cols['0']").alias("chunk"),
            F.expr("cols['1']").alias("begin"),
            F.expr("cols['2']").alias("end"),
            F.expr("cols['3']").alias("pos"),
            F.expr("cols['4']").alias("dependency"),
            F.expr("cols['5']").alias("dependency_type")).toPandas()

render_table(df)

# Sidebar Content
# POS and Dependency dictionaries
pos_dict = {
    "CC": "Coordinating conjunction", "CD": "Cardinal number", "DT": "Determiner",
    "EX": "Existential there", "FW": "Foreign word", "IN": "Preposition or subordinating conjunction",
    "JJ": "Adjective", "JJR": "Adjective, comparative", "JJS": "Adjective, superlative",
    "LS": "List item marker", "MD": "Modal", "NN": "Noun, singular or mass",
    "NNS": "Noun, plural", "NNP": "Proper noun, singular", "NNPS": "Proper noun, plural",
    "PDT": "Predeterminer", "POS": "Possessive ending", "PRP": "Personal pronoun",
    "PRP$": "Possessive pronoun", "RB": "Adverb", "RBR": "Adverb, comparative",
    "RBS": "Adverb, superlative", "RP": "Particle", "SYM": "Symbol", "TO": "to",
    "UH": "Interjection", "VB": "Verb, base form", "VBD": "Verb, past tense",
    "VBG": "Verb, gerund or present participle", "VBN": "Verb, past participle",
    "VBP": "Verb, non-3rd person singular present", "VBZ": "Verb, 3rd person singular present",
    "WDT": "Wh-determiner", "WP": "Wh-pronoun", "WP$": "Possessive wh-pronoun",
    "WRB": "Wh-adverb"
}

dependency_dict = {
    "acl": "clausal modifier of noun (adjectival clause)",
    "advcl": "adverbial clause modifier",
    "advmod": "adverbial modifier",
    "amod": "adjectival modifier",
    "appos": "appositional modifier",
    "aux": "auxiliary",
    "case": "case marking",
    "cc": "coordinating conjunction",
    "ccomp": "clausal complement",
    "clf": "classifier",
    "compound": "compound",
    "conj": "conjunct",
    "cop": "copula",
    "csubj": "clausal subject",
    "dep": "unspecified dependency",
    "det": "determiner",
    "discourse": "discourse element",
    "dislocated": "dislocated elements",
    "expl": "expletive",
    "fixed": "fixed multiword expression",
    "flat": "flat multiword expression",
    "goeswith": "goes with",
    "iobj": "indirect object",
    "list": "list",
    "mark": "marker",
    "nmod": "nominal modifier",
    "nsubj": "nominal subject",
    "nummod": "numeric modifier",
    "obj": "object",
    "obl": "oblique nominal",
    "orphan": "orphan",
    "parataxis": "parataxis",
    "punct": "punctuation",
    "reparandum": "overridden disfluency",
    "root": "root",
    "vocative": "vocative",
    "xcomp": "open clausal complement"
}

# Get unique POS and dependency tags
unique_pos = df['pos'].unique()
unique_dep = df['dependency_type'].unique()

# Sidebar options for explanations
if st.sidebar.checkbox("Explain POS Tags"):
    explain_tags("POS", unique_pos, pos_dict)

if st.sidebar.checkbox("Explain Dependency Types"):
    explain_tags("Dependency", unique_dep, dependency_dict)

# Sidebar with Reference Notebook Link
colab_link = """
<a href="https://colab.research.google.com/github/JohnSnowLabs/spark-nlp-workshop/blob/master/tutorials/streamlit_notebooks/GRAMMAR_EN.ipynb">
    <img src="https://colab.research.google.com/assets/colab-badge.svg" style="zoom: 1.3" alt="Open In Colab"/>
</a>
"""
st.sidebar.markdown('Reference Notebook:')
st.sidebar.markdown(colab_link, unsafe_allow_html=True)
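The "Raw Result" block in `Demo.py` zips the per-token annotation arrays with `F.arrays_zip` and then `F.explode`s them into one row per token. The same reshaping can be sketched in plain Python, without Spark, to show what each resulting row holds. This is an illustration only: the helper `zip_annotations` and the annotations for "He knows science." are hypothetical and hand-written, not output from the pipeline. `itertools.zip_longest` is used because Spark's `arrays_zip` fills missing positions with null instead of truncating to the shortest array.

```python
from itertools import zip_longest
from typing import Dict, List, Optional

# Column names mirror the aliases used in Demo.py's "Raw Result" query.
COLUMNS = ["chunk", "begin", "end", "pos", "dependency", "dependency_type"]


def zip_annotations(*arrays: List) -> List[Dict[str, Optional[object]]]:
    """Pair the i-th element of every array into one row per token.

    Like Spark's arrays_zip, a shorter array contributes None (null)
    for the positions it is missing, rather than truncating the output.
    """
    rows = []
    for values in zip_longest(*arrays, fillvalue=None):
        rows.append(dict(zip(COLUMNS, values)))
    return rows


# Hypothetical, hand-written annotation arrays for "He knows science."
tokens = ["He", "knows", "science", "."]
begins = [0, 3, 9, 16]   # character offsets (end offsets are inclusive)
ends = [1, 7, 15, 16]
pos_tags = ["PRP", "VBZ", "NN", "."]
heads = ["knows", "ROOT", "knows", "knows"]
labels = ["nsubj", "root", "obj", "punct"]

for row in zip_annotations(tokens, begins, ends, pos_tags, heads, labels):
    print(row)
```

Each printed dictionary corresponds to one row of the table that `render_table(df)` shows in the app.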

Dockerfile

ADDED


@@ -0,0 +1,72 @@


# Download base image ubuntu 18.04
FROM ubuntu:18.04

# Set environment variables
ENV NB_USER=jovyan
ENV NB_UID=1000
ENV HOME=/home/${NB_USER}
ENV JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64/

# Install required packages
RUN apt-get update && apt-get install -y \
    tar \
    wget \
    bash \
    rsync \
    gcc \
    libfreetype6-dev \
    libhdf5-serial-dev \
    libpng-dev \
    libzmq3-dev \
    python3 \
    python3-dev \
    python3-pip \
    unzip \
    pkg-config \
    software-properties-common \
    graphviz \
    openjdk-8-jdk \
    ant \
    ca-certificates-java \
    && apt-get clean \
    && update-ca-certificates -f

# Install Python 3.8 and pip
RUN add-apt-repository ppa:deadsnakes/ppa \
    && apt-get update \
    && apt-get install -y python3.8 python3-pip \
    && apt-get clean

# Set up JAVA_HOME
RUN echo "export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64/" >> /etc/profile \
    && echo "export PATH=\$JAVA_HOME/bin:\$PATH" >> /etc/profile
# Create a new user named "jovyan" with user ID 1000
RUN useradd -m -u ${NB_UID} ${NB_USER}

# Switch to the "jovyan" user
USER ${NB_USER}

# Set home and path variables for the user
ENV HOME=/home/${NB_USER} \
    PATH=/home/${NB_USER}/.local/bin:$PATH

# Set up PySpark to use Python 3.8 for both driver and workers
ENV PYSPARK_PYTHON=/usr/bin/python3.8
ENV PYSPARK_DRIVER_PYTHON=/usr/bin/python3.8

# Set the working directory to the user's home directory
WORKDIR ${HOME}

# Upgrade pip and install Python dependencies
RUN python3.8 -m pip install --upgrade pip
COPY requirements.txt /tmp/requirements.txt
RUN python3.8 -m pip install -r /tmp/requirements.txt

# Copy the application code into the container at /home/jovyan
COPY --chown=${NB_USER}:${NB_USER} . ${HOME}

# Expose port for Streamlit
EXPOSE 7860

# Define the entry point for the container
ENTRYPOINT ["streamlit", "run", "Demo.py", "--server.port=7860", "--server.address=0.0.0.0"]

images/DependencyParserVisualizer.png

ADDED


pages/Workflow & Model Overview.py

ADDED


@@ -0,0 +1,250 @@


import streamlit as st

# Custom CSS for better styling
st.markdown("""
<style>
    .main-title {
        font-size: 36px;
        color: #4A90E2;
        font-weight: bold;
        text-align: center;
    }
    .sub-title {
        font-size: 24px;
        color: #4A90E2;
        margin-top: 20px;
    }
    .section {
        background-color: #f9f9f9;
        padding: 15px;
        border-radius: 10px;
        margin-top: 20px;
    }
    .section h2 {
        font-size: 22px;
        color: #4A90E2;
    }
    .section p, .section ul {
        color: #666666;
    }
    .link {
        color: #4A90E2;
        text-decoration: none;
    }
</style>
""", unsafe_allow_html=True)

# Title
st.markdown('<div class="main-title">Grammar Analysis & Dependency Parsing</div>', unsafe_allow_html=True)

# Introduction Section
st.markdown("""
<div class="section">
    <p>Understanding the grammatical structure of sentences is crucial in Natural Language Processing (NLP) for applications such as translation, text summarization, and information extraction. This page focuses on grammar analysis and dependency parsing, which identify the grammatical role of each word in a sentence and the relationships between words.</p>
    <p>We use Spark NLP, an open-source NLP library built on Apache Spark, to perform Part-of-Speech (POS) tagging and dependency parsing, so that sentences can be analyzed at scale.</p>
</div>
""", unsafe_allow_html=True)

# Understanding Dependency Parsing
st.markdown('<div class="sub-title">Understanding Dependency Parsing</div>', unsafe_allow_html=True)

st.markdown("""
<div class="section">
    <p>Dependency parsing analyzes the grammatical structure of a sentence by identifying the dependencies between its words. It maps out relationships such as subject-verb and adjective-noun, which are essential for understanding the sentence's meaning.</p>
    <p>In a dependency parse, every word except the root is linked to exactly one head word, and these links form a tree called a dependency tree. This structure supports NLP tasks such as information retrieval, question answering, and machine translation.</p>
</div>
""", unsafe_allow_html=True)

# Implementation Section
st.markdown('<div class="sub-title">Implementing Grammar Analysis & Dependency Parsing</div>', unsafe_allow_html=True)

st.markdown("""
<div class="section">
    <p>The following example implements a grammar analysis pipeline with Spark NLP. The pipeline tokenizes the text, assigns POS tags, predicts the head word of each token, and then labels each dependency with its relation type.</p>
</div>
""", unsafe_allow_html=True)

st.code('''
import sparknlp
from sparknlp.base import *
from sparknlp.annotator import *
from pyspark.ml import Pipeline
import pyspark.sql.functions as F

# Initialize Spark NLP
spark = sparknlp.start()

# Stage 1: Document Assembler
document_assembler = DocumentAssembler()\\
    .setInputCol("text")\\
    .setOutputCol("document")

# Stage 2: Tokenizer
tokenizer = Tokenizer().setInputCols(["document"]).setOutputCol("token")

# Stage 3: POS Tagger
postagger = PerceptronModel.pretrained("pos_anc", "en")\\
    .setInputCols(["document", "token"])\\
    .setOutputCol("pos")

# Stage 4: Dependency Parsing
dependency_parser = DependencyParserModel.pretrained("dependency_conllu")\\
    .setInputCols(["document", "pos", "token"])\\
    .setOutputCol("dependency")

# Stage 5: Typed Dependency Parsing
typed_dependency_parser = TypedDependencyParserModel.pretrained("dependency_typed_conllu")\\
    .setInputCols(["token", "pos", "dependency"])\\
    .setOutputCol("dependency_type")

# Define the pipeline
pipeline = Pipeline(stages=[
    document_assembler,
    tokenizer,
    postagger,
    dependency_parser,
    typed_dependency_parser
])

# Example sentence
example = spark.createDataFrame([
    ["Unions representing workers at Turner Newall say they are 'disappointed' after talks with stricken parent firm Federal Mogul."]
]).toDF("text")

# Apply the pipeline
result = pipeline.fit(spark.createDataFrame([[""]]).toDF("text")).transform(example)

# Display the results
result.select(
    F.explode(
        F.arrays_zip(
            result.token.result,
            result.pos.result,
            result.dependency.result,
            result.dependency_type.result
        )
    ).alias("cols")
).select(
    F.expr("cols['0']").alias("token"),
    F.expr("cols['1']").alias("pos"),
    F.expr("cols['2']").alias("dependency"),
    F.expr("cols['3']").alias("dependency_type")
).show(truncate=False)
''', language='python')

# Example Output
st.text("""
+------------+---+------------+---------------+
|token       |pos|dependency  |dependency_type|
+------------+---+------------+---------------+
|Unions      |NNP|ROOT        |root           |
|representing|VBG|workers     |amod           |
|workers     |NNS|Unions      |flat           |
|at          |IN |Turner      |case           |
|Turner      |NNP|workers     |flat           |
|Newall      |NNP|say         |nsubj          |
|say         |VBP|Unions      |parataxis      |
|they        |PRP|disappointed|nsubj          |
|are         |VBP|disappointed|nsubj          |
|'           |POS|disappointed|case           |
|disappointed|JJ |say         |nsubj          |
|'           |POS|disappointed|case           |
|after       |IN |talks       |case           |
|talks       |NNS|disappointed|nsubj          |
|with        |IN |stricken    |det            |
|stricken    |NN |talks       |amod           |
|parent      |NN |Mogul       |flat           |
|firm        |NN |Mogul       |flat           |
|Federal     |NNP|Mogul       |flat           |
|Mogul       |NNP|stricken    |flat           |
+------------+---+------------+---------------+
""")

# Visualizing the Dependencies
st.markdown('<div class="sub-title">Visualizing the Dependencies</div>', unsafe_allow_html=True)

st.markdown("""
<div class="section">
    <p>For a visual representation of the dependencies, you can use the <b>spark-nlp-display</b> module, an open-source library that renders dependency trees and is easy to integrate into an existing workflow.</p>
    <p>First, install the module with pip:</p>
    <code>pip install spark-nlp-display</code>
    <p>Then use the <code>DependencyParserVisualizer</code> class to draw the dependency tree:</p>
</div>
""", unsafe_allow_html=True)

st.code('''
from sparknlp_display import DependencyParserVisualizer

# Initialize the visualizer
dependency_vis = DependencyParserVisualizer()

# Display the dependency tree
dependency_vis.display(
    result.collect()[0],  # single example result
    pos_col="pos",
    dependency_col="dependency",
    dependency_type_col="dependency_type",
)
''', language='python')

| 190 |

+

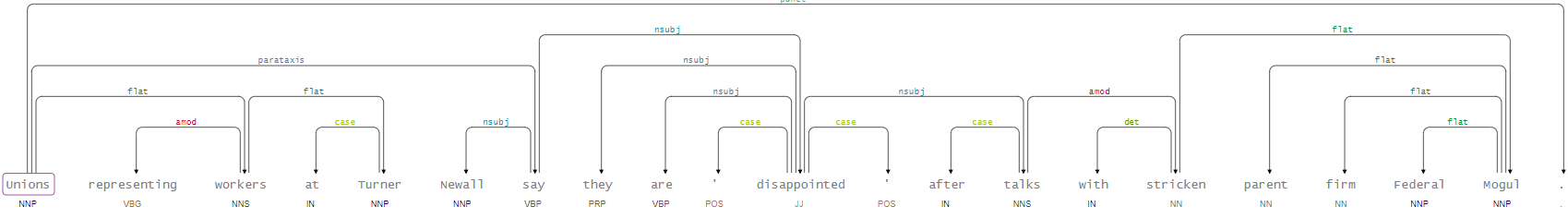

st.image('images\DependencyParserVisualizer.png', caption='The visualization of dependencies')

|

| 191 |

+

|

st.markdown("""
<div class="section">
    <p>This code snippet generates a dependency tree for the given sentence like the one shown above, making the grammatical relationships between words visible at a glance. The <code>spark-nlp-display</code> module provides an intuitive way to visualize complex dependency structures, which helps when analyzing sentence grammar.</p>
</div>
""", unsafe_allow_html=True)

# Model Info Section
st.markdown('<div class="sub-title">Choosing the Right Model for Dependency Parsing</div>', unsafe_allow_html=True)

st.markdown("""
<div class="section">
    <p>This pipeline uses two pretrained models: <b>"dependency_conllu"</b>, which predicts the head word of each token, and <b>"dependency_typed_conllu"</b>, which labels each of those dependencies with a relation type such as <code>nsubj</code> or <code>amod</code>. Together they extract the grammatical relations between words in English sentences.</p>
    <p>To explore more models tailored for different NLP tasks, visit the <a class="link" href="https://sparknlp.org/models?annotator=DependencyParserModel" target="_blank">Spark NLP Models Hub</a>.</p>
</div>
""", unsafe_allow_html=True)

# References Section
st.markdown('<div class="sub-title">References</div>', unsafe_allow_html=True)

st.markdown("""
<div class="section">
    <ul>
        <li><a class="link" href="https://nlp.johnsnowlabs.com/docs/en/annotators" target="_blank" rel="noopener">Spark NLP documentation page</a> for all available annotators</li>
        <li>Python API documentation for <a class="link" href="https://nlp.johnsnowlabs.com/api/python/reference/autosummary/sparknlp/annotator/pos/perceptron/index.html#sparknlp.annotator.pos.perceptron.PerceptronModel" target="_blank" rel="noopener">PerceptronModel</a> and <a class="link" href="https://nlp.johnsnowlabs.com/api/python/reference/autosummary/sparknlp/annotator/dependency/dependency_parser/index.html#sparknlp.annotator.dependency.dependency_parser.DependencyParserModel" target="_blank" rel="noopener">Dependency Parser</a></li>
        <li>Scala API documentation for <a class="link" href="https://nlp.johnsnowlabs.com/api/com/johnsnowlabs/nlp/annotators/pos/perceptron/PerceptronModel.html" target="_blank" rel="noopener">PerceptronModel</a> and <a class="link" href="https://nlp.johnsnowlabs.com/api/com/johnsnowlabs/nlp/annotators/parser/dep/DependencyParserModel.html" target="_blank" rel="noopener">DependencyParserModel</a></li>
        <li>For extended usage examples of Spark NLP annotators, check the <a class="link" href="https://github.com/JohnSnowLabs/spark-nlp-workshop" target="_blank" rel="noopener">Spark NLP Workshop repository</a>.</li>
        <li>Minsky, M.L. and Papert, S.A. (1969) Perceptrons. MIT Press, Cambridge.</li>
    </ul>
</div>
""", unsafe_allow_html=True)

# Community & Support Section
st.markdown('<div class="sub-title">Community & Support</div>', unsafe_allow_html=True)

st.markdown("""
<div class="section">
    <ul>
        <li><a class="link" href="https://sparknlp.org/" target="_blank">Official Website</a>: Documentation and examples</li>
        <li><a class="link" href="https://join.slack.com/t/spark-nlp/shared_invite/zt-198dipu77-L3UWNe_AJ8xqDk0ivmih5Q" target="_blank">Slack</a>: Live discussion with the community and team</li>
        <li><a class="link" href="https://github.com/JohnSnowLabs/spark-nlp" target="_blank">GitHub</a>: Bug reports, feature requests, and contributions</li>
        <li><a class="link" href="https://medium.com/spark-nlp" target="_blank">Medium</a>: Spark NLP articles</li>
        <li><a class="link" href="https://www.youtube.com/channel/UCmFOjlpYEhxf_wJUDuz6xxQ/videos" target="_blank">YouTube</a>: Video tutorials</li>
    </ul>
</div>
""", unsafe_allow_html=True)

# Quick Links Section
st.markdown('<div class="sub-title">Quick Links</div>', unsafe_allow_html=True)

st.markdown("""
<div class="section">
    <ul>
        <li><a class="link" href="https://sparknlp.org/docs/en/quickstart" target="_blank">Getting Started</a></li>
        <li><a class="link" href="https://nlp.johnsnowlabs.com/models" target="_blank">Pretrained Models</a></li>
        <li><a class="link" href="https://github.com/JohnSnowLabs/spark-nlp/tree/master/examples/python/annotation/text/english" target="_blank">Example Notebooks</a></li>
        <li><a class="link" href="https://sparknlp.org/docs/en/install" target="_blank">Installation Guide</a></li>
    </ul>
</div>
""", unsafe_allow_html=True)
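The overview page says that every word except the root attaches to exactly one head word, which is what makes a dependency parse a tree. That property can be checked with a short sketch. It assumes the CoNLL-U convention of 1-based head indices with 0 marking the root (the pipeline's raw output lists head words instead, which are ambiguous when a word repeats), and the parse of "The man knows science" is hand-written for illustration, not produced by `dependency_conllu`. The helper names `is_tree` and `edges` are hypothetical.

```python
from typing import List, Tuple

# (token, head_index, relation); head_index is 1-based and 0 means ROOT,
# following the CoNLL-U convention. Hand-written parse, for illustration only.
Parse = List[Tuple[str, int, str]]

example: Parse = [
    ("The", 2, "det"),
    ("man", 3, "nsubj"),
    ("knows", 0, "root"),
    ("science", 3, "obj"),
]


def is_tree(parse: Parse) -> bool:
    """Return True if the head links form a single tree rooted at one token."""
    n = len(parse)
    heads = [head for _, head, _ in parse]
    # Every head must point at ROOT (0) or at an existing token.
    if any(h < 0 or h > n for h in heads):
        return False
    # Exactly one token may attach directly to ROOT.
    if heads.count(0) != 1:
        return False
    # Following head links from any token must reach ROOT without a cycle.
    for start in range(1, n + 1):
        seen = set()
        node = start
        while node != 0:
            if node in seen:
                return False
            seen.add(node)
            node = heads[node - 1]
    return True


def edges(parse: Parse) -> List[str]:
    """Render each dependency as 'head --relation--> dependent'."""
    out = []
    for token, head, rel in parse:
        head_word = "ROOT" if head == 0 else parse[head - 1][0]
        out.append(f"{head_word} --{rel}--> {token}")
    return out


print(is_tree(example))
for line in edges(example):
    print(line)
```

A parse with two roots, or with a head chain that loops back on itself, fails `is_tree`, which is the structural guarantee the visualizer relies on when it draws arcs.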

requirements.txt

ADDED


@@ -0,0 +1,8 @@


streamlit
st-annotated-text
streamlit-tags
pandas
numpy
spark-nlp
pyspark
johnsnowlabs
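None of the requirements above are pinned, so the versions installed inside the image depend on when it is built. One way to record what was actually resolved is a small check with the standard library's `importlib.metadata`. This is a suggested helper, not part of the commit; the function name `installed_versions` is hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Iterable, Optional

# Distribution names exactly as listed in requirements.txt.
REQUIREMENTS = [
    "streamlit", "st-annotated-text", "streamlit-tags", "pandas",
    "numpy", "spark-nlp", "pyspark", "johnsnowlabs",
]


def installed_versions(names: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if absent."""
    result = {}
    for name in names:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


for name, ver in installed_versions(REQUIREMENTS).items():
    print(f"{name}=={ver}" if ver else f"{name}: not installed")
```

Running it inside the container prints `name==version` lines that could be copied back into `requirements.txt` to pin a known-good set.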