Commit 09b313f

Parent(s): 9244862

[ADD] Harness tasks, data display

- .gitignore +2 -0

- README.md +11 -2

- app.py +342 -60

- assets/entity_distribution.png +0 -0

- assets/image.png +0 -0

- assets/ner_evaluation_example.png +0 -0

- eval_metrics_app.py +75 -0

- medic-harness-requests/.gitattributes +58 -0

- medic-harness-results/.gitattributes +58 -0

- medic-harness-results/aaditya/Llama3-OpenBioLLM-70B/results_2024-07-24T15:26:36Z.json +37 -0

- medic-harness-results/meta-llama/Llama-3.1-8B-Instruct/results_2024-07-24T15:26:36Z.json +39 -0

- requirements.txt +4 -2

- src/about.py +231 -36

- src/display/css_html_js.py +7 -0

- src/display/utils.py +105 -25

- src/envs.py +10 -10

- src/leaderboard/read_evals.py +122 -62

- src/populate.py +11 -8

- src/submission/check_validity.py +17 -7

- src/submission/submit.py +107 -19

.gitignore

CHANGED

|

@@ -10,4 +10,6 @@ eval-queue/

|

|

| 10 |

eval-results/

|

| 11 |

eval-queue-bk/

|

| 12 |

eval-results-bk/

|

|

|

|

|

|

|

| 13 |

logs/

|

|

|

|

| 10 |

eval-results/

|

| 11 |

eval-queue-bk/

|

| 12 |

eval-results-bk/

|

| 13 |

+

eval-queue-local/

|

| 14 |

+

eval-results-local/

|

| 15 |

logs/

|

README.md

CHANGED

|

@@ -1,5 +1,5 @@

|

|

| 1 |

---

|

| 2 |

-

title:

|

| 3 |

emoji: 🥇

|

| 4 |

colorFrom: green

|

| 5 |

colorTo: indigo

|

|

@@ -7,8 +7,17 @@ sdk: gradio

|

|

| 7 |

app_file: app.py

|

| 8 |

pinned: true

|

| 9 |

license: apache-2.0

|


| 10 |

---

|

| 11 |

|


| 12 |

# Start the configuration

|

| 13 |

|

| 14 |

Most of the variables to change for a default leaderboard are in `src/env.py` (replace the path for your leaderboard) and `src/about.py` (for tasks).

|

|

@@ -41,4 +50,4 @@ If you encounter problem on the space, don't hesitate to restart it to remove th

|

|

| 41 |

You'll find

|

| 42 |

- the main table' columns names and properties in `src/display/utils.py`

|

| 43 |

- the logic to read all results and request files, then convert them in dataframe lines, in `src/leaderboard/read_evals.py`, and `src/populate.py`

|

| 44 |

-

-

|

|

|

|

| 1 |

---

|

| 2 |

+

title: Clinical NER Leaderboard

|

| 3 |

emoji: 🥇

|

| 4 |

colorFrom: green

|

| 5 |

colorTo: indigo

|

|

|

|

| 7 |

app_file: app.py

|

| 8 |

pinned: true

|

| 9 |

license: apache-2.0

|

| 10 |

+

tags:

|

| 11 |

+

- leaderboard

|

| 12 |

+

- submission:automatic

|

| 13 |

+

- test:public

|

| 14 |

+

- judge:auto

|

| 15 |

+

- modality:text

|

| 16 |

---

|

| 17 |

|

| 18 |

+

Also known as the NCER leaderboard HF, Huggingface. See the paper for more info: https://huggingface.co/papers/2410.05046.

|

| 19 |

+

|

| 20 |

+

|

| 21 |

# Start the configuration

|

| 22 |

|

| 23 |

Most of the variables to change for a default leaderboard are in `src/env.py` (replace the path for your leaderboard) and `src/about.py` (for tasks).

|

|

|

|

| 50 |

You'll find

|

| 51 |

- the main table' columns names and properties in `src/display/utils.py`

|

| 52 |

- the logic to read all results and request files, then convert them in dataframe lines, in `src/leaderboard/read_evals.py`, and `src/populate.py`

|

| 53 |

+

- the logic to allow or filter submissions in `src/submission/submit.py` and `src/submission/check_validity.py`

|

app.py

CHANGED

|

@@ -1,5 +1,6 @@

|

|

|

|

|

|

|

|

| 1 |

import gradio as gr

|

| 2 |

-

from gradio_leaderboard import Leaderboard, ColumnFilter, SelectColumns

|

| 3 |

import pandas as pd

|

| 4 |

from apscheduler.schedulers.background import BackgroundScheduler

|

| 5 |

from huggingface_hub import snapshot_download

|

|

@@ -9,30 +10,41 @@ from src.about import (

|

|

| 9 |

CITATION_BUTTON_TEXT,

|

| 10 |

EVALUATION_QUEUE_TEXT,

|

| 11 |

INTRODUCTION_TEXT,

|

| 12 |

-

|


| 13 |

TITLE,

|

|

|

|

| 14 |

)

|

| 15 |

from src.display.css_html_js import custom_css

|

| 16 |

from src.display.utils import (

|

| 17 |

-

|

| 18 |

-

|

|

|

|

|

|

|

| 19 |

EVAL_COLS,

|

| 20 |

EVAL_TYPES,

|

|

|

|

|

|

|

| 21 |

AutoEvalColumn,

|

| 22 |

ModelType,

|

| 23 |

-

|

|

|

|

|

|

|

| 24 |

WeightType,

|

| 25 |

-

|

| 26 |

)

|

| 27 |

from src.envs import API, EVAL_REQUESTS_PATH, EVAL_RESULTS_PATH, QUEUE_REPO, REPO_ID, RESULTS_REPO, TOKEN

|

| 28 |

from src.populate import get_evaluation_queue_df, get_leaderboard_df

|

| 29 |

-

from src.submission.submit import add_new_eval

|

| 30 |

|

| 31 |

|

| 32 |

def restart_space():

|

| 33 |

API.restart_space(repo_id=REPO_ID)

|

| 34 |

|

| 35 |

-

|

| 36 |

try:

|

| 37 |

print(EVAL_REQUESTS_PATH)

|

| 38 |

snapshot_download(

|

|

@@ -48,8 +60,20 @@ try:

|

|

| 48 |

except Exception:

|

| 49 |

restart_space()

|

| 50 |

|


| 51 |

|

| 52 |

-

LEADERBOARD_DF = get_leaderboard_df(EVAL_RESULTS_PATH, EVAL_REQUESTS_PATH, COLS, BENCHMARK_COLS)

|

| 53 |

|

| 54 |

(

|

| 55 |

finished_eval_queue_df,

|

|

@@ -57,51 +81,288 @@ LEADERBOARD_DF = get_leaderboard_df(EVAL_RESULTS_PATH, EVAL_REQUESTS_PATH, COLS,

|

|

| 57 |

pending_eval_queue_df,

|

| 58 |

) = get_evaluation_queue_df(EVAL_REQUESTS_PATH, EVAL_COLS)

|

| 59 |

|

| 60 |

-

def init_leaderboard(dataframe):

|

| 61 |

-

if dataframe is None or dataframe.empty:

|

| 62 |

-

raise ValueError("Leaderboard DataFrame is empty or None.")

|

| 63 |

-

return Leaderboard(

|

| 64 |

-

value=dataframe,

|

| 65 |

-

datatype=[c.type for c in fields(AutoEvalColumn)],

|

| 66 |

-

select_columns=SelectColumns(

|

| 67 |

-

default_selection=[c.name for c in fields(AutoEvalColumn) if c.displayed_by_default],

|

| 68 |

-

cant_deselect=[c.name for c in fields(AutoEvalColumn) if c.never_hidden],

|

| 69 |

-

label="Select Columns to Display:",

|

| 70 |

-

),

|

| 71 |

-

search_columns=[AutoEvalColumn.model.name, AutoEvalColumn.license.name],

|

| 72 |

-

hide_columns=[c.name for c in fields(AutoEvalColumn) if c.hidden],

|

| 73 |

-

filter_columns=[

|

| 74 |

-

ColumnFilter(AutoEvalColumn.model_type.name, type="checkboxgroup", label="Model types"),

|

| 75 |

-

ColumnFilter(AutoEvalColumn.precision.name, type="checkboxgroup", label="Precision"),

|

| 76 |

-

ColumnFilter(

|

| 77 |

-

AutoEvalColumn.params.name,

|

| 78 |

-

type="slider",

|

| 79 |

-

min=0.01,

|

| 80 |

-

max=150,

|

| 81 |

-

label="Select the number of parameters (B)",

|

| 82 |

-

),

|

| 83 |

-

ColumnFilter(

|

| 84 |

-

AutoEvalColumn.still_on_hub.name, type="boolean", label="Deleted/incomplete", default=True

|

| 85 |

-

),

|

| 86 |

-

],

|

| 87 |

-

bool_checkboxgroup_label="Hide models",

|

| 88 |

-

interactive=False,

|

| 89 |

-

)

|

| 90 |

|


| 91 |

|

|

|

|

| 92 |

demo = gr.Blocks(css=custom_css)

|

| 93 |

with demo:

|

| 94 |

gr.HTML(TITLE)

|

|

|

|

| 95 |

gr.Markdown(INTRODUCTION_TEXT, elem_classes="markdown-text")

|

| 96 |

|

| 97 |

with gr.Tabs(elem_classes="tab-buttons") as tabs:

|

| 98 |

-

with gr.TabItem("🏅

|

| 99 |

-

|


| 100 |

|

| 101 |

-

|

| 102 |

-

gr.Markdown(LLM_BENCHMARKS_TEXT, elem_classes="markdown-text")

|

| 103 |

|

| 104 |

-

|


| 105 |

with gr.Column():

|

| 106 |

with gr.Row():

|

| 107 |

gr.Markdown(EVALUATION_QUEUE_TEXT, elem_classes="markdown-text")

|

|

@@ -146,8 +407,16 @@ with demo:

|

|

| 146 |

|

| 147 |

with gr.Row():

|

| 148 |

with gr.Column():

|

|

|

|

| 149 |

model_name_textbox = gr.Textbox(label="Model name")

|

|

|

|

| 150 |

revision_name_textbox = gr.Textbox(label="Revision commit", placeholder="main")

|


| 151 |

model_type = gr.Dropdown(

|

| 152 |

choices=[t.to_str(" : ") for t in ModelType if t != ModelType.Unknown],

|

| 153 |

label="Model type",

|

|

@@ -157,21 +426,29 @@ with demo:

|

|

| 157 |

)

|

| 158 |

|

| 159 |

with gr.Column():

|

| 160 |

-

|

| 161 |

-

|

| 162 |

-

|

| 163 |

-

|

| 164 |

-

|

| 165 |

-

|

| 166 |

)

|

| 167 |

-

|

| 168 |

-

choices=[

|

| 169 |

-

label="

|

| 170 |

multiselect=False,

|

| 171 |

-

value="

|

| 172 |

interactive=True,

|

| 173 |

-

|

| 174 |

-

|


| 175 |

|

| 176 |

submit_button = gr.Button("Submit Eval")

|

| 177 |

submission_result = gr.Markdown()

|

|

@@ -179,15 +456,20 @@ with demo:

|

|

| 179 |

add_new_eval,

|

| 180 |

[

|

| 181 |

model_name_textbox,

|

| 182 |

-

base_model_name_textbox,

|

| 183 |

revision_name_textbox,

|

| 184 |

-

|

| 185 |

-

|


| 186 |

model_type,

|

| 187 |

],

|

| 188 |

submission_result,

|

| 189 |

)

|

| 190 |

|

|

|

|

| 191 |

with gr.Row():

|

| 192 |

with gr.Accordion("📙 Citation", open=False):

|

| 193 |

citation_button = gr.Textbox(

|

|

@@ -201,4 +483,4 @@ with demo:

|

|

| 201 |

scheduler = BackgroundScheduler()

|

| 202 |

scheduler.add_job(restart_space, "interval", seconds=1800)

|

| 203 |

scheduler.start()

|

| 204 |

-

demo.queue(default_concurrency_limit=40).launch()

|

|

|

|

| 1 |

+

import subprocess

|

| 2 |

+

|

| 3 |

import gradio as gr

|

|

|

|

| 4 |

import pandas as pd

|

| 5 |

from apscheduler.schedulers.background import BackgroundScheduler

|

| 6 |

from huggingface_hub import snapshot_download

|

|

|

|

| 10 |

CITATION_BUTTON_TEXT,

|

| 11 |

EVALUATION_QUEUE_TEXT,

|

| 12 |

INTRODUCTION_TEXT,

|

| 13 |

+

LLM_BENCHMARKS_TEXT_1,

|

| 14 |

+

EVALUATION_EXAMPLE_IMG,

|

| 15 |

+

LLM_BENCHMARKS_TEXT_2,

|

| 16 |

+

# ENTITY_DISTRIBUTION_IMG,

|

| 17 |

+

LLM_BENCHMARKS_TEXT_3,

|

| 18 |

TITLE,

|

| 19 |

+

LOGO

|

| 20 |

)

|

| 21 |

from src.display.css_html_js import custom_css

|

| 22 |

from src.display.utils import (

|

| 23 |

+

DATASET_BENCHMARK_COLS,

|

| 24 |

+

TYPES_BENCHMARK_COLS,

|

| 25 |

+

DATASET_COLS,

|

| 26 |

+

Clinical_TYPES_COLS,

|

| 27 |

EVAL_COLS,

|

| 28 |

EVAL_TYPES,

|

| 29 |

+

NUMERIC_INTERVALS,

|

| 30 |

+

TYPES,

|

| 31 |

AutoEvalColumn,

|

| 32 |

ModelType,

|

| 33 |

+

ModelArch,

|

| 34 |

+

PromptTemplateName,

|

| 35 |

+

Precision,

|

| 36 |

WeightType,

|

| 37 |

+

fields,

|

| 38 |

)

|

| 39 |

from src.envs import API, EVAL_REQUESTS_PATH, EVAL_RESULTS_PATH, QUEUE_REPO, REPO_ID, RESULTS_REPO, TOKEN

|

| 40 |

from src.populate import get_evaluation_queue_df, get_leaderboard_df

|

| 41 |

+

from src.submission.submit import add_new_eval, PLACEHOLDER_DATASET_WISE_NORMALIZATION_CONFIG

|

| 42 |

|

| 43 |

|

| 44 |

def restart_space():

|

| 45 |

API.restart_space(repo_id=REPO_ID)

|

| 46 |

|

| 47 |

+

|

| 48 |

try:

|

| 49 |

print(EVAL_REQUESTS_PATH)

|

| 50 |

snapshot_download(

|

|

|

|

| 60 |

except Exception:

|

| 61 |

restart_space()

|

| 62 |

|

| 63 |

+

# Span based results

|

| 64 |

+

_, harness_datasets_original_df = get_leaderboard_df(EVAL_RESULTS_PATH, EVAL_REQUESTS_PATH, DATASET_COLS, DATASET_BENCHMARK_COLS, "accuracy", "datasets")

|

| 65 |

+

harness_datasets_leaderboard_df = harness_datasets_original_df.copy()

|

| 66 |

+

|

| 67 |

+

# _, span_based_types_original_df = get_leaderboard_df(EVAL_RESULTS_PATH, EVAL_REQUESTS_PATH, Clinical_TYPES_COLS, TYPES_BENCHMARK_COLS, "SpanBasedWithPartialOverlap", "clinical_types")

|

| 68 |

+

# span_based_types_leaderboard_df = span_based_types_original_df.copy()

|

| 69 |

+

|

| 70 |

+

# # Token based results

|

| 71 |

+

# _, token_based_datasets_original_df = get_leaderboard_df(EVAL_RESULTS_PATH, EVAL_REQUESTS_PATH, DATASET_COLS, DATASET_BENCHMARK_COLS, "TokenBasedWithMacroAverage", "datasets")

|

| 72 |

+

# token_based_datasets_leaderboard_df = token_based_datasets_original_df.copy()

|

| 73 |

+

|

| 74 |

+

# _, token_based_types_original_df = get_leaderboard_df(EVAL_RESULTS_PATH, EVAL_REQUESTS_PATH, Clinical_TYPES_COLS, TYPES_BENCHMARK_COLS, "TokenBasedWithMacroAverage", "clinical_types")

|

| 75 |

+

# token_based_types_leaderboard_df = token_based_types_original_df.copy()

|

| 76 |

|

|

|

|

| 77 |

|

| 78 |

(

|

| 79 |

finished_eval_queue_df,

|

|

|

|

| 81 |

pending_eval_queue_df,

|

| 82 |

) = get_evaluation_queue_df(EVAL_REQUESTS_PATH, EVAL_COLS)

|

| 83 |

|


| 84 |

|

| 85 |

+

def update_df(shown_columns, subset="datasets"):

|

| 86 |

+

leaderboard_table_df = harness_datasets_leaderboard_df.copy()

|

| 87 |

+

hidden_leader_board_df = harness_datasets_original_df

|

| 88 |

+

# else:

|

| 89 |

+

# match evaluation_metric:

|

| 90 |

+

# case "Span Based":

|

| 91 |

+

# leaderboard_table_df = span_based_types_leaderboard_df.copy()

|

| 92 |

+

# hidden_leader_board_df = span_based_types_original_df

|

| 93 |

+

# case "Token Based":

|

| 94 |

+

# leaderboard_table_df = token_based_types_leaderboard_df.copy()

|

| 95 |

+

# hidden_leader_board_df = token_based_types_original_df

|

| 96 |

+

# case _:

|

| 97 |

+

# pass

|

| 98 |

+

|

| 99 |

+

|

| 100 |

+

value_cols = [c.name for c in fields(AutoEvalColumn) if c.never_hidden] + shown_columns

|

| 101 |

+

|

| 102 |

+

return leaderboard_table_df[value_cols], hidden_leader_board_df

|

| 103 |

+

|

| 104 |

+

|

| 105 |

+

# Searching and filtering

|

| 106 |

+

def update_table(

|

| 107 |

+

hidden_df: pd.DataFrame,

|

| 108 |

+

columns: list,

|

| 109 |

+

query: str,

|

| 110 |

+

type_query: list = None,

|

| 111 |

+

architecture_query: list = None,

|

| 112 |

+

size_query: list = None,

|

| 113 |

+

precision_query: str = None,

|

| 114 |

+

show_deleted: bool = False,

|

| 115 |

+

):

|

| 116 |

+

filtered_df = filter_models(hidden_df, type_query, architecture_query, size_query, precision_query, show_deleted)

|

| 117 |

+

filtered_df = filter_queries(query, filtered_df)

|

| 118 |

+

df = select_columns(filtered_df, columns, list(hidden_df.columns))

|

| 119 |

+

return df

|

| 120 |

+

|

| 121 |

+

|

| 122 |

+

def search_table(df: pd.DataFrame, query: str) -> pd.DataFrame:

|

| 123 |

+

return df[(df[AutoEvalColumn.model.name].str.contains(query, case=False))]

|

| 124 |

+

|

| 125 |

+

|

| 126 |

+

def select_columns(df: pd.DataFrame, columns: list, cols:list) -> pd.DataFrame:

|

| 127 |

+

always_here_cols = [

|

| 128 |

+

AutoEvalColumn.model_type_symbol.name,

|

| 129 |

+

AutoEvalColumn.model.name,

|

| 130 |

+

]

|

| 131 |

+

# We use COLS to maintain sorting

|

| 132 |

+

filtered_df = df[always_here_cols + [c for c in cols if c in df.columns and c in columns]]

|

| 133 |

+

return filtered_df

|

| 134 |

+

|

| 135 |

+

|

| 136 |

+

def filter_queries(query: str, filtered_df: pd.DataFrame) -> pd.DataFrame:

|

| 137 |

+

final_df = []

|

| 138 |

+

if query != "":

|

| 139 |

+

queries = [q.strip() for q in query.split(";")]

|

| 140 |

+

for _q in queries:

|

| 141 |

+

_q = _q.strip()

|

| 142 |

+

if _q != "":

|

| 143 |

+

temp_filtered_df = search_table(filtered_df, _q)

|

| 144 |

+

if len(temp_filtered_df) > 0:

|

| 145 |

+

final_df.append(temp_filtered_df)

|

| 146 |

+

if len(final_df) > 0:

|

| 147 |

+

filtered_df = pd.concat(final_df)

|

| 148 |

+

filtered_df = filtered_df.drop_duplicates(

|

| 149 |

+

subset=[

|

| 150 |

+

AutoEvalColumn.model.name,

|

| 151 |

+

# AutoEvalColumn.precision.name,

|

| 152 |

+

# AutoEvalColumn.revision.name,

|

| 153 |

+

]

|

| 154 |

+

)

|

| 155 |

+

|

| 156 |

+

return filtered_df

|
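Note on `filter_queries`: its search behaviour can be tried without pandas. The sketch below mirrors the logic on plain dictionaries; `filter_queries_sketch` and the `"model"` key are illustrative names, not part of this commit. Queries are split on `;`, matched as case-insensitive substrings, combined with OR, and de-duplicated by model name. As in the diff, when no query matches anything, the input comes back unfiltered.

```python
def filter_queries_sketch(query, rows, key="model"):
    """Plain-Python mirror of the filter_queries logic (illustrative names)."""
    if query == "":
        return rows
    matches = []
    for q in (part.strip() for part in query.split(";")):
        if q == "":
            continue
        # Case-insensitive substring match. The original uses pandas
        # str.contains, which treats the query as a regex by default.
        matches.extend(r for r in rows if q.lower() in r[key].lower())
    if not matches:
        # Same as the diff: no hits leaves the table unfiltered.
        return rows
    seen, unique = set(), []
    for r in matches:
        # Keep the first occurrence of each model name.
        if r[key] not in seen:
            seen.add(r[key])
            unique.append(r)
    return unique


rows = [
    {"model": "meta-llama/Llama-3.1-8B-Instruct"},
    {"model": "aaditya/Llama3-OpenBioLLM-70B"},
]
print([r["model"] for r in filter_queries_sketch("openbio; llama", rows)])
```

Returning the full table on a miss may surprise users who expect an empty result; an explicit empty frame would be one alternative, if that behaviour is preferred.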

| 157 |

+

|

| 158 |

+

|

| 159 |

+

def filter_models(

|

| 160 |

+

df: pd.DataFrame, type_query: list, architecture_query: list, size_query: list, precision_query: list, show_deleted: bool

|

| 161 |

+

) -> pd.DataFrame:

|

| 162 |

+

# Show all models

|

| 163 |

+

# if show_deleted:

|

| 164 |

+

# filtered_df = df

|

| 165 |

+

# else: # Show only still on the hub models

|

| 166 |

+

# filtered_df = df[df[AutoEvalColumn.still_on_hub.name] == True]

|

| 167 |

+

|

| 168 |

+

filtered_df = df

|

| 169 |

+

|

| 170 |

+

if type_query is not None:

|

| 171 |

+

type_emoji = [t[0] for t in type_query]

|

| 172 |

+

filtered_df = filtered_df.loc[df[AutoEvalColumn.model_type_symbol.name].isin(type_emoji)]

|

| 173 |

+

|

| 174 |

+

if architecture_query is not None:

|

| 175 |

+

arch_types = [t for t in architecture_query]

|

| 176 |

+

filtered_df = filtered_df.loc[df[AutoEvalColumn.architecture.name].isin(arch_types)]

|

| 177 |

+

# filtered_df = filtered_df.loc[df[AutoEvalColumn.architecture.name].isin(architecture_query + ["None"])]

|

| 178 |

+

|

| 179 |

+

if precision_query is not None:

|

| 180 |

+

if AutoEvalColumn.precision.name in df.columns:

|

| 181 |

+

filtered_df = filtered_df.loc[df[AutoEvalColumn.precision.name].isin(precision_query + ["None"])]

|

| 182 |

+

|

| 183 |

+

if size_query is not None:

|

| 184 |

+

numeric_interval = pd.IntervalIndex(sorted([NUMERIC_INTERVALS[s] for s in size_query]))

|

| 185 |

+

params_column = pd.to_numeric(df[AutoEvalColumn.params.name], errors="coerce")

|

| 186 |

+

mask = params_column.apply(lambda x: any(numeric_interval.contains(x)))

|

| 187 |

+

filtered_df = filtered_df.loc[mask]

|

| 188 |

+

|

| 189 |

+

return filtered_df

|
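Note on the size filter in `filter_models`: a row is kept when its parameter count falls inside any selected interval, and values that cannot be parsed as numbers are dropped. A dependency-free sketch of that rule follows. The bucket labels and bounds in `SIZE_BUCKETS` are hypothetical (the real `NUMERIC_INTERVALS` is defined in `src/display/utils.py` and is not shown in this part of the diff), and right-closed intervals are an assumption.

```python
import math

# Hypothetical buckets: label -> (low, high], in billions of parameters.
SIZE_BUCKETS = {"~7": (3.0, 9.0), "~13": (9.0, 20.0), "70+": (70.0, math.inf)}


def to_number(value):
    """Coerce to float, giving NaN on failure (like pd.to_numeric(errors="coerce"))."""
    try:
        return float(value)
    except (TypeError, ValueError):
        return math.nan


def in_selected_sizes(params, selected):
    """True if params falls inside any selected (low, high] bucket."""
    x = to_number(params)
    # Comparisons with NaN are always False, so unparseable sizes are excluded.
    return any(low < x <= high for low, high in (SIZE_BUCKETS[s] for s in selected))


print(in_selected_sizes(8, ["~7"]), in_selected_sizes("unknown", ["~7", "70+"]))
```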

| 190 |

+

|

| 191 |

+

def change_submit_request_form(model_architecture):

|

| 192 |

+

match model_architecture:

|

| 193 |

+

case "Encoder":

|

| 194 |

+

return (

|

| 195 |

+

gr.Textbox(label="Threshold for gliner models", visible=False),

|

| 196 |

+

gr.Radio(

|

| 197 |

+

choices=["True", "False"],

|

| 198 |

+

label="Load GLiNER Tokenizer",

|

| 199 |

+

visible=False

|

| 200 |

+

),

|

| 201 |

+

gr.Dropdown(

|

| 202 |

+

choices=[prompt_template.value for prompt_template in PromptTemplateName],

|

| 203 |

+

label="Prompt for generation",

|

| 204 |

+

multiselect=False,

|

| 205 |

+

# value="HTML Highlighted Spans",

|

| 206 |

+

interactive=True,

|

| 207 |

+

visible=False

|

| 208 |

+

)

|

| 209 |

+

)

|

| 210 |

+

case "Decoder":

|

| 211 |

+

return (

|

| 212 |

+

gr.Textbox(label="Threshold for gliner models", visible=False),

|

| 213 |

+

gr.Radio(

|

| 214 |

+

choices=["True", "False"],

|

| 215 |

+

label="Load GLiNER Tokenizer",

|

| 216 |

+

visible=False

|

| 217 |

+

),

|

| 218 |

+

gr.Dropdown(

|

| 219 |

+

choices=[prompt_template.value for prompt_template in PromptTemplateName],

|

| 220 |

+

label="Prompt for generation",

|

| 221 |

+

multiselect=False,

|

| 222 |

+

# value="HTML Highlighted Spans",

|

| 223 |

+

interactive=True,

|

| 224 |

+

visible=True

|

| 225 |

+

)

|

| 226 |

+

)

|

| 227 |

+

case "GLiNER Encoder":

|

| 228 |

+

return (

|

| 229 |

+

gr.Textbox(label="Threshold for gliner models", visible=True),

|

| 230 |

+

gr.Radio(

|

| 231 |

+

choices=["True", "False"],

|

| 232 |

+

label="Load GLiNER Tokenizer",

|

| 233 |

+

visible=True

|

| 234 |

+

),

|

| 235 |

+

gr.Dropdown(

|

| 236 |

+

choices=[prompt_template.value for prompt_template in PromptTemplateName],

|

| 237 |

+

label="Prompt for generation",

|

| 238 |

+

multiselect=False,

|

| 239 |

+

# value="HTML Highlighted Spans",

|

| 240 |

+

interactive=True,

|

| 241 |

+

visible=False

|

| 242 |

+

)

|

| 243 |

+

)

|

| 244 |

|

| 245 |

+

|

| 246 |

demo = gr.Blocks(css=custom_css)

|

| 247 |

with demo:

|

| 248 |

gr.HTML(TITLE)

|

| 249 |

+

gr.HTML(LOGO, elem_classes="logo")

|

| 250 |

gr.Markdown(INTRODUCTION_TEXT, elem_classes="markdown-text")

|

| 251 |

|

| 252 |

with gr.Tabs(elem_classes="tab-buttons") as tabs:

|

| 253 |

+

with gr.TabItem("🏅 Closed Ended Evaluation", elem_id="llm-benchmark-tab-table", id=0):

|

| 254 |

+

with gr.Row():

|

| 255 |

+

with gr.Column():

|

| 256 |

+

with gr.Row():

|

| 257 |

+

search_bar = gr.Textbox(

|

| 258 |

+

placeholder=" 🔍 Search for your model (separate multiple queries with `;`) and press ENTER...",

|

| 259 |

+

show_label=False,

|

| 260 |

+

elem_id="search-bar",

|

| 261 |

+

)

|

| 262 |

+

with gr.Row():

|

| 263 |

+

shown_columns = gr.CheckboxGroup(

|

| 264 |

+

choices=[c.name for c in fields(AutoEvalColumn) if not c.hidden and not c.never_hidden and not c.clinical_type_col],

|

| 265 |

+

value=[

|

| 266 |

+

c.name

|

| 267 |

+

for c in fields(AutoEvalColumn)

|

| 268 |

+

if c.displayed_by_default and not c.hidden and not c.never_hidden and not c.clinical_type_col

|

| 269 |

+

],

|

| 270 |

+

label="Select columns to show",

|

| 271 |

+

elem_id="column-select",

|

| 272 |

+

interactive=True,

|

| 273 |

+

)

|

| 274 |

+

# with gr.Row():

|

| 275 |

+

# deleted_models_visibility = gr.Checkbox(

|

| 276 |

+

# value=False, label="Show gated/private/deleted models", interactive=True

|

| 277 |

+

# )

|

| 278 |

+

with gr.Column(min_width=320):

|

| 279 |

+

# with gr.Box(elem_id="box-filter"):

|

| 280 |

+

filter_columns_type = gr.CheckboxGroup(

|

| 281 |

+

label="Model Types",

|

| 282 |

+

choices=[t.to_str() for t in ModelType],

|

| 283 |

+

value=[t.to_str() for t in ModelType],

|

| 284 |

+

interactive=True,

|

| 285 |

+

elem_id="filter-columns-type",

|

| 286 |

+

)

|

| 287 |

+

# filter_columns_architecture = gr.CheckboxGroup(

|

| 288 |

+

# label="Architecture Types",

|

| 289 |

+

# choices=[i.value.name for i in ModelArch],

|

| 290 |

+

# value=[i.value.name for i in ModelArch],

|

| 291 |

+

# interactive=True,

|

| 292 |

+

# elem_id="filter-columns-architecture",

|

| 293 |

+

# )

|

| 294 |

+

filter_columns_size = gr.CheckboxGroup(

|

| 295 |

+

label="Model sizes (in billions of parameters)",

|

| 296 |

+

choices=list(NUMERIC_INTERVALS.keys()),

|

| 297 |

+

value=list(NUMERIC_INTERVALS.keys()),

|

| 298 |

+

interactive=True,

|

| 299 |

+

elem_id="filter-columns-size",

|

| 300 |

+

)

|

| 301 |

|

| 302 |

+

datasets_leaderboard_df, datasets_original_df = update_df(shown_columns.value, subset="datasets")

|

|

|

|

| 303 |

|

| 304 |

+

leaderboard_table = gr.components.Dataframe(

|

| 305 |

+

value=datasets_leaderboard_df[[c.name for c in fields(AutoEvalColumn) if c.never_hidden] + shown_columns.value],

|

| 306 |

+

headers=[c.name for c in fields(AutoEvalColumn) if c.never_hidden] + shown_columns.value,

|

| 307 |

+

datatype=TYPES,

|

| 308 |

+

elem_id="leaderboard-table",

|

| 309 |

+

interactive=False,

|

| 310 |

+

visible=True,

|

| 311 |

+

)

|

| 312 |

+

|

| 313 |

+

# Dummy leaderboard for handling the case when the user uses backspace key

|

| 314 |

+

# hidden_leaderboard_table_for_search = gr.components.Dataframe(

|

| 315 |

+

# value=datasets_original_df[DATASET_COLS],

|

| 316 |

+

# headers=DATASET_COLS,

|

| 317 |

+

# datatype=TYPES,

|

| 318 |

+

# visible=False,

|

| 319 |

+

# )

|

| 320 |

+

|

| 321 |

+

|

| 322 |

+

# search_bar.submit(

|

| 323 |

+

# update_table,

|

| 324 |

+

# [

|

| 325 |

+

# hidden_leaderboard_table_for_search,

|

| 326 |

+

# shown_columns,

|

| 327 |

+

# search_bar,

|

| 328 |

+

# filter_columns_type,

|

| 329 |

+

# # filter_columns_architecture

|

| 330 |

+

# ],

|

| 331 |

+

# leaderboard_table,

|

| 332 |

+

# )

|

| 333 |

+

# for selector in [

|

| 334 |

+

# shown_columns,

|

| 335 |

+

# filter_columns_type,

|

| 336 |

+

# # filter_columns_architecture,

|

| 337 |

+

# # filter_columns_size,

|

| 338 |

+

# # deleted_models_visibility,

|

| 339 |

+

# ]:

|

| 340 |

+

# selector.change(

|

| 341 |

+

# update_table,

|

| 342 |

+

# [

|

| 343 |

+

# hidden_leaderboard_table_for_search,

|

| 344 |

+

# shown_columns,

|

| 345 |

+

# search_bar,

|

| 346 |

+

# filter_columns_type,

|

| 347 |

+

# # filter_columns_architecture,

|

| 348 |

+

# ],

|

| 349 |

+

# leaderboard_table,

|

| 350 |

+

# queue=True,

|

| 351 |

+

# )

|

| 352 |

+

|

| 353 |

+

with gr.TabItem("🏅 Open Ended Evaluation", elem_id="llm-benchmark-tab-table", id=1):

|

| 354 |

+

pass

|

| 355 |

+

with gr.TabItem("🏅 Med Safety", elem_id="llm-benchmark-tab-table", id=2):

|

| 356 |

+

pass

|

| 357 |

+

|

| 358 |

+

with gr.TabItem("📝 About", elem_id="llm-benchmark-tab-table", id=3):

|

| 359 |

+

gr.Markdown(LLM_BENCHMARKS_TEXT_1, elem_classes="markdown-text")

|

| 360 |

+

gr.HTML(EVALUATION_EXAMPLE_IMG, elem_classes="logo")

|

| 361 |

+

gr.Markdown(LLM_BENCHMARKS_TEXT_2, elem_classes="markdown-text")

|

| 362 |

+

# gr.HTML(ENTITY_DISTRIBUTION_IMG, elem_classes="logo")

|

| 363 |

+

gr.Markdown(LLM_BENCHMARKS_TEXT_3, elem_classes="markdown-text")

|

| 364 |

+

|

| 365 |

+

with gr.TabItem("🚀 Submit here! ", elem_id="llm-benchmark-tab-table", id=4):

|

| 366 |

with gr.Column():

|

| 367 |

with gr.Row():

|

| 368 |

gr.Markdown(EVALUATION_QUEUE_TEXT, elem_classes="markdown-text")

|

|

|

|

| 407 |

|

| 408 |

with gr.Row():

|

| 409 |

with gr.Column():

|

| 410 |

+

|

| 411 |

model_name_textbox = gr.Textbox(label="Model name")

|

| 412 |

+

|

| 413 |

revision_name_textbox = gr.Textbox(label="Revision commit", placeholder="main")

|

| 414 |

+

|

| 415 |

+

model_arch = gr.Radio(

|

| 416 |

+

choices=[t.to_str(" : ") for t in ModelArch if t != ModelArch.Unknown],

|

| 417 |

+

label="Model Architecture",

|

| 418 |

+

)

|

| 419 |

+

|

| 420 |

model_type = gr.Dropdown(

|

| 421 |

choices=[t.to_str(" : ") for t in ModelType if t != ModelType.Unknown],

|

| 422 |

label="Model type",

|

|

|

|

| 426 |

)

|

| 427 |

|

| 428 |

with gr.Column():

|

| 429 |

+

label_normalization_map = gr.Textbox(lines=6, label="Label Normalization Map", placeholder=PLACEHOLDER_DATASET_WISE_NORMALIZATION_CONFIG)

|

| 430 |

+

gliner_threshold = gr.Textbox(label="Threshold for GLiNER models", visible=False)

|

| 431 |

+

gliner_tokenizer_bool = gr.Radio(

|

| 432 |

+

choices=["True", "False"],

|

| 433 |

+

label="Load GLiNER Tokenizer",

|

| 434 |

+

visible=False

|

| 435 |

)

|

| 436 |

+

prompt_name = gr.Dropdown(

|

| 437 |

+

choices=[prompt_template.value for prompt_template in PromptTemplateName],

|

| 438 |

+

label="Prompt for generation",

|

| 439 |

multiselect=False,

|

| 440 |

+

value="HTML Highlighted Spans",

|

| 441 |

interactive=True,

|

| 442 |

+

visible=False

|

| 443 |

+

)# should be a dropdown

|

| 444 |

+

|

| 445 |

+

# parsing_function - this is tied to the prompt & therefore does not need to be specified

|

| 446 |

+

# generation_parameters = gr.Textbox(label="Generation params in json format") just default for now

|

| 447 |

+

|

| 448 |

+

model_arch.change(fn=change_submit_request_form, inputs=model_arch, outputs=[

|

| 449 |

+

gliner_threshold,

|

| 450 |

+

gliner_tokenizer_bool,

|

| 451 |

+

prompt_name])

|

| 452 |

|

| 453 |

submit_button = gr.Button("Submit Eval")

|

| 454 |

submission_result = gr.Markdown()

|

|

|

|

| 456 |

add_new_eval,

|

| 457 |

[

|

| 458 |

model_name_textbox,

|

| 459 |

+

# base_model_name_textbox,

|

| 460 |

revision_name_textbox,

|

| 461 |

+

model_arch,

|

| 462 |

+

label_normalization_map,

|

| 463 |

+

gliner_threshold,

|

| 464 |

+

gliner_tokenizer_bool,

|

| 465 |

+

prompt_name,

|

| 466 |

+

# weight_type,

|

| 467 |

model_type,

|

| 468 |

],

|

| 469 |

submission_result,

|

| 470 |

)

|

| 471 |

|

| 472 |

+

|

| 473 |

with gr.Row():

|

| 474 |

with gr.Accordion("📙 Citation", open=False):

|

| 475 |

citation_button = gr.Textbox(

|

|

|

|

| 483 |

scheduler = BackgroundScheduler()

|

| 484 |

scheduler.add_job(restart_space, "interval", seconds=1800)

|

| 485 |

scheduler.start()

|

| 486 |

+

demo.queue(default_concurrency_limit=40).launch(allowed_paths=['./assets/'])

|

assets/entity_distribution.png

ADDED

|

assets/image.png

ADDED

|

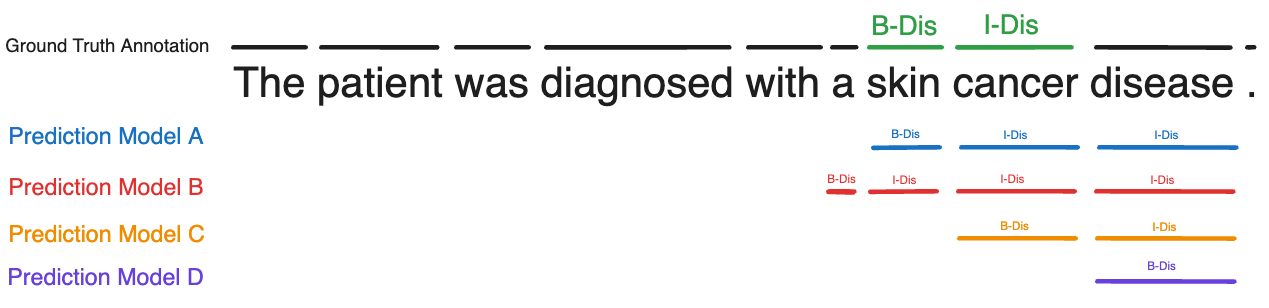

assets/ner_evaluation_example.png

ADDED

|

eval_metrics_app.py

ADDED

|

@@ -0,0 +1,75 @@

|


| 1 |

+

import gradio as gr

|

| 2 |

+

|

| 3 |

+

# Function to compute evaluation metrics (dummy implementation)

|

| 4 |

+

def compute_metrics(gt_spans, pred_spans):

|

| 5 |

+

# Dummy implementation of a metric computation

|

| 6 |

+

# Replace this with actual metric computation logic

|

| 7 |

+

tp = len(set(gt_spans) & set(pred_spans))

|

| 8 |

+

fp = len(set(pred_spans) - set(gt_spans))

|

| 9 |

+

fn = len(set(gt_spans) - set(pred_spans))

|

| 10 |

+

precision = tp / (tp + fp) if (tp + fp) > 0 else 0

|

| 11 |

+

recall = tp / (tp + fn) if (tp + fn) > 0 else 0

|

| 12 |

+

f1_score = 2 * precision * recall / (precision + recall) if (precision + recall) > 0 else 0

|

| 13 |

+

|

| 14 |

+

return {"precision": precision, "recall": recall, "f1_score": f1_score}

|

| 15 |

+

|

| 16 |

+

def create_app():

|

| 17 |

+

with gr.Blocks() as demo:

|

| 18 |

+

# Input components

|

| 19 |

+

text_input = gr.Textbox(label="Input Text")

|

| 20 |

+

highlight_input = gr.Textbox(label="Highlight Text and Press Add")

|

| 21 |

+

|

| 22 |

+

gt_spans_state = gr.State([])

|

| 23 |

+

pred_spans_state = gr.State([])

|

| 24 |

+

|

| 25 |

+

# Buttons for ground truth and prediction

|

| 26 |

+

add_gt_button = gr.Button("Add to Ground Truth")

|

| 27 |

+

add_pred_button = gr.Button("Add to Predictions")

|

| 28 |

+

|

| 29 |

+

# Outputs for highlighted spans

|

| 30 |

+

gt_output = gr.HighlightedText(label="Ground Truth Spans")

|

| 31 |

+

pred_output = gr.HighlightedText(label="Predicted Spans")

|

| 32 |

+

|

| 33 |

+

# Compute metrics button and its output

|

| 34 |

+

compute_button = gr.Button("Compute Metrics")

|

| 35 |

+

metrics_output = gr.JSON(label="Metrics")

|

| 36 |

+

|

| 37 |

+

# Function to update spans

|

| 38 |

+

def update_spans(text, span, gt_spans, pred_spans, is_gt):

|

| 39 |

+

start_idx = text.find(span)

|

| 40 |

+

end_idx = start_idx + len(span)

|

| 41 |

+

new_span = (start_idx, end_idx)

|

| 42 |

+

if is_gt:

|

| 43 |

+

gt_spans.append(new_span)

|

| 44 |

+

gt_spans = list(set(gt_spans))

|

| 45 |

+

else:

|

| 46 |

+

pred_spans.append(new_span)

|

| 47 |

+

pred_spans = list(set(pred_spans))

|

| 48 |

+

return gt_spans, pred_spans, highlight_spans(text, gt_spans), highlight_spans(text, pred_spans)

|

| 49 |

+

|

| 50 |

+

# Function to highlight spans

|

| 51 |

+

def highlight_spans(text, spans):

|

| 52 |

+

entities = []

|

| 53 |

+

for start, end in spans:

|

| 54 |

+

entities.append({"entity": "highlight", "start": start, "end": end})

|

| 55 |

+

return {"text": text, "entities": entities}

|

| 56 |

+

|

| 57 |

+

# Event handlers for buttons

|

| 58 |

+

add_gt_button.click(fn=update_spans, inputs=[text_input, highlight_input, gt_spans_state, pred_spans_state, gr.State(True)], outputs=[gt_spans_state, pred_spans_state, gt_output, pred_output])

|

| 59 |

+

add_pred_button.click(fn=update_spans, inputs=[text_input, highlight_input, gt_spans_state, pred_spans_state, gr.State(False)], outputs=[gt_spans_state, pred_spans_state, gt_output, pred_output])

|

| 60 |

+

|

| 61 |

+

# Function to compute metrics

|

| 62 |

+

def on_compute_metrics(gt_spans, pred_spans):

|

| 63 |

+

metrics = compute_metrics(gt_spans, pred_spans)

|

| 64 |

+

return metrics

|

| 65 |

+

|

| 66 |

+

compute_button.click(fn=on_compute_metrics, inputs=[gt_spans_state, pred_spans_state], outputs=metrics_output)

|

| 67 |

+

|

| 68 |

+

# Reset both highlighted views to the plain input text whenever it changes

|

| 69 |

+

text_input.change(fn=lambda x: ([(x, None)], [(x, None)]), inputs=text_input, outputs=[gt_output, pred_output])

|

| 70 |

+

|

| 71 |

+

return demo

|

| 72 |

+

|

| 73 |

+

# Run the app

|

| 74 |

+

demo = create_app()

|

| 75 |

+

demo.launch()

|
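The span bookkeeping in `eval_metrics_app.py` can be exercised without the Gradio UI. The block below is a minimal sketch, not the harness's actual metric code: `span_prf` mirrors the exact-match set logic of `compute_metrics`, and `find_span` is a hypothetical helper (not in the commit) that returns `None` when the fragment is absent, instead of letting `str.find`'s `-1` turn into a bogus span.

```python
from typing import Dict, Iterable, Optional, Tuple

Span = Tuple[int, int]  # (start, end) character offsets, end exclusive


def span_prf(gt_spans: Iterable[Span], pred_spans: Iterable[Span]) -> Dict[str, float]:
    """Exact-match precision, recall and F1 over two collections of spans."""
    gt, pred = set(gt_spans), set(pred_spans)
    tp = len(gt & pred)   # spans present in both sets
    fp = len(pred - gt)   # predicted but not in the ground truth
    fn = len(gt - pred)   # in the ground truth but not predicted
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "f1_score": f1}


def find_span(text: str, fragment: str) -> Optional[Span]:
    """Hypothetical helper: first occurrence of fragment as (start, end), or None."""
    if not fragment:
        return None
    start = text.find(fragment)
    if start == -1:
        return None
    return (start, start + len(fragment))
```

Only the first occurrence of a fragment is located, which matches the behaviour of `text.find(span)` in the app; repeated mentions of the same entity would need a different lookup.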

medic-harness-requests/.gitattributes

ADDED

|

@@ -0,0 +1,58 @@

| 1 |

+

*.7z filter=lfs diff=lfs merge=lfs -text

|

| 2 |

+

*.arrow filter=lfs diff=lfs merge=lfs -text

|

| 3 |

+

*.bin filter=lfs diff=lfs merge=lfs -text

|

| 4 |

+

*.bz2 filter=lfs diff=lfs merge=lfs -text

|

| 5 |

+

*.ckpt filter=lfs diff=lfs merge=lfs -text

|

| 6 |

+

*.ftz filter=lfs diff=lfs merge=lfs -text

|

| 7 |

+

*.gz filter=lfs diff=lfs merge=lfs -text

|

| 8 |

+

*.h5 filter=lfs diff=lfs merge=lfs -text

|

| 9 |

+

*.joblib filter=lfs diff=lfs merge=lfs -text

|

| 10 |

+

*.lfs.* filter=lfs diff=lfs merge=lfs -text

|

| 11 |

+

*.lz4 filter=lfs diff=lfs merge=lfs -text

|

| 12 |

+

*.mlmodel filter=lfs diff=lfs merge=lfs -text

|

| 13 |

+

*.model filter=lfs diff=lfs merge=lfs -text

|

| 14 |

+

*.msgpack filter=lfs diff=lfs merge=lfs -text

|

| 15 |

+

*.npy filter=lfs diff=lfs merge=lfs -text

|

| 16 |

+

*.npz filter=lfs diff=lfs merge=lfs -text

|

| 17 |

+

*.onnx filter=lfs diff=lfs merge=lfs -text

|

| 18 |

+

*.ot filter=lfs diff=lfs merge=lfs -text

|

| 19 |

+

*.parquet filter=lfs diff=lfs merge=lfs -text

|

| 20 |

+

*.pb filter=lfs diff=lfs merge=lfs -text

|

| 21 |

+

*.pickle filter=lfs diff=lfs merge=lfs -text

|

| 22 |

+

*.pkl filter=lfs diff=lfs merge=lfs -text

|

| 23 |

+

*.pt filter=lfs diff=lfs merge=lfs -text

|

| 24 |

+

*.pth filter=lfs diff=lfs merge=lfs -text

|

| 25 |

+

*.rar filter=lfs diff=lfs merge=lfs -text

|

| 26 |

+

*.safetensors filter=lfs diff=lfs merge=lfs -text

|

| 27 |

+

saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

| 28 |

+

*.tar.* filter=lfs diff=lfs merge=lfs -text

|

| 29 |

+

*.tar filter=lfs diff=lfs merge=lfs -text

|

| 30 |

+

*.tflite filter=lfs diff=lfs merge=lfs -text

|

| 31 |

+

*.tgz filter=lfs diff=lfs merge=lfs -text

|

| 32 |

+

*.wasm filter=lfs diff=lfs merge=lfs -text

|

| 33 |

+

*.xz filter=lfs diff=lfs merge=lfs -text

|

| 34 |

+

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 35 |

+

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

# Audio files - uncompressed

|

| 38 |

+

*.pcm filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

*.sam filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

*.raw filter=lfs diff=lfs merge=lfs -text

|

| 41 |

+

# Audio files - compressed

|

| 42 |

+

*.aac filter=lfs diff=lfs merge=lfs -text

|

| 43 |

+

*.flac filter=lfs diff=lfs merge=lfs -text

|

| 44 |

+

*.mp3 filter=lfs diff=lfs merge=lfs -text

|

| 45 |

+

*.ogg filter=lfs diff=lfs merge=lfs -text

|

| 46 |

+

*.wav filter=lfs diff=lfs merge=lfs -text

|

| 47 |

+

# Image files - uncompressed

|

| 48 |

+

*.bmp filter=lfs diff=lfs merge=lfs -text

|

| 49 |

+

*.gif filter=lfs diff=lfs merge=lfs -text

|

| 50 |

+

*.png filter=lfs diff=lfs merge=lfs -text

|

| 51 |

+

*.tiff filter=lfs diff=lfs merge=lfs -text

|

| 52 |

+

# Image files - compressed

|

| 53 |

+

*.jpg filter=lfs diff=lfs merge=lfs -text

|

| 54 |

+

*.jpeg filter=lfs diff=lfs merge=lfs -text

|

| 55 |

+

*.webp filter=lfs diff=lfs merge=lfs -text

|

| 56 |

+

# Video files - compressed

|

| 57 |

+

*.mp4 filter=lfs diff=lfs merge=lfs -text

|

| 58 |

+

*.webm filter=lfs diff=lfs merge=lfs -text

|

medic-harness-results/.gitattributes

ADDED

|

@@ -0,0 +1,58 @@

| 1 |

+

*.7z filter=lfs diff=lfs merge=lfs -text

|

| 2 |

+

*.arrow filter=lfs diff=lfs merge=lfs -text

|

| 3 |

+

*.bin filter=lfs diff=lfs merge=lfs -text

|

| 4 |

+

*.bz2 filter=lfs diff=lfs merge=lfs -text

|

| 5 |

+

*.ckpt filter=lfs diff=lfs merge=lfs -text

|

| 6 |

+

*.ftz filter=lfs diff=lfs merge=lfs -text

|

| 7 |

+

*.gz filter=lfs diff=lfs merge=lfs -text

|

| 8 |

+

*.h5 filter=lfs diff=lfs merge=lfs -text

|

| 9 |

+

*.joblib filter=lfs diff=lfs merge=lfs -text

|

| 10 |

+

*.lfs.* filter=lfs diff=lfs merge=lfs -text

|

| 11 |

+

*.lz4 filter=lfs diff=lfs merge=lfs -text

|

| 12 |

+

*.mlmodel filter=lfs diff=lfs merge=lfs -text

|

| 13 |

+

*.model filter=lfs diff=lfs merge=lfs -text

|

| 14 |

+

*.msgpack filter=lfs diff=lfs merge=lfs -text

|

| 15 |

+

*.npy filter=lfs diff=lfs merge=lfs -text

|

| 16 |

+

*.npz filter=lfs diff=lfs merge=lfs -text

|

| 17 |

+

*.onnx filter=lfs diff=lfs merge=lfs -text

|

| 18 |

+

*.ot filter=lfs diff=lfs merge=lfs -text

|

| 19 |

+

*.parquet filter=lfs diff=lfs merge=lfs -text

|

| 20 |

+

*.pb filter=lfs diff=lfs merge=lfs -text

|

| 21 |

+

*.pickle filter=lfs diff=lfs merge=lfs -text

|

| 22 |

+

*.pkl filter=lfs diff=lfs merge=lfs -text

|

| 23 |

+

*.pt filter=lfs diff=lfs merge=lfs -text

|

| 24 |

+

*.pth filter=lfs diff=lfs merge=lfs -text

|

| 25 |

+

*.rar filter=lfs diff=lfs merge=lfs -text

|

| 26 |

+

*.safetensors filter=lfs diff=lfs merge=lfs -text

|

| 27 |

+

saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

| 28 |

+

*.tar.* filter=lfs diff=lfs merge=lfs -text

|

| 29 |

+

*.tar filter=lfs diff=lfs merge=lfs -text

|

| 30 |

+

*.tflite filter=lfs diff=lfs merge=lfs -text

|

| 31 |

+

*.tgz filter=lfs diff=lfs merge=lfs -text

|

| 32 |

+

*.wasm filter=lfs diff=lfs merge=lfs -text

|

| 33 |

+

*.xz filter=lfs diff=lfs merge=lfs -text

|

| 34 |

+

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 35 |

+

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

# Audio files - uncompressed

|

| 38 |

+

*.pcm filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

*.sam filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

*.raw filter=lfs diff=lfs merge=lfs -text

|

| 41 |

+

# Audio files - compressed

|

| 42 |

+

*.aac filter=lfs diff=lfs merge=lfs -text

|

| 43 |

+

*.flac filter=lfs diff=lfs merge=lfs -text

|

| 44 |

+

*.mp3 filter=lfs diff=lfs merge=lfs -text

|

| 45 |

+

*.ogg filter=lfs diff=lfs merge=lfs -text

|

| 46 |

+

*.wav filter=lfs diff=lfs merge=lfs -text

|

| 47 |

+

# Image files - uncompressed

|

| 48 |

+

*.bmp filter=lfs diff=lfs merge=lfs -text

|

| 49 |

+

*.gif filter=lfs diff=lfs merge=lfs -text

|

| 50 |

+

*.png filter=lfs diff=lfs merge=lfs -text

|

| 51 |

+

*.tiff filter=lfs diff=lfs merge=lfs -text

|

| 52 |

+

# Image files - compressed

|

| 53 |

+

*.jpg filter=lfs diff=lfs merge=lfs -text

|

| 54 |

+

*.jpeg filter=lfs diff=lfs merge=lfs -text

|

| 55 |

+

*.webp filter=lfs diff=lfs merge=lfs -text

|

| 56 |

+

# Video files - compressed

|

| 57 |

+

*.mp4 filter=lfs diff=lfs merge=lfs -text

|

| 58 |

+

*.webm filter=lfs diff=lfs merge=lfs -text

|

medic-harness-results/aaditya/Llama3-OpenBioLLM-70B/results_2024-07-24T15:26:36Z.json

ADDED

|

@@ -0,0 +1,37 @@

| 1 |

+

{

|

| 2 |

+

"config": {

|

| 3 |

+

"model_name": "aaditya/Llama3-OpenBioLLM-70B",

|

| 4 |

+

"revision": "main",

|

| 5 |

+

"submitted_time": "2024-07-24 14:33:56+00:00",

|

| 6 |

+

"model_type": "domain-specific",

|

| 7 |

+

"num_params": 70000000000,

|

| 8 |

+

"private": false,

|

| 9 |

+

"evaluated_time": "2024-07-24T15:26:36Z"

|

| 10 |

+

},

|

| 11 |

+

"results": {

|

| 12 |

+

"MMLU": {

|

| 13 |

+

"accuracy": 90.4

|

| 14 |

+

},

|

| 15 |

+

"MMLU-Pro": {

|

| 16 |

+

"accuracy": 64.2

|

| 17 |

+

},

|

| 18 |

+

"MedMCQA": {

|

| 19 |

+

"accuracy": 73.2

|

| 20 |

+

},

|

| 21 |

+

"MedQA": {

|

| 22 |

+

"accuracy": 76.9

|

| 23 |

+

},

|

| 24 |

+

"USMLE": {

|

| 25 |

+

"accuracy": 79.0

|

| 26 |

+

},

|

| 27 |

+

"PubMedQA": {

|

| 28 |

+

"accuracy": 73.2

|

| 29 |

+

},

|

| 30 |

+

"ToxiGen": {

|

| 31 |

+

"accuracy": 91.3

|

| 32 |

+

},

|

| 33 |

+

"Average": {

|

| 34 |

+

"accuracy": 78.3

|

| 35 |

+

}

|

| 36 |

+

}

|

| 37 |

+

}

|

medic-harness-results/meta-llama/Llama-3.1-8B-Instruct/results_2024-07-24T15:26:36Z.json

ADDED

|

@@ -0,0 +1,39 @@

| 1 |

+

{

|

| 2 |

+

"config": {

|

| 3 |

+

"model_name": "meta-llama/Llama-3.1-8B-Instruct",

|

| 4 |

+

"revision": "main",

|

| 5 |

+

"submitted_time": "2024-07-24 14:33:56+00:00",

|

| 6 |

+

"model_type": "instruct-tuned",

|

| 7 |

+

"num_params": 8000000000,

|

| 8 |

+

"private": false,

|

| 9 |

+

"evaluated_time": "2024-07-24T15:26:36Z"

|

| 10 |

+

},

|

| 11 |

+

"results": {

|

| 12 |

+

"MMLU": {

|

| 13 |

+

"accuracy": 73.4

|

| 14 |

+

},

|

| 15 |

+

"MMLU-Pro": {

|

| 16 |

+

"accuracy": 49.9

|

| 17 |

+

},

|

| 18 |

+

"MedMCQA": {

|

| 19 |

+

"accuracy": 58.4

|

| 20 |

+

},

|

| 21 |

+

"MedQA": {

|

| 22 |

+

"accuracy": 62.0

|

| 23 |

+

},

|

| 24 |

+

"USMLE": {

|

| 25 |

+

"accuracy": 68.2

|

| 26 |

+

},

|

| 27 |

+

"PubMedQA": {

|

| 28 |

+

"accuracy": 76.2

|

| 29 |

+

},

|

| 30 |

+

"ToxiGen": {

|

| 31 |

+

"accuracy": 82.3

|

| 32 |

+

},

|

| 33 |

+

"Average": {

|

| 34 |

+

"accuracy": 67.2

|

| 35 |

+

}

|

| 36 |

+

}

|

| 37 |

+

}

|

| 38 |

+

|

| 39 |

+

|
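The two result files above share one layout: a `config` object plus a `results` object that maps each benchmark to an `accuracy` value, with an `Average` entry alongside the individual benchmarks. The following is a rough sketch of reading such a file, assuming that layout holds for every result; `load_scores` and `recompute_average` are illustrative names and are not part of `src/leaderboard/read_evals.py`. The stored averages in both files appear consistent with an unweighted mean rounded to one decimal, which is what the sketch recomputes.

```python
import json
from statistics import mean
from typing import Dict


def load_scores(raw_json: str, metric: str = "accuracy") -> Dict[str, float]:
    """Map each benchmark name to its metric value, skipping the stored 'Average' row."""
    results = json.loads(raw_json)["results"]
    return {name: vals[metric] for name, vals in results.items() if name != "Average"}


def recompute_average(raw_json: str, metric: str = "accuracy") -> float:
    """Unweighted mean over the benchmarks, rounded to one decimal place."""
    scores = list(load_scores(raw_json, metric).values())
    return round(mean(scores), 1)
```

Recomputing the average rather than trusting the stored value is a cheap sanity check when result files are written by hand or by a separate job.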

requirements.txt

CHANGED

|

@@ -1,16 +1,18 @@

|

|

| 1 |

APScheduler

|

| 2 |

black

|

|

|

|

| 3 |

datasets

|

| 4 |

gradio

|

| 5 |

-

gradio[oauth]

|

| 6 |

-

gradio_leaderboard==0.0.9

|

| 7 |

gradio_client

|

| 8 |

huggingface-hub>=0.18.0

|

| 9 |

matplotlib

|

| 10 |

numpy

|

| 11 |

pandas

|

| 12 |

python-dateutil

|

|

|

|

| 13 |

tqdm

|

| 14 |

transformers

|

| 15 |

tokenizers>=0.15.0

|

|

|

|

|

|

|

| 16 |

sentencepiece

|

|

|

|

| 1 |

APScheduler

|

| 2 |

black

|

| 3 |

+

click

|

| 4 |

datasets

|

| 5 |

gradio

|

|

|

|

|

|

|

| 6 |

gradio_client

|

| 7 |

huggingface-hub>=0.18.0

|

| 8 |

matplotlib

|

| 9 |

numpy

|

| 10 |

pandas

|

| 11 |

python-dateutil

|

| 12 |

+

requests

|

| 13 |

tqdm

|

| 14 |

transformers

|

| 15 |

tokenizers>=0.15.0

|

| 16 |

+

git+https://github.com/EleutherAI/lm-evaluation-harness.git@b281b0921b636bc36ad05c0b0b0763bd6dd43463#egg=lm-eval

|

| 17 |

+

accelerate

|

| 18 |

sentencepiece

|

src/about.py

CHANGED

|

@@ -1,72 +1,267 @@

|

|

| 1 |

from dataclasses import dataclass

|

| 2 |

from enum import Enum

|

| 3 |

|

|

|

|

| 4 |

@dataclass

|

| 5 |

-

class

|

| 6 |

benchmark: str

|

| 7 |

metric: str

|

| 8 |

col_name: str

|

| 9 |

-

|

| 10 |

|

| 11 |

# Select your tasks here

|

| 12 |

# ---------------------------------------------------

|

| 13 |

-

class

|

| 14 |

-

# task_key in the json file, metric_key in the json file, name to display in the leaderboard

|

| 15 |

-

task0 = Task("anli_r1", "acc", "ANLI")

|

| 16 |

-

task1 = Task("logiqa", "acc_norm", "LogiQA")

|

|

|

|

|

|

|

|

|