Spaces:

Running

on

L40S

Running

on

L40S

Migrated from GitHub

Browse files- ORIGINAL_README.md +215 -0

- assert/lq/lq1.mp4 +0 -0

- assert/lq/lq2.mp4 +0 -0

- assert/lq/lq3.mp4 +0 -0

- assert/mask/lq3.png +0 -0

- assert/method.png +0 -0

- config/infer.yaml +21 -0

- infer.py +305 -0

- requirements.txt +10 -0

- src/dataset/dataset.py +50 -0

- src/dataset/face_align/align.py +36 -0

- src/dataset/face_align/yoloface.py +310 -0

- src/models/id_proj.py +20 -0

- src/models/model_insightface_360k.py +203 -0

- src/models/svfr_adapter/attention_processor.py +616 -0

- src/models/svfr_adapter/unet_3d_blocks.py +0 -0

- src/models/svfr_adapter/unet_3d_svd_condition_ip.py +536 -0

- src/pipelines/pipeline.py +812 -0

- src/utils/noise_util.py +25 -0

- src/utils/util.py +64 -0

ORIGINAL_README.md

ADDED

|

@@ -0,0 +1,215 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<!-- # SVFR: A Unified Framework for Generalized Video Face Restoration -->

|

| 2 |

+

|

| 3 |

+

<div>

|

| 4 |

+

<h1>SVFR: A Unified Framework for Generalized Video Face Restoration</h1>

|

| 5 |

+

</div>

|

| 6 |

+

|

| 7 |

+

[](https://arxiv.org/pdf/2501.01235)

|

| 8 |

+

[](https://wangzhiyaoo.github.io/SVFR/)

|

| 9 |

+

|

| 10 |

+

## 🔥 Overview

|

| 11 |

+

|

| 12 |

+

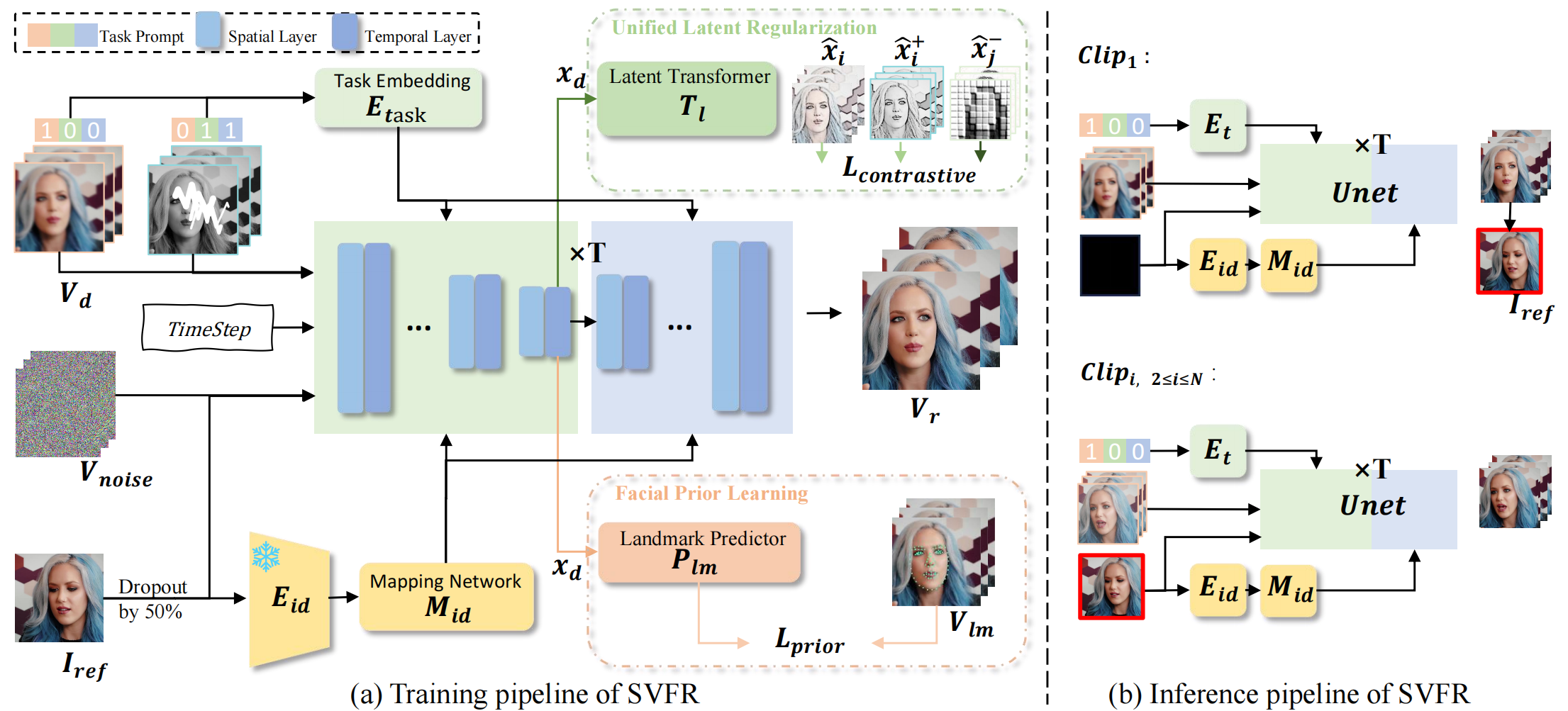

SVFR is a unified framework for face video restoration that supports tasks such as **BFR, Colorization, Inpainting**, and **their combinations** within one cohesive system.

|

| 13 |

+

|

| 14 |

+

<img src="assert/method.png">

|

| 15 |

+

|

| 16 |

+

## 🎬 Demo

|

| 17 |

+

|

| 18 |

+

### BFR

|

| 19 |

+

<!--

|

| 20 |

+

<div style="display: flex; gap: 10px;">

|

| 21 |

+

<video controls width="360">

|

| 22 |

+

<source src="https://wangzhiyaoo.github.io/SVFR/static/videos/wild-test/case1_bfr.mp4" type="video/mp4">

|

| 23 |

+

|

| 24 |

+

</video>

|

| 25 |

+

|

| 26 |

+

<video controls width="360">

|

| 27 |

+

<source src="https://wangzhiyaoo.github.io/SVFR/static/videos/wild-test/case4_bfr.mp4" type="video/mp4">

|

| 28 |

+

|

| 29 |

+

</video>

|

| 30 |

+

</div> -->

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

<!-- <div style="display: flex; gap: 10px;">

|

| 34 |

+

<video src="https://github.com/user-attachments/assets/49f985f3-a2db-4b9f-aed0-e9943bae9c17" controls width=45%></video>

|

| 35 |

+

<video src="https://github.com/user-attachments/assets/8fcd1dd9-79d3-4e57-b98e-a80ae2badfb5" controls width="45%"></video>

|

| 36 |

+

</div> -->

|

| 37 |

+

|

| 38 |

+

| Case1 | Case2 |

|

| 39 |

+

|--------------------------------------------------------------------------------------------------------------------------------|--------------------------------------------------------------------------------------------------------------------------------|

|

| 40 |

+

|<video src="https://github.com/user-attachments/assets/49f985f3-a2db-4b9f-aed0-e9943bae9c17" /> | <video src="https://github.com/user-attachments/assets/8fcd1dd9-79d3-4e57-b98e-a80ae2badfb5" /> |

|

| 41 |

+

|

| 42 |

+

|

| 43 |

+

<!-- <video src="https://wangzhiyaoo.github.io/SVFR/bfr"> -->

|

| 44 |

+

|

| 45 |

+

|

| 46 |

+

|

| 47 |

+

<!-- https://github.com/user-attachments/assets/49f985f3-a2db-4b9f-aed0-e9943bae9c17

|

| 48 |

+

|

| 49 |

+

https://github.com/user-attachments/assets/8fcd1dd9-79d3-4e57-b98e-a80ae2badfb5 -->

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

|

| 55 |

+

### BFR+Colorization

|

| 56 |

+

<!-- <div style="display: flex; gap: 10px;">

|

| 57 |

+

<video controls width="360">

|

| 58 |

+

<source src="https://wangzhiyaoo.github.io/SVFR/static/videos/wild-test/case10_bfr_colorization.mp4" type="video/mp4">

|

| 59 |

+

|

| 60 |

+

</video>

|

| 61 |

+

|

| 62 |

+

<video controls width="360">

|

| 63 |

+

<source src="https://wangzhiyaoo.github.io/SVFR/static/videos/wild-test/case12_bfr_colorization.mp4" type="video/mp4">

|

| 64 |

+

|

| 65 |

+

</video>

|

| 66 |

+

</div> -->

|

| 67 |

+

|

| 68 |

+

|

| 69 |

+

<!-- https://github.com/user-attachments/assets/795f4cb1-a7c9-41c5-9486-26e64a96bcf0

|

| 70 |

+

|

| 71 |

+

https://github.com/user-attachments/assets/6ccf2267-30be-4553-9ecc-f3e7e0ca1d6f -->

|

| 72 |

+

|

| 73 |

+

| Case3 | Case4 |

|

| 74 |

+

|--------------------------------------------------------------------------------------------------------------------------------|--------------------------------------------------------------------------------------------------------------------------------|

|

| 75 |

+

|<video src="https://github.com/user-attachments/assets/795f4cb1-a7c9-41c5-9486-26e64a96bcf0" /> | <video src="https://github.com/user-attachments/assets/6ccf2267-30be-4553-9ecc-f3e7e0ca1d6f" /> |

|

| 76 |

+

|

| 77 |

+

|

| 78 |

+

### BFR+Colorization+Inpainting

|

| 79 |

+

<!-- <div style="display: flex; gap: 10px;">

|

| 80 |

+

<video controls width="360">

|

| 81 |

+

<source src="https://wangzhiyaoo.github.io/SVFR/static/videos/wild-test/case14_bfr+colorization+inpainting.mp4" type="video/mp4">

|

| 82 |

+

|

| 83 |

+

</video>

|

| 84 |

+

|

| 85 |

+

<video controls width="360">

|

| 86 |

+

<source src="https://wangzhiyaoo.github.io/SVFR/static/videos/wild-test/case15_bfr+colorization+inpainting.mp4" type="video/mp4">

|

| 87 |

+

|

| 88 |

+

</video>

|

| 89 |

+

</div> -->

|

| 90 |

+

|

| 91 |

+

|

| 92 |

+

|

| 93 |

+

<!-- https://github.com/user-attachments/assets/6113819f-142b-4faa-b1c3-a2b669fd0786

|

| 94 |

+

|

| 95 |

+

https://github.com/user-attachments/assets/efdac23c-0ba5-4dad-ab8c-48904af5dd89

|

| 96 |

+

-->

|

| 97 |

+

|

| 98 |

+

|

| 99 |

+

| Case5 | Case6 |

|

| 100 |

+

|--------------------------------------------------------------------------------------------------------------------------------|--------------------------------------------------------------------------------------------------------------------------------|

|

| 101 |

+

|<video src="https://github.com/user-attachments/assets/6113819f-142b-4faa-b1c3-a2b669fd0786" /> | <video src="https://github.com/user-attachments/assets/efdac23c-0ba5-4dad-ab8c-48904af5dd89" /> |

|

| 102 |

+

|

| 103 |

+

|

| 104 |

+

## 🎙️ News

|

| 105 |

+

|

| 106 |

+

- **[2025.01.02]**: We released the initial version of the [inference code](#inference) and [models](#download-checkpoints). Stay tuned for continuous updates!

|

| 107 |

+

- **[2024.12.17]**: This repo is created!

|

| 108 |

+

|

| 109 |

+

## 🚀 Getting Started

|

| 110 |

+

|

| 111 |

+

## Setup

|

| 112 |

+

|

| 113 |

+

Use the following command to install a conda environment for SVFR from scratch:

|

| 114 |

+

|

| 115 |

+

```bash

|

| 116 |

+

conda create -n svfr python=3.9 -y

|

| 117 |

+

conda activate svfr

|

| 118 |

+

```

|

| 119 |

+

|

| 120 |

+

Install PyTorch: make sure to select the appropriate CUDA version based on your hardware, for example,

|

| 121 |

+

|

| 122 |

+

```bash

|

| 123 |

+

pip install torch==2.2.2 torchvision==0.17.2 torchaudio==2.2.2

|

| 124 |

+

```

|

| 125 |

+

|

| 126 |

+

Install Dependencies:

|

| 127 |

+

|

| 128 |

+

```bash

|

| 129 |

+

pip install -r requirements.txt

|

| 130 |

+

```

|

| 131 |

+

|

| 132 |

+

## Download checkpoints

|

| 133 |

+

|

| 134 |

+

<li>Download the Stable Video Diffusion</li>

|

| 135 |

+

|

| 136 |

+

```

|

| 137 |

+

conda install git-lfs

|

| 138 |

+

git lfs install

|

| 139 |

+

git clone https://huggingface.co/stabilityai/stable-video-diffusion-img2vid-xt models/stable-video-diffusion-img2vid-xt

|

| 140 |

+

```

|

| 141 |

+

|

| 142 |

+

<li>Download SVFR</li>

|

| 143 |

+

|

| 144 |

+

You can download checkpoints manually through link on [Google Drive](https://drive.google.com/drive/folders/1nzy9Vk-yA_DwXm1Pm4dyE2o0r7V6_5mn?usp=share_link).

|

| 145 |

+

|

| 146 |

+

Put checkpoints as follows:

|

| 147 |

+

|

| 148 |

+

```

|

| 149 |

+

└── models

|

| 150 |

+

├── face_align

|

| 151 |

+

│ ├── yoloface_v5m.pt

|

| 152 |

+

├── face_restoration

|

| 153 |

+

│ ├── unet.pth

|

| 154 |

+

│ ├── id_linear.pth

|

| 155 |

+

│ ├── insightface_glint360k.pth

|

| 156 |

+

└── stable-video-diffusion-img2vid-xt

|

| 157 |

+

├── vae

|

| 158 |

+

├── scheduler

|

| 159 |

+

└── ...

|

| 160 |

+

```

|

| 161 |

+

|

| 162 |

+

## Inference

|

| 163 |

+

|

| 164 |

+

### Inference single or multi task

|

| 165 |

+

|

| 166 |

+

```

|

| 167 |

+

python3 infer.py \

|

| 168 |

+

--config config/infer.yaml \

|

| 169 |

+

--task_ids 0 \

|

| 170 |

+

--input_path ./assert/lq/lq1.mp4 \

|

| 171 |

+

--output_dir ./results/

|

| 172 |

+

```

|

| 173 |

+

|

| 174 |

+

<li>task_id:</li>

|

| 175 |

+

|

| 176 |

+

> 0 -- bfr

|

| 177 |

+

> 1 -- colorization

|

| 178 |

+

> 2 -- inpainting

|

| 179 |

+

> 0,1 -- bfr and colorization

|

| 180 |

+

> 0,1,2 -- bfr and colorization and inpainting

|

| 181 |

+

> ...

|

| 182 |

+

|

| 183 |

+

### Inference with additional inpainting mask

|

| 184 |

+

|

| 185 |

+

```

|

| 186 |

+

# For Inference with Inpainting

|

| 187 |

+

# Add '--mask_path' if you need to specify the mask file.

|

| 188 |

+

|

| 189 |

+

python3 infer.py \

|

| 190 |

+

--config config/infer.yaml \

|

| 191 |

+

--task_ids 0,1,2 \

|

| 192 |

+

--input_path ./assert/lq/lq3.mp4 \

|

| 193 |

+

--output_dir ./results/

|

| 194 |

+

--mask_path ./assert/mask/lq3.png

|

| 195 |

+

```

|

| 196 |

+

|

| 197 |

+

## License

|

| 198 |

+

|

| 199 |

+

The code of SVFR is released under the MIT License. There is no limitation for both academic and commercial usage.

|

| 200 |

+

|

| 201 |

+

**The pretrained models we provided with this library are available for non-commercial research purposes only, including both auto-downloading models and manual-downloading models.**

|

| 202 |

+

|

| 203 |

+

|

| 204 |

+

## BibTex

|

| 205 |

+

```

|

| 206 |

+

@misc{wang2025svfrunifiedframeworkgeneralized,

|

| 207 |

+

title={SVFR: A Unified Framework for Generalized Video Face Restoration},

|

| 208 |

+

author={Zhiyao Wang and Xu Chen and Chengming Xu and Junwei Zhu and Xiaobin Hu and Jiangning Zhang and Chengjie Wang and Yuqi Liu and Yiyi Zhou and Rongrong Ji},

|

| 209 |

+

year={2025},

|

| 210 |

+

eprint={2501.01235},

|

| 211 |

+

archivePrefix={arXiv},

|

| 212 |

+

primaryClass={cs.CV},

|

| 213 |

+

url={https://arxiv.org/abs/2501.01235},

|

| 214 |

+

}

|

| 215 |

+

```

|

assert/lq/lq1.mp4

ADDED

|

Binary file (98.2 kB). View file

|

|

|

assert/lq/lq2.mp4

ADDED

|

Binary file (314 kB). View file

|

|

|

assert/lq/lq3.mp4

ADDED

|

Binary file (687 kB). View file

|

|

|

assert/mask/lq3.png

ADDED

|

assert/method.png

ADDED

|

config/infer.yaml

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

data:

|

| 2 |

+

n_sample_frames: 16

|

| 3 |

+

width: 512

|

| 4 |

+

height: 512

|

| 5 |

+

|

| 6 |

+

pretrained_model_name_or_path: "models/stable-video-diffusion-img2vid-xt"

|

| 7 |

+

unet_checkpoint_path: "models/face_restoration/unet.pth"

|

| 8 |

+

id_linear_checkpoint_path: "models/face_restoration/id_linear.pth"

|

| 9 |

+

net_arcface_checkpoint_path: "models/face_restoration/insightface_glint360k.pth"

|

| 10 |

+

# output_dir: 'result'

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

# test config

|

| 14 |

+

weight_dtype: 'fp16'

|

| 15 |

+

num_inference_steps: 30

|

| 16 |

+

decode_chunk_size: 16

|

| 17 |

+

overlap: 3

|

| 18 |

+

noise_aug_strength: 0.00

|

| 19 |

+

min_appearance_guidance_scale: 2.0

|

| 20 |

+

max_appearance_guidance_scale: 2.0

|

| 21 |

+

i2i_noise_strength: 1.0

|

infer.py

ADDED

|

@@ -0,0 +1,305 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import argparse

|

| 2 |

+

import warnings

|

| 3 |

+

import os

|

| 4 |

+

import numpy as np

|

| 5 |

+

import torch

|

| 6 |

+

import torch.utils.checkpoint

|

| 7 |

+

from PIL import Image

|

| 8 |

+

import random

|

| 9 |

+

|

| 10 |

+

from omegaconf import OmegaConf

|

| 11 |

+

from diffusers import AutoencoderKLTemporalDecoder

|

| 12 |

+

from diffusers.schedulers import EulerDiscreteScheduler

|

| 13 |

+

from transformers import CLIPVisionModelWithProjection

|

| 14 |

+

import torchvision.transforms as transforms

|

| 15 |

+

import torch.nn.functional as F

|

| 16 |

+

from src.models.svfr_adapter.unet_3d_svd_condition_ip import UNet3DConditionSVDModel

|

| 17 |

+

|

| 18 |

+

# pipeline

|

| 19 |

+

from src.pipelines.pipeline import LQ2VideoLongSVDPipeline

|

| 20 |

+

|

| 21 |

+

from src.utils.util import (

|

| 22 |

+

save_videos_grid,

|

| 23 |

+

seed_everything,

|

| 24 |

+

)

|

| 25 |

+

from torchvision.utils import save_image

|

| 26 |

+

|

| 27 |

+

from src.models.id_proj import IDProjConvModel

|

| 28 |

+

from src.models import model_insightface_360k

|

| 29 |

+

|

| 30 |

+

from src.dataset.face_align.align import AlignImage

|

| 31 |

+

|

| 32 |

+

warnings.filterwarnings("ignore")

|

| 33 |

+

|

| 34 |

+

import decord

|

| 35 |

+

import cv2

|

| 36 |

+

from src.dataset.dataset import get_affine_transform, mean_face_lm5p_256

|

| 37 |

+

|

| 38 |

+

BASE_DIR = '.'

|

| 39 |

+

|

| 40 |

+

def main(config,args):

|

| 41 |

+

if 'CUDA_VISIBLE_DEVICES' in os.environ:

|

| 42 |

+

cuda_visible_devices = os.environ['CUDA_VISIBLE_DEVICES']

|

| 43 |

+

print(f"CUDA_VISIBLE_DEVICES is set to: {cuda_visible_devices}")

|

| 44 |

+

else:

|

| 45 |

+

print("CUDA_VISIBLE_DEVICES is not set.")

|

| 46 |

+

|

| 47 |

+

save_dir = f"{BASE_DIR}/{args.output_dir}"

|

| 48 |

+

os.makedirs(save_dir,exist_ok=True)

|

| 49 |

+

|

| 50 |

+

vae = AutoencoderKLTemporalDecoder.from_pretrained(

|

| 51 |

+

f"{BASE_DIR}/{config.pretrained_model_name_or_path}",

|

| 52 |

+

subfolder="vae",

|

| 53 |

+

variant="fp16")

|

| 54 |

+

|

| 55 |

+

val_noise_scheduler = EulerDiscreteScheduler.from_pretrained(

|

| 56 |

+

f"{BASE_DIR}/{config.pretrained_model_name_or_path}",

|

| 57 |

+

subfolder="scheduler")

|

| 58 |

+

|

| 59 |

+

image_encoder = CLIPVisionModelWithProjection.from_pretrained(

|

| 60 |

+

f"{BASE_DIR}/{config.pretrained_model_name_or_path}",

|

| 61 |

+

subfolder="image_encoder",

|

| 62 |

+

variant="fp16")

|

| 63 |

+

unet = UNet3DConditionSVDModel.from_pretrained(

|

| 64 |

+

f"{BASE_DIR}/{config.pretrained_model_name_or_path}",

|

| 65 |

+

subfolder="unet",

|

| 66 |

+

variant="fp16")

|

| 67 |

+

|

| 68 |

+

weight_dir = 'models/face_align'

|

| 69 |

+

det_path = os.path.join(BASE_DIR, weight_dir, 'yoloface_v5m.pt')

|

| 70 |

+

align_instance = AlignImage("cuda", det_path=det_path)

|

| 71 |

+

|

| 72 |

+

to_tensor = transforms.Compose([

|

| 73 |

+

transforms.ToTensor(),

|

| 74 |

+

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))

|

| 75 |

+

])

|

| 76 |

+

|

| 77 |

+

import torch.nn as nn

|

| 78 |

+

class InflatedConv3d(nn.Conv2d):

|

| 79 |

+

def forward(self, x):

|

| 80 |

+

x = super().forward(x)

|

| 81 |

+

return x

|

| 82 |

+

# Add ref channel

|

| 83 |

+

old_weights = unet.conv_in.weight

|

| 84 |

+

old_bias = unet.conv_in.bias

|

| 85 |

+

new_conv1 = InflatedConv3d(

|

| 86 |

+

12,

|

| 87 |

+

old_weights.shape[0],

|

| 88 |

+

kernel_size=unet.conv_in.kernel_size,

|

| 89 |

+

stride=unet.conv_in.stride,

|

| 90 |

+

padding=unet.conv_in.padding,

|

| 91 |

+

bias=True if old_bias is not None else False,

|

| 92 |

+

)

|

| 93 |

+

param = torch.zeros((320, 4, 3, 3), requires_grad=True)

|

| 94 |

+

new_conv1.weight = torch.nn.Parameter(torch.cat((old_weights, param), dim=1))

|

| 95 |

+

if old_bias is not None:

|

| 96 |

+

new_conv1.bias = old_bias

|

| 97 |

+

unet.conv_in = new_conv1

|

| 98 |

+

unet.config["in_channels"] = 12

|

| 99 |

+

unet.config.in_channels = 12

|

| 100 |

+

|

| 101 |

+

|

| 102 |

+

id_linear = IDProjConvModel(in_channels=512, out_channels=1024).to(device='cuda')

|

| 103 |

+

|

| 104 |

+

# load pretrained weights

|

| 105 |

+

unet_checkpoint_path = os.path.join(BASE_DIR, config.unet_checkpoint_path)

|

| 106 |

+

unet.load_state_dict(

|

| 107 |

+

torch.load(unet_checkpoint_path, map_location="cpu"),

|

| 108 |

+

strict=True,

|

| 109 |

+

)

|

| 110 |

+

|

| 111 |

+

id_linear_checkpoint_path = os.path.join(BASE_DIR, config.id_linear_checkpoint_path)

|

| 112 |

+

id_linear.load_state_dict(

|

| 113 |

+

torch.load(id_linear_checkpoint_path, map_location="cpu"),

|

| 114 |

+

strict=True,

|

| 115 |

+

)

|

| 116 |

+

|

| 117 |

+

net_arcface = model_insightface_360k.getarcface(f'{BASE_DIR}/{config.net_arcface_checkpoint_path}').eval().to(device="cuda")

|

| 118 |

+

|

| 119 |

+

if config.weight_dtype == "fp16":

|

| 120 |

+

weight_dtype = torch.float16

|

| 121 |

+

elif config.weight_dtype == "fp32":

|

| 122 |

+

weight_dtype = torch.float32

|

| 123 |

+

elif config.weight_dtype == "bf16":

|

| 124 |

+

weight_dtype = torch.bfloat16

|

| 125 |

+

else:

|

| 126 |

+

raise ValueError(

|

| 127 |

+

f"Do not support weight dtype: {config.weight_dtype} during training"

|

| 128 |

+

)

|

| 129 |

+

|

| 130 |

+

image_encoder.to(weight_dtype)

|

| 131 |

+

vae.to(weight_dtype)

|

| 132 |

+

unet.to(weight_dtype)

|

| 133 |

+

id_linear.to(weight_dtype)

|

| 134 |

+

net_arcface.requires_grad_(False).to(weight_dtype)

|

| 135 |

+

|

| 136 |

+

pipe = LQ2VideoLongSVDPipeline(

|

| 137 |

+

unet=unet,

|

| 138 |

+

image_encoder=image_encoder,

|

| 139 |

+

vae=vae,

|

| 140 |

+

scheduler=val_noise_scheduler,

|

| 141 |

+

feature_extractor=None

|

| 142 |

+

|

| 143 |

+

)

|

| 144 |

+

pipe = pipe.to("cuda", dtype=unet.dtype)

|

| 145 |

+

|

| 146 |

+

seed_input = args.seed

|

| 147 |

+

seed_everything(seed_input)

|

| 148 |

+

|

| 149 |

+

video_path = args.input_path

|

| 150 |

+

task_ids = args.task_ids

|

| 151 |

+

|

| 152 |

+

if 2 in task_ids and args.mask_path is not None:

|

| 153 |

+

mask_path = args.mask_path

|

| 154 |

+

mask = Image.open(mask_path).convert("L")

|

| 155 |

+

mask_array = np.array(mask)

|

| 156 |

+

|

| 157 |

+

white_positions = mask_array == 255

|

| 158 |

+

|

| 159 |

+

print('task_ids:',task_ids)

|

| 160 |

+

task_prompt = [0,0,0]

|

| 161 |

+

for i in range(3):

|

| 162 |

+

if i in task_ids:

|

| 163 |

+

task_prompt[i] = 1

|

| 164 |

+

print("task_prompt:",task_prompt)

|

| 165 |

+

|

| 166 |

+

video_name = video_path.split('/')[-1]

|

| 167 |

+

# print(video_name)

|

| 168 |

+

|

| 169 |

+

if os.path.exists(os.path.join(save_dir, "result_frames", video_name[:-4])):

|

| 170 |

+

print(os.path.join(save_dir, "result_frames", video_name[:-4]))

|

| 171 |

+

# continue

|

| 172 |

+

|

| 173 |

+

cap = decord.VideoReader(video_path, fault_tol=1)

|

| 174 |

+

total_frames = len(cap)

|

| 175 |

+

T = total_frames #

|

| 176 |

+

print("total_frames:",total_frames)

|

| 177 |

+

step=1

|

| 178 |

+

drive_idx_start = 0

|

| 179 |

+

drive_idx_list = list(range(drive_idx_start, drive_idx_start + T * step, step))

|

| 180 |

+

assert len(drive_idx_list) == T

|

| 181 |

+

|

| 182 |

+

imSameIDs = []

|

| 183 |

+

vid_gt = []

|

| 184 |

+

for i, drive_idx in enumerate(drive_idx_list):

|

| 185 |

+

frame = cap[drive_idx].asnumpy()

|

| 186 |

+

imSameID = Image.fromarray(frame)

|

| 187 |

+

|

| 188 |

+

imSameID = imSameID.resize((512,512))

|

| 189 |

+

image_array = np.array(imSameID)

|

| 190 |

+

if 2 in task_ids and args.mask_path is not None:

|

| 191 |

+

image_array[white_positions] = [255, 255, 255] # mask for inpainting task

|

| 192 |

+

vid_gt.append(np.float32(image_array/255.))

|

| 193 |

+

imSameIDs.append(imSameID)

|

| 194 |

+

|

| 195 |

+

vid_lq = [(torch.from_numpy(frame).permute(2,0,1) - 0.5) / 0.5 for frame in vid_gt]

|

| 196 |

+

|

| 197 |

+

val_data = dict(

|

| 198 |

+

pixel_values_vid_lq = torch.stack(vid_lq,dim=0),

|

| 199 |

+

# pixel_values_ref_img=self.to_tensor(target_image),

|

| 200 |

+

# pixel_values_ref_concat_img=self.to_tensor(imSrc2),

|

| 201 |

+

task_ids=task_ids,

|

| 202 |

+

task_id_input=torch.tensor(task_prompt),

|

| 203 |

+

total_frames=total_frames,

|

| 204 |

+

)

|

| 205 |

+

|

| 206 |

+

window_overlap=0

|

| 207 |

+

inter_frame_list = get_overlap_slide_window_indices(val_data["total_frames"],config.data.n_sample_frames,window_overlap)

|

| 208 |

+

|

| 209 |

+

lq_frames = val_data["pixel_values_vid_lq"]

|

| 210 |

+

task_ids = val_data["task_ids"]

|

| 211 |

+

task_id_input = val_data["task_id_input"]

|

| 212 |

+

height, width = val_data["pixel_values_vid_lq"].shape[-2:]

|

| 213 |

+

|

| 214 |

+

print("Generating the first clip...")

|

| 215 |

+

output = pipe(

|

| 216 |

+

lq_frames[inter_frame_list[0]].to("cuda").to(weight_dtype), # lq

|

| 217 |

+

None, # ref concat

|

| 218 |

+

torch.zeros((1, len(inter_frame_list[0]), 49, 1024)).to("cuda").to(weight_dtype),# encoder_hidden_states

|

| 219 |

+

task_id_input.to("cuda").to(weight_dtype),

|

| 220 |

+

height=height,

|

| 221 |

+

width=width,

|

| 222 |

+

num_frames=len(inter_frame_list[0]),

|

| 223 |

+

decode_chunk_size=config.decode_chunk_size,

|

| 224 |

+

noise_aug_strength=config.noise_aug_strength,

|

| 225 |

+

min_guidance_scale=config.min_appearance_guidance_scale,

|

| 226 |

+

max_guidance_scale=config.max_appearance_guidance_scale,

|

| 227 |

+

overlap=config.overlap,

|

| 228 |

+

frames_per_batch=len(inter_frame_list[0]),

|

| 229 |

+

num_inference_steps=50,

|

| 230 |

+

i2i_noise_strength=config.i2i_noise_strength,

|

| 231 |

+

)

|

| 232 |

+

video = output.frames

|

| 233 |

+

|

| 234 |

+

ref_img_tensor = video[0][:,-1]

|

| 235 |

+

ref_img = (video[0][:,-1] *0.5+0.5).clamp(0,1) * 255.

|

| 236 |

+

ref_img = ref_img.permute(1,2,0).cpu().numpy().astype(np.uint8)

|

| 237 |

+

|

| 238 |

+

pts5 = align_instance(ref_img[:,:,[2,1,0]], maxface=True)[0][0]

|

| 239 |

+

|

| 240 |

+

warp_mat = get_affine_transform(pts5, mean_face_lm5p_256 * height/256)

|

| 241 |

+

ref_img = cv2.warpAffine(np.array(Image.fromarray(ref_img)), warp_mat, (height, width), flags=cv2.INTER_CUBIC)

|

| 242 |

+

ref_img = to_tensor(ref_img).to("cuda").to(weight_dtype)

|

| 243 |

+

|

| 244 |

+

save_image(ref_img*0.5 + 0.5,f"{save_dir}/ref_img_align.png")

|

| 245 |

+

|

| 246 |

+

ref_img = F.interpolate(ref_img.unsqueeze(0)[:, :, 0:224, 16:240], size=[112, 112], mode='bilinear')

|

| 247 |

+

_, id_feature_conv = net_arcface(ref_img)

|

| 248 |

+

id_embedding = id_linear(id_feature_conv)

|

| 249 |

+

|

| 250 |

+

print('Generating all video clips...')

|

| 251 |

+

video = pipe(

|

| 252 |

+

lq_frames.to("cuda").to(weight_dtype), # lq

|

| 253 |

+

ref_img_tensor.to("cuda").to(weight_dtype),

|

| 254 |

+

id_embedding.unsqueeze(1).repeat(1, len(lq_frames), 1, 1).to("cuda").to(weight_dtype), # encoder_hidden_states

|

| 255 |

+

task_id_input.to("cuda").to(weight_dtype),

|

| 256 |

+

height=height,

|

| 257 |

+

width=width,

|

| 258 |

+

num_frames=val_data["total_frames"],#frame_num,

|

| 259 |

+

decode_chunk_size=config.decode_chunk_size,

|

| 260 |

+

noise_aug_strength=config.noise_aug_strength,

|

| 261 |

+

min_guidance_scale=config.min_appearance_guidance_scale,

|

| 262 |

+

max_guidance_scale=config.max_appearance_guidance_scale,

|

| 263 |

+

overlap=config.overlap,

|

| 264 |

+

frames_per_batch=config.data.n_sample_frames,

|

| 265 |

+

num_inference_steps=config.num_inference_steps,

|

| 266 |

+

i2i_noise_strength=config.i2i_noise_strength,

|

| 267 |

+

).frames

|

| 268 |

+

|

| 269 |

+

|

| 270 |

+

video = (video*0.5 + 0.5).clamp(0, 1)

|

| 271 |

+

video = torch.cat([video.to(device="cuda")], dim=0).cpu()

|

| 272 |

+

|

| 273 |

+

save_videos_grid(video, f"{save_dir}/{video_name[:-4]}_{seed_input}.mp4", n_rows=1, fps=25)

|

| 274 |

+

|

| 275 |

+

if args.restore_frames:

|

| 276 |

+

video = video.squeeze(0)

|

| 277 |

+

os.makedirs(os.path.join(save_dir, "result_frames", f"{video_name[:-4]}_{seed_input}"),exist_ok=True)

|

| 278 |

+

print(os.path.join(save_dir, "result_frames", video_name[:-4]))

|

| 279 |

+

for i in range(video.shape[1]):

|

| 280 |

+

save_frames_path = os.path.join(f"{save_dir}/result_frames", f"{video_name[:-4]}_{seed_input}", f'{i:08d}.png')

|

| 281 |

+

save_image(video[:,i], save_frames_path)

|

| 282 |

+

|

| 283 |

+

|

| 284 |

+

def get_overlap_slide_window_indices(video_length, window_size, window_overlap):

|

| 285 |

+

inter_frame_list = []

|

| 286 |

+

for j in range(0, video_length, window_size-window_overlap):

|

| 287 |

+

inter_frame_list.append( [e % video_length for e in range(j, min(j + window_size, video_length))] )

|

| 288 |

+

|

| 289 |

+

return inter_frame_list

|

| 290 |

+

|

| 291 |

+

if __name__ == "__main__":

|

| 292 |

+

def parse_list(value):

|

| 293 |

+

return [int(x) for x in value.split(",")]

|

| 294 |

+

parser = argparse.ArgumentParser()

|

| 295 |

+

parser.add_argument("--config", type=str, default="./configs/infer.yaml")

|

| 296 |

+

parser.add_argument("--output_dir", type=str, default="output")

|

| 297 |

+

parser.add_argument("--seed", type=int, default=77)

|

| 298 |

+

parser.add_argument("--task_ids", type=parse_list, default=[0])

|

| 299 |

+

parser.add_argument("--input_path", type=str, default='./assert/lq/lq3.mp4')

|

| 300 |

+

parser.add_argument("--mask_path", type=str, default=None)

|

| 301 |

+

parser.add_argument("--restore_frames", action='store_true')

|

| 302 |

+

|

| 303 |

+

args = parser.parse_args()

|

| 304 |

+

config = OmegaConf.load(args.config)

|

| 305 |

+

main(config, args)

|

requirements.txt

ADDED

|

@@ -0,0 +1,10 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

accelerate

|

| 2 |

+

decord

|

| 3 |

+

diffusers

|

| 4 |

+

einops

|

| 5 |

+

moviepy==1.0.3

|

| 6 |

+

numpy<2.0

|

| 7 |

+

omegaconf

|

| 8 |

+

opencv-python

|

| 9 |

+

scikit-video

|

| 10 |

+

transformers

|

src/dataset/dataset.py

ADDED

|

@@ -0,0 +1,50 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

import numpy as np

|

| 3 |

+

import random

|

| 4 |

+

from PIL import Image

|

| 5 |

+

import torch

|

| 6 |

+

from torch.utils.data import Dataset

|

| 7 |

+

import torchvision.transforms as transforms

|

| 8 |

+

from transformers import CLIPImageProcessor

|

| 9 |

+

# import librosa

|

| 10 |

+

|

| 11 |

+

import os

|

| 12 |

+

import cv2

|

| 13 |

+

|

| 14 |

+

mean_face_lm5p_256 = np.array([

|

| 15 |

+

[(30.2946+8)*2+16, 51.6963*2],

|

| 16 |

+

[(65.5318+8)*2+16, 51.5014*2],

|

| 17 |

+

[(48.0252+8)*2+16, 71.7366*2],

|

| 18 |

+

[(33.5493+8)*2+16, 92.3655*2],

|

| 19 |

+

[(62.7299+8)*2+16, 92.2041*2],

|

| 20 |

+

], dtype=np.float32)

|

| 21 |

+

|

| 22 |

+

def get_affine_transform(target_face_lm5p, mean_lm5p):

|

| 23 |

+

mat_warp = np.zeros((2,3))

|

| 24 |

+

A = np.zeros((4,4))

|

| 25 |

+

B = np.zeros((4))

|

| 26 |

+

for i in range(5):

|

| 27 |

+

A[0][0] += target_face_lm5p[i][0] * target_face_lm5p[i][0] + target_face_lm5p[i][1] * target_face_lm5p[i][1]

|

| 28 |

+

A[0][2] += target_face_lm5p[i][0]

|

| 29 |

+

A[0][3] += target_face_lm5p[i][1]

|

| 30 |

+

|

| 31 |

+

B[0] += target_face_lm5p[i][0] * mean_lm5p[i][0] + target_face_lm5p[i][1] * mean_lm5p[i][1] #sb[1] += a[i].x*b[i].y - a[i].y*b[i].x;

|

| 32 |

+

B[1] += target_face_lm5p[i][0] * mean_lm5p[i][1] - target_face_lm5p[i][1] * mean_lm5p[i][0]

|

| 33 |

+

B[2] += mean_lm5p[i][0]

|

| 34 |

+

B[3] += mean_lm5p[i][1]

|

| 35 |

+

|

| 36 |

+

A[1][1] = A[0][0]

|

| 37 |

+

A[2][1] = A[1][2] = -A[0][3]

|

| 38 |

+

A[3][1] = A[1][3] = A[2][0] = A[0][2]

|

| 39 |

+

A[2][2] = A[3][3] = 5

|

| 40 |

+

A[3][0] = A[0][3]

|

| 41 |

+

|

| 42 |

+

_, mat23 = cv2.solve(A, B, flags=cv2.DECOMP_SVD)

|

| 43 |

+

mat_warp[0][0] = mat23[0]

|

| 44 |

+

mat_warp[1][1] = mat23[0]

|

| 45 |

+

mat_warp[0][1] = -mat23[1]

|

| 46 |

+

mat_warp[1][0] = mat23[1]

|

| 47 |

+

mat_warp[0][2] = mat23[2]

|

| 48 |

+

mat_warp[1][2] = mat23[3]

|

| 49 |

+

|

| 50 |

+

return mat_warp

|

src/dataset/face_align/align.py

ADDED

|

@@ -0,0 +1,36 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

import sys

|

| 3 |

+

BASE_DIR = os.path.dirname(os.path.abspath(__file__))

|

| 4 |

+

sys.path.append(BASE_DIR)

|

| 5 |

+

import torch

|

| 6 |

+

from src.dataset.face_align.yoloface import YoloFace

|

| 7 |

+

|

| 8 |

+

class AlignImage(object):

|

| 9 |

+

def __init__(self, device='cuda', det_path='checkpoints/yoloface_v5m.pt'):

|

| 10 |

+

self.facedet = YoloFace(pt_path=det_path, confThreshold=0.5, nmsThreshold=0.45, device=device)

|

| 11 |

+

|

| 12 |

+

@torch.no_grad()

|

| 13 |

+

def __call__(self, im, maxface=False):

|

| 14 |

+

bboxes, kpss, scores = self.facedet.detect(im)

|

| 15 |

+

face_num = bboxes.shape[0]

|

| 16 |

+

|

| 17 |

+

five_pts_list = []

|

| 18 |

+

scores_list = []

|

| 19 |

+

bboxes_list = []

|

| 20 |

+

for i in range(face_num):

|

| 21 |

+

five_pts_list.append(kpss[i].reshape(5,2))

|

| 22 |

+

scores_list.append(scores[i])

|

| 23 |

+

bboxes_list.append(bboxes[i])

|

| 24 |

+

|

| 25 |

+

if maxface and face_num>1:

|

| 26 |

+

max_idx = 0

|

| 27 |

+

max_area = (bboxes[0, 2])*(bboxes[0, 3])

|

| 28 |

+

for i in range(1, face_num):

|

| 29 |

+

area = (bboxes[i,2])*(bboxes[i,3])

|

| 30 |

+

if area>max_area:

|

| 31 |

+

max_idx = i

|

| 32 |

+

five_pts_list = [five_pts_list[max_idx]]

|

| 33 |

+

scores_list = [scores_list[max_idx]]

|

| 34 |

+

bboxes_list = [bboxes_list[max_idx]]

|

| 35 |

+

|

| 36 |

+

return five_pts_list, scores_list, bboxes_list

|

src/dataset/face_align/yoloface.py

ADDED

|

@@ -0,0 +1,310 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# -*- coding: UTF-8 -*-

|

| 2 |

+

import os

|

| 3 |

+

import cv2

|

| 4 |

+

import numpy as np

|

| 5 |

+

import torch

|

| 6 |

+

import torchvision

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

def xyxy2xywh(x):

|

| 10 |

+

# Convert nx4 boxes from [x1, y1, x2, y2] to [x, y, w, h] where xy1=top-left, xy2=bottom-right

|

| 11 |

+

y = x.clone() if isinstance(x, torch.Tensor) else np.copy(x)

|

| 12 |

+

y[:, 0] = (x[:, 0] + x[:, 2]) / 2 # x center

|

| 13 |

+

y[:, 1] = (x[:, 1] + x[:, 3]) / 2 # y center

|

| 14 |

+

y[:, 2] = x[:, 2] - x[:, 0] # width

|

| 15 |

+

y[:, 3] = x[:, 3] - x[:, 1] # height

|

| 16 |

+

return y

|

| 17 |

+

|

| 18 |

+

|

| 19 |

+

def xywh2xyxy(x):

|

| 20 |

+

# Convert nx4 boxes from [x, y, w, h] to [x1, y1, x2, y2] where xy1=top-left, xy2=bottom-right

|

| 21 |

+

y = x.clone() if isinstance(x, torch.Tensor) else np.copy(x)

|

| 22 |

+

y[:, 0] = x[:, 0] - x[:, 2] / 2 # top left x

|

| 23 |

+

y[:, 1] = x[:, 1] - x[:, 3] / 2 # top left y

|

| 24 |

+

y[:, 2] = x[:, 0] + x[:, 2] / 2 # bottom right x

|

| 25 |

+

y[:, 3] = x[:, 1] + x[:, 3] / 2 # bottom right y

|

| 26 |

+

return y

|

| 27 |

+

|

| 28 |

+

|

| 29 |

+

def box_iou(box1, box2):

|

| 30 |

+

# https://github.com/pytorch/vision/blob/master/torchvision/ops/boxes.py

|

| 31 |

+

"""

|

| 32 |

+

Return intersection-over-union (Jaccard index) of boxes.

|

| 33 |

+

Both sets of boxes are expected to be in (x1, y1, x2, y2) format.

|

| 34 |

+

Arguments:

|

| 35 |

+

box1 (Tensor[N, 4])

|

| 36 |

+

box2 (Tensor[M, 4])

|

| 37 |

+

Returns:

|

| 38 |

+

iou (Tensor[N, M]): the NxM matrix containing the pairwise

|

| 39 |

+

IoU values for every element in boxes1 and boxes2

|

| 40 |

+

"""

|

| 41 |

+

|

| 42 |

+

def box_area(box):

|

| 43 |

+

# box = 4xn

|

| 44 |

+

return (box[2] - box[0]) * (box[3] - box[1])

|

| 45 |

+

|

| 46 |

+

area1 = box_area(box1.T)

|

| 47 |

+

area2 = box_area(box2.T)

|

| 48 |

+

|

| 49 |

+

# inter(N,M) = (rb(N,M,2) - lt(N,M,2)).clamp(0).prod(2)

|

| 50 |

+

inter = (torch.min(box1[:, None, 2:], box2[:, 2:]) -

|

| 51 |

+

torch.max(box1[:, None, :2], box2[:, :2])).clamp(0).prod(2)

|

| 52 |

+

# iou = inter / (area1 + area2 - inter)

|

| 53 |

+

return inter / (area1[:, None] + area2 - inter)

|

| 54 |

+

|

| 55 |

+

|

| 56 |

+

def scale_coords(img1_shape, coords, img0_shape, ratio_pad=None):

|

| 57 |

+

# Rescale coords (xyxy) from img1_shape to img0_shape

|

| 58 |

+

if ratio_pad is None: # calculate from img0_shape

|

| 59 |

+

gain = min(img1_shape[0] / img0_shape[0], img1_shape[1] / img0_shape[1]) # gain = old / new

|

| 60 |

+

pad = (img1_shape[1] - img0_shape[1] * gain) / 2, (img1_shape[0] - img0_shape[0] * gain) / 2 # wh padding

|

| 61 |

+

else:

|

| 62 |

+

gain = ratio_pad[0][0]

|

| 63 |

+

pad = ratio_pad[1]

|

| 64 |

+

|

| 65 |

+

coords[:, [0, 2]] -= pad[0] # x padding

|

| 66 |

+

coords[:, [1, 3]] -= pad[1] # y padding

|

| 67 |

+

coords[:, :4] /= gain

|

| 68 |

+

clip_coords(coords, img0_shape)

|

| 69 |

+

return coords

|

| 70 |

+

|

| 71 |

+

|

| 72 |

+

def clip_coords(boxes, img_shape):

|

| 73 |

+

# Clip bounding xyxy bounding boxes to image shape (height, width)

|

| 74 |

+

boxes[:, 0].clamp_(0, img_shape[1]) # x1

|

| 75 |

+

boxes[:, 1].clamp_(0, img_shape[0]) # y1

|

| 76 |

+

boxes[:, 2].clamp_(0, img_shape[1]) # x2

|

| 77 |

+

boxes[:, 3].clamp_(0, img_shape[0]) # y2

|

| 78 |

+

|

| 79 |

+

|

| 80 |

+

def scale_coords_landmarks(img1_shape, coords, img0_shape, ratio_pad=None):

|

| 81 |

+

# Rescale coords (xyxy) from img1_shape to img0_shape

|

| 82 |

+

if ratio_pad is None: # calculate from img0_shape

|

| 83 |

+

gain = min(img1_shape[0] / img0_shape[0], img1_shape[1] / img0_shape[1]) # gain = old / new

|

| 84 |

+

pad = (img1_shape[1] - img0_shape[1] * gain) / 2, (img1_shape[0] - img0_shape[0] * gain) / 2 # wh padding

|

| 85 |

+

else:

|

| 86 |

+

gain = ratio_pad[0][0]

|

| 87 |

+

pad = ratio_pad[1]

|

| 88 |

+

|

| 89 |

+

coords[:, [0, 2, 4, 6, 8]] -= pad[0] # x padding

|

| 90 |

+

coords[:, [1, 3, 5, 7, 9]] -= pad[1] # y padding

|

| 91 |

+

coords[:, :10] /= gain

|

| 92 |

+

#clip_coords(coords, img0_shape)

|

| 93 |

+

coords[:, 0].clamp_(0, img0_shape[1]) # x1

|

| 94 |

+

coords[:, 1].clamp_(0, img0_shape[0]) # y1

|

| 95 |

+

coords[:, 2].clamp_(0, img0_shape[1]) # x2

|

| 96 |

+

coords[:, 3].clamp_(0, img0_shape[0]) # y2

|

| 97 |

+

coords[:, 4].clamp_(0, img0_shape[1]) # x3

|

| 98 |

+

coords[:, 5].clamp_(0, img0_shape[0]) # y3

|

| 99 |

+

coords[:, 6].clamp_(0, img0_shape[1]) # x4

|

| 100 |

+

coords[:, 7].clamp_(0, img0_shape[0]) # y4

|

| 101 |

+

coords[:, 8].clamp_(0, img0_shape[1]) # x5

|

| 102 |

+

coords[:, 9].clamp_(0, img0_shape[0]) # y5

|

| 103 |

+

return coords

|

| 104 |

+

|

| 105 |

+

|

| 106 |

+

def show_results(img, xywh, conf, landmarks, class_num):

|

| 107 |

+

h,w,c = img.shape

|

| 108 |

+

tl = 1 or round(0.002 * (h + w) / 2) + 1 # line/font thickness

|

| 109 |

+

x1 = int(xywh[0] * w - 0.5 * xywh[2] * w)

|

| 110 |

+

y1 = int(xywh[1] * h - 0.5 * xywh[3] * h)

|

| 111 |

+

x2 = int(xywh[0] * w + 0.5 * xywh[2] * w)

|

| 112 |

+

y2 = int(xywh[1] * h + 0.5 * xywh[3] * h)

|

| 113 |

+

cv2.rectangle(img, (x1,y1), (x2, y2), (0,255,0), thickness=tl, lineType=cv2.LINE_AA)

|

| 114 |

+

|

| 115 |

+

clors = [(255,0,0),(0,255,0),(0,0,255),(255,255,0),(0,255,255)]

|

| 116 |

+

|

| 117 |

+

for i in range(5):

|

| 118 |

+

point_x = int(landmarks[2 * i] * w)

|

| 119 |

+

point_y = int(landmarks[2 * i + 1] * h)

|

| 120 |

+

cv2.circle(img, (point_x, point_y), tl+1, clors[i], -1)

|

| 121 |

+

|

| 122 |

+

tf = max(tl - 1, 1) # font thickness

|

| 123 |

+

label = str(conf)[:5]

|

| 124 |

+

cv2.putText(img, label, (x1, y1 - 2), 0, tl / 3, [225, 255, 255], thickness=tf, lineType=cv2.LINE_AA)

|

| 125 |

+

return img

|

| 126 |

+

|

| 127 |

+

|

| 128 |

+

def make_divisible(x, divisor):

|

| 129 |

+

# Returns x evenly divisible by divisor

|

| 130 |

+

return (x // divisor) * divisor

|

| 131 |

+

|

| 132 |

+

|

| 133 |

+

def non_max_suppression_face(prediction, conf_thres=0.5, iou_thres=0.45, classes=None, agnostic=False, labels=()):

|

| 134 |

+

"""Performs Non-Maximum Suppression (NMS) on inference results

|

| 135 |

+

Returns:

|

| 136 |

+

detections with shape: nx6 (x1, y1, x2, y2, conf, cls)

|

| 137 |

+

"""

|

| 138 |

+

|

| 139 |

+

nc = prediction.shape[2] - 15 # number of classes

|

| 140 |

+