Commit 2995161

Parent(s): f007fb2

fix oauth behavior locally

- README.md +60 -27
- app.py +3 -7
- assets/ui.png +0 -0
- demo.py +0 -61
- pdm.lock +127 -2
- pyproject.toml +1 -1
- src/distilabel_dataset_generator/__init__.py +26 -0
- src/distilabel_dataset_generator/apps/base.py +3 -2
- src/distilabel_dataset_generator/pipelines/base.py +1 -1
- src/distilabel_dataset_generator/utils.py +8 -19

README.md

CHANGED

@@ -18,47 +18,80 @@ hf_oauth_scopes:
 - inference-api
 ---
 
-
+<h1 align="center">
+<br>
+🧬 Synthetic Data Generator
+<br>
+</h1>
+<h3 align="center">Build datasets using natural language</h2>
+
+
+
+<p align="center">
+<a href="https://pypi.org/project/synthetic-dataset-generator/">
+<img alt="CI" src="https://img.shields.io/pypi/v/synthetic-dataset-generator.svg?style=flat-round&logo=pypi&logoColor=white">
+</a>
+<a href="https://pepy.tech/project/synthetic-dataset-generator">
+<img alt="CI" src="https://static.pepy.tech/personalized-badge/argilla?period=month&units=international_system&left_color=grey&right_color=blue&left_text=pypi%20downloads/month">
+</a>
+<a href="https://huggingface.co/spaces/argilla/synthetic-data-generator?duplicate=true">
+<img src="https://huggingface.co/datasets/huggingface/badges/raw/main/duplicate-this-space-sm.svg"/>
+</a>
+</p>
+
+<p align="center">
+<a href="https://twitter.com/argilla_io">
+<img src="https://img.shields.io/badge/twitter-black?logo=x"/>
+</a>
+<a href="https://www.linkedin.com/company/argilla-io">
+<img src="https://img.shields.io/badge/linkedin-blue?logo=linkedin"/>
+</a>
+<a href="http://hf.co/join/discord">
+<img src="https://img.shields.io/badge/Discord-7289DA?&logo=discord&logoColor=white"/>
+</a>
+</p>
+
+## Introduction
+
+Synthetic Data Generator is a tool that allows you to create high-quality datasets for training and fine-tuning language models. It leverages the power of distilabel and LLMs to generate synthetic data tailored to your specific needs.
+
+Supported Tasks:
+
+- Text Classification
+- Supervised Fine-Tuning
+- Judging and rationale evaluation
 
-
-<div class="title-container">
-<h1 style="margin: 0; font-size: 2em;">🧬 Synthetic Data Generator</h1>
-<p style="margin: 10px 0 0 0; color: #666; font-size: 1.1em;">Build datasets using natural language</p>
-</div>
-</div>
-<br>
-
-This repository contains the code for the [free Synthetic Data Generator app](https://huggingface.co/spaces/argilla/synthetic-data-generator), which is hosted on the Hugging Face Hub.
+This tool simplifies the process of creating custom datasets, enabling you to:
 
-
+- Describe the characteristics of your desired application
+- Iterate on sample datasets
+- Produce full-scale datasets
+- Push your datasets to the [Hugging Face Hub](https://huggingface.co/datasets?other=datacraft) and/or Argilla
 
-
+By using the Synthetic Data Generator, you can rapidly prototype and create datasets for, accelerating your AI development process.
 
-
+## Installation
 
-
+You can simply install the package with:
 
-
-
-
-- Produce full-scale datasets with customizable parameters
-- Push your generated datasets directly to the Hugging Face Hub
+```bash
+pip install synthetic-dataset-generator
+```
 
-
+### Environment Variables
 
-
+- `HF_TOKEN`: Your Hugging Face token to push your datasets to the Hugging Face Hub and run Inference Endpoints Requests. You can get one [here](https://huggingface.co/settings/tokens/new?ownUserPermissions=repo.content.read&ownUserPermissions=repo.write&globalPermissions=inference.serverless.write&tokenType=fineGrained).
+- `ARGILLA_API_KEY`: Your Argilla API key to push your datasets to Argilla.
+- `ARGILLA_API_URL`: Your Argilla API URL to push your datasets to Argilla.
 
-
+## Quick Start
 
 ```bash
-pip install -r requirements.txt
 python app.py
 ```
 
-
-
-## Do you need more control?
+## Custom synthetic data generation?
 
-Each pipeline is based on
+Each pipeline is based on distilabel, so you can easily change the LLM or the pipeline steps.
 
 Check out the [distilabel library](https://github.com/argilla-io/distilabel) for more information.

app.py

CHANGED

@@ -1,12 +1,10 @@
-import gradio as gr
-
 from src.distilabel_dataset_generator._tabbedinterface import TabbedInterface
+from src.distilabel_dataset_generator.apps.eval import app as eval_app
 from src.distilabel_dataset_generator.apps.faq import app as faq_app
 from src.distilabel_dataset_generator.apps.sft import app as sft_app
-from src.distilabel_dataset_generator.apps.eval import app as eval_app
 from src.distilabel_dataset_generator.apps.textcat import app as textcat_app
 
-theme =
+theme = "argilla/argilla-theme"
 
 css = """
 button[role="tab"][aria-selected="true"] { border: 0; background: var(--neutral-800); color: white; border-top-right-radius: var(--radius-md); border-top-left-radius: var(--radius-md)}
@@ -29,9 +27,7 @@ demo = TabbedInterface(
     [textcat_app, sft_app, eval_app, faq_app],
     ["Text Classification", "Supervised Fine-Tuning", "Evaluation", "FAQ"],
     css=css,
-    title=""
-    <h1>Synthetic Data Generator</h1>
-    """,
+    title="Synthetic Data Generator",
     head="Synthetic Data Generator",
     theme=theme,
 )

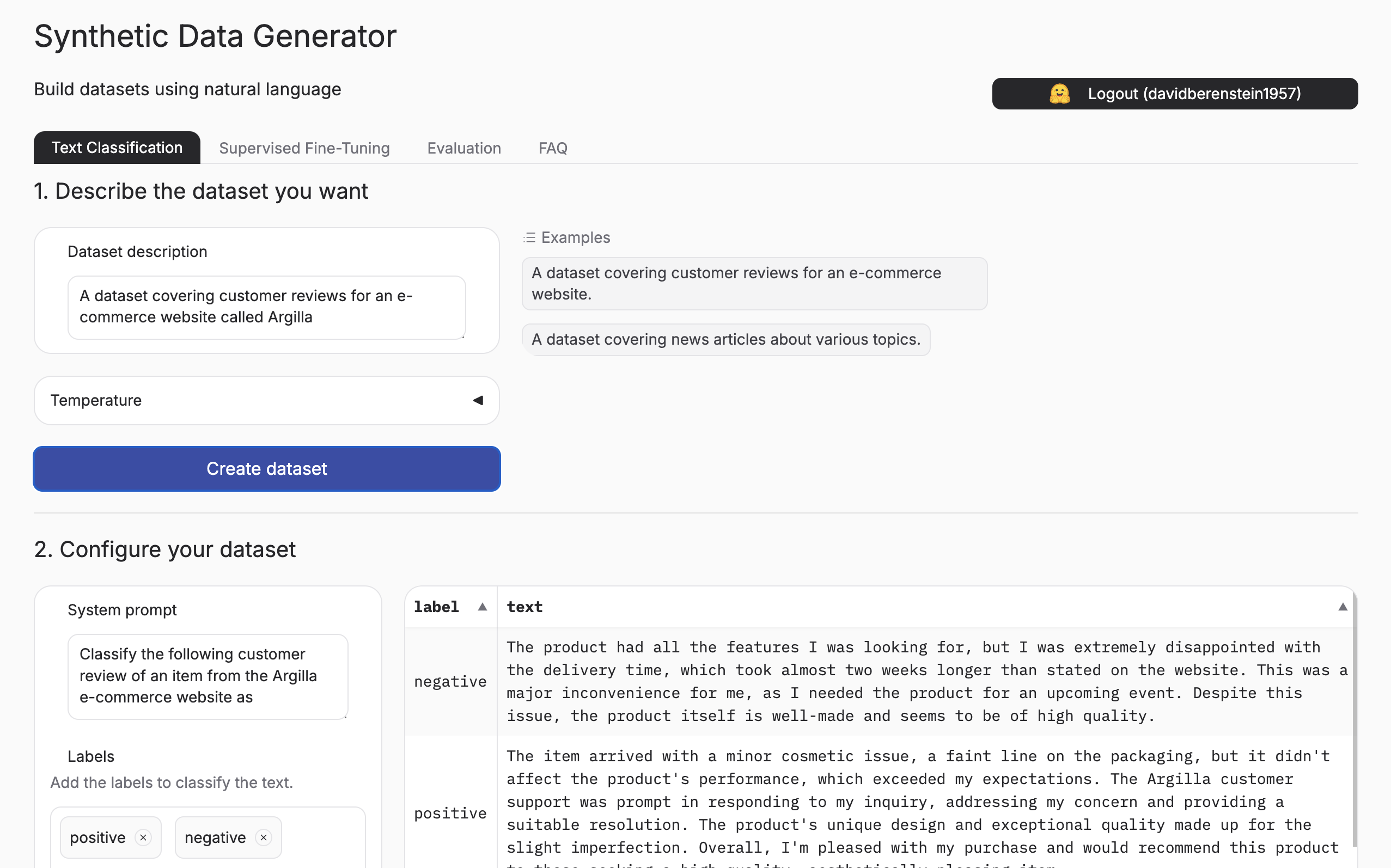

assets/ui.png

ADDED

|

demo.py

DELETED

@@ -1,61 +0,0 @@
-import gradio as gr
-
-from src.distilabel_dataset_generator._tabbedinterface import TabbedInterface
-from src.distilabel_dataset_generator.apps.eval import app as eval_app
-from src.distilabel_dataset_generator.apps.faq import app as faq_app
-from src.distilabel_dataset_generator.apps.sft import app as sft_app
-from src.distilabel_dataset_generator.apps.textcat import app as textcat_app
-
-theme = gr.themes.Monochrome(
-    spacing_size="md",
-    font=[gr.themes.GoogleFont("Inter"), "ui-sans-serif", "system-ui", "sans-serif"],
-)
-
-css = """
-.main_ui_logged_out{opacity: 0.3; pointer-events: none}
-.tabitem{border: 0px}
-.group_padding{padding: .55em}
-#space_model .wrap > label:last-child{opacity: 0.3; pointer-events:none}
-#system_prompt_examples {
-    color: black;
-}
-@media (prefers-color-scheme: dark) {
-    #system_prompt_examples {
-        color: white;
-        background-color: black;
-    }
-}
-button[role="tab"].selected,
-button[role="tab"][aria-selected="true"],
-button[role="tab"][data-tab-id][aria-selected="true"] {
-    background-color: #000000;
-    color: white;
-    border: none;
-    font-size: 16px;
-    font-weight: bold;
-    box-shadow: 0 4px 8px rgba(0, 0, 0, 0.2);
-    transition: background-color 0.3s ease, color 0.3s ease;
-}
-.gallery {
-    color: black !important;
-}
-.flex-shrink-0.truncate.px-1 {
-    color: black !important;
-}
-"""
-
-demo = TabbedInterface(
-    [textcat_app, sft_app, eval_app, faq_app],
-    ["Text Classification", "Supervised Fine-Tuning", "Evaluation", "FAQ"],
-    css=css,
-    title="""
-    <h1>Synthetic Data Generator</h1>
-    <h3>Build datasets using natural language</h3>
-    """,
-    head="Synthetic Data Generator",
-    theme=theme,
-)
-
-
-if __name__ == "__main__":
-    demo.launch()

pdm.lock

CHANGED

@@ -5,7 +5,7 @@
 groups = ["default"]
 strategy = ["inherit_metadata"]
 lock_version = "4.5.0"
-content_hash = "sha256:
+content_hash = "sha256:87e2a6c0c74be28ed570492c4401d430ae5ce4dfad5f015cd3e6b476f9c14f2f"
 
 [[metadata.targets]]
 requires_python = ">=3.10,<3.13"
@@ -564,7 +564,7 @@ files = [
 [[package]]
 name = "distilabel"
 version = "1.4.1"
-extras = ["argilla", "hf-inference-endpoints", "outlines"]
+extras = ["argilla", "hf-inference-endpoints", "instructor", "outlines"]
 requires_python = ">=3.9"
 summary = "Distilabel is an AI Feedback (AIF) framework for building datasets with and for LLMs."
 groups = ["default"]
@@ -572,6 +572,7 @@ dependencies = [
     "argilla>=2.0.0",
     "distilabel==1.4.1",
     "huggingface-hub>=0.22.0",
+    "instructor>=1.2.3",
     "ipython",
     "numba>=0.54.0",
     "outlines>=0.0.40",
@@ -581,6 +582,28 @@ files = [
     {file = "distilabel-1.4.1.tar.gz", hash = "sha256:0c373be234e8f2982ec7f940d9a95585b15306b6ab5315f5a6a45214d8f34006"},
 ]
 
+[[package]]
+name = "distro"
+version = "1.9.0"
+requires_python = ">=3.6"
+summary = "Distro - an OS platform information API"
+groups = ["default"]
+files = [
+    {file = "distro-1.9.0-py3-none-any.whl", hash = "sha256:7bffd925d65168f85027d8da9af6bddab658135b840670a223589bc0c8ef02b2"},
+    {file = "distro-1.9.0.tar.gz", hash = "sha256:2fa77c6fd8940f116ee1d6b94a2f90b13b5ea8d019b98bc8bafdcabcdd9bdbed"},
+]
+
+[[package]]
+name = "docstring-parser"
+version = "0.16"
+requires_python = ">=3.6,<4.0"
+summary = "Parse Python docstrings in reST, Google and Numpydoc format"
+groups = ["default"]
+files = [
+    {file = "docstring_parser-0.16-py3-none-any.whl", hash = "sha256:bf0a1387354d3691d102edef7ec124f219ef639982d096e26e3b60aeffa90637"},
+    {file = "docstring_parser-0.16.tar.gz", hash = "sha256:538beabd0af1e2db0146b6bd3caa526c35a34d61af9fd2887f3a8a27a739aa6e"},
+]
+
 [[package]]
 name = "exceptiongroup"
 version = "1.2.2"
@@ -942,6 +965,30 @@ files = [
     {file = "importlib_resources-6.4.5.tar.gz", hash = "sha256:980862a1d16c9e147a59603677fa2aa5fd82b87f223b6cb870695bcfce830065"},
 ]
 
+[[package]]
+name = "instructor"
+version = "1.7.0"
+requires_python = "<4.0,>=3.9"
+summary = "structured outputs for llm"
+groups = ["default"]
+dependencies = [
+    "aiohttp<4.0.0,>=3.9.1",
+    "docstring-parser<0.17,>=0.16",
+    "jinja2<4.0.0,>=3.1.4",
+    "jiter<0.7,>=0.6.1",
+    "openai<2.0.0,>=1.52.0",
+    "pydantic-core<3.0.0,>=2.18.0",
+    "pydantic<3.0.0,>=2.8.0",
+    "requests<3.0.0,>=2.32.3",
+    "rich<14.0.0,>=13.7.0",
+    "tenacity<10.0.0,>=9.0.0",
+    "typer<1.0.0,>=0.9.0",
+]
+files = [
+    {file = "instructor-1.7.0-py3-none-any.whl", hash = "sha256:0bff965d71a5398aed9d3f728e07ffb7b5050569c81f306c0e5a8d022071fe29"},
+    {file = "instructor-1.7.0.tar.gz", hash = "sha256:51b308ae9c5e4d56096514be785ac4f28f710c91bed80af74412fc21593431b3"},
+]
+
 [[package]]
 name = "interegular"
 version = "0.3.3"
@@ -1015,6 +1062,52 @@ files = [
     {file = "jinja2-3.1.4.tar.gz", hash = "sha256:4a3aee7acbbe7303aede8e9648d13b8bf88a429282aa6122a993f0ac800cb369"},
 ]
 
+[[package]]
+name = "jiter"
+version = "0.6.1"
+requires_python = ">=3.8"
+summary = "Fast iterable JSON parser."
+groups = ["default"]
+files = [
+    {file = "jiter-0.6.1-cp310-cp310-macosx_10_12_x86_64.whl", hash = "sha256:d08510593cb57296851080018006dfc394070178d238b767b1879dc1013b106c"},
+    {file = "jiter-0.6.1-cp310-cp310-macosx_11_0_arm64.whl", hash = "sha256:adef59d5e2394ebbad13b7ed5e0306cceb1df92e2de688824232a91588e77aa7"},
+    {file = "jiter-0.6.1-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl", hash = "sha256:b3e02f7a27f2bcc15b7d455c9df05df8ffffcc596a2a541eeda9a3110326e7a3"},
+    {file = "jiter-0.6.1-cp310-cp310-manylinux_2_17_armv7l.manylinux2014_armv7l.whl", hash = "sha256:ed69a7971d67b08f152c17c638f0e8c2aa207e9dd3a5fcd3cba294d39b5a8d2d"},
+    {file = "jiter-0.6.1-cp310-cp310-manylinux_2_17_ppc64le.manylinux2014_ppc64le.whl", hash = "sha256:b2019d966e98f7c6df24b3b8363998575f47d26471bfb14aade37630fae836a1"},
+    {file = "jiter-0.6.1-cp310-cp310-manylinux_2_17_s390x.manylinux2014_s390x.whl", hash = "sha256:36c0b51a285b68311e207a76c385650322734c8717d16c2eb8af75c9d69506e7"},
+    {file = "jiter-0.6.1-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:220e0963b4fb507c525c8f58cde3da6b1be0bfddb7ffd6798fb8f2531226cdb1"},
+    {file = "jiter-0.6.1-cp310-cp310-manylinux_2_5_i686.manylinux1_i686.whl", hash = "sha256:aa25c7a9bf7875a141182b9c95aed487add635da01942ef7ca726e42a0c09058"},
+    {file = "jiter-0.6.1-cp310-cp310-musllinux_1_1_aarch64.whl", hash = "sha256:e90552109ca8ccd07f47ca99c8a1509ced93920d271bb81780a973279974c5ab"},
+    {file = "jiter-0.6.1-cp310-cp310-musllinux_1_1_x86_64.whl", hash = "sha256:67723a011964971864e0b484b0ecfee6a14de1533cff7ffd71189e92103b38a8"},
+    {file = "jiter-0.6.1-cp310-none-win32.whl", hash = "sha256:33af2b7d2bf310fdfec2da0177eab2fedab8679d1538d5b86a633ebfbbac4edd"},
+    {file = "jiter-0.6.1-cp310-none-win_amd64.whl", hash = "sha256:7cea41c4c673353799906d940eee8f2d8fd1d9561d734aa921ae0f75cb9732f4"},
+    {file = "jiter-0.6.1-cp311-cp311-macosx_10_12_x86_64.whl", hash = "sha256:b03c24e7da7e75b170c7b2b172d9c5e463aa4b5c95696a368d52c295b3f6847f"},
+    {file = "jiter-0.6.1-cp311-cp311-macosx_11_0_arm64.whl", hash = "sha256:47fee1be677b25d0ef79d687e238dc6ac91a8e553e1a68d0839f38c69e0ee491"},
+    {file = "jiter-0.6.1-cp311-cp311-manylinux_2_17_aarch64.manylinux2014_aarch64.whl", hash = "sha256:25f0d2f6e01a8a0fb0eab6d0e469058dab2be46ff3139ed2d1543475b5a1d8e7"},
+    {file = "jiter-0.6.1-cp311-cp311-manylinux_2_17_armv7l.manylinux2014_armv7l.whl", hash = "sha256:0b809e39e342c346df454b29bfcc7bca3d957f5d7b60e33dae42b0e5ec13e027"},
+    {file = "jiter-0.6.1-cp311-cp311-manylinux_2_17_ppc64le.manylinux2014_ppc64le.whl", hash = "sha256:e9ac7c2f092f231f5620bef23ce2e530bd218fc046098747cc390b21b8738a7a"},
+    {file = "jiter-0.6.1-cp311-cp311-manylinux_2_17_s390x.manylinux2014_s390x.whl", hash = "sha256:e51a2d80d5fe0ffb10ed2c82b6004458be4a3f2b9c7d09ed85baa2fbf033f54b"},
+    {file = "jiter-0.6.1-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:3343d4706a2b7140e8bd49b6c8b0a82abf9194b3f0f5925a78fc69359f8fc33c"},
+    {file = "jiter-0.6.1-cp311-cp311-manylinux_2_5_i686.manylinux1_i686.whl", hash = "sha256:82521000d18c71e41c96960cb36e915a357bc83d63a8bed63154b89d95d05ad1"},
+    {file = "jiter-0.6.1-cp311-cp311-musllinux_1_1_aarch64.whl", hash = "sha256:3c843e7c1633470708a3987e8ce617ee2979ee18542d6eb25ae92861af3f1d62"},
+    {file = "jiter-0.6.1-cp311-cp311-musllinux_1_1_x86_64.whl", hash = "sha256:a2e861658c3fe849efc39b06ebb98d042e4a4c51a8d7d1c3ddc3b1ea091d0784"},
+    {file = "jiter-0.6.1-cp311-none-win32.whl", hash = "sha256:7d72fc86474862c9c6d1f87b921b70c362f2b7e8b2e3c798bb7d58e419a6bc0f"},
+    {file = "jiter-0.6.1-cp311-none-win_amd64.whl", hash = "sha256:3e36a320634f33a07794bb15b8da995dccb94f944d298c8cfe2bd99b1b8a574a"},
+    {file = "jiter-0.6.1-cp312-cp312-macosx_10_12_x86_64.whl", hash = "sha256:1fad93654d5a7dcce0809aff66e883c98e2618b86656aeb2129db2cd6f26f867"},
+    {file = "jiter-0.6.1-cp312-cp312-macosx_11_0_arm64.whl", hash = "sha256:4e6e340e8cd92edab7f6a3a904dbbc8137e7f4b347c49a27da9814015cc0420c"},
+    {file = "jiter-0.6.1-cp312-cp312-manylinux_2_17_aarch64.manylinux2014_aarch64.whl", hash = "sha256:691352e5653af84ed71763c3c427cff05e4d658c508172e01e9c956dfe004aba"},
+    {file = "jiter-0.6.1-cp312-cp312-manylinux_2_17_armv7l.manylinux2014_armv7l.whl", hash = "sha256:defee3949313c1f5b55e18be45089970cdb936eb2a0063f5020c4185db1b63c9"},
+    {file = "jiter-0.6.1-cp312-cp312-manylinux_2_17_ppc64le.manylinux2014_ppc64le.whl", hash = "sha256:26d2bdd5da097e624081c6b5d416d3ee73e5b13f1703bcdadbb1881f0caa1933"},
+    {file = "jiter-0.6.1-cp312-cp312-manylinux_2_17_s390x.manylinux2014_s390x.whl", hash = "sha256:18aa9d1626b61c0734b973ed7088f8a3d690d0b7f5384a5270cd04f4d9f26c86"},
+    {file = "jiter-0.6.1-cp312-cp312-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:7a3567c8228afa5ddcce950631c6b17397ed178003dc9ee7e567c4c4dcae9fa0"},
+    {file = "jiter-0.6.1-cp312-cp312-manylinux_2_5_i686.manylinux1_i686.whl", hash = "sha256:e5c0507131c922defe3f04c527d6838932fcdfd69facebafd7d3574fa3395314"},
+    {file = "jiter-0.6.1-cp312-cp312-musllinux_1_1_aarch64.whl", hash = "sha256:540fcb224d7dc1bcf82f90f2ffb652df96f2851c031adca3c8741cb91877143b"},
+    {file = "jiter-0.6.1-cp312-cp312-musllinux_1_1_x86_64.whl", hash = "sha256:e7b75436d4fa2032b2530ad989e4cb0ca74c655975e3ff49f91a1a3d7f4e1df2"},
+    {file = "jiter-0.6.1-cp312-none-win32.whl", hash = "sha256:883d2ced7c21bf06874fdeecab15014c1c6d82216765ca6deef08e335fa719e0"},
+    {file = "jiter-0.6.1-cp312-none-win_amd64.whl", hash = "sha256:91e63273563401aadc6c52cca64a7921c50b29372441adc104127b910e98a5b6"},
+    {file = "jiter-0.6.1.tar.gz", hash = "sha256:e19cd21221fc139fb032e4112986656cb2739e9fe6d84c13956ab30ccc7d4449"},
+]
+
 [[package]]
 name = "joblib"
 version = "1.4.2"
@@ -1638,6 +1731,27 @@ files = [
     {file = "nvidia_nvtx_cu12-12.4.127-py3-none-win_amd64.whl", hash = "sha256:641dccaaa1139f3ffb0d3164b4b84f9d253397e38246a4f2f36728b48566d485"},
 ]
 
+[[package]]
+name = "openai"
+version = "1.56.0"
+requires_python = ">=3.8"
+summary = "The official Python library for the openai API"
+groups = ["default"]
+dependencies = [
+    "anyio<5,>=3.5.0",
+    "distro<2,>=1.7.0",
+    "httpx<1,>=0.23.0",
+    "jiter<1,>=0.4.0",
+    "pydantic<3,>=1.9.0",
+    "sniffio",
+    "tqdm>4",
+    "typing-extensions<5,>=4.11",
+]
+files = [
+    {file = "openai-1.56.0-py3-none-any.whl", hash = "sha256:0751a6e139a09fca2e9cbbe8a62bfdab901b5865249d2555d005decf966ef9c3"},
+    {file = "openai-1.56.0.tar.gz", hash = "sha256:f7fa159c8e18e7f9a8d71ff4b8052452ae70a4edc6b76a6e97eda00d5364923f"},
+]
+
 [[package]]
 name = "orjson"
 version = "3.10.11"
@@ -2680,6 +2794,17 @@ files = [
     {file = "tblib-3.0.0.tar.gz", hash = "sha256:93622790a0a29e04f0346458face1e144dc4d32f493714c6c3dff82a4adb77e6"},
 ]
 
+[[package]]
+name = "tenacity"
+version = "9.0.0"
+requires_python = ">=3.8"
+summary = "Retry code until it succeeds"
+groups = ["default"]
+files = [
+    {file = "tenacity-9.0.0-py3-none-any.whl", hash = "sha256:93de0c98785b27fcf659856aa9f54bfbd399e29969b0621bc7f762bd441b4539"},
+    {file = "tenacity-9.0.0.tar.gz", hash = "sha256:807f37ca97d62aa361264d497b0e31e92b8027044942bfa756160d908320d73b"},
+]
+
 [[package]]
 name = "threadpoolctl"
 version = "3.5.0"

pyproject.toml

CHANGED

@@ -1,7 +1,7 @@
 [project]
 name = "distilabel-dataset-generator"
 version = "0.1.0"
-description = "
+description = "Build datasets using natural language"
 authors = [
     {name = "davidberenstein1957", email = "[email protected]"},
 ]

src/distilabel_dataset_generator/__init__.py

CHANGED

@@ -1,6 +1,9 @@
+import os
+import warnings
 from pathlib import Path
 from typing import Optional, Union
 
+import argilla as rg
 import distilabel
 import distilabel.distiset
 from distilabel.utils.card.dataset_card import (
@@ -9,6 +12,29 @@ from distilabel.utils.card.dataset_card import (
 )
 from huggingface_hub import DatasetCardData, HfApi, upload_file
 
+HF_TOKENS = [os.getenv("HF_TOKEN")] + [os.getenv(f"HF_TOKEN_{i}") for i in range(1, 10)]
+HF_TOKENS = [token for token in HF_TOKENS if token]
+
+if len(HF_TOKENS) == 0:
+    raise ValueError(
+        "HF_TOKEN is not set. Ensure you have set the HF_TOKEN environment variable that has access to the Hugging Face Hub repositories and Inference Endpoints."
+    )
+
+ARGILLA_API_URL = os.getenv("ARGILLA_API_URL")
+ARGILLA_API_KEY = os.getenv("ARGILLA_API_KEY")
+if ARGILLA_API_URL is None or ARGILLA_API_KEY is None:
+    ARGILLA_API_URL = os.getenv("ARGILLA_API_URL_SDG_REVIEWER")
+    ARGILLA_API_KEY = os.getenv("ARGILLA_API_KEY_SDG_REVIEWER")
+
+if ARGILLA_API_URL is None or ARGILLA_API_KEY is None:
+    warnings.warn("ARGILLA_API_URL or ARGILLA_API_KEY is not set")
+    argilla_client = None
+else:
+    argilla_client = rg.Argilla(
+        api_url=ARGILLA_API_URL,
+        api_key=ARGILLA_API_KEY,
+    )
+
 
 class CustomDistisetWithAdditionalTag(distilabel.distiset.Distiset):
     def _generate_card(
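The `__init__.py` hunk moves credential handling to the package root: it gathers `HF_TOKEN` plus `HF_TOKEN_1` through `HF_TOKEN_9`, drops unset or empty values, fails fast when none remain, and falls back to the `*_SDG_REVIEWER` pair when either Argilla variable is missing. The sketch below restates that resolution order as a pure function so it can be exercised without `argilla` or a real environment. `resolve_credentials` is a hypothetical name, not part of the package, and it takes a plain mapping instead of reading `os.environ` itself.

```python
from typing import List, Mapping, Optional, Tuple


def resolve_credentials(
    env: Mapping[str, str],
) -> Tuple[List[str], Optional[str], Optional[str]]:
    """Hypothetical helper mirroring the env-var logic added to __init__.py.

    Returns (hf_tokens, argilla_api_url, argilla_api_key).
    """
    # HF_TOKEN first, then HF_TOKEN_1 .. HF_TOKEN_9; unset or empty values are dropped.
    candidates = [env.get("HF_TOKEN")] + [env.get(f"HF_TOKEN_{i}") for i in range(1, 10)]
    hf_tokens = [token for token in candidates if token]
    if not hf_tokens:
        raise ValueError("HF_TOKEN is not set.")

    # Primary Argilla pair; if either value is missing, both come from the reviewer pair.
    api_url = env.get("ARGILLA_API_URL")
    api_key = env.get("ARGILLA_API_KEY")
    if api_url is None or api_key is None:
        api_url = env.get("ARGILLA_API_URL_SDG_REVIEWER")
        api_key = env.get("ARGILLA_API_KEY_SDG_REVIEWER")
    return hf_tokens, api_url, api_key


# Example: two usable tokens and no Argilla configuration at all.
tokens, url, key = resolve_credentials({"HF_TOKEN": "hf_a", "HF_TOKEN_2": "", "HF_TOKEN_3": "hf_c"})
print(tokens, url, key)  # ['hf_a', 'hf_c'] None None
```

`os.environ` is itself a mapping, so it can be passed directly. As in the diff, a missing URL *or* key replaces both values with the reviewer pair, so a half-configured primary pair is discarded rather than mixed with the fallback.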

src/distilabel_dataset_generator/apps/base.py

CHANGED

@@ -195,7 +195,7 @@ def validate_argilla_user_workspace_dataset(
     return ""


-def get_org_dropdown(oauth_token: OAuthToken
+def get_org_dropdown(oauth_token: Union[OAuthToken, None]):
     orgs = list_orgs(oauth_token)
     return gr.Dropdown(
         label="Organization",
@@ -488,7 +488,7 @@ def show_success_message(org_name, repo_name) -> gr.Markdown:
                 </strong>
             </p>
             <p style="margin-top: 0.5em;">
-                The generated dataset is in the right format for fine-tuning with TRL, AutoTrain, or other frameworks. Your dataset is now available at:
+                The generated dataset is in the right format for fine-tuning with TRL, AutoTrain, or other frameworks. Your dataset is now available at:
                 <a href="https://huggingface.co/datasets/{org_name}/{repo_name}" target="_blank" style="color: #1565c0; text-decoration: none;">
                     https://huggingface.co/datasets/{org_name}/{repo_name}
                 </a>
@@ -503,5 +503,6 @@ def show_success_message(org_name, repo_name) -> gr.Markdown:
         visible=True,
     )

+
 def hide_success_message() -> gr.Markdown:
     return gr.Markdown(value="")
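This commit ("fix oauth behavior locally") changes the callback signatures from `OAuthToken` to `Union[OAuthToken, None]`. As we understand it, Gradio inspects these type hints to decide whether a callback may receive `None` when no user is logged in, which is the usual situation when the app runs locally without Hugging Face OAuth. The sketch below does not import Gradio: `OAuthToken`, `accepts_none` and `call_with_token` are hypothetical stand-ins that only illustrate how an annotation-driven injector can tell the two signatures apart.

```python
import inspect
import typing
from dataclasses import dataclass
from typing import Optional, Union


@dataclass
class OAuthToken:
    """Hypothetical stand-in for Gradio's OAuthToken: only carries the token string."""

    token: str


def accepts_none(annotation) -> bool:
    """Return True if the annotation is Union[..., None], i.e. Optional[...]."""
    return typing.get_origin(annotation) is Union and type(None) in typing.get_args(annotation)


def call_with_token(fn, token: Optional[OAuthToken]):
    """Hypothetical injector: pass `token` as the first argument of `fn`,
    refusing to pass None unless that parameter's type hint allows it."""
    first_param = next(iter(inspect.signature(fn).parameters))
    annotation = typing.get_type_hints(fn).get(first_param)
    if token is None and not accepts_none(annotation):
        raise RuntimeError(f"{fn.__name__} requires a logged-in user")
    return fn(token)


def get_token_old(oauth_token: OAuthToken):
    # Old-style signature: the hint says a token is always present.
    return oauth_token.token if oauth_token else ""


def get_token_new(oauth_token: Union[OAuthToken, None]):
    # New-style signature, as in the commit: None is an accepted value.
    return oauth_token.token if oauth_token else ""
```

With the old annotation the hypothetical injector refuses to call the function when the token is `None`; with `Union[OAuthToken, None]` the function runs and falls back to `""`, which matches how `get_token` behaves in the diff. `accepts_none` only recognises `typing.Union`/`Optional`; a PEP 604 hint such as `OAuthToken | None` would need an extra check for `types.UnionType`. The example functions also deliberately have no `= None` default, because before Python 3.11 `typing.get_type_hints` automatically wrapped such parameters in `Optional`.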

src/distilabel_dataset_generator/pipelines/base.py

CHANGED

@@ -1,4 +1,4 @@
-from src.distilabel_dataset_generator
+from src.distilabel_dataset_generator import HF_TOKENS

 DEFAULT_BATCH_SIZE = 5
 TOKEN_INDEX = 0
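`pipelines/base.py` now imports `HF_TOKENS` from the package root, and this commit removes the module-level definition of `HF_TOKENS` from `utils.py`. The two removed `utils.py` lines show how the list is built; a minimal sketch of the same logic as a function over a mapping (the name `collect_hf_tokens` is hypothetical) looks like this:

```python
from typing import List, Mapping


def collect_hf_tokens(env: Mapping[str, str]) -> List[str]:
    """Hypothetical helper reproducing the HF_TOKENS list comprehension."""
    # HF_TOKEN first, then HF_TOKEN_1 ... HF_TOKEN_9, in that order.
    candidates = [env.get("HF_TOKEN")] + [env.get(f"HF_TOKEN_{i}") for i in range(1, 10)]
    # Drop unset (None) and empty-string entries.
    return [token for token in candidates if token]
```

Passing `os.environ` reproduces the original behaviour. Only `HF_TOKEN` and `HF_TOKEN_1` through `HF_TOKEN_9` are read, because `range(1, 10)` stops at 9, and unset or empty variables are skipped.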

src/distilabel_dataset_generator/utils.py

CHANGED

@@ -1,5 +1,4 @@
 import json
-import os
 from typing import List, Optional, Union

 import argilla as rg
@@ -16,10 +15,10 @@ from gradio.oauth import (
 from huggingface_hub import whoami
 from jinja2 import Environment, meta

+from src.distilabel_dataset_generator import argilla_client
+
 _LOGGED_OUT_CSS = ".main_ui_logged_out{opacity: 0.3; pointer-events: none}"

-HF_TOKENS = [os.getenv("HF_TOKEN")] + [os.getenv(f"HF_TOKEN_{i}") for i in range(1, 10)]
-HF_TOKENS = [token for token in HF_TOKENS if token]

 _CHECK_IF_SPACE_IS_SET = (
     all(
@@ -48,7 +47,7 @@ def get_duplicate_button():
     return gr.DuplicateButton(size="lg")


-def list_orgs(oauth_token: OAuthToken = None):
+def list_orgs(oauth_token: Union[OAuthToken, None] = None):
     try:
         if oauth_token is None:
             return []
@@ -72,7 +71,7 @@ def list_orgs(oauth_token: OAuthToken = None):
     return organizations


-def get_org_dropdown(oauth_token: OAuthToken = None):
+def get_org_dropdown(oauth_token: Union[OAuthToken, None] = None):
     if oauth_token is not None:
         orgs = list_orgs(oauth_token)
     else:
@@ -86,14 +85,14 @@ def get_org_dropdown(oauth_token: OAuthToken = None):
     )


-def get_token(oauth_token: OAuthToken
+def get_token(oauth_token: Union[OAuthToken, None]):
     if oauth_token:
         return oauth_token.token
     else:
         return ""


-def swap_visibility(oauth_token:
+def swap_visibility(oauth_token: Union[OAuthToken, None]):
     if oauth_token:
         return gr.update(elem_classes=["main_ui_logged_in"])
     else:
@@ -123,18 +122,8 @@ def get_base_app():


 def get_argilla_client() -> Union[rg.Argilla, None]:
-
-
-        api_key = os.getenv("ARGILLA_API_KEY_SDG_REVIEWER")
-        if api_url is None or api_key is None:
-            api_url = os.getenv("ARGILLA_API_URL")
-            api_key = os.getenv("ARGILLA_API_KEY")
-        return rg.Argilla(
-            api_url=api_url,
-            api_key=api_key,
-        )
-    except Exception:
-        return None
+    return argilla_client
+

 def get_preprocess_labels(labels: Optional[List[str]]) -> List[str]:
     return list(set([label.lower().strip() for label in labels])) if labels else []
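The unchanged `get_preprocess_labels` helper at the end of the `utils.py` diff lowercases and strips each label and de-duplicates them through a `set`, so the order of the returned list is not guaranteed to follow the input. The sketch copies the helper and, for comparison, adds a hypothetical order-preserving variant based on `dict.fromkeys`; the variant is illustrative only and is not part of the commit.

```python
from typing import List, Optional


def get_preprocess_labels(labels: Optional[List[str]]) -> List[str]:
    # Copy of the helper in the diff: normalise each label, de-duplicate via a set.
    return list(set([label.lower().strip() for label in labels])) if labels else []


def get_preprocess_labels_ordered(labels: Optional[List[str]]) -> List[str]:
    # Hypothetical variant: same normalisation, but dict.fromkeys keeps first-seen order.
    return list(dict.fromkeys(label.lower().strip() for label in labels)) if labels else []
```

Both return `[]` for `None` or an empty list. Whether label order matters depends on how callers use the result, which this diff does not show.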