Chris Finlayson

committed on

Commit · ee9fa1c

1 Parent(s): b5dd388

initial commit

- .ipynb_checkpoints/NLP-checkpoint.ipynb +236 -0

- NLP.ipynb +0 -0

- app.py +172 -0

- graph.png +0 -0

- requirements.txt +4 -0

.ipynb_checkpoints/NLP-checkpoint.ipynb

ADDED


@@ -0,0 +1,236 @@


+{
+ "cells": [
+  {
+   "cell_type": "code",
+   "execution_count": null,
+   "id": "c49abf54-35c7-4b82-aa31-a155633c3327",
+   "metadata": {},
+   "outputs": [],
+   "source": []
+  },
+  {
+   "cell_type": "code",
+   "execution_count": null,
+   "id": "43644952-bca3-4060-af76-3d5a8357be06",
+   "metadata": {},
+   "outputs": [],
+   "source": [
+    "import re\n",
+    "import pandas as pd\n",
+    "import bs4\n",
+    "import requests\n",
+    "import spacy\n",
+    "from spacy import displacy\n",
+    "nlp = spacy.load('en_core_web_sm')\n",
+    "\n",
+    "from spacy.matcher import Matcher \n",
+    "from spacy.tokens import Span \n",
+    "\n",
+    "import networkx as nx\n",
+    "\n",
+    "import matplotlib.pyplot as plt\n",
+    "from tqdm import tqdm\n",
+    "\n",
+    "pd.set_option('display.max_colwidth', 200)\n",
+    "%matplotlib inline"
+   ]
+  },
+  {
+   "cell_type": "code",
+   "execution_count": null,
+   "id": "1b73f085-2b8b-4f48-b26c-2da5fb22c9f2",
+   "metadata": {},
+   "outputs": [],
+   "source": [
+    "# import wikipedia sentences\n",
+    "candidate_sentences = pd.read_csv(\"../input/wiki-sentences1/wiki_sentences_v2.csv\")\n",
+    "candidate_sentences.shape"
+   ]
+  },
+  {
+   "cell_type": "code",
+   "execution_count": null,
+   "id": "1bd9de52-e1bc-46a6-9f52-e90969ed9f0c",
+   "metadata": {},
+   "outputs": [],
+   "source": [
+    "def get_entities(sent):\n",
+    "  ## chunk 1\n",
+    "  ent1 = \"\"\n",
+    "  ent2 = \"\"\n",
+    "\n",
+    "  prv_tok_dep = \"\" # dependency tag of previous token in the sentence\n",
+    "  prv_tok_text = \"\" # previous token in the sentence\n",
+    "\n",
+    "  prefix = \"\"\n",
+    "  modifier = \"\"\n",
+    "\n",
+    "  #############################################################\n",
+    "  \n",
+    "  for tok in nlp(sent):\n",
+    "    ## chunk 2\n",
+    "    # if token is a punctuation mark then move on to the next token\n",
+    "    if tok.dep_ != \"punct\":\n",
+    "      # check: token is a compound word or not\n",
+    "      if tok.dep_ == \"compound\":\n",
+    "        prefix = tok.text\n",
+    "        # if the previous word was also a 'compound' then add the current word to it\n",
+    "        if prv_tok_dep == \"compound\":\n",
+    "          prefix = prv_tok_text + \" \"+ tok.text\n",
+    "      \n",
+    "      # check: token is a modifier or not\n",
+    "      if tok.dep_.endswith(\"mod\") == True:\n",
+    "        modifier = tok.text\n",
+    "        # if the previous word was also a 'compound' then add the current word to it\n",
+    "        if prv_tok_dep == \"compound\":\n",
+    "          modifier = prv_tok_text + \" \"+ tok.text\n",
+    "      \n",
+    "      ## chunk 3\n",
+    "      if \"subj\" in tok.dep_:\n",
+    "        ent1 = modifier +\" \"+ prefix + \" \"+ tok.text\n",
+    "        prefix = \"\"\n",
+    "        modifier = \"\"\n",
+    "        prv_tok_dep = \"\"\n",
+    "        prv_tok_text = \"\" \n",
+    "\n",
+    "      ## chunk 4\n",
+    "      if \"obj\" in tok.dep_:\n",
+    "        ent2 = modifier +\" \"+ prefix +\" \"+ tok.text\n",
+    "        \n",
+    "      ## chunk 5 \n",
+    "      # update variables\n",
+    "      prv_tok_dep = tok.dep_\n",
+    "      prv_tok_text = tok.text\n",
+    "  #############################################################\n",
+    "\n",
+    "  return [ent1.strip(), ent2.strip()]"
+   ]
+  },
+  {
+   "cell_type": "code",
+   "execution_count": null,
+   "id": "11bec388-fdb8-4823-9049-aa4cf328eba6",
+   "metadata": {},
+   "outputs": [],
+   "source": [
+    "entity_pairs = []\n",
+    "\n",
+    "for i in tqdm(candidate_sentences[\"sentence\"]):\n",
+    "  entity_pairs.append(get_entities(i))"
+   ]
+  },
+  {
+   "cell_type": "code",
+   "execution_count": null,
+   "id": "02f56072-ae65-4b15-a3b6-674701040568",
+   "metadata": {},
+   "outputs": [],
+   "source": [
+    "def get_relation(sent):\n",
+    "\n",
+    "  doc = nlp(sent)\n",
+    "\n",
+    "  # Matcher class object \n",
+    "  matcher = Matcher(nlp.vocab)\n",
+    "\n",
+    "  #define the pattern \n",
+    "  pattern = [{'DEP':'ROOT'}, \n",
+    "            {'DEP':'prep','OP':\"?\"},\n",
+    "            {'DEP':'agent','OP':\"?\"}, \n",
+    "            {'POS':'ADJ','OP':\"?\"}] \n",
+    "\n",
+    "  matcher.add(\"matching_1\", [pattern]) \n",
+    "\n",
+    "  matches = matcher(doc)\n",
+    "  k = len(matches) - 1\n",
+    "\n",
+    "  span = doc[matches[k][1]:matches[k][2]] \n",
+    "\n",
+    "  return(span.text)"
+   ]
+  },
+  {
+   "cell_type": "code",
+   "execution_count": null,
+   "id": "ee3a774f-9f2d-4a4c-a77a-04bc420d4864",
+   "metadata": {},
+   "outputs": [],
+   "source": [
+    "relations = [get_relation(i) for i in tqdm(candidate_sentences['sentence'])]"
+   ]
+  },
+  {
+   "cell_type": "code",
+   "execution_count": null,
+   "id": "c04581bb-46b5-48ce-bbe1-b465a789ad82",
+   "metadata": {},
+   "outputs": [],
+   "source": [
+    "# extract subject\n",
+    "source = [i[0] for i in entity_pairs]\n",
+    "\n",
+    "# extract object\n",
+    "target = [i[1] for i in entity_pairs]\n",
+    "\n",
+    "kg_df = pd.DataFrame({'source':source, 'target':target, 'edge':relations})"
+   ]
+  },
+  {
+   "cell_type": "code",
+   "execution_count": null,
+   "id": "b0fec1f2-d370-4d79-8a92-2ebdff2be420",
+   "metadata": {},
+   "outputs": [],
+   "source": [
+    "# create a directed-graph from a dataframe\n",
+    "G=nx.from_pandas_edgelist(kg_df, \"source\", \"target\", \n",
+    "                          edge_attr=True, create_using=nx.MultiDiGraph())"
+   ]
+  },
+  {
+   "cell_type": "code",
+   "execution_count": null,
+   "id": "39b80dbe-f991-4e12-b0a1-4026344af82f",
+   "metadata": {},
+   "outputs": [],
+   "source": [
+    "plt.figure(figsize=(12,12))\n",
+    "\n",
+    "pos = nx.spring_layout(G)\n",
+    "nx.draw(G, with_labels=True, node_color='skyblue', edge_cmap=plt.cm.Blues, pos = pos)\n",
+    "plt.show()"
+   ]
+  },
+  {
+   "cell_type": "code",
+   "execution_count": null,
+   "id": "be07f563-0b61-441f-bb24-a9e884eef1b8",
+   "metadata": {},
+   "outputs": [],
+   "source": [
+    "#https://www.kaggle.com/code/pavansanagapati/knowledge-graph-nlp-tutorial-bert-spacy-nltk"
+   ]
+  }
+ ],
+ "metadata": {
+  "kernelspec": {
+   "display_name": "Python 3 (ipykernel)",
+   "language": "python",
+   "name": "python3"
+  },
+  "language_info": {
+   "codemirror_mode": {
+    "name": "ipython",
+    "version": 3
+   },
+   "file_extension": ".py",
+   "mimetype": "text/x-python",
+   "name": "python",
+   "nbconvert_exporter": "python",
+   "pygments_lexer": "ipython3",
+   "version": "3.11.5"
+  }
+ },
+ "nbformat": 4,
+ "nbformat_minor": 5
+}
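The subject and object tests in `get_entities` come down to asking whether a dependency label such as `nsubj` or `dobj` contains `subj` or `obj`. The sketch below uses plain strings rather than spaCy tokens and contrasts substring membership with comparing the result of `str.find` to `True`; the latter only holds when the match starts at index 1, because `1 == True` in Python. The helper names and the sample labels are illustrative assumptions, not part of the commit.

```python
def label_contains(dep_label: str, fragment: str) -> bool:
    """Return True when `fragment` occurs anywhere in the dependency label."""
    return fragment in dep_label


def find_equals_true(dep_label: str, fragment: str) -> bool:
    """Mimic `dep_label.find(fragment) == True`: holds only for a match at index 1."""
    return dep_label.find(fragment) == True  # noqa: E712 (comparison is intentional)


if __name__ == "__main__":
    # Sample labels chosen for illustration; "obj" and "ROOT" show where the two tests differ
    # or where neither matches.
    for label in ["nsubj", "nsubjpass", "dobj", "pobj", "obj", "ROOT"]:
        for fragment in ("subj", "obj"):
            print(label, fragment,
                  label_contains(label, fragment),
                  find_equals_true(label, fragment))
```

For labels where the fragment starts at index 1 the two tests agree; membership is simply the form that does not depend on where the fragment sits.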

NLP.ipynb

ADDED

The diff for this file is too large to render.

app.py

ADDED


@@ -0,0 +1,172 @@


+import gradio as gr
+import os
+import fitz
+import re
+import spacy
+import spacy.cli
+import pandas as pd
+import bs4
+import requests
+from spacy import displacy
+from spacy.matcher import Matcher
+from spacy.tokens import Span
+import networkx as nx
+import matplotlib.pyplot as plt
+from tqdm import tqdm
+
+# Load the spaCy model, downloading it first if it is not installed.
+try:
+    nlp = spacy.load('en_core_web_sm')
+except OSError:
+    print("Model not found. Downloading...")
+    spacy.cli.download("en_core_web_sm")
+    nlp = spacy.load('en_core_web_sm')
+
+
+# def read_pdf(file):
+#     doc = fitz.open(file)
+#     text = ""
+#     for page in doc:
+#         text += page.get_text("text").split('\n')
+#     return text
+
+def read_csv(file):
+    # Read the file that was passed in rather than a hard-coded local path.
+    candidate_sentences = pd.read_csv(file)
+    return candidate_sentences.shape
+
+def get_entities(sent):
+    ## chunk 1
+    ent1 = ""
+    ent2 = ""
+
+    prv_tok_dep = ""  # dependency tag of previous token in the sentence
+    prv_tok_text = ""  # previous token in the sentence
+
+    prefix = ""
+    modifier = ""
+
+    #############################################################
+
+    for tok in nlp(sent):
+        ## chunk 2
+        # if token is a punctuation mark then move on to the next token
+        if tok.dep_ != "punct":
+            # check: token is a compound word or not
+            if tok.dep_ == "compound":
+                prefix = tok.text
+                # if the previous word was also a 'compound' then add the current word to it
+                if prv_tok_dep == "compound":
+                    prefix = prv_tok_text + " "+ tok.text
+
+            # check: token is a modifier or not
+            if tok.dep_.endswith("mod") == True:
+                modifier = tok.text
+                # if the previous word was also a 'compound' then add the current word to it
+                if prv_tok_dep == "compound":
+                    modifier = prv_tok_text + " "+ tok.text
+
+            ## chunk 3
+            if "subj" in tok.dep_:
+                ent1 = modifier +" "+ prefix + " "+ tok.text
+                prefix = ""
+                modifier = ""
+                prv_tok_dep = ""
+                prv_tok_text = ""
+
+            ## chunk 4
+            if "obj" in tok.dep_:
+                ent2 = modifier +" "+ prefix +" "+ tok.text
+
+            ## chunk 5
+            # update variables
+            prv_tok_dep = tok.dep_
+            prv_tok_text = tok.text
+    #############################################################
+
+    return [ent1.strip(), ent2.strip()]
+
+def get_relation(sent):
+
+    doc = nlp(sent)
+
+    # Matcher class object
+    matcher = Matcher(nlp.vocab)
+
+    #define the pattern
+    pattern = [{'DEP':'ROOT'},
+               {'DEP':'prep','OP':"?"},
+               {'DEP':'agent','OP':"?"},
+               {'POS':'ADJ','OP':"?"}]
+
+    matcher.add("matching_1", [pattern])
+
+    matches = matcher(doc)
+    k = len(matches) - 1
+
+    span = doc[matches[k][1]:matches[k][2]]
+
+    return(span.text)
+
+def ulify(elements):
+    string = "<ul>\n"
+    string += "\n".join(["<li>" + str(s) + "</li>" for s in elements])
+    string += "\n</ul>"
+    return string
+
+def execute_process(file, edge):
+    # candidate_sentences = pd.DataFrame(read_pdf(file), columns=['Sentences'])
+    candidate_sentences = pd.read_csv(file)
+
+    entity_pairs = []
+    for i in tqdm(candidate_sentences["sentence"]):
+        entity_pairs.append(get_entities(i))
+    relations = [get_relation(i) for i in tqdm(candidate_sentences['sentence'])]
+    # extract subject
+    source = [i[0] for i in entity_pairs]
+
+    # extract object
+    target = [i[1] for i in entity_pairs]
+    kg_df = pd.DataFrame({'source':source, 'target':target, 'edge':relations})
+
+    # create a variable of all unique edges
+    unique_edges = kg_df['edge'].unique() if kg_df['edge'].nunique() != 0 else None
+    # create a dataframe of all unique edges and their counts
+    edge_counts = kg_df['edge'].value_counts()
+    unique_edges_df = pd.DataFrame({'edge': edge_counts.index, 'count': edge_counts.values})
+
+    G=nx.from_pandas_edgelist(kg_df, "source", "target",
+                              edge_attr=True, create_using=nx.MultiDiGraph())
+
+    # An empty textbox typically arrives as "" rather than None, so test truthiness.
+    if edge:
+        G=nx.from_pandas_edgelist(kg_df[kg_df['edge']==edge], "source", "target",
+                                  edge_attr=True, create_using=nx.MultiDiGraph())
+        plt.figure(figsize=(12,12))
+        pos = nx.spring_layout(G)
+        nx.draw(G, with_labels=True, node_color='skyblue', edge_cmap=plt.cm.Blues, pos = pos)
+        plt.savefig("graph.png")
+        # return "graph.png", "\n".join(unique_edges)
+        return "graph.png", unique_edges_df
+
+    else:
+        plt.figure(figsize=(12,12))
+        pos = nx.spring_layout(G, k = 0.5) # k regulates the distance between nodes
+        nx.draw(G, with_labels=True, node_color='skyblue', node_size=1500, edge_cmap=plt.cm.Blues, pos = pos)
+        plt.savefig("graph.png")
+        # return "graph.png", "\n".join(unique_edges)
+        return "graph.png", unique_edges_df
+
+inputs = [
+    gr.File(label="Upload PDF"),
+    gr.Textbox(label="Graph a particular edge", type="text")
+]
+
+outputs = [
+    gr.Image(label="Generated graph"),
+    gr.Dataframe(label="Unique edges", type="pandas")
+]
+
+description = 'This app reads all text from a PDF document and lets the user generate a knowledge graph that illustrates the concepts and relationships within it'
+iface = gr.Interface(fn=execute_process, inputs=inputs, outputs=outputs, title="PDF Knowledge graph", description=description)
+iface.launch()
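`get_relation` keeps the last match the matcher returns and slices the document with that match's start and end offsets. Below is a minimal sketch of that selection step on plain `(match_id, start, end)` tuples, with a guard for the case where nothing matched (for example an empty sentence). The helper `last_match_bounds`, the token list and the sample tuples are hypothetical and only illustrate the indexing; they are not taken from the commit.

```python
from typing import List, Optional, Tuple

# (match_id, start, end): the tuple shape that spaCy's Matcher returns by default.
Match = Tuple[int, int, int]


def last_match_bounds(matches: List[Match]) -> Optional[Tuple[int, int]]:
    """Return (start, end) of the last match, or None when there are no matches."""
    if not matches:
        return None
    _, start, end = matches[-1]
    return start, end


if __name__ == "__main__":
    tokens = ["the", "film", "was", "directed", "by", "john"]
    # Hypothetical matcher output: a ROOT-only match, then a longer ROOT + agent match.
    matches = [(1, 3, 4), (1, 3, 5)]
    bounds = last_match_bounds(matches)
    if bounds is not None:
        start, end = bounds
        print(" ".join(tokens[start:end]))
    # With no matches the helper returns None instead of raising IndexError.
    print(last_match_bounds([]))
```

Returning `None` for the empty case leaves it to the caller to decide whether to skip the sentence or record an empty relation.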

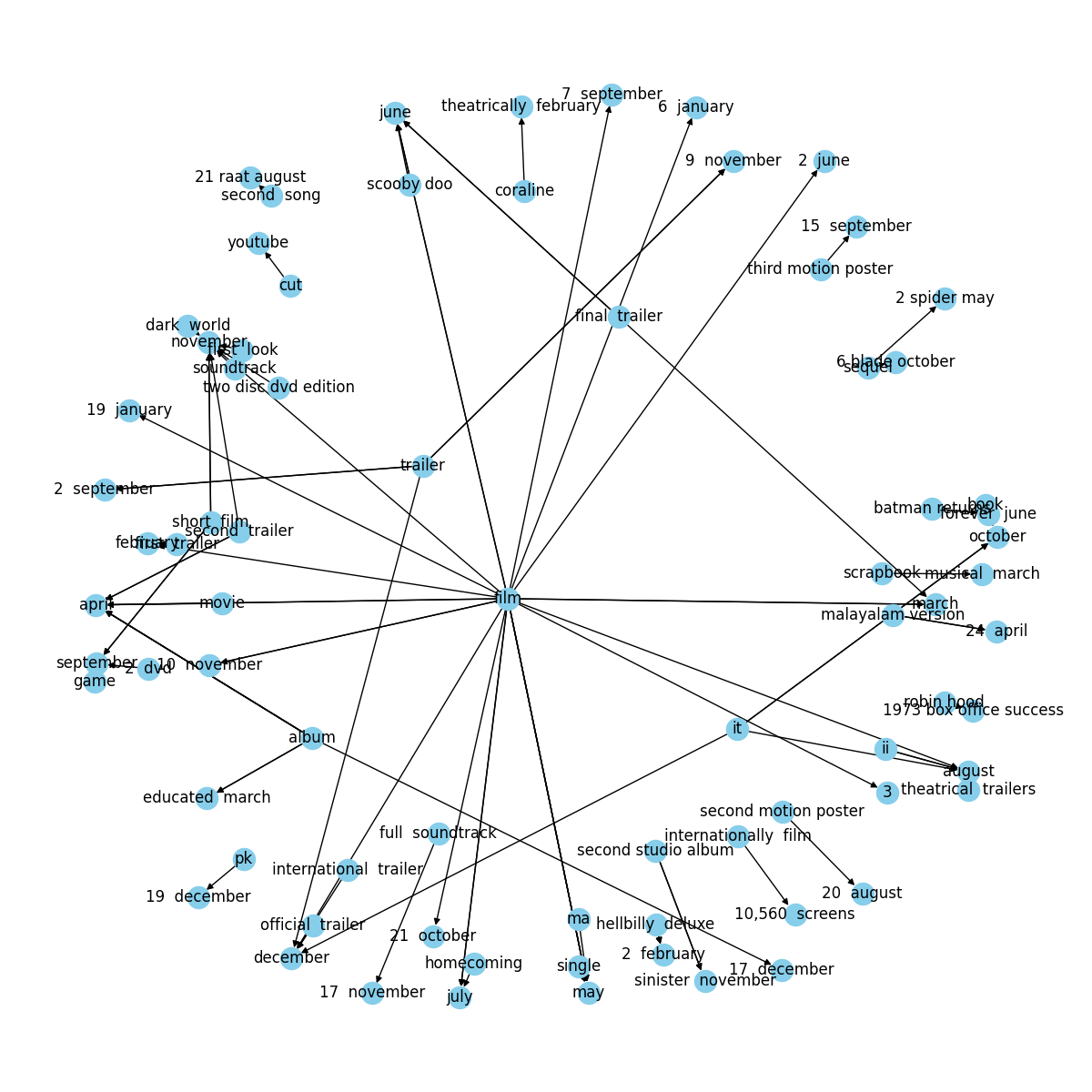

graph.png

ADDED


requirements.txt

ADDED


@@ -0,0 +1,4 @@


+gradio
+PyMuPDF
+transformers
+plotly
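`app.py` imports `spacy`, `pandas`, `networkx`, `matplotlib`, `tqdm`, `bs4` and `requests` in addition to the packages listed here, so an environment built only from this file may fail at import time unless those arrive as transitive dependencies. The sketch below checks which top-level modules are importable before the app is launched. The function name `missing_modules` is an assumption for illustration, and the module list is copied from the import block of `app.py`.

```python
import importlib.util
from typing import Iterable, List


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Top-level modules imported by app.py (the fitz module is provided by PyMuPDF).
    required = ["gradio", "fitz", "spacy", "pandas", "bs4", "requests",
                "networkx", "matplotlib", "tqdm"]
    absent = missing_modules(required)
    print("missing:", ", ".join(absent) if absent else "none")
```

The check only covers importability; the `en_core_web_sm` model is a separate download, which `app.py` already attempts when loading fails.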