Runtime error

Upload folder using huggingface_hub

- .gitattributes +0 -34
- .gradio/certificate.pem +31 -0
- .gradio/flagged/dataset1.csv +2 -0
- .gradio/flagged/image_file/1b2dea696b68f6c31e06/clipboard.png +0 -0
- README.md +119 -7
- app.py +248 -0
- cnn_model.h5 +3 -0
- packages.txt +2 -0
- project3.ipynb +0 -0
- requirements.txt +16 -0
- xception_model.weights.h5 +3 -0

.gitattributes

CHANGED

```diff
@@ -1,35 +1 @@
-*.7z filter=lfs diff=lfs merge=lfs -text
-*.arrow filter=lfs diff=lfs merge=lfs -text
-*.bin filter=lfs diff=lfs merge=lfs -text
-*.bz2 filter=lfs diff=lfs merge=lfs -text
-*.ckpt filter=lfs diff=lfs merge=lfs -text
-*.ftz filter=lfs diff=lfs merge=lfs -text
-*.gz filter=lfs diff=lfs merge=lfs -text
 *.h5 filter=lfs diff=lfs merge=lfs -text
-*.joblib filter=lfs diff=lfs merge=lfs -text
-*.lfs.* filter=lfs diff=lfs merge=lfs -text
-*.mlmodel filter=lfs diff=lfs merge=lfs -text
-*.model filter=lfs diff=lfs merge=lfs -text
-*.msgpack filter=lfs diff=lfs merge=lfs -text
-*.npy filter=lfs diff=lfs merge=lfs -text
-*.npz filter=lfs diff=lfs merge=lfs -text
-*.onnx filter=lfs diff=lfs merge=lfs -text
-*.ot filter=lfs diff=lfs merge=lfs -text
-*.parquet filter=lfs diff=lfs merge=lfs -text
-*.pb filter=lfs diff=lfs merge=lfs -text
-*.pickle filter=lfs diff=lfs merge=lfs -text
-*.pkl filter=lfs diff=lfs merge=lfs -text
-*.pt filter=lfs diff=lfs merge=lfs -text
-*.pth filter=lfs diff=lfs merge=lfs -text
-*.rar filter=lfs diff=lfs merge=lfs -text
-*.safetensors filter=lfs diff=lfs merge=lfs -text
-saved_model/**/* filter=lfs diff=lfs merge=lfs -text
-*.tar.* filter=lfs diff=lfs merge=lfs -text
-*.tar filter=lfs diff=lfs merge=lfs -text
-*.tflite filter=lfs diff=lfs merge=lfs -text
-*.tgz filter=lfs diff=lfs merge=lfs -text
-*.wasm filter=lfs diff=lfs merge=lfs -text
-*.xz filter=lfs diff=lfs merge=lfs -text
-*.zip filter=lfs diff=lfs merge=lfs -text
-*.zst filter=lfs diff=lfs merge=lfs -text
-*tfevents* filter=lfs diff=lfs merge=lfs -text
```
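After this change `*.h5` is the only pattern left in `.gitattributes`, so among the files in this commit only the two model files match an LFS rule. A minimal sketch for checking which paths a rule catches, assuming that Python's `fnmatch` applied to the basename is a close enough stand-in for gitattributes matching of simple slash-free patterns like `*.h5` (it does not implement every gitattributes rule, such as `**` or patterns containing a slash). The helper name `tracked_by_lfs` is hypothetical:

```python
from fnmatch import fnmatch

# The only LFS rule left in .gitattributes after this commit
LFS_PATTERNS = ["*.h5"]


def tracked_by_lfs(path: str) -> bool:
    """Rough check: match the path's basename against each slash-free pattern."""
    basename = path.rsplit("/", 1)[-1]
    return any(fnmatch(basename, pattern) for pattern in LFS_PATTERNS)


for path in [
    "cnn_model.h5",
    "xception_model.weights.h5",
    "project3.ipynb",
    ".gradio/flagged/image_file/1b2dea696b68f6c31e06/clipboard.png",
]:
    print(path, "->", "matches LFS rule" if tracked_by_lfs(path) else "no LFS rule")
```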

.gradio/certificate.pem

ADDED

@@ -0,0 +1,31 @@

```
-----BEGIN CERTIFICATE-----
MIIFazCCA1OgAwIBAgIRAIIQz7DSQONZRGPgu2OCiwAwDQYJKoZIhvcNAQELBQAw
TzELMAkGA1UEBhMCVVMxKTAnBgNVBAoTIEludGVybmV0IFNlY3VyaXR5IFJlc2Vh
cmNoIEdyb3VwMRUwEwYDVQQDEwxJU1JHIFJvb3QgWDEwHhcNMTUwNjA0MTEwNDM4
WhcNMzUwNjA0MTEwNDM4WjBPMQswCQYDVQQGEwJVUzEpMCcGA1UEChMgSW50ZXJu
ZXQgU2VjdXJpdHkgUmVzZWFyY2ggR3JvdXAxFTATBgNVBAMTDElTUkcgUm9vdCBY
MTCCAiIwDQYJKoZIhvcNAQEBBQADggIPADCCAgoCggIBAK3oJHP0FDfzm54rVygc
h77ct984kIxuPOZXoHj3dcKi/vVqbvYATyjb3miGbESTtrFj/RQSa78f0uoxmyF+
0TM8ukj13Xnfs7j/EvEhmkvBioZxaUpmZmyPfjxwv60pIgbz5MDmgK7iS4+3mX6U
A5/TR5d8mUgjU+g4rk8Kb4Mu0UlXjIB0ttov0DiNewNwIRt18jA8+o+u3dpjq+sW
T8KOEUt+zwvo/7V3LvSye0rgTBIlDHCNAymg4VMk7BPZ7hm/ELNKjD+Jo2FR3qyH
B5T0Y3HsLuJvW5iB4YlcNHlsdu87kGJ55tukmi8mxdAQ4Q7e2RCOFvu396j3x+UC
B5iPNgiV5+I3lg02dZ77DnKxHZu8A/lJBdiB3QW0KtZB6awBdpUKD9jf1b0SHzUv
KBds0pjBqAlkd25HN7rOrFleaJ1/ctaJxQZBKT5ZPt0m9STJEadao0xAH0ahmbWn
OlFuhjuefXKnEgV4We0+UXgVCwOPjdAvBbI+e0ocS3MFEvzG6uBQE3xDk3SzynTn
jh8BCNAw1FtxNrQHusEwMFxIt4I7mKZ9YIqioymCzLq9gwQbooMDQaHWBfEbwrbw
qHyGO0aoSCqI3Haadr8faqU9GY/rOPNk3sgrDQoo//fb4hVC1CLQJ13hef4Y53CI
rU7m2Ys6xt0nUW7/vGT1M0NPAgMBAAGjQjBAMA4GA1UdDwEB/wQEAwIBBjAPBgNV
HRMBAf8EBTADAQH/MB0GA1UdDgQWBBR5tFnme7bl5AFzgAiIyBpY9umbbjANBgkq
hkiG9w0BAQsFAAOCAgEAVR9YqbyyqFDQDLHYGmkgJykIrGF1XIpu+ILlaS/V9lZL
ubhzEFnTIZd+50xx+7LSYK05qAvqFyFWhfFQDlnrzuBZ6brJFe+GnY+EgPbk6ZGQ
3BebYhtF8GaV0nxvwuo77x/Py9auJ/GpsMiu/X1+mvoiBOv/2X/qkSsisRcOj/KK
NFtY2PwByVS5uCbMiogziUwthDyC3+6WVwW6LLv3xLfHTjuCvjHIInNzktHCgKQ5
ORAzI4JMPJ+GslWYHb4phowim57iaztXOoJwTdwJx4nLCgdNbOhdjsnvzqvHu7Ur
TkXWStAmzOVyyghqpZXjFaH3pO3JLF+l+/+sKAIuvtd7u+Nxe5AW0wdeRlN8NwdC
jNPElpzVmbUq4JUagEiuTDkHzsxHpFKVK7q4+63SM1N95R1NbdWhscdCb+ZAJzVc
oyi3B43njTOQ5yOf+1CceWxG1bQVs5ZufpsMljq4Ui0/1lvh+wjChP4kqKOJ2qxq
4RgqsahDYVvTH9w7jXbyLeiNdd8XM2w9U/t7y0Ff/9yi0GE44Za4rF2LN9d11TPA
mRGunUHBcnWEvgJBQl9nJEiU0Zsnvgc/ubhPgXRR4Xq37Z0j4r7g1SgEEzwxA57d
emyPxgcYxn/eR44/KJ4EBs+lVDR3veyJm+kXQ99b21/+jh5Xos1AnX5iItreGCc=
-----END CERTIFICATE-----
```

.gradio/flagged/dataset1.csv

ADDED

@@ -0,0 +1,2 @@

```
image_file,Select Model,Prediction,Confidence,Saliency Map,Explanation,Logs,timestamp
.gradio/flagged/image_file/1b2dea696b68f6c31e06/clipboard.png,Custom CNN,,,,,,2024-11-19 13:58:41.965294
```

.gradio/flagged/image_file/1b2dea696b68f6c31e06/clipboard.png

ADDED

README.md

CHANGED

|

@@ -1,12 +1,124 @@

|

|

| 1 |

---

|

| 2 |

-

title:

|

| 3 |

-

|

| 4 |

-

colorFrom: red

|

| 5 |

-

colorTo: yellow

|

| 6 |

sdk: gradio

|

| 7 |

sdk_version: 5.6.0

|

| 8 |

-

app_file: app.py

|

| 9 |

-

pinned: false

|

| 10 |

---

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 11 |

|

| 12 |

-

|

|

|

|

| 1 |

---

|

| 2 |

+

title: BRAIN_TUMOR_DEDECTOR

|

| 3 |

+

app_file: app.py

|

|

|

|

|

|

|

| 4 |

sdk: gradio

|

| 5 |

sdk_version: 5.6.0

|

|

|

|

|

|

|

| 6 |

---

|

| 7 |

+

# 🧠 Brain Tumor MRI Classification

|

| 8 |

+

|

| 9 |

+

[](https://www.python.org/)

|

| 10 |

+

[](https://www.tensorflow.org/)

|

| 11 |

+

[](https://streamlit.io/)

|

| 12 |

+

|

| 13 |

+

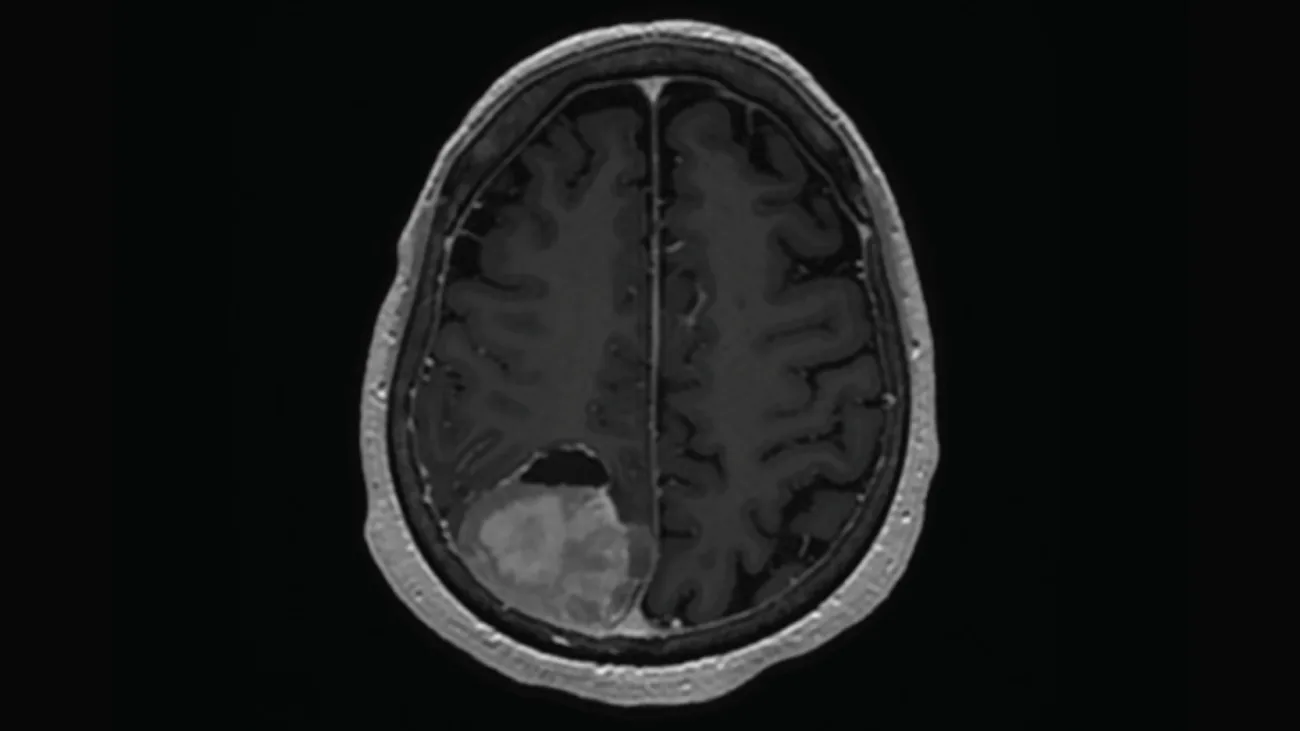

A deep learning project that uses transfer learning and custom CNN architectures to classify brain tumors from MRI scans into four categories: Glioma, Meningioma, Pituitary, and No Tumor.

|

| 14 |

+

|

| 15 |

+

## 🎯 Project Overview

|

| 16 |

+

|

| 17 |

+

This project implements two different deep learning approaches:

|

| 18 |

+

1. **Transfer Learning with Xception**: Leveraging a pre-trained model for enhanced accuracy

|

| 19 |

+

2. **Custom CNN**: A dedicated convolutional neural network built from scratch

|

| 20 |

+

|

| 21 |

+

Both models are deployed through a user-friendly Streamlit web interface that provides:

|

| 22 |

+

- Real-time tumor classification

|

| 23 |

+

- Confidence scores

|

| 24 |

+

- Saliency maps for model interpretability

|

| 25 |

+

- AI-generated explanations of the model's focus areas

|

| 26 |

+

|

| 27 |

+

## 📊 Model Architecture & Performance

|

| 28 |

+

|

| 29 |

+

### Transfer Learning Model (Xception)

|

| 30 |

+

- Pre-trained on ImageNet dataset

|

| 31 |

+

- 36 convolutional layers

|

| 32 |

+

- 21 million parameters

|

| 33 |

+

- Features:

|

| 34 |

+

- Max pooling

|

| 35 |

+

- Dropout layers for regularization

|

| 36 |

+

- Softmax activation for classification

|

| 37 |

+

- Performance metrics:

|

| 38 |

+

- High accuracy on test set

|

| 39 |

+

- Robust against overfitting

|

| 40 |

+

|

| 41 |

+

### Custom CNN Model

|

| 42 |

+

- 4 convolutional layers

|

| 43 |

+

- 4.7 million parameters

|

| 44 |

+

- Architecture:

|

| 45 |

+

- Multiple Conv2D layers with ReLU activation

|

| 46 |

+

- MaxPooling2D layers

|

| 47 |

+

- Dropout for regularization

|

| 48 |

+

- Dense layers with L2 regularization

|

| 49 |

+

- Softmax output layer

|

| 50 |

+

|

| 51 |

+

## 🛠️ Installation

|

| 52 |

+

|

| 53 |

+

```bash

|

| 54 |

+

# Clone the repository

|

| 55 |

+

git clone https://github.com/stonewerner/brain-tumor-ML.git

|

| 56 |

+

cd brain-tumor-ML

|

| 57 |

+

|

| 58 |

+

# Install required packages

|

| 59 |

+

pip install -r requirements.txt

|

| 60 |

+

```

|

| 61 |

+

|

| 62 |

+

## 📦 Dependencies

|

| 63 |

+

|

| 64 |

+

- tensorflow >= 2.0

|

| 65 |

+

- streamlit

|

| 66 |

+

- numpy

|

| 67 |

+

- pandas

|

| 68 |

+

- pillow

|

| 69 |

+

- plotly

|

| 70 |

+

- opencv-python

|

| 71 |

+

- google-generativeai

|

| 72 |

+

- python-dotenv

|

| 73 |

+

|

| 74 |

+

## 🚀 Usage

|

| 75 |

+

|

| 76 |

+

|

| 77 |

+

### Running the Web App

|

| 78 |

+

|

| 79 |

+

```bash

|

| 80 |

+

streamlit run app.py

|

| 81 |

+

```

|

| 82 |

+

|

| 83 |

+

## 🖥️ Web Interface Features

|

| 84 |

+

|

| 85 |

+

1. **Image Upload**: Support for jpg, jpeg, and png formats

|

| 86 |

+

2. **Model Selection**: Choose between Transfer Learning and Custom CNN

|

| 87 |

+

3. **Visualization**:

|

| 88 |

+

- Original MRI scan

|

| 89 |

+

- Saliency map highlighting model focus areas

|

| 90 |

+

4. **Results Display**:

|

| 91 |

+

- Predicted tumor type

|

| 92 |

+

- Confidence scores

|

| 93 |

+

- Interactive probability chart

|

| 94 |

+

- AI-generated explanation of the classification

|

| 95 |

+

|

| 96 |

+

## 📈 Data Processing

|

| 97 |

+

|

| 98 |

+

The project includes robust data handling:

|

| 99 |

+

- Image preprocessing and augmentation

|

| 100 |

+

- Brightness adjustment for training data

|

| 101 |

+

- Proper train/validation/test splits

|

| 102 |

+

- Standardized image sizing

|

| 103 |

+

|

| 104 |

+

## 🔍 Model Interpretability

|

| 105 |

+

|

| 106 |

+

- **Saliency Maps**: Visual explanation of model decisions

|

| 107 |

+

- **Region Focus**: Highlights critical areas in MRI scans

|

| 108 |

+

- **AI Explanations**: Generated using Google's Gemini model

|

| 109 |

+

- **Confidence Metrics**: Probability distribution across classes

|

| 110 |

+

|

| 111 |

+

## 👥 Contributing

|

| 112 |

+

|

| 113 |

+

1. Fork the repository

|

| 114 |

+

2. Create your feature branch (`git checkout -b feature/AmazingFeature`)

|

| 115 |

+

3. Commit your changes (`git commit -m 'Add some AmazingFeature'`)

|

| 116 |

+

4. Push to the branch (`git push origin feature/AmazingFeature`)

|

| 117 |

+

5. Open a Pull Request

|

| 118 |

+

|

| 119 |

+

|

| 120 |

+

## 📧 Contact

|

| 121 |

+

|

| 122 |

+

Stone Werner - stonewerner.com

|

| 123 |

|

| 124 |

+

Project Link: [https://github.com/stonewerner/brain-tumor-ML](https://github.com/stonewerner/brain-tumor-ML)

|
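The README lists brightness adjustment as a training-time augmentation but does not state the range used. A minimal NumPy sketch of multiplicative brightness jitter, assuming uint8 RGB input and a hypothetical factor range of 0.8 to 1.2; this is an illustration of the technique, not necessarily how `project3.ipynb` implements it:

```python
import numpy as np


def adjust_brightness(img: np.ndarray, factor: float) -> np.ndarray:
    """Scale a uint8 image's intensities by `factor`, clipping to 0-255."""
    scaled = img.astype(np.float32) * factor
    return np.clip(scaled, 0, 255).astype(np.uint8)


def random_brightness(img: np.ndarray, low: float = 0.8, high: float = 1.2, rng=None) -> np.ndarray:
    """Apply a factor drawn uniformly from [low, high); the default range is an assumption."""
    rng = np.random.default_rng() if rng is None else rng
    return adjust_brightness(img, rng.uniform(low, high))


scan = np.full((2, 2, 3), 200, dtype=np.uint8)
print(adjust_brightness(scan, 1.5)[0, 0])  # [255 255 255]
```

Clipping matters here: without it, bright pixels scaled past 255 would wrap around when cast back to `uint8`.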

app.py

ADDED

@@ -0,0 +1,248 @@

```python
import streamlit as st
import tensorflow as tf
from tensorflow.keras.models import load_model
from tensorflow.keras.preprocessing import image
import numpy as np
import plotly.graph_objects as go
import cv2
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Dropout, Flatten
from tensorflow.keras.optimizers import Adamax
from tensorflow.keras.metrics import Precision, Recall
import google.generativeai as genai
# from google.colab import userdata
import PIL.Image
import os
from dotenv import load_dotenv
load_dotenv()

genai.configure(api_key=os.getenv("GOOGLE_API_KEY"))

output_dir = 'saliency_maps'
os.makedirs(output_dir, exist_ok=True)
```
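`genai.configure` receives `os.getenv("GOOGLE_API_KEY")`, which is `None` when the key is missing, so a missing key is likely to surface only later, when `generate_explanation` calls the API. A small fail-fast sketch; the helper name `require_env` is hypothetical and not part of the app. On a Hugging Face Space the key would normally come from a repository secret, which is exposed to the app as an environment variable:

```python
import os


def require_env(name: str) -> str:
    """Return the value of environment variable `name`, or fail with a clear message."""
    value = os.getenv(name)
    if not value:  # treat unset and empty the same way
        raise RuntimeError(
            f"{name} is not set; add it to a local .env file or to the Space's secrets."
        )
    return value


# Hypothetical use in app.py, in place of the bare os.getenv call:
# genai.configure(api_key=require_env("GOOGLE_API_KEY"))
```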

```python
def generate_explanation(img_path, model_prediction, confidence):

    prompt = f"""You are an expert neurologist. You are tasked with explaining a saliency map of a brain tumor MRI scan.
    The saliency map was generated by a deep learning model that was trained to classify brain tumors as either
    glioma, meningioma, pituitary, or no tumor.

    The saliency map highlights the regions of the image that the machine learning model is focusing on to make the predictions.

    The deep learning model predicted the image to be of class '{model_prediction}' with a confidence of {confidence * 100}%.

    In your response:
    - Explain what regions of the brain the model is focusing on, based on the saliency map. Refer to the regions highlighted in light cyan, those are the regions where the model is focusing on.
    - Explain possible reasons why the model made the prediction it did.
    - Don't mention anything like 'The saliency map highlights the regions the model is focusing on, which are in light cyan' in your explanation.
    - Keep your explanation to 5 sentences max.

    Your response will go directly on the report to the doctor and patient, so don't add any extra phrases like 'Sure!' or ask any questions at the end
    Let's think step by step about this.
    """

    img = PIL.Image.open(img_path)

    model = genai.GenerativeModel(model_name="gemini-1.5-flash")
    response = model.generate_content([prompt, img])

    return response.text
```
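The prompt interpolates `{confidence * 100}%`, which prints the full float representation of the probability rather than a rounded percentage. A minimal sketch using Python's `%` format specifier instead; both helper names are hypothetical, and the sentence template is copied from the prompt above:

```python
def format_confidence(confidence) -> str:
    """Render a probability in [0, 1] as a percentage with two decimals."""
    # float() also accepts NumPy scalars such as np.float32
    return f"{float(confidence):.2%}"


def prediction_sentence(label: str, confidence) -> str:
    """The prediction line from the prompt, with a rounded confidence."""
    return (
        f"The deep learning model predicted the image to be of class "
        f"'{label}' with a confidence of {format_confidence(confidence)}."
    )


print(prediction_sentence("Glioma", 0.123456))
# The deep learning model predicted the image to be of class 'Glioma' with a confidence of 12.35%.
```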

```python
def generate_saliency_map(model, img_array, class_index, img_size):
    with tf.GradientTape() as tape:
        img_tensor = tf.convert_to_tensor(img_array)
        tape.watch(img_tensor)
        predictions = model(img_tensor)
        target_class = predictions[:, class_index]

    gradients = tape.gradient(target_class, img_tensor)
    gradients = tf.math.abs(gradients)
    gradients = tf.reduce_max(gradients, axis=-1)
    gradients = gradients.numpy().squeeze()

    # Resize gradients to match original image size
    gradients = cv2.resize(gradients, img_size)

    # Create a circular mask for the brain area
    center = (gradients.shape[0] // 2, gradients.shape[1] // 2)
    radius = min(center[0], center[1]) - 10
    y, x = np.ogrid[:gradients.shape[0], :gradients.shape[1]]
    mask = (x - center[0])**2 + (y - center[1])**2 <= radius**2

    # Apply mask to gradients
    gradients = gradients * mask

    # Normalize only the brain area
    brain_gradients = gradients[mask]
    if brain_gradients.max() > brain_gradients.min():
        brain_gradients = (brain_gradients - brain_gradients.min()) / (brain_gradients.max() - brain_gradients.min())
    gradients[mask] = brain_gradients

    # Apply a higher threshold
    threshold = np.percentile(gradients[mask], 80)
    gradients[gradients < threshold] = 0

    # Apply more aggressive smoothing
    gradients = cv2.GaussianBlur(gradients, (11, 11), 0)

    # Create a heatmap overlay with enhanced contrast
    heatmap = cv2.applyColorMap(np.uint8(255 * gradients), cv2.COLORMAP_JET)
    heatmap = cv2.cvtColor(heatmap, cv2.COLOR_BGR2RGB)

    # Resize heatmap to match original image size
    heatmap = cv2.resize(heatmap, img_size)

    # Superimpose the heatmap on original image with increased opacity
    original_img = image.img_to_array(img)
    superimposed_img = heatmap * 0.7 + original_img * 0.3
    superimposed_img = superimposed_img.astype(np.uint8)

    img_path = os.path.join(output_dir, uploaded_file.name)
    with open(img_path, "wb") as f:
        f.write(uploaded_file.getbuffer())

    saliency_map_path = f'saliency_maps/{uploaded_file.name}'

    # Save saliency map
    cv2.imwrite(saliency_map_path, cv2.cvtColor(superimposed_img, cv2.COLOR_RGB2BGR))

    return superimposed_img
```
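After taking the absolute input gradients and their maximum over the colour channels, `generate_saliency_map` restricts them to a central disc, min-max normalises inside that disc, and zeroes everything below the 80th percentile. The NumPy-only sketch below reproduces those three steps on a 2-D gradient array so they can be checked without TensorFlow or OpenCV; it omits the Gaussian blur and colour mapping. It names the centre row and column explicitly, whereas the original compares `x` with `center[0]` (a row index); for the square 299 x 299 and 224 x 224 inputs the app uses, both give the same mask. The original function also reads `img` and `uploaded_file` from module scope instead of taking them as arguments, which works inside the Streamlit script but makes it hard to reuse. The function name and parameters here are illustrative:

```python
import numpy as np


def postprocess_saliency(grad: np.ndarray, margin: int = 10, pct: float = 80.0) -> np.ndarray:
    """Mask, normalise and threshold a 2-D saliency array of shape (H, W)."""
    h, w = grad.shape
    cy, cx = h // 2, w // 2            # centre row and column
    radius = min(cy, cx) - margin      # keep a margin inside the image border
    y, x = np.ogrid[:h, :w]            # y: (H, 1) row indices, x: (1, W) column indices
    mask = (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2

    out = np.where(mask, grad, 0.0).astype(np.float32)

    # Min-max normalise the values inside the disc only
    inside = out[mask]
    lo, hi = inside.min(), inside.max()
    if hi > lo:
        out[mask] = (inside - lo) / (hi - lo)

    # Keep roughly the top (100 - pct)% of in-disc values and zero the rest
    threshold = np.percentile(out[mask], pct)
    out[out < threshold] = 0.0
    return out


demo = postprocess_saliency(np.random.default_rng(0).random((64, 64)))
print(demo.shape, float(demo.max()))  # (64, 64) 1.0
```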

```python
def load_xception_model(model_path):
    img_shape=(299, 299, 3)
    base_model = tf.keras.applications.Xception(include_top=False, weights="imagenet", input_shape=img_shape, pooling='max')

    model = Sequential([
        base_model,
        Flatten(),
        Dropout(rate=0.3),
        Dense(128, activation='relu'),
        Dropout(rate=0.25),
        Dense(4, activation='softmax')
    ])

    model.build((None,) + img_shape)

    # Compile the model
    model.compile(Adamax(learning_rate=0.001),
                  loss='categorical_crossentropy',
                  metrics=['accuracy', Precision(), Recall()])
    model.load_weights(model_path)
    return model
```
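The classification head that `load_xception_model` stacks on the Xception base can be sized by hand: with `pooling='max'` the base emits a 2048-dimensional vector (Xception's last block has 2048 channels), `Flatten` and `Dropout` add no weights, and each `Dense` layer has `inputs x units` weights plus `units` biases. A quick check of that arithmetic; `dense_params` is a hypothetical helper:

```python
def dense_params(n_in: int, units: int) -> int:
    """Weights (n_in x units) plus one bias per unit for a Dense layer."""
    return n_in * units + units


XCEPTION_FEATURES = 2048  # channels of Xception's last block, pooled to a vector by pooling='max'

head_params = dense_params(XCEPTION_FEATURES, 128) + dense_params(128, 4)
print(head_params)  # 262788
```

That is about 0.26 million parameters on top of the roughly 20.9 million in Xception without its ImageNet top, consistent with the README's figure of 21 million.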

```python
st.title("Brain Tumor Classification")

st.write("Upload an image of a brain MRI scan to classify.")

uploaded_file = st.file_uploader("Choose an image...", type=["jpg", "jpeg", "png"])

if uploaded_file is not None:
    selected_model = st.radio(
        "Select Model",
        ("Transfer Learning - Xception", "Custom CNN")
    )

    if selected_model == "Transfer Learning - Xception":
        model = load_xception_model('xception_model.weights.h5')
        img_size=(299, 299)
    else:
        model = load_model('cnn_model.h5')
        img_size = (224, 224)

    labels = ['Glioma', 'Meningioma', 'No Tumor', 'Pituitary']
    img = image.load_img(uploaded_file, target_size=img_size)
    img_array = image.img_to_array(img)
    img_array = np.expand_dims(img_array, axis=0)
    img_array /= 255.0

    prediction = model.predict(img_array)

    # Get the class with the highest probability
    class_index = np.argmax(prediction[0])
    result = labels[class_index]

    saliency_map = generate_saliency_map(model, img_array, class_index, img_size)

    col1, col2 = st.columns(2)
    with col1:
        st.image(uploaded_file, caption='Uploaded Image', use_column_width=True)
    with col2:
        st.image(saliency_map, caption='Saliency Map', use_column_width=True)

    st.write("## Classification Results")

    result_container = st.container()
    result_container = st.container()
    result_container.markdown(
        f"""
        <div style="background-color: #000000; color: #ffffff; padding: 30px; border-radius: 15px;">
        <div style="display: flex; justify-content: space-between; align-items: center;">
        <div style="flex: 1; text-align: center;">
        <h3 style="color: #ffffff; margin-bottom: 10px; font-size: 20px;">Prediction</h3>
        <p style="font-size: 36px; font-weight: 800; color: #FF0000; margin: 0;">
        {result}
        </p>
        </div>
        <div style="width: 2px; height: 80px; background-color: #ffffff; margin: 0 20px;"></div>
        <div style="flex: 1; text-align: center;">
        <h3 style="color: #ffffff; margin-bottom: 10px; font-size: 20px;">Confidence</h3>
        <p style="font-size: 36px; font-weight: 800; color: #2196F3; margin: 0;">
        {prediction[0][class_index]:.4%}
        </p>
        </div>
        </div>
        </div>
        """,
        unsafe_allow_html=True
    )

    # Prepare data for Plotly chart
    probabilities = prediction[0]
    sorted_indices = np.argsort(probabilities)[::-1]
    sorted_labels = [labels[i] for i in sorted_indices]
    sorted_probabilities = probabilities[sorted_indices]

    # Create Plotly bar chart
    fig = go.Figure(go.Bar(
        x=sorted_probabilities,
        y=sorted_labels,
        orientation='h',
        marker_color=['red' if label == result else 'blue' for label in sorted_labels]
    ))

    # Customize chart layout
    fig.update_layout(
        title='Probability for each class',
        xaxis_title='Probability',
        yaxis_title='Class',
        height=400,
        width=600,
        yaxis=dict(autorange='reversed')
    )

    # Add value labels to the bars
    for i, prob in enumerate(sorted_probabilities):
        fig.add_annotation(
            x=prob,
            y=i,
            text=f'{prob:.4f}',
            showarrow=False,
            xanchor='left',
            xshift=5
        )

    # Display Plotly chart
    st.plotly_chart(fig)

    saliency_map_path = f'saliency_maps/{uploaded_file.name}'
    explanation = generate_explanation(saliency_map_path, result, prediction[0][class_index])

    st.write("## Explanation")
    st.write(explanation)
```
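The inference path scales pixels to [0, 1], adds a batch dimension, takes the `argmax` as the prediction, and sorts the four probabilities in descending order for the bar chart. The sketch below isolates that bookkeeping in NumPy so it can be checked without a model; `to_batch` and `rank_predictions` are hypothetical names. It assumes, as the app does, that the label order `['Glioma', 'Meningioma', 'No Tumor', 'Pituitary']` matches the class indices used in training; alphabetically sorted class folders would give this order, but the training notebook is not shown here, so that is an assumption:

```python
import numpy as np

LABELS = ['Glioma', 'Meningioma', 'No Tumor', 'Pituitary']


def to_batch(img_array: np.ndarray) -> np.ndarray:
    """(H, W, 3) image array -> (1, H, W, 3) float32 batch scaled to [0, 1]."""
    return np.expand_dims(img_array.astype(np.float32) / 255.0, axis=0)


def rank_predictions(probs, labels=LABELS):
    """Return (label, probability) pairs from most to least likely."""
    probs = np.asarray(probs, dtype=np.float64)
    order = np.argsort(probs)[::-1]  # descending, as in the app's chart
    return [(labels[i], float(probs[i])) for i in order]


ranked = rank_predictions([0.10, 0.60, 0.05, 0.25])
print(ranked[0])  # ('Meningioma', 0.6)
```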

cnn_model.h5

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:4bebec7bad9a17d4274d47327ef4eb98d76ab1966a38e7abd14817a5ba93f89f
size 57367792
```
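In a plain `git clone` without Git LFS, `cnn_model.h5` and `xception_model.weights.h5` are three-line pointer files like the one above rather than HDF5 files, so `load_model` and `load_weights` would fail on them. A minimal sketch that parses such a pointer and reports the size of the real object, assuming the `key value` line format shown here; `parse_lfs_pointer` is a hypothetical name:

```python
def parse_lfs_pointer(text: str) -> dict:
    """Parse a Git LFS pointer file into its version, hash and size fields."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")  # each line is "<key> <value>"
        fields[key] = value
    algorithm, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "algorithm": algorithm,
        "digest": digest,
        "size": int(fields["size"]),
    }


CNN_POINTER = """version https://git-lfs.github.com/spec/v1
oid sha256:4bebec7bad9a17d4274d47327ef4eb98d76ab1966a38e7abd14817a5ba93f89f
size 57367792
"""

info = parse_lfs_pointer(CNN_POINTER)
print(f"{info['size'] / 2**20:.1f} MiB")  # 54.7 MiB
```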

packages.txt

ADDED

@@ -0,0 +1,2 @@

```
libgl1
libglib2.0-0
```

project3.ipynb

ADDED

The diff for this file is too large to render.

requirements.txt

ADDED

@@ -0,0 +1,16 @@

```
tensorflow>=2.0.0
streamlit>=1.0.0
numpy>=1.19.2
pandas>=1.1.3
Pillow>=8.0.0
matplotlib>=3.3.2
seaborn>=0.11.0
scikit-learn>=0.23.2
plotly>=4.14.3
opencv-python>=4.5.1
google-generativeai>=0.3.0
python-dotenv>=0.19.0
pyngrok>=5.0.0
kaggle>=1.5.12
tensorflow-hub>=0.12.0
protobuf>=3.20.0,<4.0.0
```

xception_model.weights.h5

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:a5c510a9f88fdd7618ba0542c95351e537fc12fa0a451d372c4dfc8e5e14dfa0
size 253528168
```