End of training

Browse files- README.md +5 -5

- all_results.json +19 -0

- egy_training_log.txt +2 -0

- eval_results.json +13 -0

- train_results.json +9 -0

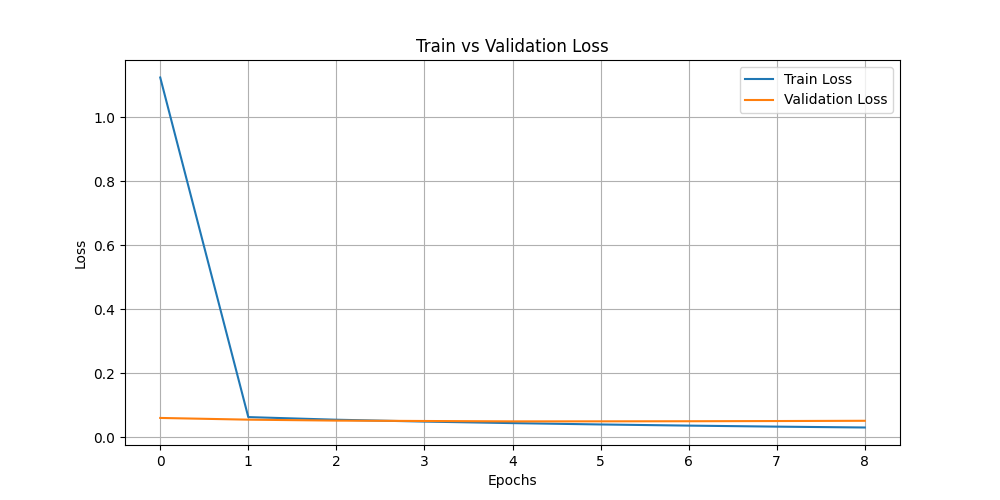

- train_vs_val_loss.png +0 -0

- trainer_state.json +241 -0

README.md

CHANGED

@@ -17,11 +17,11 @@ should probably proofread and complete it, then remove this comment. -->

 This model is a fine-tuned version of [aubmindlab/aragpt2-base](https://huggingface.co/aubmindlab/aragpt2-base) on an unknown dataset.
 It achieves the following results on the evaluation set:
-- Loss: 0.
-- Bleu: 0.
-- Rouge1: 0.
-- Rouge2: 0.
-- Rougel: 0.
+- Loss: 0.0499
+- Bleu: 0.0393
+- Rouge1: 0.3590
+- Rouge2: 0.1242
+- Rougel: 0.3572

 ## Model description
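The new README values match the `eval_results.json` metrics rounded to four decimal places, with the `eval_` prefix dropped and the key title-cased (which is why `rougeL` shows up as `Rougel`). A minimal sketch of that rendering, assuming the metric values below (copied from this commit) and a hypothetical helper `card_metric_lines`; it is not the code that actually generated this model card.

```python
# Evaluation metrics copied from eval_results.json in this commit.
EVAL_METRICS = {
    "eval_loss": 0.04986047372221947,
    "eval_bleu": 0.03933306151343601,
    "eval_rouge1": 0.35895878709405493,
    "eval_rouge2": 0.124247769713655,
    "eval_rougeL": 0.3572225001900925,
}


def card_metric_lines(metrics: dict[str, float]) -> list[str]:
    """Hypothetical helper: render metrics as README bullet lines.

    Drops the "eval_" prefix, title-cases the name ("rougeL" -> "Rougel")
    and rounds each value to four decimal places.
    """
    lines = []
    for key, value in metrics.items():
        name = key.removeprefix("eval_").title()
        lines.append(f"- {name}: {value:.4f}")
    return lines


if __name__ == "__main__":
    print("\n".join(card_metric_lines(EVAL_METRICS)))
```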

all_results.json

ADDED

@@ -0,0 +1,19 @@

{
    "epoch": 10.0,
    "eval_bleu": 0.03933306151343601,
    "eval_loss": 0.04986047372221947,
    "eval_rouge1": 0.35895878709405493,
    "eval_rouge2": 0.124247769713655,
    "eval_rougeL": 0.3572225001900925,
    "eval_runtime": 36.9239,
    "eval_samples": 1672,
    "eval_samples_per_second": 45.282,
    "eval_steps_per_second": 5.66,
    "perplexity": 1.0511244266653859,
    "total_flos": 3.49347446784e+16,
    "train_loss": 0.15054923290270938,
    "train_runtime": 5668.5001,
    "train_samples": 6685,
    "train_samples_per_second": 23.586,
    "train_steps_per_second": 2.95
}
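The `perplexity` entry is consistent with the usual definition for causal language models: the exponential of the mean token-level cross-entropy, here `eval_loss`. A quick check using the two values from this file:

```python
import math

# Values copied from all_results.json in this commit.
eval_loss = 0.04986047372221947
reported_perplexity = 1.0511244266653859

# Perplexity = exp(mean cross-entropy loss, measured in nats).
perplexity = math.exp(eval_loss)

print(f"exp(eval_loss) = {perplexity:.10f}")
print("matches reported value:", math.isclose(perplexity, reported_perplexity, rel_tol=1e-9))
```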

egy_training_log.txt

CHANGED

@@ -296,3 +296,5 @@ INFO:root:Epoch 9.0: Train Loss = 0.0334, Eval Loss = 0.050896577537059784

 INFO:absl:Using default tokenizer.
 INFO:root:Epoch 10.0: Train Loss = 0.0307, Eval Loss = 0.05167483910918236
 INFO:absl:Using default tokenizer.
+INFO:__main__:*** Evaluate ***
+INFO:absl:Using default tokenizer.
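The `INFO:root` lines in this log follow a fixed `Epoch N: Train Loss = X, Eval Loss = Y` pattern, so per-epoch losses can be pulled out with a regular expression. A minimal sketch, assuming the line format shown in this hunk and plain decimal numbers (no scientific notation); `parse_epoch_losses` is a hypothetical helper.

```python
import re

# Matches lines such as
# "INFO:root:Epoch 10.0: Train Loss = 0.0307, Eval Loss = 0.05167483910918236"
# Only plain decimals are handled; values like 1e-05 would not match.
EPOCH_LINE = re.compile(
    r"Epoch (?P<epoch>\d+(?:\.\d+)?): "
    r"Train Loss = (?P<train>\d+(?:\.\d+)?), "
    r"Eval Loss = (?P<eval>\d+(?:\.\d+)?)"
)

# The new-side lines of this hunk.
LOG_EXCERPT = """\
INFO:absl:Using default tokenizer.
INFO:root:Epoch 10.0: Train Loss = 0.0307, Eval Loss = 0.05167483910918236
INFO:absl:Using default tokenizer.
INFO:__main__:*** Evaluate ***
INFO:absl:Using default tokenizer.
"""


def parse_epoch_losses(text: str) -> list[tuple[float, float, float]]:
    """Return (epoch, train_loss, eval_loss) for every matching log line."""
    return [
        (float(m["epoch"]), float(m["train"]), float(m["eval"]))
        for m in EPOCH_LINE.finditer(text)
    ]


if __name__ == "__main__":
    for epoch, train_loss, eval_loss in parse_epoch_losses(LOG_EXCERPT):
        print(f"epoch {epoch:g}: train {train_loss:.4f}, eval {eval_loss:.4f}")
```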

eval_results.json

ADDED

@@ -0,0 +1,13 @@

{
    "epoch": 10.0,
    "eval_bleu": 0.03933306151343601,
    "eval_loss": 0.04986047372221947,
    "eval_rouge1": 0.35895878709405493,
    "eval_rouge2": 0.124247769713655,
    "eval_rougeL": 0.3572225001900925,
    "eval_runtime": 36.9239,
    "eval_samples": 1672,
    "eval_samples_per_second": 45.282,
    "eval_steps_per_second": 5.66,
    "perplexity": 1.0511244266653859
}
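The throughput fields in this file are consistent with each other: `eval_samples / eval_runtime` gives `eval_samples_per_second`, and the step rate matches 209 evaluation batches, which is what 1672 samples give at a batch size of 8. The evaluation batch size is an assumption in this sketch; only the training batch size (8) is recorded, in `trainer_state.json`.

```python
import math

# Values copied from eval_results.json in this commit.
eval_samples = 1672
eval_runtime = 36.9239  # seconds
# ASSUMPTION: evaluation batch size equals the recorded training batch size.
eval_batch_size = 8

# Rates rounded to three decimals, the precision used in the JSON files.
samples_per_second = round(eval_samples / eval_runtime, 3)
eval_batches = math.ceil(eval_samples / eval_batch_size)
steps_per_second = round(eval_batches / eval_runtime, 3)

print(samples_per_second, eval_batches, steps_per_second)
```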

train_results.json

ADDED

@@ -0,0 +1,9 @@

{
    "epoch": 10.0,
    "total_flos": 3.49347446784e+16,
    "train_loss": 0.15054923290270938,
    "train_runtime": 5668.5001,
    "train_samples": 6685,
    "train_samples_per_second": 23.586,
    "train_steps_per_second": 2.95
}
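The training rates in this file do not correspond to the 10 epochs that actually ran. They are consistent with the full planned schedule recorded in `trainer_state.json` (`num_train_epochs` 20, `max_steps` 16720), which early stopping cut short, so they come out at roughly twice what the completed epochs give over the same `train_runtime`. A quick check of that arithmetic, using only values from this commit:

```python
# Values copied from train_results.json and trainer_state.json in this commit.
train_samples = 6685
train_runtime = 5668.5001  # seconds, wall-clock for the whole run
epochs_completed = 10.0
num_train_epochs = 20      # planned epochs
max_steps = 16720          # planned optimizer steps

# Rates computed from the planned schedule, rounded to three decimals.
planned_samples_per_second = round(train_samples * num_train_epochs / train_runtime, 3)
planned_steps_per_second = round(max_steps / train_runtime, 3)

# Rate for the epochs that actually ran.
completed_samples_per_second = round(train_samples * epochs_completed / train_runtime, 3)

print(planned_samples_per_second, planned_steps_per_second)  # same as the JSON fields
print(completed_samples_per_second)
```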

train_vs_val_loss.png

ADDED


trainer_state.json

ADDED

@@ -0,0 +1,241 @@

{
  "best_metric": 0.04986047372221947,
  "best_model_checkpoint": "/home/iais_marenpielka/Bouthaina/res_nw_gulf_aragpt2-base/checkpoint-4180",
  "epoch": 10.0,
  "eval_steps": 500,
  "global_step": 8360,
  "is_hyper_param_search": false,
  "is_local_process_zero": true,
  "is_world_process_zero": true,
  "log_history": [
    {
      "epoch": 1.0,
      "grad_norm": 0.2068207710981369,
      "learning_rate": 4.896424167694204e-05,
      "loss": 1.1245,
      "step": 836
    },
    {
      "epoch": 1.0,
      "eval_bleu": 0.00522127887673706,
      "eval_loss": 0.060549940913915634,
      "eval_rouge1": 0.16261381712821865,
      "eval_rouge2": 0.016191591492833397,
      "eval_rougeL": 0.16017425267387253,
      "eval_runtime": 159.9696,
      "eval_samples_per_second": 10.452,
      "eval_steps_per_second": 1.306,
      "step": 836
    },
    {
      "epoch": 2.0,
      "grad_norm": 0.19557742774486542,
      "learning_rate": 4.638717632552405e-05,
      "loss": 0.0632,
      "step": 1672
    },
    {
      "epoch": 2.0,
      "eval_bleu": 0.011943726030788412,
      "eval_loss": 0.05519399791955948,
      "eval_rouge1": 0.2541122949630313,
      "eval_rouge2": 0.051699799633347324,
      "eval_rougeL": 0.2510765914158727,
      "eval_runtime": 221.3727,
      "eval_samples_per_second": 7.553,
      "eval_steps_per_second": 0.944,
      "step": 1672
    },
    {
      "epoch": 3.0,
      "grad_norm": 0.14342406392097473,
      "learning_rate": 4.3810110974106046e-05,
      "loss": 0.055,
      "step": 2508
    },
    {
      "epoch": 3.0,
      "eval_bleu": 0.02252740132414782,
      "eval_loss": 0.05222811922430992,
      "eval_rouge1": 0.30471930149085674,
      "eval_rouge2": 0.08185853906387802,
      "eval_rougeL": 0.30154781860876523,
      "eval_runtime": 36.8724,
      "eval_samples_per_second": 45.346,
      "eval_steps_per_second": 5.668,
      "step": 2508
    },
    {
      "epoch": 4.0,
      "grad_norm": 0.16051243245601654,
      "learning_rate": 4.1233045622688044e-05,
      "loss": 0.0492,
      "step": 3344
    },
    {
      "epoch": 4.0,
      "eval_bleu": 0.032582447132208606,
      "eval_loss": 0.051017943769693375,
      "eval_rouge1": 0.3346924293421615,
      "eval_rouge2": 0.10192760972807041,
      "eval_rougeL": 0.33180504017979595,
      "eval_runtime": 159.805,
      "eval_samples_per_second": 10.463,
      "eval_steps_per_second": 1.308,
      "step": 3344
    },
    {
      "epoch": 5.0,
      "grad_norm": 0.15151090919971466,
      "learning_rate": 3.8655980271270036e-05,
      "loss": 0.0444,
      "step": 4180
    },
    {
      "epoch": 5.0,
      "eval_bleu": 0.03933306151343601,
      "eval_loss": 0.04986047372221947,
      "eval_rouge1": 0.35895878709405493,
      "eval_rouge2": 0.124247769713655,
      "eval_rougeL": 0.3572225001900925,
      "eval_runtime": 159.8295,
      "eval_samples_per_second": 10.461,
      "eval_steps_per_second": 1.308,
      "step": 4180
    },
    {
      "epoch": 6.0,
      "grad_norm": 0.11806467920541763,
      "learning_rate": 3.6078914919852034e-05,
      "loss": 0.0402,
      "step": 5016
    },
    {
      "epoch": 6.0,
      "eval_bleu": 0.04622708241683158,
      "eval_loss": 0.04995572566986084,
      "eval_rouge1": 0.3810393862934106,
      "eval_rouge2": 0.13792791296322293,
      "eval_rougeL": 0.3788340225664032,
      "eval_runtime": 159.7942,
      "eval_samples_per_second": 10.463,
      "eval_steps_per_second": 1.308,
      "step": 5016
    },
    {
      "epoch": 7.0,
      "grad_norm": 0.15260820090770721,
      "learning_rate": 3.350184956843403e-05,
      "loss": 0.0366,
      "step": 5852
    },
    {
      "epoch": 7.0,
      "eval_bleu": 0.04988840099834184,
      "eval_loss": 0.05027288198471069,
      "eval_rouge1": 0.3961215133733164,
      "eval_rouge2": 0.15246703105477005,
      "eval_rougeL": 0.3937993288436519,
      "eval_runtime": 36.8733,
      "eval_samples_per_second": 45.344,
      "eval_steps_per_second": 5.668,
      "step": 5852
    },
    {
      "epoch": 8.0,
      "grad_norm": 0.14294394850730896,
      "learning_rate": 3.092478421701603e-05,
      "loss": 0.0334,
      "step": 6688
    },
    {
      "epoch": 8.0,
      "eval_bleu": 0.05614309403616892,
      "eval_loss": 0.050896577537059784,
      "eval_rouge1": 0.407117249999222,
      "eval_rouge2": 0.15984144200856937,
      "eval_rougeL": 0.40517229812025873,
      "eval_runtime": 37.0371,
      "eval_samples_per_second": 45.144,
      "eval_steps_per_second": 5.643,
      "step": 6688
    },
    {
      "epoch": 9.0,
      "grad_norm": 0.20682789385318756,
      "learning_rate": 2.8347718865598028e-05,
      "loss": 0.0307,
      "step": 7524
    },
    {
      "epoch": 9.0,
      "eval_bleu": 0.0606895848585085,
      "eval_loss": 0.05167483910918236,
      "eval_rouge1": 0.41011122575047865,
      "eval_rouge2": 0.1731982844904956,
      "eval_rougeL": 0.40855415076231816,
      "eval_runtime": 159.7295,
      "eval_samples_per_second": 10.468,
      "eval_steps_per_second": 1.308,
      "step": 7524
    },
    {
      "epoch": 10.0,
      "grad_norm": 0.13990797102451324,
      "learning_rate": 2.5770653514180026e-05,
      "loss": 0.0283,
      "step": 8360
    },
    {
      "epoch": 10.0,
      "eval_bleu": 0.06531719425427113,
      "eval_loss": 0.05382031202316284,
      "eval_rouge1": 0.41672477670989894,
      "eval_rouge2": 0.17546430438593302,
      "eval_rougeL": 0.4150315774802549,
      "eval_runtime": 159.9272,
      "eval_samples_per_second": 10.455,
      "eval_steps_per_second": 1.307,
      "step": 8360
    },
    {
      "epoch": 10.0,
      "step": 8360,
      "total_flos": 3.49347446784e+16,
      "train_loss": 0.15054923290270938,
      "train_runtime": 5668.5001,
      "train_samples_per_second": 23.586,
      "train_steps_per_second": 2.95
    }
  ],
  "logging_steps": 500,
  "max_steps": 16720,
  "num_input_tokens_seen": 0,
  "num_train_epochs": 20,
  "save_steps": 500,
  "stateful_callbacks": {
    "EarlyStoppingCallback": {
      "args": {
        "early_stopping_patience": 5,
        "early_stopping_threshold": 0.0
      },
      "attributes": {
        "early_stopping_patience_counter": 0
      }
    },
    "TrainerControl": {
      "args": {
        "should_epoch_stop": false,
        "should_evaluate": false,
        "should_log": false,
        "should_save": true,
        "should_training_stop": true
      },
      "attributes": {}
    }
  },
  "total_flos": 3.49347446784e+16,
  "train_batch_size": 8,
  "trial_name": null,
  "trial_params": null
}
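The early-stopping settings account for a run planned for 20 epochs (`max_steps` 16720) ending at epoch 10: `eval_loss` was lowest at epoch 5 (step 4180, the recorded `best_model_checkpoint`), and the next five evaluations did not improve on it, exhausting `early_stopping_patience` 5. Below is a simplified re-implementation of patience-based early stopping applied to the logged losses; it is an illustration of the rule, not the `transformers` `EarlyStoppingCallback` source, and `simulate_early_stopping` is a hypothetical helper.

```python
# eval_loss after each epoch (1..10), copied from log_history in trainer_state.json.
EVAL_LOSSES = [
    0.060549940913915634, 0.05519399791955948, 0.05222811922430992,
    0.051017943769693375, 0.04986047372221947, 0.04995572566986084,
    0.05027288198471069, 0.050896577537059784, 0.05167483910918236,
    0.05382031202316284,
]
STEPS_PER_EPOCH = 836  # evaluations in this run happened every 836 steps


def simulate_early_stopping(losses, patience, threshold=0.0):
    """Simplified patience-based early stopping for a lower-is-better metric.

    The counter resets whenever the metric improves on the best value by more
    than `threshold`, and otherwise increments; training stops once the
    counter reaches `patience`. Returns (best_epoch, stop_epoch), 1-based.
    """
    best_loss = None
    best_epoch = None
    counter = 0
    for epoch, loss in enumerate(losses, start=1):
        if best_loss is None or best_loss - loss > threshold:
            best_loss, best_epoch, counter = loss, epoch, 0
        else:
            counter += 1
        if counter >= patience:
            return best_epoch, epoch
    return best_epoch, len(losses)


if __name__ == "__main__":
    best_epoch, stop_epoch = simulate_early_stopping(EVAL_LOSSES, patience=5)
    print(f"best epoch {best_epoch} (step {best_epoch * STEPS_PER_EPOCH}), "
          f"stopped after epoch {stop_epoch}")
```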

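The logged `learning_rate` values drop by the same amount every epoch and are consistent with a linear warmup-then-decay schedule that reaches zero at `max_steps` 16720, i.e. 20 epochs of `ceil(6685 / 8) = 836` steps. The peak rate of 5e-5 and the 500 warmup steps used below are assumptions inferred by fitting the logged values; neither is recorded in this commit. A sketch that reproduces the logged rates under those assumptions:

```python
import math

# Recorded in this commit.
TRAIN_SAMPLES = 6685
TRAIN_BATCH_SIZE = 8
NUM_TRAIN_EPOCHS = 20
# ASSUMPTIONS: not recorded here; inferred by fitting the logged learning rates.
PEAK_LR = 5e-5
WARMUP_STEPS = 500

# One optimizer step per batch (assumes one device, no gradient accumulation).
STEPS_PER_EPOCH = math.ceil(TRAIN_SAMPLES / TRAIN_BATCH_SIZE)
MAX_STEPS = STEPS_PER_EPOCH * NUM_TRAIN_EPOCHS


def linear_warmup_decay(step: int) -> float:
    """Linear warmup to PEAK_LR, then linear decay to 0 at MAX_STEPS."""
    if step < WARMUP_STEPS:
        return PEAK_LR * step / max(1, WARMUP_STEPS)
    return PEAK_LR * max(0.0, (MAX_STEPS - step) / max(1, MAX_STEPS - WARMUP_STEPS))


# learning_rate logged at the end of each epoch in trainer_state.json.
LOGGED_LR = {
    836: 4.896424167694204e-05,
    1672: 4.638717632552405e-05,
    2508: 4.3810110974106046e-05,
    3344: 4.1233045622688044e-05,
    4180: 3.8655980271270036e-05,
    5016: 3.6078914919852034e-05,
    5852: 3.350184956843403e-05,
    6688: 3.092478421701603e-05,
    7524: 2.8347718865598028e-05,
    8360: 2.5770653514180026e-05,
}

if __name__ == "__main__":
    print("steps/epoch:", STEPS_PER_EPOCH, "max_steps:", MAX_STEPS)
    for step, logged in LOGGED_LR.items():
        print(step, f"model {linear_warmup_decay(step):.6e}", f"logged {logged:.6e}")
```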