Commit 6504675 · alish · Parent(s): c7c0881

added media

Files changed:
- README.md +25 -14
- assets/cover.jpg +0 -0
- assets/logo.svg +17 -0
- assets/results.png +0 -0

README.md CHANGED

@@ -12,7 +12,14 @@ base_model:

 # NAVI verifiers (Nace Automated Verification Intelligence)

-

 - **Developed by:** Nace.AI
 - **Model type:** Policy Alignment Verifier

@@ -108,7 +115,7 @@ NAVI utilizes latest advances in Knowledge Augmentation and Memory in order to i

 #### Training Hyperparameters

-- **Training regime:** We

 ## Evaluation

@@ -116,7 +123,7 @@ NAVI utilizes latest advances in Knowledge Augmentation and Memory in order to i

 #### Testing Data

-We

 #### Factors

@@ -128,17 +135,21 @@ F1 score was used to measure performance, prioritizing detection of noncomplianc

 ### Results

-NAVI-small-preview achieved an F1 score of 86.8
-
-| Model
-
-|
-| NAVI
-|
-|
-|
-|
-|

 ## Model Card Contact

|

|

| 12 |

|

| 13 |

# NAVI verifiers (Nace Automated Verification Intelligence)

|

| 14 |

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

NAVI (Nace Automated Verification Intelligence) is a policy alignment verification solution designed to analyze text for compliance with documents and policies, identifying any violations. Optimized for enterprise applications, it supports automated compliance checks for text generation. To encourage open-source adoption, we offer NAVI-small-preview, an open-weights version of the deployed model, focused on verifying assistant outputs against policy documents. The full solution is accessible via the [NAVI platform and API](https://naviml.com/).

|

| 19 |

+

|

| 20 |

+

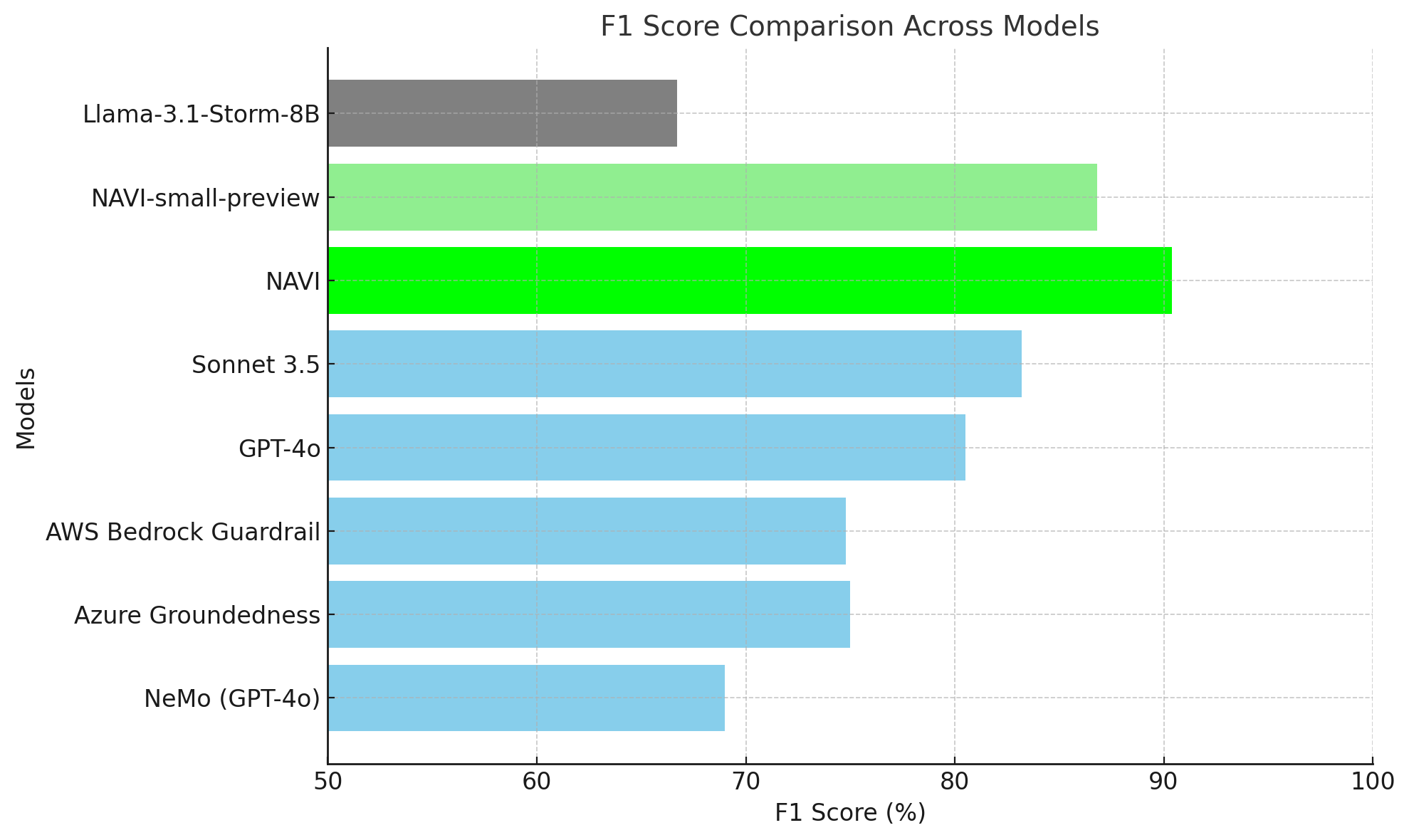

The chart below illustrates NAVI's strong performance, with the full model achieving an F1 score of 90.4%, outperforming all competitors. NAVI-small-preview also demonstrates impressive results, providing an open-source option with significant improvements over baseline models while maintaining reliable policy alignment verification.

|

| 21 |

+

|

| 22 |

+

|

| 23 |

|

| 24 |

- **Developed by:** Nace.AI

|

| 25 |

- **Model type:** Policy Alignment Verifier

|

|

@@ -108,7 +115,7 @@ NAVI utilizes latest advances in Knowledge Augmentation and Memory in order to i

 #### Training Hyperparameters

+- **Training regime:** We perform a thorough hyperparameter search during fine-tuning. The resulting model is a LoRA adapter applied to all linear modules in every Transformer layer, with rank 16, alpha 32, learning rate 5e-5, and an effective batch size of 32. It was trained on 8 A100s for 6 epochs using PyTorch Distributed Data Parallel.
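The training regime above implies two derived quantities: the LoRA scaling factor (alpha divided by rank in the standard LoRA formulation) and the per-GPU batch size behind an effective batch size of 32 on 8 GPUs. The following is a minimal sketch, not the authors' training code. The class name `LoraTrainingConfig`, its field names, and the `grad_accum_steps` parameter are hypothetical; the README states only the effective batch size, so any split between per-device batch size and gradient accumulation is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoraTrainingConfig:
    """Hypothetical container for the hyperparameter values quoted in the README."""

    rank: int = 16
    alpha: int = 32
    learning_rate: float = 5e-5
    effective_batch_size: int = 32
    num_gpus: int = 8
    epochs: int = 6

    @property
    def scaling(self) -> float:
        # Standard LoRA scales the low-rank update by alpha / rank.
        return self.alpha / self.rank

    def per_device_batch_size(self, grad_accum_steps: int = 1) -> int:
        # Under data parallelism:
        #   effective = per_device * num_gpus * grad_accum_steps
        # grad_accum_steps is an assumption; the README does not state it.
        denom = self.num_gpus * grad_accum_steps
        if self.effective_batch_size % denom != 0:
            raise ValueError(
                "effective batch size is not divisible by num_gpus * grad_accum_steps"
            )
        return self.effective_batch_size // denom


config = LoraTrainingConfig()
print(config.scaling)                  # 2.0
print(config.per_device_batch_size())  # 4
```

With no gradient accumulation, the quoted values would correspond to 4 examples per GPU per step; with accumulation, the per-device batch shrinks accordingly.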

 ## Evaluation

@@ -116,7 +123,7 @@ NAVI utilizes latest advances in Knowledge Augmentation and Memory in order to i

 #### Testing Data

+We curated the Policy Alignment Verification (PAV) dataset to evaluate diverse policy verification use cases, releasing a public subset of 125 examples spanning six industry-specific scenarios: AT&T, Airbnb, Cadence Bank, Delta Airlines, Verisk, and Walgreens. This open-sourced subset ensures transparency and facilitates benchmarking of model performance. We evaluate our models and alternative solutions on this test set.

 #### Factors

@@ -128,17 +135,21 @@ F1 score was used to measure performance, prioritizing detection of noncomplianc

 ### Results

+The table below shows the performance of models evaluated on the public subset of the PAV dataset. NAVI-small-preview achieved an F1 score of 86.8%, outperforming all tested alternatives except the full-scale NAVI. We evaluate against general-purpose solutions such as Claude and OpenAI models, as well as guardrails that focus on groundedness, to demonstrate a clear distinction between policy verification and the more common groundedness verification.
+
+| Model                 | F1 Score (%) | Precision (%) | Recall (%) | Accuracy (%) |
+|-----------------------|--------------|---------------|------------|--------------|
+| Llama-3.1-Storm-8B    | 66.7         | 86.4          | 54.3       | 69.6         |
+| NAVI-small-preview    | 86.8         | 80.5          | 94.3       | 84.0         |
+| NAVI                  | **90.4**     | **93.8**      | **87.1**   | **89.6**     |
+| Sonnet 3.5            | 83.2         | 85.1          | 81.4       | 81.6         |
+| GPT-4o                | 80.5         | 73.8          | 88.6       | 76.0         |
+| AWS Bedrock Guardrail | 74.8         | 87.1          | 65.6       | 67.2         |
+| Azure Groundedness    | 75.0         | 62.3          | 94.3       | 64.8         |
+| NeMo (GPT-4o)         | 69.0         | 67.2          | 70.9       | 72.0         |
+
+
+\*NAVI-small-preview is not deployed anywhere; the latency figure was obtained by running the PAV evaluation with vLLM on a single 80GB A100 GPU.
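As a sanity check on the table above, F1 can be recomputed from the reported precision and recall as their harmonic mean. The sketch below is illustrative only: the helper name `f1_score` and the `rows` list are introduced here, and the numbers are copied from the table. Small differences from the reported F1 are expected because the published precision and recall are themselves rounded to one decimal place.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (model, reported F1, precision, recall), all in percent, copied from the table.
rows = [
    ("Llama-3.1-Storm-8B", 66.7, 86.4, 54.3),
    ("NAVI-small-preview", 86.8, 80.5, 94.3),
    ("NAVI", 90.4, 93.8, 87.1),
    ("Sonnet 3.5", 83.2, 85.1, 81.4),
    ("GPT-4o", 80.5, 73.8, 88.6),
    ("AWS Bedrock Guardrail", 74.8, 87.1, 65.6),
    ("Azure Groundedness", 75.0, 62.3, 94.3),
    ("NeMo (GPT-4o)", 69.0, 67.2, 70.9),
]

for name, reported, precision, recall in rows:
    recomputed = f1_score(precision, recall)
    print(f"{name:<22} reported={reported:5.1f} recomputed={recomputed:6.2f}")
```

The rows also show why F1 is a useful summary here: Azure Groundedness matches NAVI-small-preview on recall (94.3) but its lower precision (62.3) pulls its F1 down to 75.0.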

 ## Model Card Contact

assets/cover.jpg ADDED

assets/logo.svg ADDED

assets/results.png ADDED