add viewer + update plots

Browse files- analyze_results.ipynb +0 -0

- comparison_rank.png +3 -0

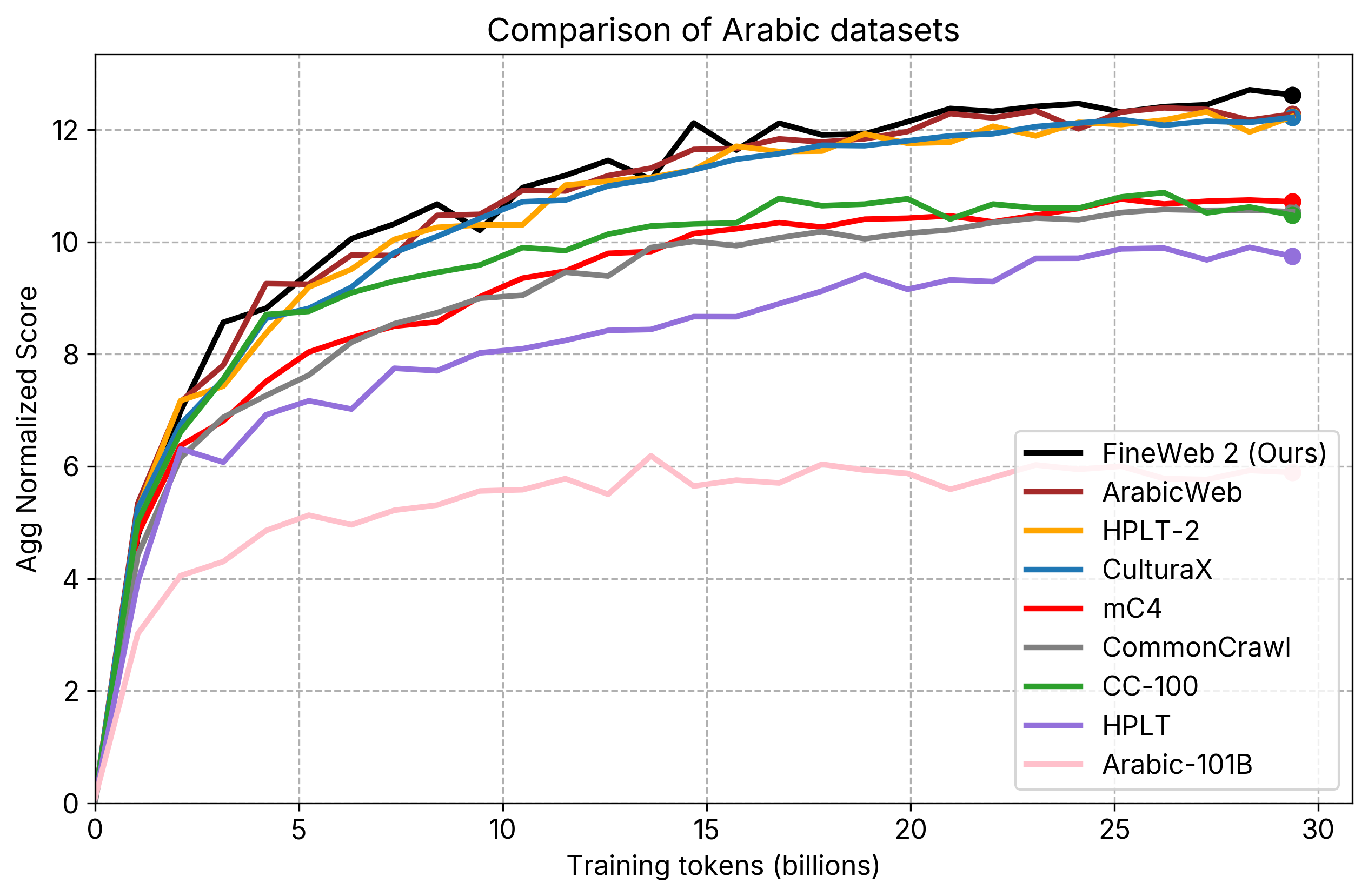

- individual_plots/comparison_ar.png +2 -2

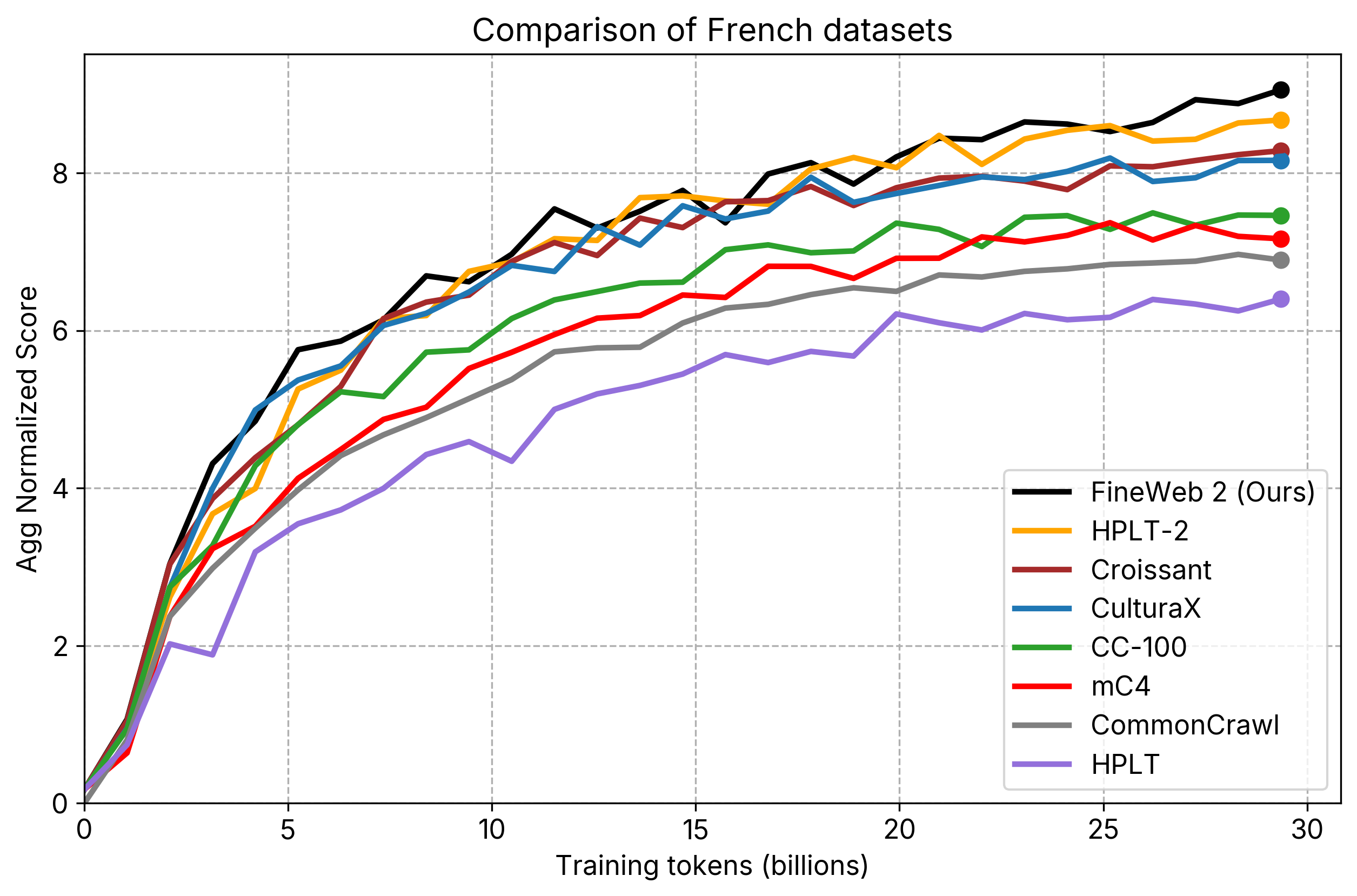

- individual_plots/comparison_fr.png +2 -2

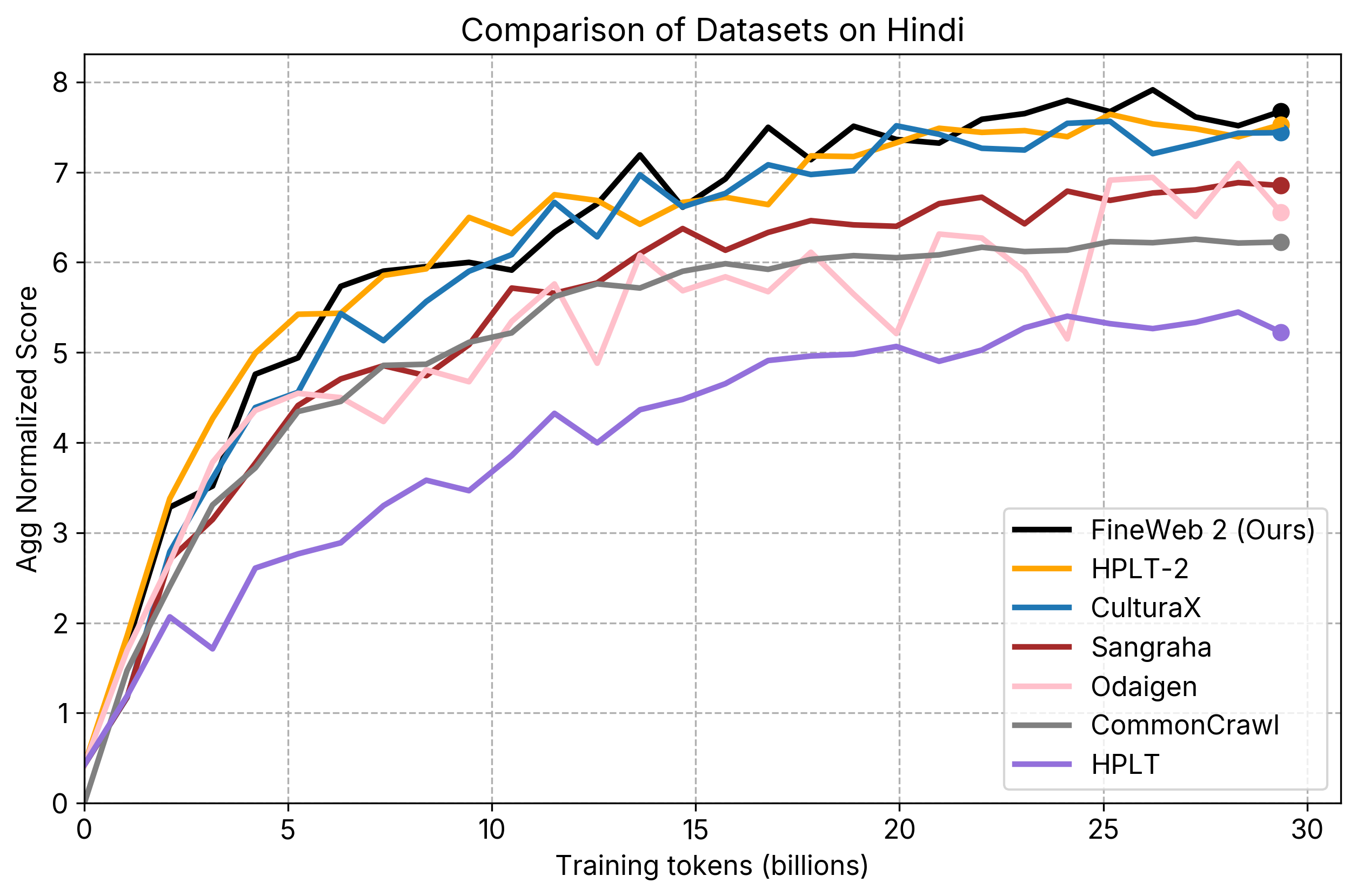

- individual_plots/comparison_hi.png +2 -2

- individual_plots/comparison_ru.png +2 -2

- individual_plots/comparison_sw.png +2 -2

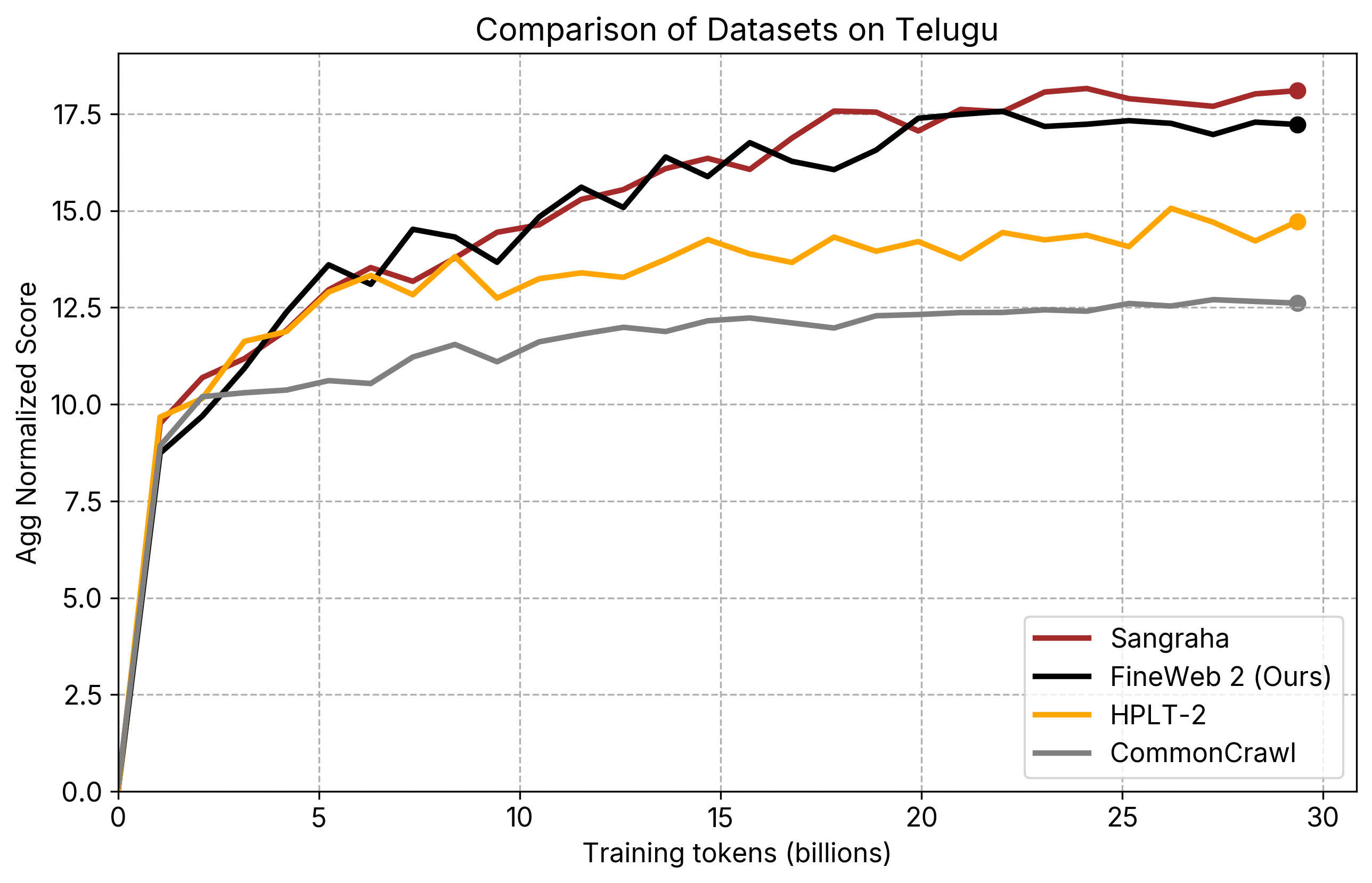

- individual_plots/comparison_te.png +2 -2

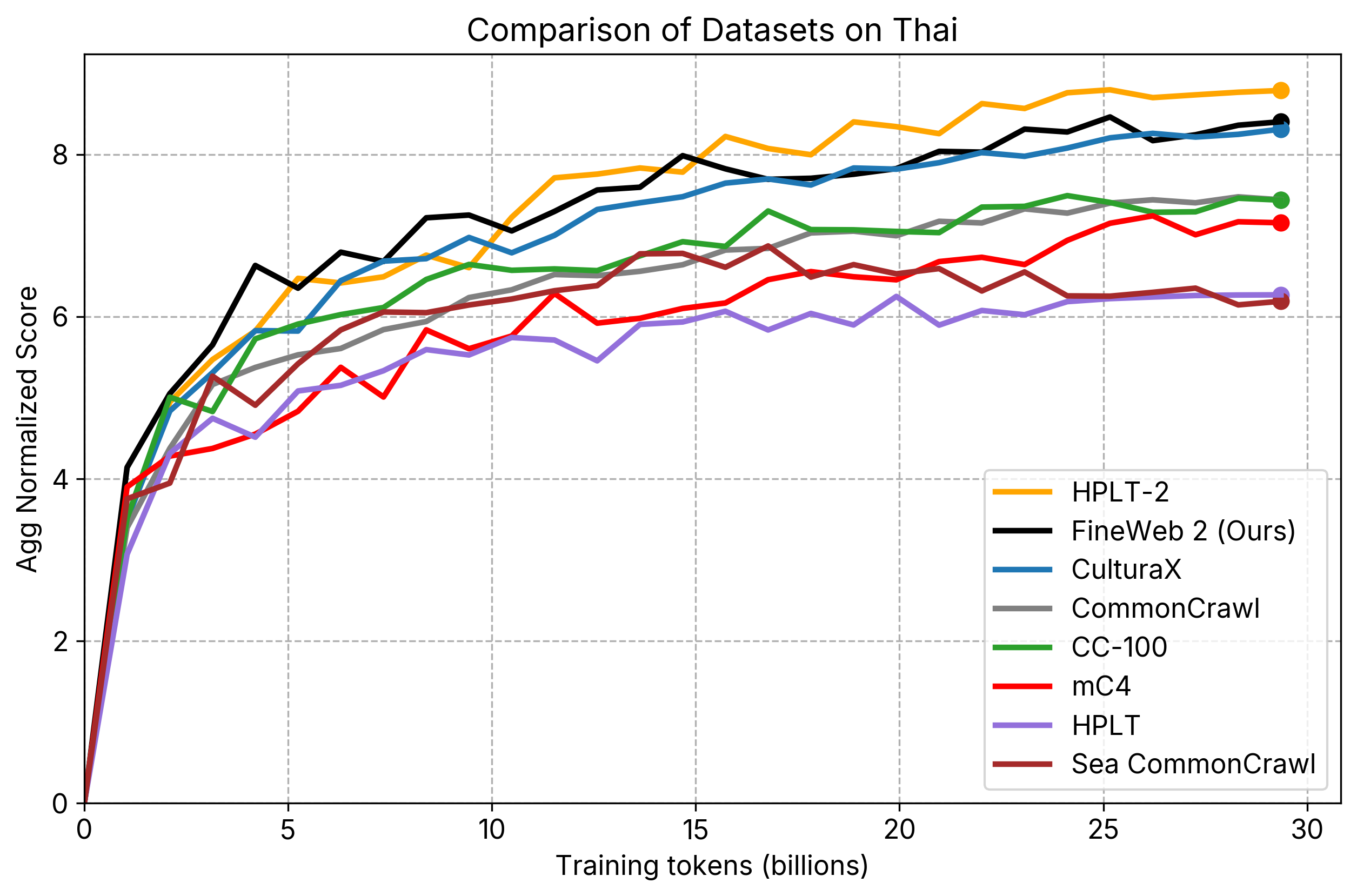

- individual_plots/comparison_th.png +2 -2

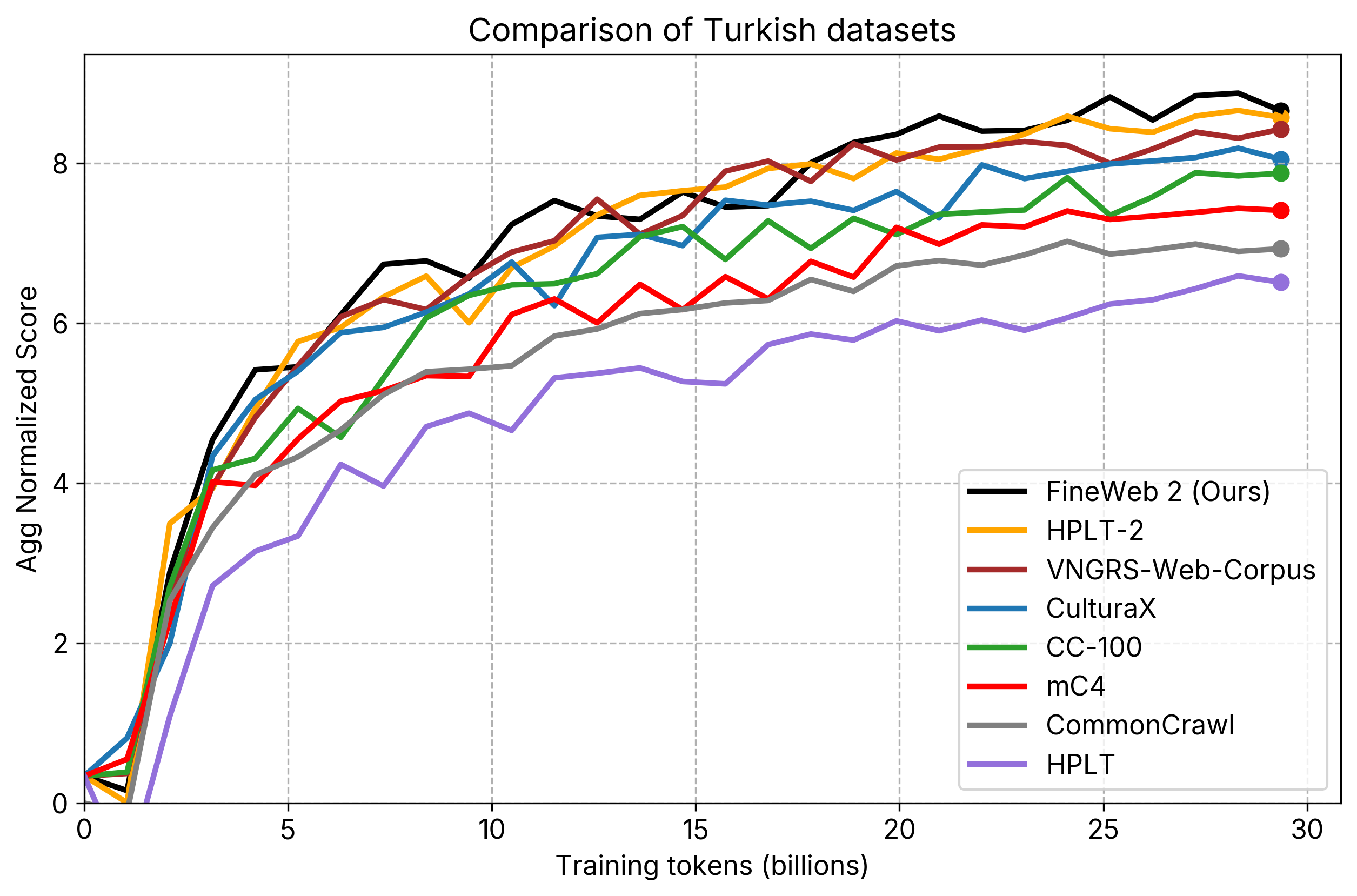

- individual_plots/comparison_tr.png +2 -2

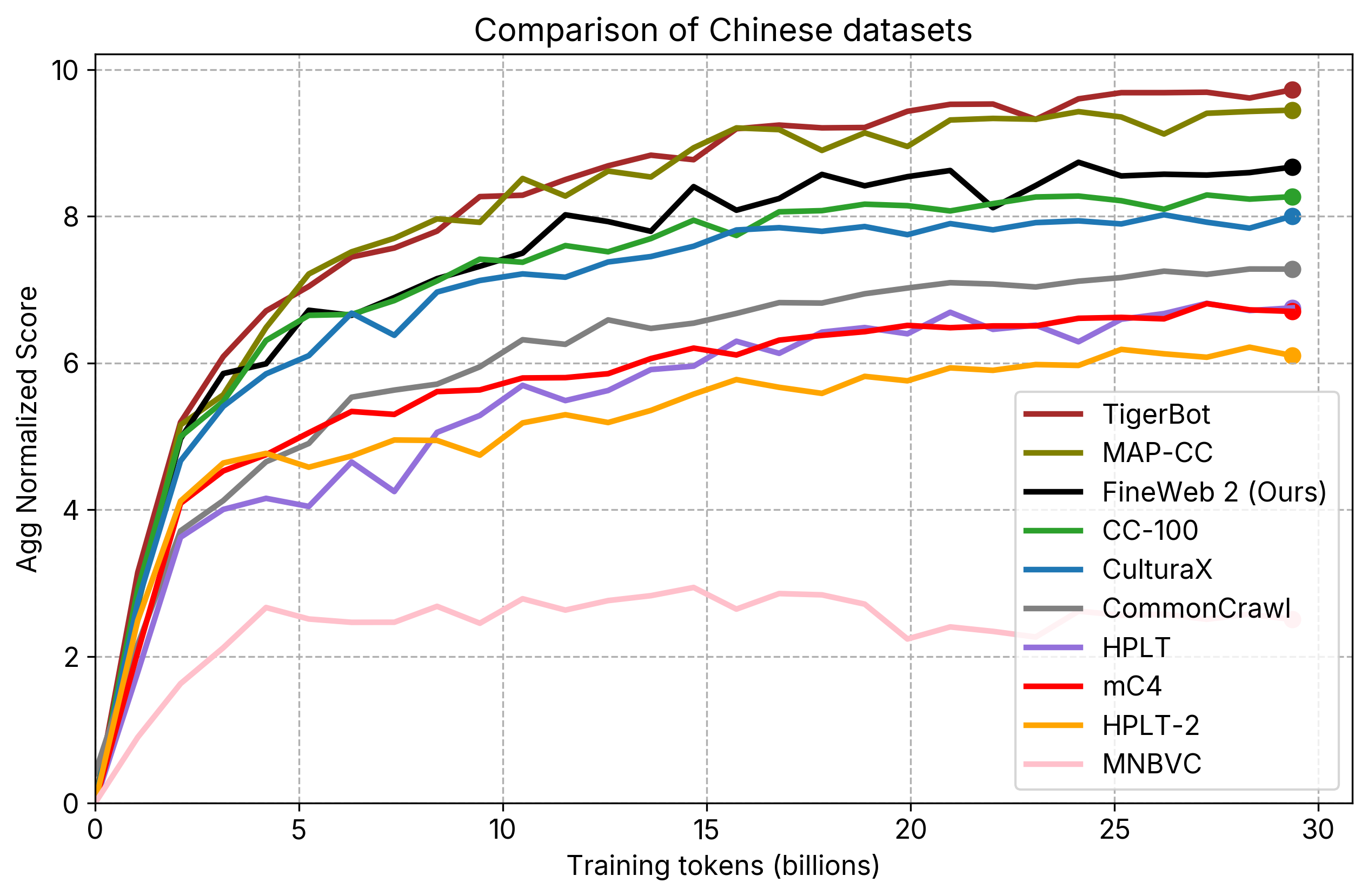

- individual_plots/comparison_zh.png +2 -2

- viewer/__init__.py +0 -0

- viewer/__pycache__/__init__.cpython-310.pyc +0 -0

- viewer/__pycache__/__init__.cpython-312.pyc +0 -0

- viewer/__pycache__/__init__.cpython-313.pyc +0 -0

- viewer/__pycache__/agg_score_metrics.cpython-310.pyc +0 -0

- viewer/__pycache__/agg_score_metrics.cpython-312.pyc +0 -0

- viewer/__pycache__/literals.cpython-310.pyc +0 -0

- viewer/__pycache__/literals.cpython-312.pyc +0 -0

- viewer/__pycache__/literals.cpython-313.pyc +0 -0

- viewer/__pycache__/results.cpython-310.pyc +0 -0

- viewer/__pycache__/results.cpython-312.pyc +0 -0

- viewer/__pycache__/results.cpython-313.pyc +0 -0

- viewer/__pycache__/stats.cpython-310.pyc +0 -0

- viewer/__pycache__/stats.cpython-312.pyc +0 -0

- viewer/__pycache__/stats.cpython-313.pyc +0 -0

- viewer/__pycache__/task_type_mapping.cpython-310.pyc +0 -0

- viewer/__pycache__/task_type_mapping.cpython-312.pyc +0 -0

- viewer/__pycache__/utils.cpython-310.pyc +0 -0

- viewer/__pycache__/utils.cpython-312.pyc +0 -0

- viewer/agg_score_metrics.py +297 -0

- viewer/app.py +277 -0

- viewer/literals.py +9 -0

- viewer/plot.py +131 -0

- viewer/results.py +421 -0

- viewer/stats.py +189 -0

- viewer/task_type_mapping.py +41 -0

- viewer/utils.py +186 -0

analyze_results.ipynb

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

comparison_rank.png

ADDED

|

Git LFS Details

|

individual_plots/comparison_ar.png

CHANGED

|

Git LFS Details

|

|

Git LFS Details

|

individual_plots/comparison_fr.png

CHANGED

|

Git LFS Details

|

|

Git LFS Details

|

individual_plots/comparison_hi.png

CHANGED

|

Git LFS Details

|

|

Git LFS Details

|

individual_plots/comparison_ru.png

CHANGED

|

Git LFS Details

|

|

Git LFS Details

|

individual_plots/comparison_sw.png

CHANGED

|

Git LFS Details

|

|

Git LFS Details

|

individual_plots/comparison_te.png

CHANGED

|

Git LFS Details

|

|

Git LFS Details

|

individual_plots/comparison_th.png

CHANGED

|

Git LFS Details

|

|

Git LFS Details

|

individual_plots/comparison_tr.png

CHANGED

|

Git LFS Details

|

|

Git LFS Details

|

individual_plots/comparison_zh.png

CHANGED

|

Git LFS Details

|

|

Git LFS Details

|

viewer/__init__.py

ADDED

|

File without changes

|

viewer/__pycache__/__init__.cpython-310.pyc

ADDED

|

Binary file (171 Bytes). View file

|

|

|

viewer/__pycache__/__init__.cpython-312.pyc

ADDED

|

Binary file (166 Bytes). View file

|

|

|

viewer/__pycache__/__init__.cpython-313.pyc

ADDED

|

Binary file (166 Bytes). View file

|

|

|

viewer/__pycache__/agg_score_metrics.cpython-310.pyc

ADDED

|

Binary file (5.58 kB). View file

|

|

|

viewer/__pycache__/agg_score_metrics.cpython-312.pyc

ADDED

|

Binary file (5.79 kB). View file

|

|

|

viewer/__pycache__/literals.cpython-310.pyc

ADDED

|

Binary file (556 Bytes). View file

|

|

|

viewer/__pycache__/literals.cpython-312.pyc

ADDED

|

Binary file (583 Bytes). View file

|

|

|

viewer/__pycache__/literals.cpython-313.pyc

ADDED

|

Binary file (583 Bytes). View file

|

|

|

viewer/__pycache__/results.cpython-310.pyc

ADDED

|

Binary file (16.4 kB). View file

|

|

|

viewer/__pycache__/results.cpython-312.pyc

ADDED

|

Binary file (25.4 kB). View file

|

|

|

viewer/__pycache__/results.cpython-313.pyc

ADDED

|

Binary file (25.8 kB). View file

|

|

|

viewer/__pycache__/stats.cpython-310.pyc

ADDED

|

Binary file (6.17 kB). View file

|

|

|

viewer/__pycache__/stats.cpython-312.pyc

ADDED

|

Binary file (11 kB). View file

|

|

|

viewer/__pycache__/stats.cpython-313.pyc

ADDED

|

Binary file (11.1 kB). View file

|

|

|

viewer/__pycache__/task_type_mapping.cpython-310.pyc

ADDED

|

Binary file (2.02 kB). View file

|

|

|

viewer/__pycache__/task_type_mapping.cpython-312.pyc

ADDED

|

Binary file (2.47 kB). View file

|

|

|

viewer/__pycache__/utils.cpython-310.pyc

ADDED

|

Binary file (7.1 kB). View file

|

|

|

viewer/__pycache__/utils.cpython-312.pyc

ADDED

|

Binary file (11.2 kB). View file

|

|

|

viewer/agg_score_metrics.py

ADDED

|

@@ -0,0 +1,297 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

|

| 2 |

+

from typing import Literal

|

| 3 |

+

|

| 4 |

+

AGG_SCORE_METRICS_TYPE = Literal["agg_score_metrics", "agg_score_metrics_prob_raw", "agg_score_metrics_acc"]

|

| 5 |

+

|

| 6 |

+

agg_score_metrics_dict_prob = {

|

| 7 |

+

"ar": [

|

| 8 |

+

'custom|alghafa:mcq_exams_test_ar|0/prob_norm_token',

|

| 9 |

+

'custom|alghafa:meta_ar_msa|0/prob_norm',

|

| 10 |

+

'custom|alghafa:multiple_choice_grounded_statement_soqal_task|0/prob_norm_token',

|

| 11 |

+

'custom|arabic_mmlu_native:_average|0/prob_norm_pmi',

|

| 12 |

+

'custom|arc_easy_ar|0/prob_norm_token',

|

| 13 |

+

'custom|hellaswag-ar|0/prob_norm_token',

|

| 14 |

+

'custom|mlqa-ar|0/qa_ar_f1',

|

| 15 |

+

'custom|piqa_ar|0/prob_norm_token',

|

| 16 |

+

'custom|race_ar|0/prob_norm_token',

|

| 17 |

+

'custom|sciq_ar|0/prob_norm_token',

|

| 18 |

+

'custom|tydiqa-ar|0/qa_ar_f1',

|

| 19 |

+

'custom|x-codah-ar|0/prob_norm_token',

|

| 20 |

+

'custom|x-csqa-ar|0/prob_norm_pmi',

|

| 21 |

+

'custom|xnli-2.0-bool-v2-ar|0/prob',

|

| 22 |

+

'custom|arcd|0/qa_ar_f1',

|

| 23 |

+

'custom|xstory_cloze-ar|0/prob_norm_token'

|

| 24 |

+

],

|

| 25 |

+

"fr": [

|

| 26 |

+

'custom|belebele-fr|0/prob_norm_token',

|

| 27 |

+

'custom|fquadv2|0/qa_fr_f1',

|

| 28 |

+

'custom|french-hellaswag|0/prob_norm_token',

|

| 29 |

+

'custom|mintaka-fr|0/qa_fr_f1',

|

| 30 |

+

'custom|meta_mmlu-fr:_average|0/prob_norm_pmi',

|

| 31 |

+

'custom|x-codah-fr|0/prob_norm_token',

|

| 32 |

+

'custom|x-csqa-fr|0/prob_norm_pmi',

|

| 33 |

+

'custom|xnli-2.0-bool-v2-fr|0/prob',

|

| 34 |

+

'custom|arc-fr|0/prob_norm_pmi'

|

| 35 |

+

],

|

| 36 |

+

"hi": [

|

| 37 |

+

'custom|belebele-hi|0/prob_norm_token',

|

| 38 |

+

'custom|hellaswag-hi|0/prob_norm_token',

|

| 39 |

+

'custom|hi-arc:easy|0/arc_norm_token',

|

| 40 |

+

'custom|indicqa.hi|0/qa_hi_f1',

|

| 41 |

+

'custom|meta_mmlu-hi:_average|0/prob_norm_pmi',

|

| 42 |

+

'custom|x-codah-hi|0/prob_norm_token',

|

| 43 |

+

'custom|x-csqa-hi|0/prob_norm_pmi',

|

| 44 |

+

'custom|xcopa-hi|0/prob_norm_token',

|

| 45 |

+

'custom|indicnxnli-hi-bool-v2-hi|0/prob_norm_token',

|

| 46 |

+

'custom|xstory_cloze-hi|0/prob_norm_token'

|

| 47 |

+

],

|

| 48 |

+

"ru": [

|

| 49 |

+

'custom|arc-ru|0/prob_norm_pmi',

|

| 50 |

+

'custom|belebele-ru|0/prob_norm_token',

|

| 51 |

+

'custom|hellaswag-ru|0/prob_norm_token',

|

| 52 |

+

'custom|parus|0/prob_norm_token',

|

| 53 |

+

'custom|rummlu:_average|0/prob_norm_pmi',

|

| 54 |

+

'custom|ruopenbookqa|0/prob_norm_token',

|

| 55 |

+

'custom|tydiqa-ru|0/qa_ru_f1',

|

| 56 |

+

'custom|x-codah-ru|0/prob_norm_token',

|

| 57 |

+

'custom|x-csqa-ru|0/prob_norm_pmi',

|

| 58 |

+

'custom|xnli-2.0-bool-v2-ru|0/prob',

|

| 59 |

+

'custom|sber_squad|0/qa_ru_f1',

|

| 60 |

+

'custom|xstory_cloze-ru|0/prob_norm_token',

|

| 61 |

+

'custom|xquad-ru|0/qa_ru_f1'

|

| 62 |

+

],

|

| 63 |

+

"sw": [

|

| 64 |

+

'custom|belebele-sw|0/prob_norm_token',

|

| 65 |

+

'custom|arc-sw:easy|0/prob_norm_token',

|

| 66 |

+

'custom|kenswquad|0/qa_sw_f1',

|

| 67 |

+

'custom|tydiqa-sw|0/qa_sw_f1',

|

| 68 |

+

'custom|m3exam-sw|0/prob_norm_token',

|

| 69 |

+

'custom|x-csqa-sw|0/prob_norm_pmi',

|

| 70 |

+

'custom|xcopa-sw|0/prob_norm_token',

|

| 71 |

+

'custom|xnli-2.0-bool-v2-sw|0/prob_norm',

|

| 72 |

+

'custom|xstory_cloze-sw|0/prob_norm_token'

|

| 73 |

+

],

|

| 74 |

+

"te": [

|

| 75 |

+

'custom|belebele-te|0/prob_norm_token',

|

| 76 |

+

'custom|custom_hellaswag-te|0/prob_norm_token',

|

| 77 |

+

'custom|indicqa.te|0/qa_te_f1',

|

| 78 |

+

'custom|mmlu-te:_average|0/prob_norm_token',

|

| 79 |

+

'custom|indicnxnli-te-bool-v2-te|0/prob_norm_token',

|

| 80 |

+

'custom|xcopa-te|0/prob_norm_token',

|

| 81 |

+

'custom|xstory_cloze-te|0/prob_norm_token'

|

| 82 |

+

],

|

| 83 |

+

"th": [

|

| 84 |

+

'custom|belebele-th|0/prob_norm_token',

|

| 85 |

+

'custom|m3exam-th|0/prob_norm_token',

|

| 86 |

+

'custom|meta_mmlu-th:_average|0/prob_norm_pmi',

|

| 87 |

+

'custom|xnli-2.0-bool-v2-th|0/prob',

|

| 88 |

+

'custom|custom_hellaswag-th|0/prob_norm_token',

|

| 89 |

+

'custom|thaiqa|0/qa_th_f1',

|

| 90 |

+

'custom|xquad-th|0/qa_th_f1',

|

| 91 |

+

],

|

| 92 |

+

"tr": [

|

| 93 |

+

'custom|arc-v2-tr|0/prob_norm',

|

| 94 |

+

'custom|belebele-tr|0/prob_norm',

|

| 95 |

+

'custom|exams-tr:_average|0/prob_norm',

|

| 96 |

+

'custom|hellaswag-tr|0/prob_norm',

|

| 97 |

+

'custom|mmlu-tr:_average|0/prob_norm_pmi',

|

| 98 |

+

'custom|tqduad2|0/qa_tr_f1',

|

| 99 |

+

'custom|xcopa-tr|0/prob_norm',

|

| 100 |

+

'custom|xnli-2.0-bool-v2-tr|0/prob',

|

| 101 |

+

'custom|xquad-tr|0/qa_tr_f1'

|

| 102 |

+

],

|

| 103 |

+

"zh": [

|

| 104 |

+

'custom|agieval_NEW_LINE:_average|0/prob_norm_pmi',

|

| 105 |

+

'custom|belebele-zh|0/prob_norm_token',

|

| 106 |

+

'custom|c3|0/prob_norm_token',

|

| 107 |

+

'custom|ceval_NEW_LINE:_average|0/prob_norm_token',

|

| 108 |

+

'custom|cmmlu:_average|0/prob_norm_token',

|

| 109 |

+

'custom|cmrc|0/qa_zh_f1',

|

| 110 |

+

'custom|hellaswag-zh|0/prob_norm_token',

|

| 111 |

+

'custom|m3exam-zh|0/prob_norm_token',

|

| 112 |

+

'custom|mlqa-zh|0/qa_zh_f1',

|

| 113 |

+

'custom|x-codah-zh|0/prob_norm_token',

|

| 114 |

+

'custom|x-csqa-zh|0/prob_norm_pmi',

|

| 115 |

+

'custom|xcopa-zh|0/prob_norm_token',

|

| 116 |

+

'custom|ocnli-bool-v2-zh|0/prob',

|

| 117 |

+

'custom|chinese-squad|0/qa_zh_f1',

|

| 118 |

+

'custom|xstory_cloze-zh|0/prob_norm_token',

|

| 119 |

+

'custom|xwinograd-zh|0/prob_norm_token'

|

| 120 |

+

]

|

| 121 |

+

}

|

| 122 |

+

|

| 123 |

+

|

| 124 |

+

def transform_for_prob_raw(metric: str):

|

| 125 |

+

# The pmi can't be used for unormalized probabilities

|

| 126 |

+

# Secondly the acc_norm_token is better in terms of monotonicity for this evaluation metric

|

| 127 |

+

splitted_metric = metric.split("/")

|

| 128 |

+

|

| 129 |

+

# If it's generative metric we don't do anything

|

| 130 |

+

if not "prob" in splitted_metric[-1]:

|

| 131 |

+

return metric

|

| 132 |

+

return "/".join(splitted_metric[:-1] + ["prob_raw_norm_token"])

|

| 133 |

+

|

| 134 |

+

|

| 135 |

+

|

| 136 |

+

|

| 137 |

+

agg_score_metrics_dict_prob_raw = {

|

| 138 |

+

lang: [transform_for_prob_raw(metric) for metric in metrics]

|

| 139 |

+

for lang, metrics in agg_score_metrics_dict_prob.items()

|

| 140 |

+

}

|

| 141 |

+

|

| 142 |

+

agg_score_metrics_dict_acc = {

|

| 143 |

+

lang: [metric.replace("/prob", "/acc") for metric in metrics]

|

| 144 |

+

for lang, metrics in agg_score_metrics_dict_prob.items()

|

| 145 |

+

}

|

| 146 |

+

|

| 147 |

+

agg_score_metrics_dict_both = {

|

| 148 |

+

lang: agg_score_metrics_dict_prob[lang] + agg_score_metrics_dict_acc[lang]

|

| 149 |

+

for lang in agg_score_metrics_dict_prob.keys()

|

| 150 |

+

}

|

| 151 |

+

|

| 152 |

+

|

| 153 |

+

agg_score_metrics = {

|

| 154 |

+

"prob": agg_score_metrics_dict_prob,

|

| 155 |

+

"prob_raw": agg_score_metrics_dict_prob_raw,

|

| 156 |

+

"acc": agg_score_metrics_dict_acc,

|

| 157 |

+

"both": agg_score_metrics_dict_both

|

| 158 |

+

}

|

| 159 |

+

|

| 160 |

+

# Allow to override default groupping behaviour for aggregate tasks

|

| 161 |

+

# The key is the task name and value is the list of tasks to use for the average

|

| 162 |

+

custom_task_aggregate_groups = {

|

| 163 |

+

'custom|agieval_NEW_LINE': [

|

| 164 |

+

'gaokao-biology', 'gaokao-chemistry', 'gaokao-chinese', 'gaokao-geography',

|

| 165 |

+

'gaokao-history', 'gaokao-physics', 'jec-qa-ca',

|

| 166 |

+

'jec-qa-kd', 'logiqa-zh'

|

| 167 |

+

]

|

| 168 |

+

}

|

| 169 |

+

|

| 170 |

+

def is_agg_score_col(col: str, agg_score_type: AGG_SCORE_METRICS_TYPE, lang: str):

|

| 171 |

+

metric_name = col

|

| 172 |

+

if agg_score_type == "agg_score_metrics_prob_raw":

|

| 173 |

+

return metric_name in agg_score_metrics_dict_prob_raw.get(lang, [])

|

| 174 |

+

elif agg_score_type == "agg_score_metrics_acc":

|

| 175 |

+

return metric_name in agg_score_metrics_dict_acc.get(lang, [])

|

| 176 |

+

|

| 177 |

+

return agg_score_metrics_dict_prob.get(lang, [])

|

| 178 |

+

|

| 179 |

+

|

| 180 |

+

# agg_score_metrics = {

|

| 181 |

+

# "ar": [

|

| 182 |

+

# 'custom|alghafa:mcq_exams_test_ar|0/acc_norm_token',

|

| 183 |

+

# 'custom|alghafa:meta_ar_msa|0/acc_norm',

|

| 184 |

+

# 'custom|alghafa:multiple_choice_grounded_statement_soqal_task|0/acc_norm_token',

|

| 185 |

+

# 'custom|arabic_mmlu_native:_average|0/acc_norm_pmi',

|

| 186 |

+

# 'custom|arc_easy_ar|0/acc_norm_token',

|

| 187 |

+

# 'custom|hellaswag-ar|0/acc_norm_token',

|

| 188 |

+

# 'custom|mlqa-ar|0/qa_ar_f1',

|

| 189 |

+

# 'custom|piqa_ar|0/acc_norm_token',

|

| 190 |

+

# 'custom|race_ar|0/acc_norm_token',

|

| 191 |

+

# 'custom|sciq_ar|0/acc_norm_token',

|

| 192 |

+

# 'custom|tydiqa-ar|0/qa_ar_f1',

|

| 193 |

+

# 'custom|x-codah-ar|0/acc_norm_token',

|

| 194 |

+

# 'custom|x-csqa-ar|0/acc_norm_pmi',

|

| 195 |

+

# 'custom|xnli-2.0-bool-v2-ar|0/acc',

|

| 196 |

+

# 'custom|arcd|0/qa_ar_f1',

|

| 197 |

+

# 'custom|xstory_cloze-ar|0/acc_norm_token'

|

| 198 |

+

# ],

|

| 199 |

+

# "fr": [

|

| 200 |

+

# 'custom|belebele-fr|0/acc_norm_token',

|

| 201 |

+

# 'custom|fquadv2|0/qa_fr_f1',

|

| 202 |

+

# 'custom|french-hellaswag|0/acc_norm_token',

|

| 203 |

+

# 'custom|mintaka-fr|0/qa_fr_f1',

|

| 204 |

+

# 'custom|meta_mmlu-fr:_average|0/acc_norm_pmi',

|

| 205 |

+

# 'custom|pawns-v2-fr|0/acc',

|

| 206 |

+

# 'custom|x-codah-fr|0/acc_norm_token',

|

| 207 |

+

# 'custom|x-csqa-fr|0/acc_norm_pmi',

|

| 208 |

+

# 'custom|xnli-2.0-bool-v2-fr|0/acc',

|

| 209 |

+

# 'custom|arc-fr|0/acc_norm_pmi'

|

| 210 |

+

# ],

|

| 211 |

+

# "hi": [

|

| 212 |

+

# 'custom|belebele-hi|0/acc_norm_token',

|

| 213 |

+

# 'custom|hellaswag-hi|0/acc_norm_token',

|

| 214 |

+

# 'custom|hi-arc:easy|0/arc_norm_token',

|

| 215 |

+

# 'custom|indicqa.hi|0/qa_hi_f1',

|

| 216 |

+

# 'custom|meta_mmlu-hi:_average|0/acc_norm_pmi',

|

| 217 |

+

# 'custom|x-codah-hi|0/acc_norm_token',

|

| 218 |

+

# 'custom|x-csqa-hi|0/acc_norm_pmi',

|

| 219 |

+

# 'custom|xcopa-hi|0/acc_norm_token',

|

| 220 |

+

# 'custom|indicnxnli-hi-bool-v2-hi|0/acc_norm_token',

|

| 221 |

+

# 'custom|xstory_cloze-hi|0/acc_norm_token'

|

| 222 |

+

# ],

|

| 223 |

+

# "ru": [

|

| 224 |

+

# 'custom|arc-ru|0/acc_norm_pmi',

|

| 225 |

+

# 'custom|belebele-ru|0/acc_norm_token',

|

| 226 |

+

# 'custom|hellaswag-ru|0/acc_norm_token',

|

| 227 |

+

# 'custom|parus|0/acc_norm_token',

|

| 228 |

+

# 'custom|rummlu:_average|0/acc_norm_pmi',

|

| 229 |

+

# 'custom|ruopenbookqa|0/acc_norm_token',

|

| 230 |

+

# 'custom|tydiqa-ru|0/qa_ru_f1',

|

| 231 |

+

# 'custom|x-codah-ru|0/acc_norm_token',

|

| 232 |

+

# 'custom|x-csqa-ru|0/acc_norm_pmi',

|

| 233 |

+

# 'custom|xnli-2.0-bool-v2-ru|0/acc',

|

| 234 |

+

# 'custom|sber_squad|0/qa_ru_f1',

|

| 235 |

+

# 'custom|xstory_cloze-ru|0/acc_norm_token',

|

| 236 |

+

# 'custom|xquad-ru|0/qa_ru_f1'

|

| 237 |

+

# ],

|

| 238 |

+

# "sw": [

|

| 239 |

+

# 'custom|belebele-sw|0/acc_norm_token',

|

| 240 |

+

# 'custom|arc-sw:easy|0/acc_norm_token',

|

| 241 |

+

# 'custom|kenswquad|0/qa_sw_f1',

|

| 242 |

+

# 'custom|tydiqa-sw|0/qa_sw_f1',

|

| 243 |

+

# 'custom|m3exam-sw|0/acc_norm_token',

|

| 244 |

+

# 'custom|x-csqa-sw|0/acc_norm_pmi',

|

| 245 |

+

# 'custom|xcopa-sw|0/acc_norm_token',

|

| 246 |

+

# 'custom|xnli-2.0-bool-v2-sw|0/acc_norm',

|

| 247 |

+

# 'custom|xstory_cloze-sw|0/acc_norm_token'

|

| 248 |

+

# ],

|

| 249 |

+

# "te": [

|

| 250 |

+

# 'custom|belebele-te|0/acc_norm_token',

|

| 251 |

+

# 'custom|custom_hellaswag-te|0/acc_norm_token',

|

| 252 |

+

# 'custom|indicqa.te|0/qa_te_f1',

|

| 253 |

+

# 'custom|mmlu-te:_average|0/acc_norm_token',

|

| 254 |

+

# 'custom|indicnxnli-te-bool-v2-te|0/acc_norm_token',

|

| 255 |

+

# 'custom|xcopa-te|0/acc_norm_token',

|

| 256 |

+

# 'custom|xstory_cloze-te|0/acc_norm_token'

|

| 257 |

+

# ],

|

| 258 |

+

# "th": [

|

| 259 |

+

# 'custom|belebele-th|0/acc_norm_token',

|

| 260 |

+

# 'custom|m3exam-th|0/acc_norm_token',

|

| 261 |

+

# 'custom|meta_mmlu-th:_average|0/acc_norm_pmi',

|

| 262 |

+

# 'custom|xnli-2.0-bool-v2-th|0/acc',

|

| 263 |

+

# 'custom|custom_hellaswag-th|0/acc_norm_token',

|

| 264 |

+

# 'custom|thaiqa|0/qa_th_f1',

|

| 265 |

+

# 'custom|xquad-th|0/qa_th_f1',

|

| 266 |

+

# 'custom|thai-exams:tgat|0/acc_norm_token'

|

| 267 |

+

# ],

|

| 268 |

+

# "tr": [

|

| 269 |

+

# 'custom|arc-v2-tr|0/acc_norm',

|

| 270 |

+

# 'custom|belebele-tr|0/acc_norm',

|

| 271 |

+

# 'custom|exams-tr:_average|0/acc_norm',

|

| 272 |

+

# 'custom|hellaswag-tr|0/acc_norm',

|

| 273 |

+

# 'custom|mmlu-tr:_average|0/acc_norm_pmi',

|

| 274 |

+

# 'custom|tqduad2|0/qa_tr_f1',

|

| 275 |

+

# 'custom|xcopa-tr|0/acc_norm',

|

| 276 |

+

# 'custom|xnli-2.0-bool-v2-tr|0/acc',

|

| 277 |

+

# 'custom|xquad-tr|0/qa_tr_f1'

|

| 278 |

+

# ],

|

| 279 |

+

# "zh": [

|

| 280 |

+

# 'custom|agieval_NEW_LINE:_average|0/acc_norm_pmi',

|

| 281 |

+

# 'custom|belebele-zh|0/acc_norm_token',

|

| 282 |

+

# 'custom|c3|0/acc_norm_token',

|

| 283 |

+

# 'custom|ceval_NEW_LINE:_average|0/acc_norm_token',

|

| 284 |

+

# 'custom|cmmlu:_average|0/acc_norm_token',

|

| 285 |

+

# 'custom|cmrc|0/qa_zh_f1',

|

| 286 |

+

# 'custom|hellaswag-zh|0/acc_norm_token',

|

| 287 |

+

# 'custom|m3exam-zh|0/acc_norm_token',

|

| 288 |

+

# 'custom|mlqa-zh|0/qa_zh_f1',

|

| 289 |

+

# 'custom|x-codah-zh|0/acc_norm_token',

|

| 290 |

+

# 'custom|x-csqa-zh|0/acc_norm_pmi',

|

| 291 |

+

# 'custom|xcopa-zh|0/acc_norm_token',

|

| 292 |

+

# 'custom|ocnli-bool-v2-zh|0/acc',

|

| 293 |

+

# 'custom|chinese-squad|0/qa_zh_f1',

|

| 294 |

+

# 'custom|xstory_cloze-zh|0/acc_norm_token',

|

| 295 |

+

# 'custom|xwinograd-zh|0/acc_norm_token'

|

| 296 |

+

# ]

|

| 297 |

+

# }

|

viewer/app.py

ADDED

|

@@ -0,0 +1,277 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from collections import defaultdict

|

| 2 |

+

from typing import get_args

|

| 3 |

+

|

| 4 |

+

import gradio as gr

|

| 5 |

+

import numpy as np

|

| 6 |

+

|

| 7 |

+

from literals import TASK_CONSISTENCY_BUTTON_LABEL, CHECK_MISSING_DATAPOINTS_BUTTON_LABEL

|

| 8 |

+

from plot import prepare_plot_data, plot_metric

|

| 9 |

+

from viewer.results import fetch_run_results, fetch_run_list, init_input_normalization_runs, select_runs_by_regex, \

|

| 10 |

+

select_runs_by_language, \

|

| 11 |

+

init_input_component_values, init_std_dev_runs, render_results_table, export_results_csv, \

|

| 12 |

+

check_missing_datapoints

|

| 13 |

+

from viewer.stats import generate_and_export_stats, format_statistics, calculate_statistics, smooth_tasks

|

| 14 |

+

from viewer.utils import PlotOptions, check_task_hash_consistency, BASELINE_GROUPING_MODE

|

| 15 |

+

|

| 16 |

+

with gr.Blocks() as demo:

|

| 17 |

+

list_of_runs = gr.State([])

|

| 18 |

+

plot_data = gr.State([])

|

| 19 |

+

statistics = gr.State(defaultdict(lambda: np.nan))

|

| 20 |

+

login_button = gr.LoginButton(visible=False)

|

| 21 |

+

run_data = gr.State([])

|

| 22 |

+

gr.Markdown("# FineWeb Multilingual experiments results explorer V2")

|

| 23 |

+

results_uri = gr.Textbox(label="TB HF Repo", value="s3://fineweb-multilingual-v1/evals/results/", visible=True)

|

| 24 |

+

with gr.Column():

|

| 25 |

+

with gr.Row():

|

| 26 |

+

# crop_prefix = gr.Textbox(label="Prefix to crop", value="tb/fineweb-exps-1p82G-")

|

| 27 |

+

steps = gr.Textbox(label="Training steps", value="%500",

|

| 28 |

+

info="Use \",\" to separate. Use \"%32000\" for every 32000 steps. Use \"-\" for ranges. You can also combine them: \"1000-5000%1000\", 1000 to 5000 every 1000 steps.",

|

| 29 |

+

interactive=True)

|

| 30 |

+

with gr.Column():

|

| 31 |

+

select_by_language = gr.Dropdown(choices=["ar", "fr", "ru", "hi", "th", "tr", "zh", "sw", "te"],

|

| 32 |

+

interactive=True, label="Select language",

|

| 33 |

+

info="Choose a language preset")

|

| 34 |

+

mcq_type = gr.Radio(choices=["prob_raw", "prob", "acc"], value="prob", label="MCQ agg metric type")

|

| 35 |

+

with gr.Column():

|

| 36 |

+

select_by_regex_text = gr.Textbox(label="Regex to select runs",

|

| 37 |

+

value="1p46G-gemma-fp-.*-{lang}-.*")

|

| 38 |

+

select_by_regex_button = gr.Button("Select matching runs")

|

| 39 |

+

selected_runs = gr.Dropdown(choices=[], interactive=True, multiselect=True, label="Selected runs")

|

| 40 |

+

fetch_res = gr.Button("Fetch results")

|

| 41 |

+

with gr.Column():

|

| 42 |

+

aggregate_score_cols = gr.Dropdown(

|

| 43 |

+

choices=[], interactive=True, multiselect=True,

|

| 44 |

+

value=[],

|

| 45 |

+

label="Aggregate score columns", allow_custom_value=True,

|

| 46 |

+

info="The values from these columns/metrics will be averaged to produce the \"agg_score\""

|

| 47 |

+

)

|

| 48 |

+

metrics_to_show = gr.Checkboxgroup(

|

| 49 |

+

interactive=True,

|

| 50 |

+

value=["agg_score_metrics"],

|

| 51 |

+

choices=["agg_score_metrics"],

|

| 52 |

+

label="Metrics to display",

|

| 53 |

+

info="Results for these metrics will be shown")

|

| 54 |

+

with gr.Row():

|

| 55 |

+

with gr.Column(scale=1):

|

| 56 |

+

task_averaging = gr.Checkboxgroup(

|

| 57 |

+

interactive=True,

|

| 58 |

+

choices=["show averages", "show expanded"],

|

| 59 |

+

value=["show averages"],

|

| 60 |

+

label="Task averaging",

|

| 61 |

+

info="Behaviour for tasks with subsets")

|

| 62 |

+

|

| 63 |

+

std_dev_run = gr.Dropdown(

|

| 64 |

+

interactive=True,

|

| 65 |

+

choices=[],

|

| 66 |

+

label="Run for std_dev",

|

| 67 |

+

info="Select a run to compute std_devs. Must have multiple seeds."

|

| 68 |

+

)

|

| 69 |

+

with gr.Column(scale=2):

|

| 70 |

+

# includes the seed

|

| 71 |

+

with gr.Row():

|

| 72 |

+

with gr.Column(scale=1):

|

| 73 |

+

normalization_runs = gr.Dropdown(

|

| 74 |

+

interactive=True,

|

| 75 |

+

value=[], choices=[],

|

| 76 |

+

multiselect=True,

|

| 77 |

+

label="Normalization runs",

|

| 78 |

+

info="Select runs to use for normalization"

|

| 79 |

+

)

|

| 80 |

+

normalization_mode = gr.Radio(

|

| 81 |

+

choices=["No norm", "Rescale", "Z-norm"],

|

| 82 |

+

value="Z-norm",

|

| 83 |

+

label="Normalization mode"

|

| 84 |

+

)

|

| 85 |

+

clip_scores_checkbox = gr.Checkbox(value=False, label="Clip Scores")

|

| 86 |

+

with gr.Column(scale=1):

|

| 87 |

+

baseline_runs = gr.Dropdown(

|

| 88 |

+

interactive=True,

|

| 89 |

+

value=[], choices=[],

|

| 90 |

+

multiselect=True,

|

| 91 |

+

label="Baseline runs",

|

| 92 |

+

info="Select runs to use as baseline"

|

| 93 |

+

)

|

| 94 |

+

baseline_groupping_mode = gr.Dropdown(choices=list(get_args(BASELINE_GROUPING_MODE)), value="Mean", label="Baseline grouping mode")

|

| 95 |

+

results_df = gr.Dataframe(interactive=False)

|

| 96 |

+

|

| 97 |

+

with gr.Row():

|

| 98 |

+

with gr.Column():

|

| 99 |

+

export_button = gr.Button("Export Results")

|

| 100 |

+

csv = gr.File(interactive=False, visible=False)

|

| 101 |

+

with gr.Column():

|

| 102 |

+

export_stats_button = gr.Button("Export Stats")

|

| 103 |

+

stats_csv = gr.File(interactive=False, visible=False)

|

| 104 |

+

|

| 105 |

+

check_missing_checkpoints = gr.Button(CHECK_MISSING_DATAPOINTS_BUTTON_LABEL)

|

| 106 |

+

check_task_consistency_button = gr.Button(TASK_CONSISTENCY_BUTTON_LABEL, visible=True)

|

| 107 |

+

|

| 108 |

+

task_consistency_output = gr.Json(label="Task hash consistency", visible=False)

|

| 109 |

+

missing_list = gr.Json(label="Missing datapoints", visible=False)

|

| 110 |

+

with gr.Row():

|

| 111 |

+

column_to_plot = gr.Dropdown(

|

| 112 |

+

choices=[], interactive=True,

|

| 113 |

+

value='agg_score_macro',

|

| 114 |

+

label="Task and metric", allow_custom_value=True)

|

| 115 |

+

score_step = gr.Number(

|

| 116 |

+

value=14000,

|

| 117 |

+

label="Step to use for computing benchmark score",

|

| 118 |

+

)

|

| 119 |

+

baseline_window = gr.Number(

|

| 120 |

+

value=5,

|

| 121 |

+

label="Window size for computing variability and randomness",

|

| 122 |

+

)

|

| 123 |

+

with gr.Row():

|

| 124 |

+

with gr.Column():

|

| 125 |

+

gr.Markdown("### Monotonicity - Spearman Rank Correlation (steps vs score)")

|

| 126 |

+

monotonicity_md = gr.Markdown()

|

| 127 |

+

with gr.Column():

|

| 128 |

+

gr.Markdown("### Variability (Windowed) - std_dev (all steps of std_dev_run) and SNR (last step)")

|

| 129 |

+

variability_md = gr.Markdown()

|

| 130 |

+

with gr.Column():

|

| 131 |

+

gr.Markdown("### Randomness (Windowed) - distance to RB (in std_dev)")

|

| 132 |

+

randomness_md = gr.Markdown()

|

| 133 |

+

with gr.Column():

|

| 134 |

+

gr.Markdown("### Ordering - Kendall Tau (steps vs score)")

|

| 135 |

+

ordering_md = gr.Markdown()

|

| 136 |

+

with gr.Row():

|

| 137 |

+

merge_seeds = gr.Dropdown(

|

| 138 |

+

choices=["none", "min", "max", "mean"],

|

| 139 |

+

value='mean',

|

| 140 |

+

label="Seed merging")

|

| 141 |

+

smoothing_steps = gr.Number(

|

| 142 |

+

value=3,

|

| 143 |

+

label="Smooth every N datapoints (sliding window)",

|

| 144 |

+

)

|

| 145 |

+

stds_to_plot = gr.Number(

|

| 146 |

+

value=0,

|

| 147 |

+

label="plot N stds as error bars",

|

| 148 |

+

)

|

| 149 |

+

with gr.Column():

|

| 150 |

+

interpolate_checkbox = gr.Checkbox(value=False, label="Interpolate missing steps")

|

| 151 |

+

percent_checkbox = gr.Checkbox(value=False, label="%")

|

| 152 |

+

barplot_checkbox = gr.Checkbox(value=False, label="Bar plot")

|

| 153 |

+

plot = gr.Plot()

|

| 154 |

+

|

| 155 |

+

# run selection

|

| 156 |

+

gr.on(

|

| 157 |

+

triggers=[results_uri.change],

|

| 158 |

+

fn=fetch_run_list, inputs=[results_uri], outputs=[list_of_runs, selected_runs]

|

| 159 |

+

)

|

| 160 |

+

gr.on(

|

| 161 |

+

triggers=[select_by_regex_button.click],

|

| 162 |

+

fn=select_runs_by_regex,

|

| 163 |

+

inputs=[list_of_runs, selected_runs, select_by_regex_text, select_by_language], outputs=[selected_runs]

|

| 164 |

+

)

|

| 165 |

+

gr.on(

|

| 166 |

+

triggers=[select_by_language.change, mcq_type.change],

|

| 167 |

+

fn=select_runs_by_language,

|

| 168 |

+

inputs=[list_of_runs, selected_runs, select_by_language, aggregate_score_cols, mcq_type], outputs=[selected_runs, aggregate_score_cols]

|

| 169 |

+

)

|

| 170 |

+

demo.load(fn=fetch_run_list, inputs=[results_uri], outputs=[list_of_runs, selected_runs])

|

| 171 |

+

|

| 172 |

+

gr.on(

|

| 173 |

+

triggers=[selected_runs.change],

|

| 174 |

+

fn=init_std_dev_runs,

|

| 175 |

+

inputs=[selected_runs, std_dev_run],

|

| 176 |

+

outputs=[std_dev_run]

|

| 177 |

+

)

|

| 178 |

+

# fetch result

|

| 179 |

+

gr.on(

|

| 180 |

+

triggers=[fetch_res.click],

|

| 181 |

+

fn=fetch_run_results,

|

| 182 |

+

inputs=[results_uri, selected_runs, steps],

|

| 183 |

+

# We set the plot as output, as state has stae has no loading indicator

|

| 184 |

+

outputs=[run_data, plot]

|

| 185 |

+

).then(

|

| 186 |

+

fn=init_input_component_values, inputs=[run_data, normalization_mode, select_by_language],

|

| 187 |

+

outputs=[metrics_to_show, normalization_runs, baseline_runs]

|

| 188 |

+

).then(

|

| 189 |

+

fn=render_results_table,

|

| 190 |

+

inputs=[run_data, metrics_to_show, task_averaging, normalization_runs, baseline_runs, baseline_groupping_mode, clip_scores_checkbox,

|

| 191 |

+

normalization_mode, aggregate_score_cols, select_by_language, baseline_window, mcq_type],

|

| 192 |

+

outputs=[results_df, aggregate_score_cols, column_to_plot]

|

| 193 |

+

)

|

| 194 |

+

# change results table

|

| 195 |

+

gr.on(

|

| 196 |

+

triggers=[

|

| 197 |

+

metrics_to_show.input,

|

| 198 |

+

task_averaging.input,

|

| 199 |

+

normalization_runs.input,

|

| 200 |

+

baseline_runs.input,

|

| 201 |

+

clip_scores_checkbox.input,

|

| 202 |

+

baseline_groupping_mode.input,

|

| 203 |

+

aggregate_score_cols.input

|

| 204 |

+

],

|

| 205 |

+

fn=render_results_table,

|

| 206 |

+

inputs=[run_data, metrics_to_show, task_averaging, normalization_runs, baseline_runs, baseline_groupping_mode, clip_scores_checkbox,

|

| 207 |

+

normalization_mode, aggregate_score_cols, select_by_language, baseline_window, mcq_type],

|

| 208 |

+

outputs=[results_df, aggregate_score_cols, column_to_plot]

|

| 209 |

+

)

|

| 210 |

+

|

| 211 |

+

# On normalization mode we first have to preinit the compoentntns

|

| 212 |

+

gr.on(

|

| 213 |

+

triggers=[normalization_mode.input],

|

| 214 |

+

fn=init_input_normalization_runs,

|

| 215 |

+

inputs=[run_data, normalization_mode],

|

| 216 |

+

outputs=[normalization_runs]

|

| 217 |

+

).then(

|

| 218 |

+

fn=render_results_table,

|

| 219 |

+

inputs=[run_data, metrics_to_show, task_averaging, normalization_runs, baseline_runs, baseline_groupping_mode, clip_scores_checkbox,

|

| 220 |

+

normalization_mode, aggregate_score_cols, select_by_language, baseline_window, mcq_type],

|

| 221 |

+

outputs=[results_df, aggregate_score_cols, column_to_plot]

|

| 222 |

+

)

|

| 223 |

+

# table actions

|

| 224 |

+

gr.on(

|

| 225 |

+

triggers=[export_button.click],

|

| 226 |

+

fn=export_results_csv, inputs=[results_df], outputs=[csv]

|

| 227 |

+

)

|

| 228 |

+

gr.on(

|

| 229 |

+

triggers=[check_missing_checkpoints.click],

|

| 230 |

+

fn=check_missing_datapoints, inputs=[selected_runs, steps, run_data, check_missing_checkpoints],

|

| 231 |

+

outputs=[missing_list, check_missing_checkpoints]

|

| 232 |

+

)

|

| 233 |

+

|

| 234 |

+

gr.on(

|

| 235 |

+

triggers=[check_task_consistency_button.click],

|

| 236 |

+

fn=check_task_hash_consistency, inputs=[run_data, check_task_consistency_button],

|

| 237 |

+

outputs=[task_consistency_output, check_task_consistency_button]

|

| 238 |

+

)

|

| 239 |

+

# plot

|

| 240 |

+

gr.on(

|

| 241 |

+

triggers=[results_df.change, column_to_plot.input, merge_seeds.input, smoothing_steps.input, stds_to_plot.input,

|

| 242 |

+

interpolate_checkbox.input, percent_checkbox.input, baseline_window.input, barplot_checkbox.input],

|

| 243 |

+

fn=lambda df, col, merge_seeds, smoothing_steps, interpolate_checkbox, percent_checkbox:

|

| 244 |

+

prepare_plot_data(df,

|

| 245 |

+

col,

|

| 246 |

+

merge_seeds,

|

| 247 |

+

PlotOptions(

|

| 248 |

+

smoothing=smoothing_steps,

|

| 249 |

+

interpolate=interpolate_checkbox,

|

| 250 |

+

pct=percent_checkbox,

|

| 251 |

+

merge_seeds=merge_seeds)),

|

| 252 |

+

inputs=[results_df, column_to_plot, merge_seeds, smoothing_steps, interpolate_checkbox, percent_checkbox],

|

| 253 |

+

outputs=[plot_data]

|

| 254 |

+

).then(

|

| 255 |

+

fn=lambda df ,std_dev_run_name, column_name, score_s, variance_window, smoothing_steps:

|

| 256 |

+

calculate_statistics(smooth_tasks(df, smoothing_steps), std_dev_run_name, column_name, score_s, variance_window),

|

| 257 |

+

inputs=[results_df, std_dev_run, column_to_plot, score_step, baseline_window, smoothing_steps],

|

| 258 |

+

outputs=[statistics]

|

| 259 |

+

).then(

|

| 260 |

+

fn=plot_metric,

|

| 261 |

+

inputs=[plot_data, column_to_plot, merge_seeds, percent_checkbox, statistics, stds_to_plot, select_by_language, barplot_checkbox],

|

| 262 |

+

outputs=[plot]

|

| 263 |

+

).then(

|

| 264 |

+

fn=format_statistics,

|

| 265 |

+

inputs=[statistics],

|

| 266 |

+

outputs=[monotonicity_md, variability_md, randomness_md, ordering_md]

|

| 267 |

+

)

|

| 268 |

+

|

| 269 |

+

gr.on(

|

| 270 |

+

triggers=[export_stats_button.click],

|

| 271 |

+

fn=generate_and_export_stats,

|

| 272 |

+

inputs=[run_data, std_dev_run, baseline_runs, baseline_groupping_mode,

|

| 273 |

+

score_step, baseline_window],

|

| 274 |

+

outputs=[stats_csv]

|

| 275 |

+

)

|

| 276 |

+

|

| 277 |

+

demo.launch()

|

viewer/literals.py

ADDED

|

@@ -0,0 +1,9 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

TASK_CONSISTENCY_BUTTON_LABEL = "Check task hash consistency"

|

| 2 |

+

TASK_CONSISTENCY_BUTTON_CLOSE_LABEL = "Close results"

|

| 3 |

+

CHECK_MISSING_DATAPOINTS_BUTTON_LABEL = "Check missing datapoints"

|

| 4 |

+

CHECK_MISSING_DATAPOINTS_BUTTON_CLOSE_LABEL = "Close results"

|

| 5 |

+

FALLBACK_TOKEN_NAME = "HF_TOKEN"

|

| 6 |

+

BASLINE_RUN_NAME = "baseline-6-"

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

REFERENCE_RUNS = ["cc-100", "commoncrawl", "culturax", "hplt", "mc4"]

|

viewer/plot.py

ADDED

|

@@ -0,0 +1,131 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import numpy as np

|

| 2 |

+

import pandas as pd

|

| 3 |

+

from plotly import graph_objects as go

|

| 4 |

+

import plotly.express as px

|

| 5 |

+

from viewer.utils import PlotOptions

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

def parse_merge_runs_to_plot(df, metric_name, merge_method):

|

| 9 |

+

if merge_method == "none":

|

| 10 |

+

return [

|

| 11 |

+

(group["steps"], group[metric_name], f'{runname}-s{seed}')

|

| 12 |

+

for (runname, seed), group in df.groupby(["runname", "seed"])

|

| 13 |

+

]

|

| 14 |

+

if metric_name not in df.columns:

|

| 15 |

+

return []

|

| 16 |

+

grouped = df.groupby(['runname', 'steps']).agg({metric_name: merge_method}).reset_index()

|

| 17 |

+

return [

|

| 18 |

+

(group["steps"], group[metric_name], runname)

|

| 19 |

+

for (runname,), group in grouped.groupby(["runname"])

|

| 20 |

+

]

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

def prepare_plot_data(df: pd.DataFrame, metric_name: str, seed_merge_method: str,

|

| 24 |

+

plot_options: PlotOptions) -> pd.DataFrame:

|

| 25 |

+

if df is None or "steps" not in df or metric_name not in df.columns:

|

| 26 |

+

return pd.DataFrame()

|

| 27 |

+

|

| 28 |

+

df = df.copy().sort_values(by=["steps"])

|

| 29 |

+

plot_data = parse_merge_runs_to_plot(df, metric_name, seed_merge_method)

|

| 30 |

+

|

| 31 |

+

# Create DataFrame with all possible steps as index

|

| 32 |

+

all_steps = sorted(set(step for xs, _, _ in plot_data for step in xs))

|

| 33 |

+

result_df = pd.DataFrame(index=all_steps)

|

| 34 |

+

|

| 35 |

+

# Populate the DataFrame respecting xs for each series

|

| 36 |

+

for xs, ys, runname in plot_data:

|

| 37 |

+

result_df[runname] = pd.Series(index=xs.values, data=ys.values)

|

| 38 |

+

|

| 39 |

+

# Interpolate or keep NaN based on the interpolate flag

|

| 40 |

+

if plot_options.interpolate:

|

| 41 |

+

# this is done per run, as each run is in a diff column

|

| 42 |

+

result_df = result_df.interpolate(method='linear')

|

| 43 |

+

# Apply smoothing if needed

|

| 44 |

+

if plot_options.smoothing > 0:

|

| 45 |

+

result_df = result_df.rolling(window=plot_options.smoothing, min_periods=1).mean()

|

| 46 |

+

if plot_options.pct:

|

| 47 |

+

result_df = result_df * 100

|

| 48 |

+

|

| 49 |

+

return result_df

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

def plot_metric(plot_df: pd.DataFrame, metric_name: str, seed_merge_method: str, pct: bool, statistics: dict,

|

| 53 |

+

nb_stds: int, language: str = None, barplot: bool = False) -> go.Figure:

|

| 54 |

+

if barplot:

|

| 55 |

+

return plot_metric_barplot(plot_df, metric_name, seed_merge_method, pct, statistics, nb_stds, language)

|

| 56 |

+

return plot_metric_scatter(plot_df, metric_name, seed_merge_method, pct, statistics, nb_stds, language)

|

| 57 |

+

|

| 58 |

+

def plot_metric_scatter(plot_df: pd.DataFrame, metric_name: str, seed_merge_method: str, pct: bool, statistics: dict,

|

| 59 |

+

nb_stds: int, language: str = None) -> go.Figure:

|

| 60 |

+

fig = go.Figure()

|

| 61 |

+

if not isinstance(plot_df, pd.DataFrame) or plot_df.empty:

|

| 62 |

+

return fig

|

| 63 |

+

show_error_bars = nb_stds > 0 and not np.isnan(statistics["mean_std"])

|

| 64 |

+

error_value = statistics["mean_std"] * nb_stds * (100 if pct else 1) if show_error_bars else 0.0

|

| 65 |

+

|

| 66 |

+

last_y_values = {runname: plot_df[runname].iloc[-1] for runname in plot_df.columns}

|

| 67 |

+

sorted_runnames = sorted(last_y_values, key=last_y_values.get, reverse=True)

|

| 68 |

+

for runname in sorted_runnames:

|

| 69 |

+

fig.add_trace(

|

| 70 |

+

go.Scatter(x=plot_df.index, y=plot_df[runname], mode='lines+markers', name=runname,

|

| 71 |

+

hovertemplate=f'%{{y:.2f}} ({runname})<extra></extra>',

|

| 72 |

+

error_y=dict(

|

| 73 |

+

type='constant', # Use a constant error value

|

| 74 |

+

value=error_value, # Single error value

|

| 75 |

+

visible=show_error_bars # Show error bars

|

| 76 |

+

))

|

| 77 |

+

)

|

| 78 |

+

|

| 79 |

+

lang_string = f" ({language})" if language else ""

|

| 80 |

+

|

| 81 |

+

fig.update_layout(

|

| 82 |

+

title=f"Run comparisons{lang_string}: {metric_name}" +

|

| 83 |

+

(f" ({seed_merge_method} over seeds)" if seed_merge_method != "none" else "") + (f" [%]" if pct else ""),

|

| 84 |

+

xaxis_title="Training steps",

|

| 85 |

+

yaxis_title=metric_name,

|

| 86 |

+

hovermode="x unified"

|

| 87 |

+

)

|

| 88 |

+

return fig

|

| 89 |

+

|

| 90 |

+

|

| 91 |

+

def plot_metric_barplot(plot_df: pd.DataFrame, metric_name: str, seed_merge_method: str, pct: bool, statistics: dict,

|

| 92 |

+

nb_stds: int, language: str = None) -> go.Figure:

|

| 93 |

+

fig = go.Figure()

|

| 94 |

+

if not isinstance(plot_df, pd.DataFrame) or plot_df.empty:

|

| 95 |

+

return fig

|

| 96 |

+

|

| 97 |

+

show_error_bars = nb_stds > 0 and not np.isnan(statistics["mean_std"])

|

| 98 |

+

error_value = statistics["mean_std"] * nb_stds * (100 if pct else 1) if show_error_bars else 0.0

|

| 99 |

+

|

| 100 |

+

last_values = {runname: plot_df[runname].iloc[-1] for runname in plot_df.columns}

|

| 101 |

+

sorted_runnames = sorted(last_values, key=last_values.get, reverse=True)

|

| 102 |

+

|

| 103 |

+

# Create color map for consistent colors

|

| 104 |

+

colors = px.colors.qualitative.Set1

|

| 105 |

+

color_map = {run: colors[i % len(colors)] for i, run in enumerate(plot_df.columns)}

|

| 106 |

+

|

| 107 |

+

fig.add_trace(

|

| 108 |

+

go.Bar(

|

| 109 |

+

x=sorted_runnames,

|

| 110 |

+

y=[last_values[run] for run in sorted_runnames],

|

| 111 |

+

marker_color=[color_map[run] for run in sorted_runnames],

|

| 112 |

+

error_y=dict(

|

| 113 |

+

type='constant',

|

| 114 |

+

value=error_value,

|

| 115 |

+

visible=show_error_bars

|

| 116 |

+

),

|

| 117 |

+

hovertemplate='%{y:.2f}<extra></extra>'

|

| 118 |

+

)

|

| 119 |

+

)

|

| 120 |

+

|

| 121 |

+

lang_string = f" ({language})" if language else ""

|

| 122 |

+

|

| 123 |

+

fig.update_layout(

|

| 124 |

+

title=f"Run comparisons{lang_string}: {metric_name}" +

|

| 125 |

+

(f" ({seed_merge_method} over seeds)" if seed_merge_method != "none" else "") + (

|

| 126 |

+

f" [%]" if pct else ""),

|

| 127 |

+

xaxis_title="Runs",

|

| 128 |

+

yaxis_title=metric_name,

|

| 129 |

+

hovermode="x"

|

| 130 |

+

)

|

| 131 |

+

return fig

|

viewer/results.py

ADDED

|

@@ -0,0 +1,421 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|