File size: 582 Bytes

269c40f 552e421 06a8306 269c40f f5526d3 ff6510b |

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

---

library_name: transformers

license: llama2

tags:

- code

---

Converted version of [CodeLlama-34b](https://huggingface.co/meta-llama/CodeLlama-34b-hf) to 4-bit using bitsandbytes. For more information about the model, refer to the model's page.

## Impact on performance

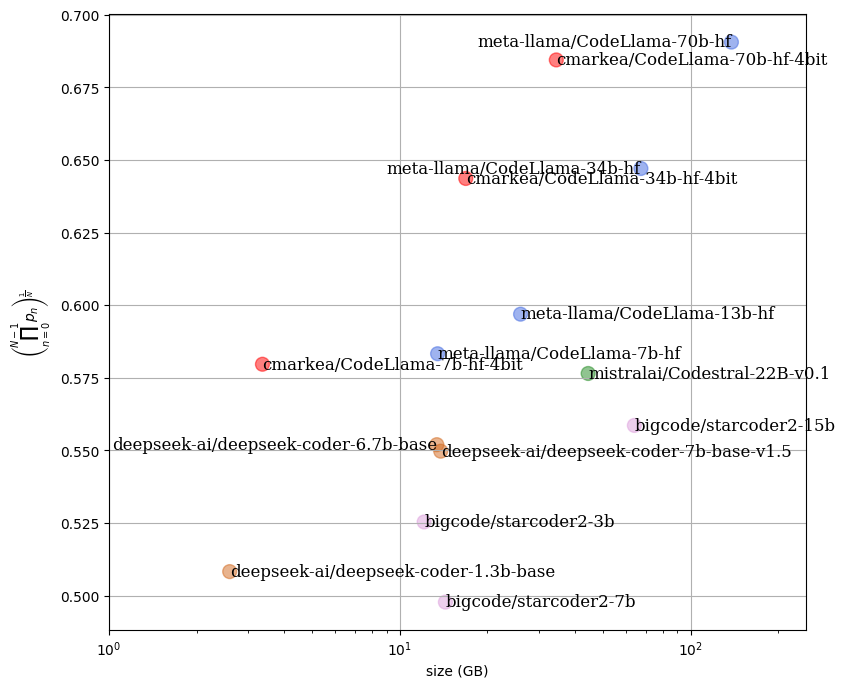

In the following figure, we can see the impact on the performance of a set of models relative to the required RAM space. It is noticeable that the quantized models have equivalent performance while providing a significant gain in RAM usage.

|