Create README.md

Browse files

README.md

ADDED

|

@@ -0,0 +1,134 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

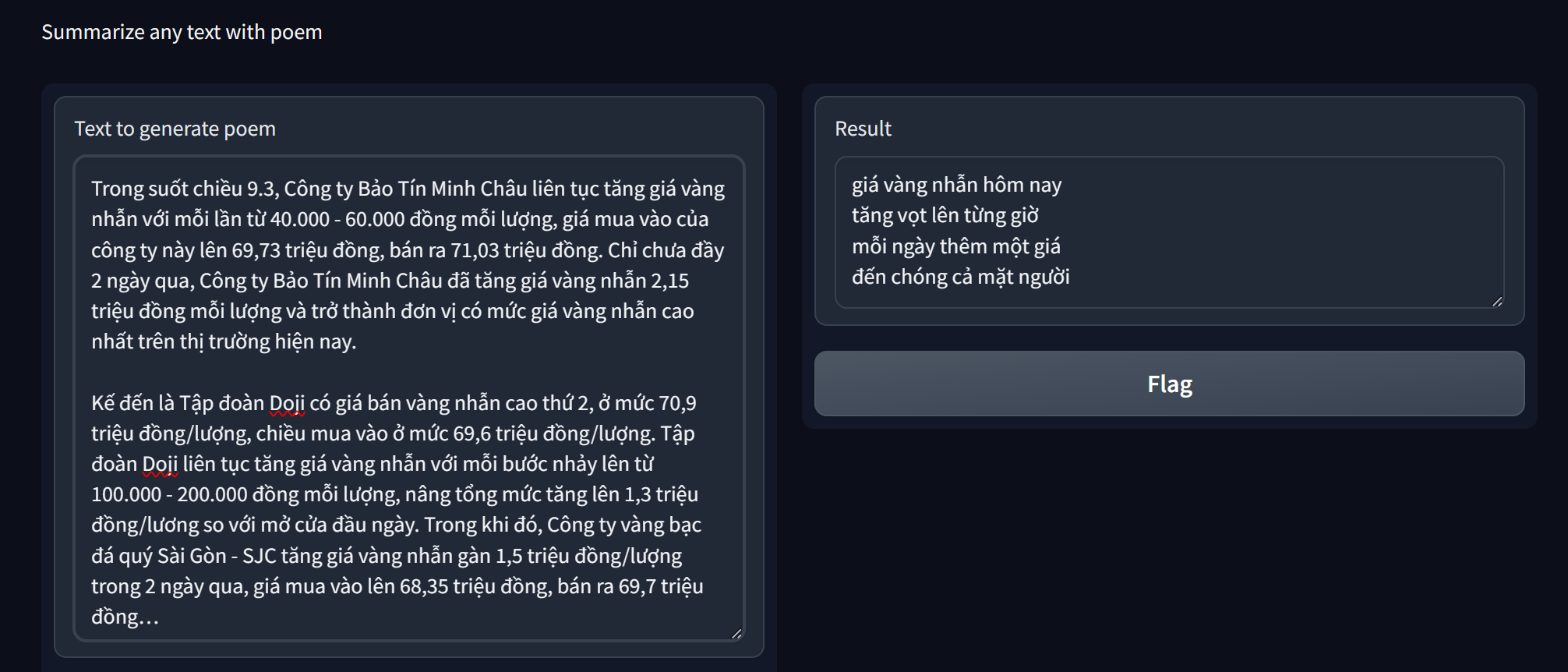

# Vietnamese Text Summarization with Poem

|

| 2 |

+

Summarize a piece of text with poem. Doesn't it sound fun? </br>

|

| 3 |

+

|

| 4 |

+

## Introduction

|

| 5 |

+

|

| 6 |

+

Jokes aside, this is a fun project by my team at FPT University about fine-tuning a Large Language Model (LLM) at summarizing a piece of long Vietnamese text in the form of **poems**. We call the model **VistralPoem5**. </br>

|

| 7 |

+

Here's a little example:

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

## HuggingFace 🤗

|

| 11 |

+

``` python

|

| 12 |

+

from transformers import AutoTokenizer, AutoModelForCausalLM

|

| 13 |

+

|

| 14 |

+

model_name = "pphuc25/VistralPoem5"

|

| 15 |

+

tokenizer = AutoTokenizer.from_pretrained(model_name, device_map="auto")

|

| 16 |

+

model = AutoModelForCausalLM.from_pretrained(model_name, device_map="auto")

|

| 17 |

+

|

| 18 |

+

inputs = [

|

| 19 |

+

{"role": "system", "content": "Bạn là một nhà thơ chuyên nghiệp, nhiệm vụ của bạn là chuyển bài văn này thành 1 bài thơ 5 chữ từ khoảng 1 đến 3 khổ"},

|

| 20 |

+

{"role": "user", "content": "nhớ tới lời mẹ dặn\nsợ mẹ buồn con đau\nnên tự mình đứng dậy\nnhanh như có phép màu"}

|

| 21 |

+

]

|

| 22 |

+

|

| 23 |

+

input_ids = tokenizer.apply_chat_template(inputs, return_tensors="pt").to(model.device)

|

| 24 |

+

outputs = model.generate(

|

| 25 |

+

input_ids=input_ids,

|

| 26 |

+

max_new_tokens=200,

|

| 27 |

+

do_sample=True,

|

| 28 |

+

top_p=0.95,

|

| 29 |

+

top_k=20,

|

| 30 |

+

temperature=0.1,

|

| 31 |

+

repetition_penalty=1.05,

|

| 32 |

+

)

|

| 33 |

+

|

| 34 |

+

output_str = tokenizer.batch_decode(outputs[:, input_ids.size(1): ], skip_special_tokens=True)[0].strip()

|

| 35 |

+

print(output_str)

|

| 36 |

+

```

|

| 37 |

+

|

| 38 |

+

## Fine-tuning

|

| 39 |

+

|

| 40 |

+

[](https://colab.research.google.com/github/andythetechnerd03/Vietnamese-Text-Summarization-Poem/blob/main/notebooks/fine_tune_with_axolotl.ipynb)

|

| 41 |

+

|

| 42 |

+

This is not an easy task. The model we are using is a Vietnamese version of the popular [Mistral-7B](https://arxiv.org/abs/2310.06825) with 7 billion parameters. Obviously, it is very computationally expensive to fine-tune, therefore we applied various state-of-the-art optimization techniques:

|

| 43 |

+

- [Flash Attention](https://github.com/Dao-AILab/flash-attention): helps reduce computation complexity of Attention from $O(n^2)$ to $O(n\log n)$

|

| 44 |

+

- [QLoRA (Quantized Low-Rank Adaptation)](https://arxiv.org/abs/2305.14314): train a smaller "adapter" which is a low-rank weight matrices, allowing for less computation. Furthermore, the base model is quantized to only `4-bit`, this is great for storing large models.

|

| 45 |

+

- [Mixed Precision Training](https://arxiv.org/abs/1710.03740): here we combine `float32` with `bfloat16` data type for faster training.

|

| 46 |

+

|

| 47 |

+

To train the LLM seamlessly as possible, we used a popular open-source fine-tuning platform called [Axolotl](https://github.com/OpenAccess-AI-Collective/axolotl). This platform helps you declare the parameters and config and train quickly without much code.

|

| 48 |

+

|

| 49 |

+

### Code for fine-tuning model

|

| 50 |

+

To customize the configuration, you can modify the `create_file_config.py` file. After making your changes, run the script to generate a personalized configuration file. The following is an example of how to execute the model training:

|

| 51 |

+

``` python

|

| 52 |

+

cd src

|

| 53 |

+

export PYTHONPATH="$PWD"

|

| 54 |

+

accelerate launch -m axolotl.cli.train config.yaml

|

| 55 |

+

```

|

| 56 |

+

|

| 57 |

+

## Data

|

| 58 |

+

This is not easy. Such data that takes the input as a long text (newspaper article, story) and output a poem is very hard to find. So we created our own... by using *prompt engineering*.

|

| 59 |

+

|

| 60 |

+

- The collection of poems is straightforward. There are many repositories and prior works that collected a handful of Vietnamese poems, as well as publicly available samples online. We collected from [FPT Software AI Lab](https://github.com/fsoft-ailab/Poem-Generator) and [HuggingFace](https://github.com/fsoft-ailab/Poem-Generator).

|

| 61 |

+

- From the poems we use prompt engineering to ask our base model to generate a story from such poem. The prompt is in the form </br>

|

| 62 |

+

``` Bạn là một nhà kể chuyện phiếm, nhiệm vụ của bạn là hãy kể 1 câu chuyện đơn giản và ngắn gọn từ một bài thơ, câu chuyện nên là 1 bài liền mạch, thực tế\n\n{insert poem here}```

|

| 63 |

+

- Speaking of prompt engineering, there is another prompt to generate poem from context. </br>

|

| 64 |

+

```Bạn là một nhà thơ chuyên nghiệp, nhiệm vụ của bạn là chuyển bài văn này thành 1 bài thơ 5 chữ từ khoảng 1 đến 3 khổ: \n {insert context here}```

|

| 65 |

+

- The pre-processing step is faily simple. A bit of lowercase here, punctuation removal there, plus reducing poems to 1-3 random paragraphs, and we are done.

|

| 66 |

+

|

| 67 |

+

After all, we have about 72,101 samples with a ratio of 0.05 (68495 on the train set and 3606 on the test set)

|

| 68 |

+

|

| 69 |

+

We published the dataset at [here](https://huggingface.co/datasets/pphuc25/poem-5-words-vietnamese)

|

| 70 |

+

|

| 71 |

+

### Custom Evaluation Data

|

| 72 |

+

As part of the final evaluation for benchmark, we gathered around 27 Vietnamese children's stories and divided into many samples, accumulating to 118 samples. The dataset can be found [here](/data/eval_set.json)

|

| 73 |

+

|

| 74 |

+

## Model

|

| 75 |

+

As mentioned earlier, we use [Vistral-7B-Chat](https://huggingface.co/Viet-Mistral/Vistral-7B-Chat) as the base model and we fine-tune it on our curated dataset earlier. Here's a few configurations:

|

| 76 |

+

- The model is based on Transformer’s decoder-only architechture:

|

| 77 |

+

- Number of Attention Heads: 32

|

| 78 |

+

- Hidden Size: 4096

|

| 79 |

+

- Vocab size: 38369

|

| 80 |

+

- Data type: bfloat16

|

| 81 |

+

- Number of Hidden Layers (Nx): 32

|

| 82 |

+

- Loss function: Cross-entropy

|

| 83 |

+

- Parameter-Efficient Finetuning: QLora

|

| 84 |

+

- 4 bit

|

| 85 |

+

- Alpha: 16

|

| 86 |

+

- Rank: 32

|

| 87 |

+

- Target: Linear

|

| 88 |

+

- Gradient accumulation: 4

|

| 89 |

+

- Learning Rate: 0.0002

|

| 90 |

+

- Warmup Steps: 10

|

| 91 |

+

- LR Scheduler: Cosine

|

| 92 |

+

- Max Steps: 400

|

| 93 |

+

- Batch size: 16

|

| 94 |

+

- Optimizer: Adamw bnb 8bit

|

| 95 |

+

- Sequence Len: 1096

|

| 96 |

+

|

| 97 |

+

The weights can be found [here](https://huggingface.co/pphuc25/poem-vistral)

|

| 98 |

+

|

| 99 |

+

The notebook for training can be found at `notebook/Fine_tune_LLMs_with_Axolotl.ipynb`

|

| 100 |

+

|

| 101 |

+

## Benchmark

|

| 102 |

+

We used the custom evaluation dataset to perform benchmark. Since popular metrics such as ROUGE is not applicable to poem format, we chose a simpler approach - counting the probability of 5-word poems in the result. </br>

|

| 103 |

+

Here's the result:

|

| 104 |

+

| Model | Number of Parameters | Hardware | Probability of 5-word(Higher is better) | Average inference time(Lower is better) |

|

| 105 |

+

|----------------------------|----------------------|----------------------|-----------------------------------------|-----------------------------------------|

|

| 106 |

+

| Vistral-7B-Chat (baseline) | 7B | 1x Nvidia Tesla A100 | 4.15% | 6.75s |

|

| 107 |

+

| Google Gemini Pro* | > 100B | **Multi-TPU** | 18.3% | 3.4s |

|

| 108 |

+

| **VistralPoem5 (Ours)** | **7B** | 1x Nvidia Tesla A100 | **61.4%** | **3.14s** |

|

| 109 |

+

|

| 110 |

+

* API call, meaning inference time may be affected

|

| 111 |

+

|

| 112 |

+

The benchmark code can be found at `notebook/infer_poem_model.ipynb` and `notebook/probability_5word.ipynb`

|

| 113 |

+

|

| 114 |

+

|

| 115 |

+

## Deployment

|

| 116 |

+

We used Gradio for fast deployment on Google Colab. It should be in `notebook/infer_poem_model.ipynb` as well.

|

| 117 |

+

|

| 118 |

+

|

| 119 |

+

Docker Image, coming soon...

|

| 120 |

+

## Future Work

|

| 121 |

+

- [ ] Make a custom loss function to align rhythm and tones.

|

| 122 |

+

- [ ] Use a better metric for evaluating poems (rhythm and content summarization)

|

| 123 |

+

- [ ] Use RLHF to align poems with human values.

|

| 124 |

+

- [ ] And more...

|

| 125 |

+

|

| 126 |

+

## Credits

|

| 127 |

+

- [Phan Phuc](https://github.com/pphuc25) for doing the fine-tuning.

|

| 128 |

+

- [Me](https://github.com/andythetechnerd03) for designing the pipeline and testing the model.

|

| 129 |

+

- [Truong Vo](https://github.com/justinvo277) for collecting the data.

|

| 130 |

+

|

| 131 |

+

|

| 132 |

+

|

| 133 |

+

|

| 134 |

+

|